Latest build - here is the lstopo output for optimizing your lane usage…

You’re a God @wendell

Everyone’s lstopo will be different depending on peripherals, bifurcation settings, and Thunderbolt card placement.

Cool… so now it’s time to learn… how would you optimize from here?

lspci -nn should give you a table of PCIe IDs and peripherals, so you can match them up.

NVMe and Ethernet devices are obvious, but everything else takes some matching up.
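If you'd rather not eyeball the matching, here is a rough Python sketch that groups `lspci -nn` output by bus number so it lines up with the `PCI bb:dd.f` labels in lstopo. It assumes Linux with `lspci` on the PATH; the regex and the helper names (`parse_lspci_nn`, `by_bus`) are made up for this example, not part of any tool.

```python
import re
import shutil
import subprocess

# One `lspci -nn` line looks like:
#   <bus>:<dev>.<fn> <class name> [<class id>]: <device name> [<vendor>:<device>]
LINE_RE = re.compile(
    r"^(?P<addr>(?:[0-9a-f]{4}:)?[0-9a-f]{2}:[0-9a-f]{2}\.[0-7])\s+"
    r"(?P<cls>.+?)\s+\[(?P<cls_id>[0-9a-f]{4})\]:\s+"
    r"(?P<name>.+?)\s+\[(?P<vendor>[0-9a-f]{4}):(?P<device>[0-9a-f]{4})\]"
)


def parse_lspci_nn(text):
    """Turn `lspci -nn` text into a list of dicts (addr, cls, name, vendor, device)."""
    devices = []
    for line in text.splitlines():
        match = LINE_RE.match(line.strip())
        if match:
            devices.append(match.groupdict())
    return devices


def by_bus(devices):
    """Group parsed devices by bus number (the 'bb' in bb:dd.f)."""
    buses = {}
    for dev in devices:
        bus = dev["addr"].split(":")[-2]
        buses.setdefault(bus, []).append(dev)
    return buses


if __name__ == "__main__" and shutil.which("lspci"):
    out = subprocess.run(["lspci", "-nn"], capture_output=True, text=True).stdout
    for bus, devs in sorted(by_bus(parse_lspci_nn(out)).items()):
        print(f"bus {bus}:")
        for dev in devs:
            print(f"  {dev['addr']}  {dev['cls']}: {dev['name']} [{dev['vendor']}:{dev['device']}]")
```

Anything that shares a bus number with the GPU or an NVMe drive is worth a second look in the lstopo picture.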

you basically want to try to give the gpu a wide berth and probably also your storage since that will be really fast.

I’d probably try to get the 4x4 aic away from the on-mobo nvme but that won’t matter unless you’ve got all fast pcie4 storage anyway.

nics are too slow for it to matter, much. nics grouped with thunderbolt is probably fine.

so 01:00 is your (a) GPU, so that’s good

lstopo -v --whole-io

maybe needed

That is a very long and detailed output - better option than screen grab?

Just so you can see what your Threadripper is doing for you with those nice PCIe lanes…

Here is my 5950x workstation with its sad need to route everything through the motherboard. OK, not everything but still not the best.

Do I smell a Threadripper PRO build on the horizon @zlynx ?

Having all these lanes is proving to be just glorious…

I am not upgrading my workstation again until DDR5. Probably three years at least.

Then I will probably do a Threadripper or EPYC. Unless Intel delivers a glorious HEDT before then. It could happen!

I’m saving my pennies for that and an Optane P5800X (or similar).

I would have done a Threadripper build this last year but the Zen 3 version was taking too long and 16 cores on 5950X seemed like plenty.

I was so lucky to score one at Mouser of all places… got the last one in stock. Just finalized the build last night with @wendell so testing now… using the P5800X as a level 2 cache with PrimoCache… zippy so far.

Ok - is there a way to determine if an open m.2 slot is away from PCI 01 (GPU) @wendell

For example, I could move the NVMe from M2_B (PCI 02) to M2_A, which is available on the mobo.

Yeah that usually works

Or not @wendell - looks like both m.2 slots want to be friends with the GPU in PCI 01:00. Guess I’ll have to relocate the GPU - thinking slot 1 that hangs off the end. Waiting on some delicious adapters to make that happen…

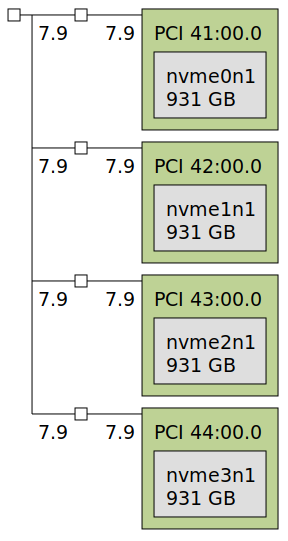

Look at the 4x m.2…

Shouldn’t that be 16 instead of 7.9? Might explain the sluggishness per speed results post.

Or maybe not?
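For the 16 vs 7.9 question, the arithmetic is easy to sketch. This assumes the numbers on the lstopo links are GB/s and uses the standard per-lane rates and line encodings for each PCIe generation. Which link the 7.9 sits on in the screenshot is not something the code can tell you.

```python
# Per-lane raw rate (GT/s) and line-encoding efficiency per PCIe generation.
PCIE_GEN = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),
}


def link_gb_per_s(gen, lanes):
    """Usable one-direction bandwidth of a PCIe link in GB/s (before protocol overhead)."""
    rate, efficiency = PCIE_GEN[gen]
    return rate * efficiency * lanes / 8  # divide by 8: bits -> bytes


for gen, lanes in [(4, 4), (3, 8), (3, 16), (4, 8), (4, 16)]:
    print(f"Gen{gen} x{lanes}: {link_gb_per_s(gen, lanes):.2f} GB/s")
```

A Gen4 x4 link (one M.2 drive) and a Gen3 x8 link both come out at about 7.9 GB/s, while roughly 16 would be Gen4 x8 or Gen3 x16. So 7.9 on a single drive's x4 link would be the expected figure rather than a sign of a halved link.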

The way you can test is to run 3DMark while running SSD tests and see if it’s negatively impacted. This looks to me like the GPU and NVMe don’t share the same root, they’re just on 01 and 02.

Just the one nvme on the same root is probably fine
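If you want to double-check the "same root or not" reading without squinting at the diagram, here is a minimal sketch. It assumes Linux, where each entry under `/sys/bus/pci/devices/` resolves to a path that spells out every bridge between the root complex and the device. The helper names and the `0000:` domain prefix on the two addresses are assumptions for illustration.

```python
import os
import re

PCI_ADDR = re.compile(r"[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]")


def bridge_chain(sysfs_path):
    """PCI addresses along a resolved sysfs device path, root side first."""
    parts = sysfs_path.strip("/").split("/")
    # Keep only components shaped like dddd:bb:dd.f (skips 'sys', 'pci0000:00', ...).
    return [p for p in parts if PCI_ADDR.fullmatch(p)]


def shared_upstream(path_a, path_b):
    """Bridges both devices sit behind, walking down from the root complex."""
    shared = []
    for a, b in zip(bridge_chain(path_a), bridge_chain(path_b)):
        if a != b:
            break
        shared.append(a)
    return shared


def resolve(addr):
    """Resolve a PCI address to its full sysfs path (Linux only)."""
    return os.path.realpath(f"/sys/bus/pci/devices/{addr}")


if __name__ == "__main__":
    gpu, nvme = "0000:01:00.0", "0000:02:00.0"  # assumed addresses, adjust to your lspci output
    if all(os.path.exists(f"/sys/bus/pci/devices/{a}") for a in (gpu, nvme)):
        common = shared_upstream(resolve(gpu), resolve(nvme))
        print("shared upstream bridges:", common or "none (separate root ports)")
```

An empty result means the two devices hang off different root ports. A non-empty one lists the bridges where their traffic can contend.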
