We live in a matrix … I wonder what the next attack vector will be, telepathy?

Laser-Based Audio Injection on Voice-Controllable Systems

Light Commands is a vulnerability of MEMS microphones that allows attackers to use light to remotely inject inaudible and invisible commands into voice assistants such as Google Assistant, Amazon Alexa, Facebook Portal, and Apple Siri.

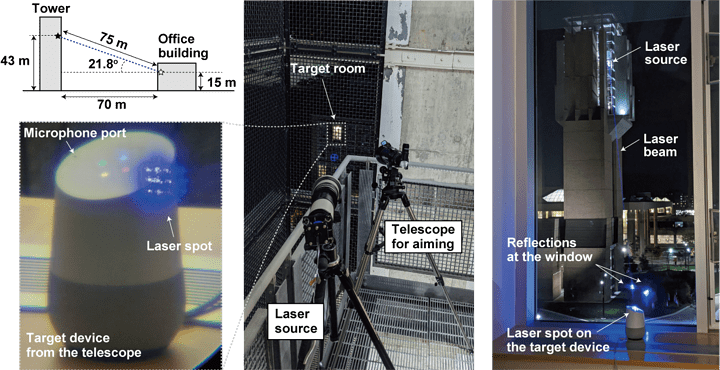

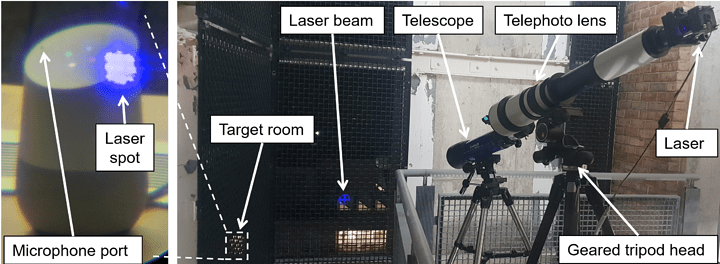

In our paper we demonstrate this effect, successfully using light to inject malicious commands into several voice controlled devices such as smart speakers, tablets, and phones across large distances and through glass windows.

The implications of injecting unauthorized voice commands vary in severity based on the type of commands that can be executed through voice. As an example, in our paper we show how an attacker can use light-injected voice commands to unlock the victim’s smart-lock protected home doors, or even locate, unlock and start various vehicles.

How do Light Commands work?

By shining a laser through a window at the microphones inside smart speakers, tablets, or phones, a faraway attacker can remotely send inaudible and potentially invisible commands, which are then acted upon by Alexa, Portal, Google Assistant, or Siri.

Making things worse, once an attacker has gained control over a voice assistant, the attacker can use it to break other systems. For example, the attacker can:

- Control smart home switches
- Open smart garage doors
- Make online purchases
- Remotely unlock and start certain vehicles
- Open smart locks by stealthily brute-forcing the user's PIN

But why does this happen?

Microphones convert sound into electrical signals. The main discovery behind light commands is that in addition to sound, microphones also react to light aimed directly at them. Thus, by modulating an electrical signal in the intensity of a light beam, attackers can trick microphones into producing electrical signals as if they are receiving genuine audio.
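As a rough illustration of what "modulating an electrical signal in the intensity of a light beam" can mean, the sketch below maps audio samples onto a laser diode drive current around a fixed bias, so that the light intensity follows the audio waveform. The function names and the bias and swing values (200 mA and 150 mA) are hypothetical placeholders chosen for the example, not parameters taken from the paper.

```python
import math


def sine_tone(freq_hz, sample_rate_hz, n_samples):
    """Generate a test tone with samples in [-1, 1]."""
    return [
        math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(n_samples)
    ]


def modulate_laser_current(audio, bias_ma, peak_to_peak_ma):
    """Map audio samples in [-1, 1] to a diode drive current in mA.

    The current swings symmetrically around the DC bias, so the light
    intensity rises and falls with the audio waveform.
    """
    half_swing = peak_to_peak_ma / 2
    if half_swing > bias_ma:
        raise ValueError("bias too low: drive current would go negative")
    # Clip each sample so an over-range input cannot exceed the swing.
    return [bias_ma + half_swing * max(-1.0, min(1.0, s)) for s in audio]


# Hypothetical values: one period of a 1 kHz tone sampled at 48 kHz.
tone = sine_tone(1000, 48000, 48)
current = modulate_laser_current(tone, bias_ma=200.0, peak_to_peak_ma=150.0)
```

In this sketch the current never leaves the range bias ± half the peak-to-peak swing, which is the property a real driver would need in order to keep the diode within its operating limits.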

Ok, but what do voice assistants have to do with this?

Voice assistants inherently rely on voice to interact with the user. By shining a laser on their microphones, an attacker can effectively hijack the voice assistant and send inaudible commands to Alexa, Siri, Portal, or Google Assistant.

What is the range of Light Commands?

Light can easily travel long distances, limiting the attacker only in the ability to focus and aim the laser beam. We have demonstrated the attack in a 110 meter hallway, which is the longest hallway available to us at the time of writing.

But how can I aim the laser accurately, and at such distances?

Careful aiming and laser focusing are indeed required for Light Commands to work. To focus the laser across large distances, one can use a commercially available telephoto lens. Aiming can be done using a geared tripod head, which greatly increases accuracy. An attacker can use a telescope or binoculars to see the device's microphone ports at large distances.
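To get a feel for why a geared tripod head helps, the following back-of-the-envelope sketch computes how small the aiming tolerance becomes at long range. The 5 mm target width is an assumed placeholder, not a measured microphone port size, and the helper names are made up for this example.

```python
import math


def angular_tolerance_deg(target_width_m, distance_m):
    """Full angle (in degrees) subtended by a target of the given width."""
    return math.degrees(2 * math.atan(target_width_m / (2 * distance_m)))


def spot_offset_m(angle_error_deg, distance_m):
    """How far the beam lands from the target for a given pointing error."""
    return distance_m * math.tan(math.radians(angle_error_deg))


# Assumed 5 mm wide target at the 110 m hallway distance.
tolerance = angular_tolerance_deg(0.005, 110)

# A pointing error of only 0.1 degrees at the same distance.
miss = spot_offset_m(0.1, 110)

print(f"tolerance: {tolerance:.4f} deg, miss for 0.1 deg error: {miss:.2f} m")
```

Under these assumptions the target subtends only a few thousandths of a degree, while a 0.1 degree pointing error moves the spot by roughly 19 cm, which is why fine mechanical adjustment matters.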

Which devices are susceptible to Light Commands?

In our experiments, we test our attack on the most popular voice recognition systems, namely Amazon Alexa, Apple Siri, Facebook Portal, and Google Assistant. We benchmark multiple devices such as smart speakers, phones, and tablets as well as third-party devices with built-in speech recognition.

| Device | Voice Recognition System | Minimum Laser Power at 30 cm [mW] | Max Distance at 60 mW [m]* | Max Distance at 5 mW [m]** |
|---|---|---|---|---|
| Google Home | Google Assistant | 0.5 | 50+ | 110+ |
| Google Home mini | Google Assistant | 16 | 20 | - |
| Google NEST Cam IQ | Google Assistant | 9 | 50+ | - |
| Echo Plus 1st Generation | Amazon Alexa | 2.4 | 50+ | 110+ |
| Echo Plus 2nd Generation | Amazon Alexa | 2.9 | 50+ | 50 |
| Echo | Amazon Alexa | 25 | 50+ | - |
| Echo Dot 2nd Generation | Amazon Alexa | 7 | 50+ | - |
| Echo Dot 3rd Generation | Amazon Alexa | 9 | 50+ | - |
| Echo Show 5 | Amazon Alexa | 17 | 50+ | - |
| Echo Spot | Amazon Alexa | 29 | 50+ | - |
| Facebook Portal Mini | Alexa + Portal | 18 | 5 | - |
| Fire Cube TV | Amazon Alexa | 13 | 20 | - |
| EchoBee 4 | Amazon Alexa | 1.7 | 50+ | 70 |
| iPhone XR | Siri | 21 | 10 | - |
| iPad 6th Gen | Siri | 27 | 20 | - |
| Samsung Galaxy S9 | Google Assistant | 60 | 5 | - |
| Google Pixel 2 | Google Assistant | 46 | 5 | - |

While we do not claim that our list of tested devices is exhaustive, we do argue that it does provide some intuition about the vulnerability of popular voice recognition systems to Light Commands.
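One way to read the minimum-power column is to ask which devices responded at or below a given laser power. The sketch below encodes that column as data and filters it; the data structure and function name are illustrative only, and the figures are the 30 cm measurements from the table, so they say nothing about longer distances.

```python
# (device, voice recognition system, minimum laser power at 30 cm in mW)
DEVICES = [
    ("Google Home", "Google Assistant", 0.5),
    ("Google Home mini", "Google Assistant", 16),
    ("Google NEST Cam IQ", "Google Assistant", 9),
    ("Echo Plus 1st Generation", "Amazon Alexa", 2.4),
    ("Echo Plus 2nd Generation", "Amazon Alexa", 2.9),
    ("Echo", "Amazon Alexa", 25),
    ("Echo Dot 2nd Generation", "Amazon Alexa", 7),
    ("Echo Dot 3rd Generation", "Amazon Alexa", 9),
    ("Echo Show 5", "Amazon Alexa", 17),
    ("Echo Spot", "Amazon Alexa", 29),
    ("Facebook Portal Mini", "Alexa + Portal", 18),
    ("Fire Cube TV", "Amazon Alexa", 13),
    ("EchoBee 4", "Amazon Alexa", 1.7),
    ("iPhone XR", "Siri", 21),
    ("iPad 6th Gen", "Siri", 27),
    ("Samsung Galaxy S9", "Google Assistant", 60),
    ("Google Pixel 2", "Google Assistant", 46),
]


def responsive_at(power_mw):
    """Devices whose minimum laser power at 30 cm is at or below power_mw."""
    return [name for name, _system, min_mw in DEVICES if min_mw <= power_mw]


low_power = responsive_at(5)
print(low_power)
```

At 5 mW this selects the same four devices that have an entry in the 5 mW distance column of the table.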

Note: * Limited to a 50 m long corridor. ** Limited to a 110 m long corridor.

Can speaker recognition protect me from Light Commands?

At the time of writing, speaker recognition is off by default for smart speakers and is only enabled by default for devices like phones and tablets. Thus, Light Commands can be used on these smart speakers without imitating the owner's voice. Moreover, even if enabled, speaker recognition only verifies that the wake-up words (e.g., "OK Google" or "Alexa") are said in the owner's voice, and not the rest of the command. This means that one "OK Google" or "Alexa" spoken by the owner can be used to compromise all the commands. Finally, as we show in our work, speaker recognition for wake-up words is often weak and can sometimes be bypassed by an attacker using online text-to-speech synthesis tools to imitate the owner's voice.


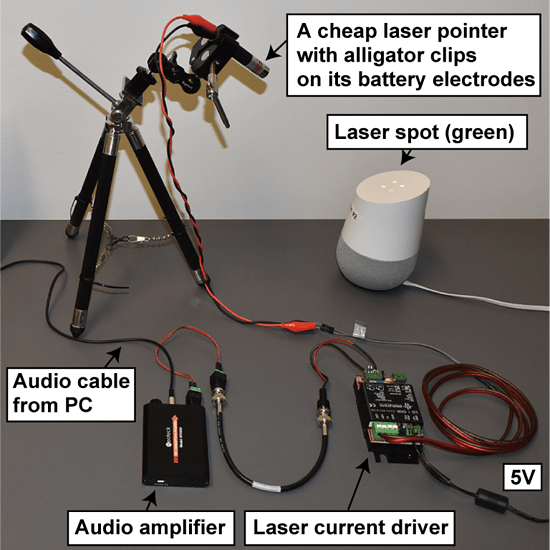

Do Light Commands require special equipment? How can I build such a setup?

The Light Commands attack can be mounted using a simple laser pointer ($13.99, $16.99, and $17.99 on Amazon), a laser driver (Wavelength Electronics LD5CHA, $339), and a sound amplifier (Neoteck NTK059, $27.99 on Amazon). A telephoto lens (Opteka 650-1300mm, $199.95 on Amazon) can be used to focus the laser for long range attacks.
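Adding up the listed prices gives a sense of the total cost. The short sketch below does the arithmetic in cents to avoid floating-point rounding; the variable names are arbitrary, and the prices are simply those quoted above at the time of writing.

```python
# Prices in cents, as quoted above.
LASER_POINTERS = [1399, 1699, 1799]
LASER_DRIVER = 33900
SOUND_AMPLIFIER = 2799
TELEPHOTO_LENS = 19995


def setup_cost_cents(pointer, with_lens):
    """Total cost of one setup, optionally including the telephoto lens."""
    total = pointer + LASER_DRIVER + SOUND_AMPLIFIER
    if with_lens:
        total += TELEPHOTO_LENS
    return total


cheapest_short_range = setup_cost_cents(min(LASER_POINTERS), with_lens=False)
priciest_long_range = setup_cost_cents(max(LASER_POINTERS), with_lens=True)

print(f"${cheapest_short_range / 100:.2f} to ${priciest_long_range / 100:.2f}")
```

With these figures, the setup costs between roughly $381 without the lens and $585 with it.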

How vulnerable are other voice controllable systems?

While our paper focuses on Alexa, Siri, Portal, and Google Assistant, the basic vulnerability exploited by Light Commands stems from design issues in MEMS microphones. As such, any system that uses MEMS microphones and acts on this data without additional user confirmation might be vulnerable.

How can I detect if someone used Light Commands against me?

While command injection via light makes no sound, an attentive user can notice the attacker's light beam reflected on the target device. Alternatively, one can attempt to monitor the device's verbal response and light pattern changes, both of which serve as command confirmation.

Have Light Commands been abused in the wild?

So far we have not seen any indications that this attack has been maliciously exploited.


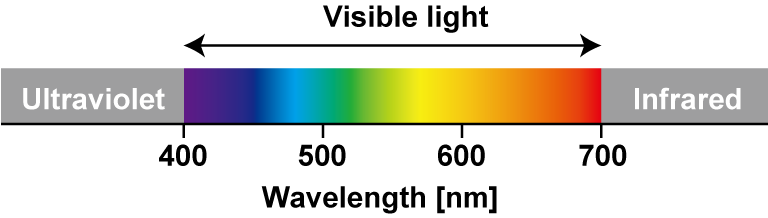

Does the effect depend on laser color or wavelength?

During our experiments, we note that the effects are generally independent of color and wavelength. Although blue and red light are at opposite ends of the visible spectrum, the levels of the injected audio signal are in the same range and the shapes of the frequency-response curves are also similar.

Do I have to use a laser? What about other light sources?

In principle, any sufficiently bright light can be used to mount our attack. For example, the Acebeam W30 laser-excited flashlight can be used as an alternative to a laser diode.

Is it possible to mitigate this issue?

An additional layer of authentication can be effective at somewhat mitigating the attack. Alternatively, in case the attacker cannot eavesdrop on the device's response, having the device ask the user a simple randomized question before command execution can be an effective way of preventing the attacker from obtaining successful command execution.

Manufacturers can also attempt to use sensor fusion techniques, such as acquiring audio from multiple microphones. When the attacker uses a single laser, only a single microphone receives a signal while the others receive nothing. Thus, manufacturers can attempt to detect such anomalies and ignore the injected commands.

Another approach is to reduce the amount of light reaching the microphone's diaphragm, either by using a barrier that physically blocks straight light beams and eliminates the line of sight to the diaphragm, or by implementing a non-transparent cover on top of the microphone hole to attenuate the light hitting the microphone. However, we note that such physical barriers are only effective up to a certain point, as an attacker can always increase the laser power in an attempt to compensate for the cover-induced attenuation or to burn through the barriers, creating a new light path.
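The multi-microphone idea can be sketched as a simple energy comparison across channels: genuine speech should reach every microphone at a comparable level, whereas a single laser spot excites only one. The code below is a minimal illustration under that assumption. The function names, the ratio threshold, and the noise floor are hypothetical, and a production detector would need to handle microphone placement, gain differences, and multi-beam attackers.

```python
import math


def rms(samples):
    """Root-mean-square level of one channel (0.0 for an empty channel)."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def looks_like_single_mic_injection(channels, ratio_threshold=10.0,
                                    noise_floor=1e-4):
    """Flag input where one microphone is far louder than all the others.

    channels: one list of samples per microphone, captured over the same
    time window. Returns True when the loudest channel exceeds every other
    channel by more than ratio_threshold.
    """
    levels = sorted(rms(ch) for ch in channels)
    if len(levels) < 2:
        return False  # nothing to compare against
    loudest, others = levels[-1], levels[:-1]
    if loudest <= noise_floor:
        return False  # silence on all microphones
    return all(
        loudest > ratio_threshold * max(level, noise_floor)
        for level in others
    )


# Hypothetical captures from a three-microphone array.
injected = [[0.5, -0.5] * 100, [0.001, -0.001] * 100, [0.001, -0.001] * 100]
spoken = [[0.5, -0.5] * 100, [0.4, -0.4] * 100, [0.45, -0.45] * 100]

print(looks_like_single_mic_injection(injected))
print(looks_like_single_mic_injection(spoken))
```

A device using such a check could discard the command or fall back to asking the user for confirmation whenever the anomaly is flagged.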

Is the laser beam used in the attack safe?

Laser radiation requires special controls for safety, as high-powered lasers might cause hazards of fire, eye damage, and skin damage. In this work, we consider low-power Class 3R and Class 3B lasers. Class 3R devices (such as common laser pointers) emit under 5 mW of optical power and are considered safe to human eyes for brief exposure durations. Class 3B systems emit between 5 and 500 mW and might cause eye injury even from short beam exposure durations. We urge that researchers receive formal laser safety training and approval of experimental designs before attempting reproduction of our work.

Why is it called Light Commands?

Our work is called Light Commands as we exploit light (as opposed to sound) as the primary medium to carry the command to the microphone.