I would like to build a “simple” NAS, mainly as a way to move the HDDs out of my PC and into a box that can withstand the failure of one or more drives while losing an acceptable share of capacity (but not 50%) to parity.

In the future I want to run Jellyfin (or some other home media server w/o transcoding, just streaming and providing a unified interface - there will be time to do the research later though).

However, I am still missing some pieces and I am not sure how to move forward, so I would like to ask for your help.

HDD Allocation + ZFS:

I would like to run ZFS. I like its speed and (imo) reliability, plus the regular error-checking and such. As for the allocation, I am considering (I hope I am using the terminology correctly):

- 3 striped RAIDZ1 vdevs of 3 HDDs each (9 drives in total)

- 2 striped RAIDZ1 vdevs of 4 HDDs each (8 drives in total = 110€ saved)

Which one would you suggest? Or is it simply a matter of more redundancy for more money versus less redundancy for less money?
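To make the comparison concrete, here is a rough sketch of the arithmetic for both layouts. It assumes 4TB drives (the WD40EFRX from the parts list below) and one parity drive per RAIDZ1 vdev, and it ignores ZFS metadata, padding and the TB/TiB difference, so the real numbers will come out somewhat lower. The `Layout` class and its field names are made up for this illustration.

```python
from dataclasses import dataclass

DRIVE_TB = 4  # assumption: every drive is a 4TB WD40EFRX


@dataclass
class Layout:
    vdevs: int
    drives_per_vdev: int
    parity_per_vdev: int = 1  # RAIDZ1 spends one drive's worth of space per vdev

    @property
    def total_drives(self) -> int:
        return self.vdevs * self.drives_per_vdev

    @property
    def raw_tb(self) -> int:
        return self.total_drives * DRIVE_TB

    @property
    def usable_tb(self) -> int:
        # Idealised: data drives per vdev times drive size, summed over vdevs.
        data_drives = self.drives_per_vdev - self.parity_per_vdev
        return self.vdevs * data_drives * DRIVE_TB

    @property
    def parity_fraction(self) -> float:
        return (self.vdevs * self.parity_per_vdev) / self.total_drives


if __name__ == "__main__":
    for name, layout in [("3 x 3-wide", Layout(3, 3)), ("2 x 4-wide", Layout(2, 4))]:
        print(
            f"{name}: {layout.total_drives} drives, "
            f"{layout.raw_tb} TB raw, {layout.usable_tb} TB usable, "
            f"{layout.parity_fraction:.0%} lost to parity"
        )
```

Under these assumptions both layouts end up at about 24 TB usable. The 9-drive option gives up 33% of raw capacity to parity and the 8-drive option 25%. In both cases each vdev survives one drive failure, and losing a whole vdev loses the pool.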

I will first build one vdev and copy my ~7.5TB of data to it. Then I will erase the old drives, test them (as per this guide) and, if they turn out well, add them together with the rest of the new drives to the other vdev (or, in the case of 3 vdevs, each old HDD to one vdev). Please correct me if this is not possible.

Hardware:

My current build draft:

CASE: Fractal Define R6 (already purchased, incl. extra HDD bays (10 in total available now))

PSU: Corsair RM550x (I will use one extra SATA power cable from my RM750x model in my rig)

MB: Supermicro X11SSH-CTF (unfortunately it is only for Intel’s 6th/7th gen, a shame they don’t have the same board for 8th/9th gen, I would then save 10€ on G5400 as opposed to an older G4560 (I cannot find anyone selling one))

CPU: Intel Pentium G4560 (for my use it should be plenty)

RAM: 2x Kingston KSM24ED8/16ME (not QVL’d by SuperMicro, but Kingston states X11SSH-CTF on its compatibility list)

HDD: 6-7x WD RED 4TB WD40EFRX (expensive for what it is, but whatever, I also need to live not far away from that server…)

Is there anything else you would recommend? Especially in terms of the board: Supermicro is the only brand I really know of in the “server world”. If there are alternatives, I would like to have:

- at least 10 SATA ports (whether straight or with additional ones via miniSAS)

- 10GbE NIC (RJ-45)

- board where I can shove a cheap Pentium.

For me, 450€ is a lot of money and I lack an overview of this segment of the market, so maybe this price is an utter bargain (I would like to know whether it is, or whether it is just an okay price, or what not).

What about RAM? I think I could spend extra for 64GB, but would that only be useful with a bigger array, or does caching benefit from it in any case? I cannot really imagine what it would do for me in terms of real-world performance.

As far as the connection speed goes, I will buy a QNAP 5GbE USB adapter for my PC (I use a vertical GPU mount, so there is no space left for an add-in card). I will then connect the NAS and my PC directly for maximum speed and use the second Ethernet port for the rest of the network (1Gbit).
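As a rough sanity check on what the faster link buys, here is a small sketch that estimates how long the initial ~7.5TB copy would take at different link speeds. It assumes the link runs at its full nominal rate, which is an upper bound only: protocol overhead, the USB adapter and the disks themselves will all make it slower. The function name is made up for this illustration.

```python
def transfer_hours(data_tb: float, link_gbit: float) -> float:
    """Best-case time in hours to move data_tb terabytes over a link_gbit Gbit/s link."""
    bits = data_tb * 1e12 * 8            # decimal terabytes to bits
    seconds = bits / (link_gbit * 1e9)   # nominal line rate, no overhead
    return seconds / 3600


if __name__ == "__main__":
    for speed in (1, 5, 10):
        print(f"{speed:>2} Gbit/s: {transfer_hours(7.5, speed):.1f} h for 7.5 TB")
```

At best, that is roughly 16.7 hours over 1Gbit versus about 3.3 hours over 5GbE for the initial copy.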

I will also add a UPS (for both the NAS and my PC; power outages are rare, but I hate it when they occur and everything cuts out), but I need to do more research first, especially on what capacity I need. I have heard about a protocol for communicating with the UPS via USB and an option to let the NAS shut down automatically in such a scenario, so I want to use it (will that affect my OS choice below??).

OS:

Which would you suggest? I don’t want to use UnRaid (I don’t need its VM capabilities and I don’t want to be required to have at least 1 UR array (from what I read in the piece with GN)).

From my mainstream point of view, that leaves me with probably just two options, FreeNAS or OMV. FreeNAS could be the best choice as it should require no (or the least) fiddling, while OMV would require me to install the Proxmox Linux kernel and the ZFS plugin, but afterwards it should all be configurable via the GUI afaik.

Just a note: I am not shy of the CLI, but I work in the CLI all day at work and I would prefer to have this in GUI form. I am also not sure I would feel comfortable doing a lot of ZFS configuration in the CLI at first.

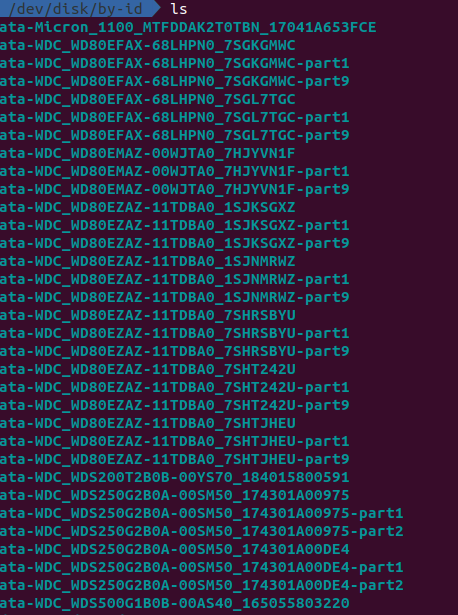

Would you mind also advising me on how I can match a drive's ID in the OS with the particular HDD in the system? Is there an S/N readout in the OS which I could match against the S/N on the HDD labels?
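For reference, a minimal sketch of one way this might work on a Linux-based system such as OMV, assuming the `lsblk` tool is available (on FreeNAS, which is FreeBSD-based, a different tool would be needed). It asks `lsblk` for JSON output with the `NAME`, `SERIAL` and `TYPE` columns and builds a device-to-serial mapping. The helper names and the serial numbers in `SAMPLE` are invented for illustration.

```python
import json
import subprocess

# Invented sample in the shape of `lsblk -J -d -o NAME,SERIAL,TYPE` output.
SAMPLE = """
{"blockdevices": [
  {"name": "sda", "serial": "WD-EXAMPLE0001", "type": "disk"},
  {"name": "sdb", "serial": "WD-EXAMPLE0002", "type": "disk"},
  {"name": "sr0", "serial": null, "type": "rom"}
]}
"""


def parse_lsblk(json_text: str) -> dict:
    """Map /dev/<name> to serial number for whole disks only."""
    data = json.loads(json_text)
    return {
        f"/dev/{dev['name']}": dev.get("serial") or "unknown"
        for dev in data.get("blockdevices", [])
        if dev.get("type") == "disk"
    }


def read_serials() -> dict:
    """Run lsblk on this machine and parse its JSON output."""
    result = subprocess.run(
        ["lsblk", "-J", "-d", "-o", "NAME,SERIAL,TYPE"],
        check=True, capture_output=True, text=True,
    )
    return parse_lsblk(result.stdout)


if __name__ == "__main__":
    try:
        serials = read_serials()
    except (FileNotFoundError, subprocess.CalledProcessError):
        serials = parse_lsblk(SAMPLE)  # fall back to the invented sample
    for device, serial in sorted(serials.items()):
        print(f"{device}: {serial}")
```

The printed serials could then be compared with the labels on the drives, for example by noting which bay each S/N sits in before the case is closed.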

Thanks to anyone sharing a thought or two, I really appreciate it.