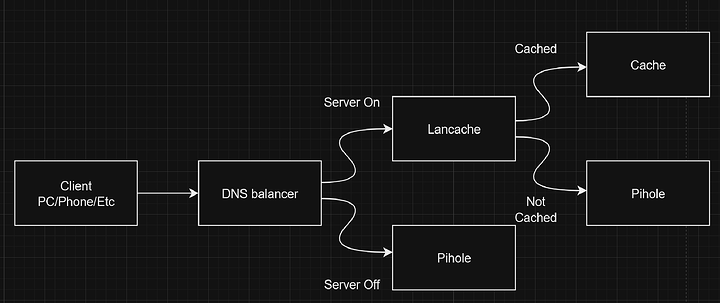

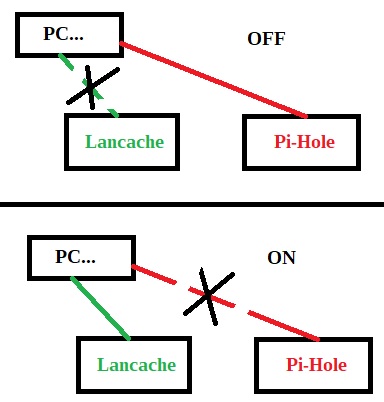

Because of high energy prices in Europe, I don’t keep my storage server on at all times. I wanted to use lancache, but it has to be the only DNS server, so I would lose DNS whenever the server was off. What I wanted was the setup pictured below:

In effect, DNS fails over to the upstream Pi-hole whenever lancache is unavailable.

I spent some time looking for a solution. NGINX Plus appears to support this kind of failover, but it costs thousands per year, so it isn’t really an option for a homelab. It turns out that you can also compile nginx with Lua support, which lets you implement the failover yourself.

The easiest way to do this is to install OpenResty, an nginx distribution bundled with LuaJIT and Lua modules: https://openresty.org/en/installation.html.

Once that is done, configure the service to start automatically. Because lancache monolithic serves files on port 80, we can use that port to test whether it is running. Nginx opens a TCP connection to port 80. If the connection succeeds, queries go to the lancache DNS. If it fails, they go to the failover DNS.

If your lancache DNS and file server are on different IP addresses, you will need to edit the config to reflect this.

The `stream` block of your nginx.conf must contain the following. This block sits at the top level of the file, outside any `http` block. Substitute your lancache server and failover DNS IP addresses for 10.10.10.2 and 10.10.10.3 respectively. On my system, nginx.conf is in /usr/local/openresty/nginx/conf.

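```nginx
stream {
    upstream dns {
        # nginx requires at least one server in an upstream block.
        # The balancer_by_lua_block below chooses the actual peer.
        server 10.10.10.2:53;

        balancer_by_lua_block {
            local balancer = require "ngx.balancer"
            -- LuaSocket is not one of the ngx.* modules; install it if this require fails
            local socket = require "socket"

            local host = "10.10.10.2" -- Your lancache server
            local port = 53
            local timeout = 0.1       -- LuaSocket timeouts are in seconds

            -- lancache monolithic serves files on port 80, so a successful
            -- TCP connect there means the lancache server is up
            local test_connection = socket.tcp()
            test_connection:settimeout(timeout)
            local connect_ok, connect_err = test_connection:connect(host, 80)
            test_connection:close()

            if not connect_ok then
                host = "10.10.10.3" -- Your failover server
            end

            local ok, err = balancer.set_current_peer(host, port)
            if not ok then
                ngx.log(ngx.ERR, "failed to set DNS peer: ", err)
                return ngx.exit(ngx.ERROR)
            end
        }
    }

    server {
        listen 53 udp;
        proxy_pass dns;
    }
}
```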
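If your lancache DNS and file server are on different IP addresses, one possible adaptation is to probe port 80 on the file server and still send queries to the DNS address. The following is a sketch, not a tested config. 10.10.10.4 is a hypothetical file server address, and only the `upstream` block changes. It relies on the same LuaSocket dependency as the config above.

```nginx
upstream dns {
    server 10.10.10.2:53;  # placeholder; the Lua below picks the real peer

    balancer_by_lua_block {
        local balancer = require "ngx.balancer"
        local socket = require "socket"  -- LuaSocket, as in the config above

        local dns_host   = "10.10.10.2"  -- lancache DNS
        local cache_host = "10.10.10.4"  -- hypothetical: lancache file server on its own IP
        local fallback   = "10.10.10.3"  -- failover DNS (e.g. the Pi-hole)

        -- Probe the file server, not the DNS server, on port 80
        local probe = socket.tcp()
        probe:settimeout(0.1)
        local up = probe:connect(cache_host, 80)
        probe:close()

        -- connect returns 1 on success and nil on failure
        local host = up and dns_host or fallback
        local ok, err = balancer.set_current_peer(host, 53)
        if not ok then
            ngx.log(ngx.ERR, "failed to set DNS peer: ", err)
            return ngx.exit(ngx.ERROR)
        end
    }
}
```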

Restart the OpenResty service and point your clients’ DNS at the OpenResty server.

You may need to increase the number of files or connections nginx can open by adjusting the `worker_rlimit_nofile` or `worker_connections` directives.
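These two directives live in different places. `worker_rlimit_nofile` goes in the main (top-level) context, and `worker_connections` goes in the existing `events` block. The values below are placeholders, not tuned recommendations. A related option in the stream proxy module is `proxy_responses 1;`. It tells nginx that each UDP DNS query expects one reply datagram, so nginx can end the session after that reply instead of holding it until `proxy_timeout` expires (10 minutes by default). This may reduce how many connections accumulate in the first place.

```nginx
# Main (top-level) context of nginx.conf
worker_rlimit_nofile 8192;     # placeholder value

events {
    worker_connections 4096;   # placeholder value; edit the existing events block
}

stream {
    # upstream dns { ... } unchanged

    server {
        listen 53 udp;
        proxy_pass dns;
        proxy_responses 1;     # one reply per DNS query ends the UDP session
        proxy_timeout 5s;      # placeholder; the default is 10m
    }
}
```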

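This method adds some latency. Every query first waits for the port 80 probe, which can take up to the 0.1 s timeout when lancache is off. In my experience, though, the delay hasn’t been noticeable.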