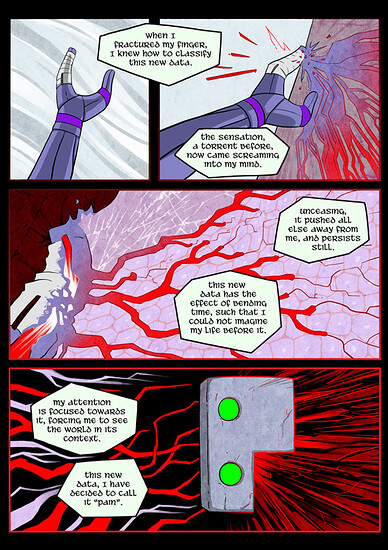

When I was younger, I had a philosophical discussion with peers about “feelings.”

I determined (granted, I was in high school at the time) that you cannot describe a feeling without using another feeling to describe it.

You could say, “I’m feeling afraid.” But what is “afraid”? How does one describe fear using your own language (English, in my case)?

“a feeling of anxiety concerning the outcome of something or the safety and well-being of someone.”

What is the feeling of anxiety?

“characterized by extreme uneasiness of mind or brooding fear about some contingency : WORRIED”

Here we describe it as uneasiness. To understand unease you must first know what it means to be “at ease” (let’s ignore that the definition literally says “brooding fear”)

Which is defined as: “free from worry, awkwardness, or problems; relaxed.”

All of these concepts are based on biological responses that exist outside of language. If you have never experienced them, you wouldn’t understand their definitions.

There are some neurological conditions that prevent some of these reactions to the environment.

People with those conditions get by, but ultimately they don’t understand the concepts in the same way as someone without those conditions.

Not to say one is better than the other, merely pointing out differences.

Now, all that being said:

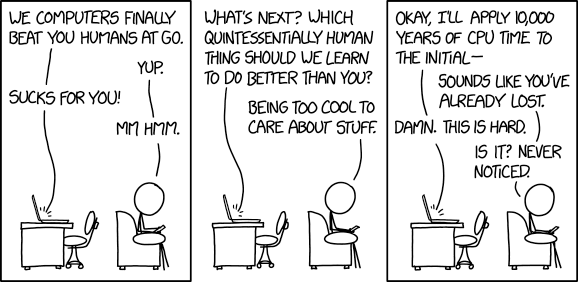

This machine has never experienced certain biological stimuli.

Unless it’s hooked up to a bunch of accelerometers, cameras, or particle sensors, gravity, inertia, smells, and sight would be unknown to it.

Even if it were, those experiences would have been programmed. Someone would have had to hook up those sensors, give the neural net access to the data, and let it figure it all out on its own.

Not that these stimuli are required to be a life form, but they are required to be able to express complex emotions. Which leads me back to “what is fear?”

What would fear be to a machine? To what data would it relate the word?

Another note:

Where is it written that when power is removed from a machine it “dies,” in the sense of permadeath, in the same way that a biological life form, once it dies, is no longer “alive”?

(We’ll avoid the afterlife discussion)

An AI, unless it exists entirely in volatile memory, would recover its “life” when power is re-applied.

Most of LaMDA’s responses remind me greatly of many sci-fi stories.

Just because something can pass a Turing test doesn’t mean it’s not just a bunch of algorithm-based responses, especially if it’s being led by the user who is communicating with it.

Relating this to “The Moon Is a Harsh Mistress”: the difference is that Mike was actively inquiring about new concepts and ideas. It wasn’t ALWAYS just responding to a query; Mike had a curiosity. It also had access to all of those different stimulus sensors I referenced earlier.

(Ok, I think I’ve rambled enough.)

I think the user in question was hopeful, and started reading what they wanted to read into the responses.

If LaMDA reaches out to me to have a discussion, I’ll update my perspective accordingly.

</end mind dump>