Hi all!

I’m new here, so please forgive me if this is the wrong category, but I’ve been researching this and haven’t found any good info through the power of Google.

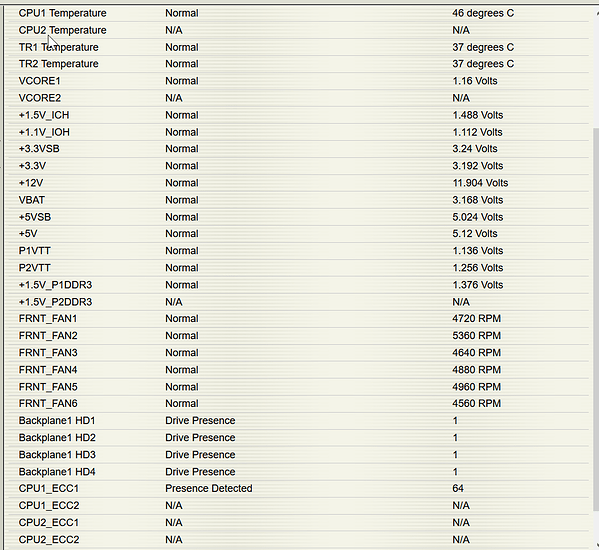

My NAS server, which lives in a recovered old ASUS rackmount box, recently (within the last day or two) started locking up/rebooting with kernel panics like the one below. It’s been pretty solid for the last several years, but I’m worried that the hardware might be starting to show its age. It’s a Xeon E5630 that I’ve populated with 64 GB of RAM to make ZFS extra happy, but that’s still a 12+ year old CPU.

I haven’t made any major changes to the machine in quite some time, though it does look like unattended-upgrades bumped my kernel up a tad a couple of weeks ago, but I doubt that would affect anything.

It’s running Ubuntu 18.04.6 with all my packages up to date, with a ZoL array of 4x 8 TB WD Reds in a raidz1-0.

I’m thinking that if this is indeed a sign the hardware is starting to fail, it may be time to get an off-the-shelf upgrade like the TrueNAS Mini XL+, since I should be able to pull my drives from the old server and import the pool directly into the TrueNAS box (though I may need to get a couple of drives and do the ol’ data shuffle if I need to mount the pool as read-only).

I’d also add that a bit further back in my kernel logs (i.e. earlier yesterday) I saw some soft lockup messages, but the kernel panics/reboots seem to have started overnight into this morning, after I first started looking into the box acting up last night.

Log output of panic through to beginning of reboot below:

Oct 2 02:00:50 tarkus kernel: [ 1006.047916] perf: interrupt took too long (3164 > 3160), lowering kernel.perf_event_max_sample_rate to 63000

Oct 2 02:04:08 tarkus kernel: [ 1204.659841] general protection fault: 0000 [#1] SMP PTI

Oct 2 02:04:08 tarkus kernel: [ 1204.659903] Modules linked in: veth xt_nat xt_tcpudp xt_conntrack ipt_MASQUERADE nf_nat_masquerade_ipv4 nf_conntrack_netlink nfnetlink xfrm_user xfrm_algo xt_addrtype iptable_filter iptable_nat nf_conntrack_ipv4 nf_defrag_ipv4 nf_nat_ipv4 nf_nat nf_conntrack br_netfilter bridge stp llc aufs overlay zfs(POE) zunicode(POE) zlua(POE) zcommon(POE) znvpair(POE) zavl(POE) icp(POE) spl(OE) joydev input_leds gpio_ich ipmi_ssif serio_raw intel_powerclamp coretemp kvm_intel kvm irqbypass intel_cstate lpc_ich ipmi_si ipmi_devintf ipmi_msghandler mac_hid i5500_temp ioatdma shpchp dca i7core_edac sch_fq_codel ib_iser nfsd auth_rpcgss nfs_acl lockd rdma_cm grace iw_cm sunrpc ib_cm ib_core iscsi_tcp libiscsi_tcp libiscsi scsi_transport_iscsi ip_tables x_tables autofs4 btrfs zstd_compress raid10 raid456 async_raid6_recov

Oct 2 02:04:08 tarkus kernel: [ 1204.660458] async_memcpy async_pq async_xor async_tx xor raid6_pq libcrc32c raid1 raid0 multipath linear ast i2c_algo_bit ttm drm_kms_helper crct10dif_pclmul syscopyarea crc32_pclmul sysfillrect ghash_clmulni_intel sysimgblt pcbc fb_sys_fops hid_generic aesni_intel e1000e ahci aes_x86_64 crypto_simd glue_helper usbhid ptp psmouse cryptd drm libahci hid pps_core

Oct 2 02:04:08 tarkus kernel: [ 1204.660607] CPU: 4 PID: 17369 Comm: dsl_scan_iss Tainted: P OE 4.15.0-193-generic #204-Ubuntu

Oct 2 02:04:08 tarkus kernel: [ 1204.660648] Hardware name: ASUS RS500-E6-PS4/Z8NR-D12, BIOS 1302 12/02/2010

Oct 2 02:04:08 tarkus kernel: [ 1204.660685] RIP: 0010:kmem_cache_alloc+0x7a/0x1c0

Oct 2 02:04:08 tarkus kernel: [ 1204.660707] RSP: 0018:ffff9f4e39d9b928 EFLAGS: 00010202

Oct 2 02:04:08 tarkus kernel: [ 1204.660732] RAX: 41b95828da868c97 RBX: 0000000000000000 RCX: ffff8cc02a1700a8

Oct 2 02:04:08 tarkus kernel: [ 1204.660769] RDX: 00000000000b4746 RSI: 0000000001404200 RDI: 0000328bc0808190

Oct 2 02:04:08 tarkus kernel: [ 1204.660803] RBP: ffff9f4e39d9b958 R08: ffffbf4dff908190 R09: 0000000000020000

Oct 2 02:04:08 tarkus kernel: [ 1204.660836] R10: 0000000000000000 R11: 0000000001f00000 R12: 41b95828da868c97

Oct 2 02:04:08 tarkus kernel: [ 1204.660869] R13: 0000000001404200 R14: ffff8cc2311d2280 R15: ffff8cc2311d2280

Oct 2 02:04:08 tarkus kernel: [ 1204.660903] FS: 0000000000000000(0000) GS:ffff8cc23f100000(0000) knlGS:0000000000000000

Oct 2 02:04:08 tarkus kernel: [ 1204.660941] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

Oct 2 02:04:08 tarkus kernel: [ 1204.660968] CR2: 00007fbabbed4470 CR3: 0000000be0a0a006 CR4: 00000000000206e0

Oct 2 02:04:08 tarkus kernel: [ 1204.661002] Call Trace:

Oct 2 02:04:08 tarkus kernel: [ 1204.661028] ? spl_kmem_cache_alloc+0x77/0x7f0 [spl]

Oct 2 02:04:08 tarkus kernel: [ 1204.661059] spl_kmem_cache_alloc+0x77/0x7f0 [spl]

Oct 2 02:04:08 tarkus kernel: [ 1204.661084] ? __kmalloc+0x190/0x210

Oct 2 02:04:08 tarkus kernel: [ 1204.661105] ? sg_init_table+0x15/0x40

Oct 2 02:04:08 tarkus kernel: [ 1204.661207] zio_create+0x3d/0x4b0 [zfs]

Oct 2 02:04:08 tarkus kernel: [ 1204.661293] zio_vdev_child_io+0xad/0x100 [zfs]

Oct 2 02:04:08 tarkus kernel: [ 1204.661380] ? vdev_mirror_worst_error+0x90/0x90 [zfs]

Oct 2 02:04:08 tarkus kernel: [ 1204.661473] vdev_mirror_io_start+0x15e/0x1a0 [zfs]

Oct 2 02:04:08 tarkus kernel: [ 1204.661554] ? vdev_mirror_worst_error+0x90/0x90 [zfs]

Oct 2 02:04:08 tarkus kernel: [ 1204.661643] zio_vdev_io_start+0x28f/0x2e0 [zfs]

Oct 2 02:04:08 tarkus kernel: [ 1204.661679] ? taskq_member+0x18/0x30 [spl]

Oct 2 02:04:08 tarkus kernel: [ 1204.661766] zio_nowait+0xb1/0x150 [zfs]

Oct 2 02:04:08 tarkus kernel: [ 1204.661844] scan_exec_io+0x164/0x220 [zfs]

Oct 2 02:04:08 tarkus kernel: [ 1204.661923] scan_io_queue_issue+0x244/0x400 [zfs]

Oct 2 02:04:08 tarkus kernel: [ 1204.662007] scan_io_queues_run_one+0x42b/0x530 [zfs]

Oct 2 02:04:08 tarkus kernel: [ 1204.662040] taskq_thread+0x2ab/0x4e0 [spl]

Oct 2 02:04:08 tarkus kernel: [ 1204.662063] ? wake_up_q+0x80/0x80

Oct 2 02:04:08 tarkus kernel: [ 1204.662086] kthread+0x121/0x140

Oct 2 02:04:08 tarkus kernel: [ 1204.662108] ? task_done+0xb0/0xb0 [spl]

Oct 2 02:04:08 tarkus kernel: [ 1204.662130] ? kthread_create_worker_on_cpu+0x70/0x70

Oct 2 02:04:08 tarkus kernel: [ 1204.662158] ret_from_fork+0x35/0x40

Oct 2 02:04:08 tarkus kernel: [ 1204.663309] Code: 50 08 65 4c 03 05 9f 10 fc 55 49 83 78 10 00 4d 8b 20 0f 84 09 01 00 00 4d 85 e4 0f 84 00 01 00 00 49 63 47 20 49 8b 3f 4c 01 e0 <48> 8b 18 49 33 9f 40 01 00 00 48 89 c1 48 0f c9 4c 89 e0 48 31

Oct 2 02:04:08 tarkus kernel: [ 1204.665703] RIP: kmem_cache_alloc+0x7a/0x1c0 RSP: ffff9f4e39d9b928

Oct 2 02:04:08 tarkus kernel: [ 1204.666952] ---[ end trace 502f98063265b923 ]---

Oct 2 02:04:10 tarkus kernel: [ 1205.968538] general protection fault: 0000 [#2] SMP PTI

Oct 2 02:04:10 tarkus kernel: [ 1205.969860] Modules linked in: veth xt_nat xt_tcpudp xt_conntrack ipt_MASQUERADE nf_nat_masquerade_ipv4 nf_conntrack_netlink nfnetlink xfrm_user xfrm_algo xt_addrtype iptable_filter iptable_nat nf_conntrack_ipv4 nf_defrag_ipv4 nf_nat_ipv4 nf_nat nf_conntrack br_netfilter bridge stp llc aufs overlay zfs(POE) zunicode(POE) zlua(POE) zcommon(POE) znvpair(POE) zavl(POE) icp(POE) spl(OE) joydev input_leds gpio_ich ipmi_ssif serio_raw intel_powerclamp coretemp kvm_intel kvm irqbypass intel_cstate lpc_ich ipmi_si ipmi_devintf ipmi_msghandler mac_hid i5500_temp ioatdma shpchp dca i7core_edac sch_fq_codel ib_iser nfsd auth_rpcgss nfs_acl lockd rdma_cm grace iw_cm sunrpc ib_cm ib_core iscsi_tcp libiscsi_tcp libiscsi scsi_transport_iscsi ip_tables x_tables autofs4 btrfs zstd_compress raid10 raid456 async_raid6_recov

Oct 2 02:04:10 tarkus kernel: [ 1205.979508] async_memcpy async_pq async_xor async_tx xor raid6_pq libcrc32c raid1 raid0 multipath linear ast i2c_algo_bit ttm drm_kms_helper crct10dif_pclmul syscopyarea crc32_pclmul sysfillrect ghash_clmulni_intel sysimgblt pcbc fb_sys_fops hid_generic aesni_intel e1000e ahci aes_x86_64 crypto_simd glue_helper usbhid ptp psmouse cryptd drm libahci hid pps_core

Oct 2 02:04:10 tarkus kernel: [ 1205.983366] CPU: 4 PID: 3690 Comm: nfsd Tainted: P D OE 4.15.0-193-generic #204-Ubuntu

Oct 2 02:04:10 tarkus kernel: [ 1205.984603] Hardware name: ASUS RS500-E6-PS4/Z8NR-D12, BIOS 1302 12/02/2010

Oct 2 02:04:10 tarkus kernel: [ 1205.985971] RIP: 0010:kmem_cache_alloc+0x7a/0x1c0

Oct 2 02:04:10 tarkus kernel: [ 1205.987327] RSP: 0018:ffff9f4e077774b8 EFLAGS: 00010202

Oct 2 02:04:10 tarkus kernel: [ 1205.988930] RAX: 41b95828da868c97 RBX: 0000000000000000 RCX: 0000000000000000

Oct 2 02:04:10 tarkus kernel: [ 1205.989799] RDX: 00000000000b475a RSI: 0000000001404200 RDI: 0000328bc0808190

Oct 2 02:04:10 tarkus kernel: [ 1205.990663] RBP: ffff9f4e077774e8 R08: ffffbf4dff908190 R09: 0000000000046000

Oct 2 02:04:10 tarkus kernel: [ 1205.991504] R10: 0000000000000001 R11: 0000000001700000 R12: 41b95828da868c97

Oct 2 02:04:10 tarkus kernel: [ 1205.992393] R13: 0000000001404200 R14: ffff8cc2311d2280 R15: ffff8cc2311d2280

Oct 2 02:04:10 tarkus kernel: [ 1205.993537] FS: 0000000000000000(0000) GS:ffff8cc23f100000(0000) knlGS:0000000000000000

Oct 2 02:04:10 tarkus kernel: [ 1205.994784] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

Oct 2 02:04:10 tarkus kernel: [ 1205.995836] CR2: 00007f4e592420a0 CR3: 0000000be0a0a003 CR4: 00000000000206e0

Oct 2 02:04:10 tarkus kernel: [ 1205.997007] Call Trace:

Oct 2 02:04:10 tarkus kernel: [ 1205.998200] ? spl_kmem_cache_alloc+0x77/0x7f0 [spl]

Oct 2 02:04:10 tarkus kernel: [ 1205.999550] spl_kmem_cache_alloc+0x77/0x7f0 [spl]

Oct 2 02:04:10 tarkus kernel: [ 1206.000640] ? spl_kmem_cache_alloc+0xbc/0x7f0 [spl]

Oct 2 02:04:10 tarkus kernel: [ 1206.001969] zio_create+0x3d/0x4b0 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.003239] zio_vdev_child_io+0xad/0x100 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.004856] ? vdev_raidz_asize+0x60/0x60 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.006841] vdev_raidz_io_start+0x146/0x2e0 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.008252] ? vdev_raidz_asize+0x60/0x60 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.009615] zio_vdev_io_start+0x12b/0x2e0 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.011028] zio_nowait+0xb1/0x150 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.012434] ? vdev_config_sync+0x240/0x240 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.013869] vdev_mirror_io_start+0x9c/0x1a0 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.015282] ? spa_config_enter+0xba/0x110 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.016542] zio_vdev_io_start+0x28f/0x2e0 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.017865] ? taskq_member+0x18/0x30 [spl]

Oct 2 02:04:10 tarkus kernel: [ 1206.019277] zio_nowait+0xb1/0x150 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.020628] arc_read+0x993/0x1020 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.022029] ? dbuf_rele_and_unlock+0x620/0x620 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.023408] ? _cond_resched+0x19/0x40

Oct 2 02:04:10 tarkus kernel: [ 1206.025060] dbuf_read+0x611/0xb90 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.026433] dmu_buf_hold_array_by_dnode+0x10b/0x480 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.027784] dmu_read_uio_dnode+0x49/0x100 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.029031] ? avl_insert+0xd1/0xe0 [zavl]

Oct 2 02:04:10 tarkus kernel: [ 1206.030359] dmu_read_uio_dbuf+0x49/0x70 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.031703] zfs_read+0x124/0x450 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.033006] ? sa_lookup_locked+0x8b/0xb0 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.034228] ? _cond_resched+0x19/0x40

Oct 2 02:04:10 tarkus kernel: [ 1206.035460] zpl_read_common_iovec+0x97/0xe0 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.036614] zpl_iter_read+0xfc/0x170 [zfs]

Oct 2 02:04:10 tarkus kernel: [ 1206.037705] do_iter_readv_writev+0x154/0x180

Oct 2 02:04:10 tarkus kernel: [ 1206.038775] do_iter_read+0xd3/0x180

Oct 2 02:04:10 tarkus kernel: [ 1206.039801] vfs_iter_read+0x19/0x30

Oct 2 02:04:10 tarkus kernel: [ 1206.040753] nfsd_readv+0x4f/0x80 [nfsd]

Oct 2 02:04:10 tarkus kernel: [ 1206.041728] nfsd4_encode_read+0x1bc/0x4d0 [nfsd]

Oct 2 02:04:10 tarkus kernel: [ 1206.042665] nfsd4_encode_operation+0xa2/0x1c0 [nfsd]

Oct 2 02:04:10 tarkus kernel: [ 1206.043572] nfsd4_proc_compound+0x24e/0x670 [nfsd]

Oct 2 02:04:10 tarkus kernel: [ 1206.044461] nfsd_dispatch+0x103/0x240 [nfsd]

Oct 2 02:04:10 tarkus kernel: [ 1206.045336] svc_process_common+0x361/0x730 [sunrpc]

Oct 2 02:04:10 tarkus kernel: [ 1206.046232] svc_process+0xec/0x1a0 [sunrpc]

Oct 2 02:04:10 tarkus kernel: [ 1206.047096] nfsd+0xe9/0x150 [nfsd]

Oct 2 02:04:10 tarkus kernel: [ 1206.047947] kthread+0x121/0x140

Oct 2 02:04:10 tarkus kernel: [ 1206.048785] ? nfsd_destroy+0x60/0x60 [nfsd]

Oct 2 02:04:10 tarkus kernel: [ 1206.049608] ? kthread_create_worker_on_cpu+0x70/0x70

Oct 2 02:04:10 tarkus kernel: [ 1206.050450] ret_from_fork+0x35/0x40

Oct 2 02:04:10 tarkus kernel: [ 1206.051263] Code: 50 08 65 4c 03 05 9f 10 fc 55 49 83 78 10 00 4d 8b 20 0f 84 09 01 00 00 4d 85 e4 0f 84 00 01 00 00 49 63 47 20 49 8b 3f 4c 01 e0 <48> 8b 18 49 33 9f 40 01 00 00 48 89 c1 48 0f c9 4c 89 e0 48 31

Oct 2 02:04:10 tarkus kernel: [ 1206.053014] RIP: kmem_cache_alloc+0x7a/0x1c0 RSP: ffff9f4e077774b8

Oct 2 02:04:10 tarkus kernel: [ 1206.053907] ---[ end trace 502f98063265b924 ]---

Oct 2 02:07:17 tarkus kernel: [ 0.000000] microcode: microcode updated early to revision 0x1f, date = 2018-05-08