I know it can reject them, but are there other ways they can get lost or dropped in the application layer? Can’t say I’ve ever heard of such things.

Your question sounds very strange. By “application layer”, do you mean e.g. client or server software, or e.g. an HTTP protocol implementation?

If this is what we’re talking about, and by packets you mean UDP datagrams or some kind of custom frames such as in HTTP/2, which is all data that said software can receive and choose to ignore, then yes.

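It’s also possible that you run into socket buffers being full because the server or client software isn’t reading from them in due time for whatever reason. With UDP, any new incoming datagrams are then dropped by the kernel. With TCP, the receiver advertises a shrinking (eventually zero) window, so the sender stalls rather than the data being lost. This is subtle from the software’s perspective, and typically something the kernel handles along with other TCP and socket stuff.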
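For UDP in particular, datagrams that arrive while the socket’s receive buffer is full are discarded by the kernel, so the size of that buffer matters. A minimal sketch for checking it, assuming a plain IPv4 UDP socket (the function name is just for illustration, and the value reported varies by OS and settings):

```python
import socket


def udp_receive_buffer_bytes() -> int:
    """Return the kernel receive-buffer size (bytes) of a fresh UDP socket."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        # SO_RCVBUF is the standard socket option for the receive buffer size.
        return sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)


if __name__ == "__main__":
    print(f"Default UDP receive buffer: {udp_receive_buffer_bytes()} bytes")
```

If the application reads slower than data arrives, this is the amount of slack it has before datagrams start being discarded.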

Drop packets? NO, packets are handled by the kernel.

Not respond to packets? Sure. If you are sending packets to a TCP or UDP port and get no response, it could certainly be due to application performance (e.g., the application can’t keep up with its queue). The packets themselves, however, would have been delivered by the kernel. By the time they get to the application layer, TCP/IP and the kernel are done, and the network side is completed.

The lack of response in that case is not “packet loss”.

OK, is it possible for there to be an issue at the session layer that is intermittent and random rather than consistent?

Knowing the app being used and the exact issue might help us, or is this all theoretical?

Yes, for sure.

I’ll even give you an example:

- An overloaded SQL server: the service either crashes/runs out of memory or is so busy that it can’t service new requests. The data gets delivered over the network, but the server can’t deal with it.

To diagnose this you’re best off breaking out a copy of Wireshark (and/or looking at the “server” memory, disk throughput and CPU utilisation), and observing what is going over the wire. If it’s TCP, you should see ACKs getting sent back and forth over the network.

If it’s TCP and there is any loss, then you should see a retransmit.
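As a rough illustration of what “seeing a retransmit” means in a capture, here is a minimal sketch that counts data segments appearing more than once with the same endpoints, sequence number and length. The `Segment` type and its fields are hypothetical (you’d fill them from whatever your capture export gives you), and this is a simplified heuristic, not how Wireshark itself analyses TCP:

```python
from collections import Counter
from typing import Iterable, NamedTuple


class Segment(NamedTuple):
    src: str     # sender "ip:port" (hypothetical format)
    dst: str     # receiver "ip:port"
    seq: int     # TCP sequence number
    length: int  # payload bytes


def count_retransmits(segments: Iterable[Segment]) -> int:
    """Count repeated data segments (same endpoints, seq and length)."""
    seen = Counter()
    for seg in segments:
        if seg.length == 0:
            continue  # pure ACKs carry no data, so they are not retransmits
        seen[seg] += 1
    # Every sighting after the first one counts as a retransmission.
    return sum(n - 1 for n in seen.values() if n > 1)


if __name__ == "__main__":
    capture = [
        Segment("10.0.0.5:554", "10.0.0.1:40000", 1000, 1460),
        Segment("10.0.0.5:554", "10.0.0.1:40000", 2460, 1460),
        Segment("10.0.0.5:554", "10.0.0.1:40000", 1000, 1460),  # sent again
    ]
    print(count_retransmits(capture))
```

A count of zero on a clean capture, combined with the application still misbehaving, points away from the network.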

If it’s UDP, then this won’t happen as there is no session state (and UDP is usually used when there is no point trying to retransmit lost data as it is out of date, e.g., voice: if it doesn’t get there, there’s no point trying to re-send data from a second ago as it will be out of order and just garble the data and make it worse).

Alternatively (to determine network loss vs. application response), run network diagnostics with ping and the same packet sizes as the application uses. If there is actual network packet loss, this should be apparent with other data as well, unless there is QoS or other rate limiting/capping in place somewhere on the network for your application’s protocol.

edit:

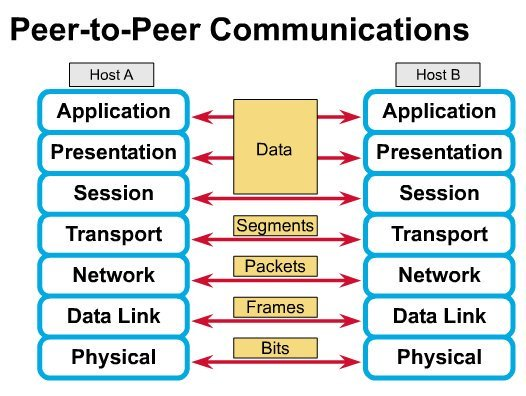

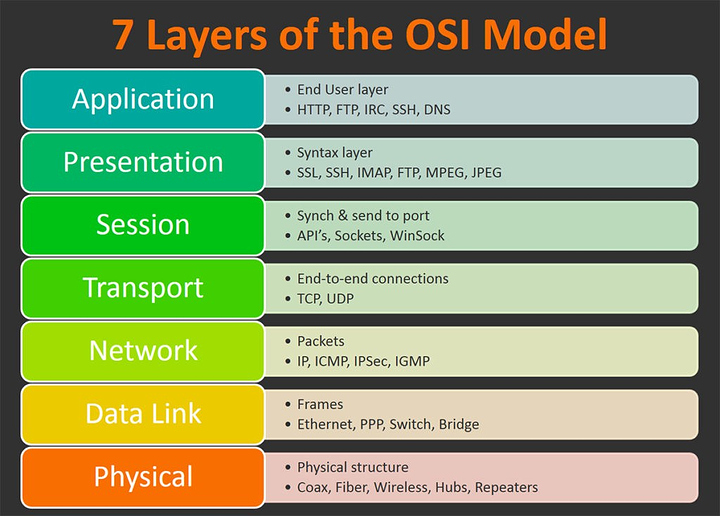

The above is the application layer. The OSI model above is kinda out of date (maybe useful for older protocols, but TCP/IP has its own model) and overly verbose/needlessly complicated for TCP/IP. See the “internet/TCP model”:

Only 4 layers, and in that model you’d be dealing with the application layer (“sessions” are handled by the application).

This was our thought as well. In this case the device, which is an IP camera, isn’t the issue, so Wireshark was set up at various points throughout the network on the way to the server to see if there was any network loss. It checked out good. So the server was looked at and also upgraded, the reason being that the number of cameras is increasing: 60+ IP cameras going to a GeoVision server. Wireshark on the server doesn’t show dropped packets from the network either. Yet GeoVision is showing cameras being disconnected randomly at times despite the packets not going missing.

This all might make sense to me if it was something like, say, a Realtek or Broadcom NIC, but as far as I know, with it being an Intel-based server, it’s an Intel NIC. Plus I know the engineer on this project and he wouldn’t do anything like run a non-Intel NIC. In fact the guy is no slouch. 99% sure anything I could ever think of he’s already looked at or considered.

Even if all 60 cameras were 4K at 30 fps, that still wouldn’t be 1000 Mbps. He thinks it’s application side. I’m not smart enough to argue it or reason out another possibility.
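A quick way to sanity-check the bandwidth side is to multiply it out. This is only a sketch: the 8 Mbps per-camera figure below is an assumption for illustration, not a number from the cameras or GeoVision, so plug in the real stream bitrate from the camera settings:

```python
def aggregate_mbps(cameras: int, mbps_per_camera: float) -> float:
    """Total stream bandwidth in Mbps for a number of identical cameras."""
    return cameras * mbps_per_camera


def link_utilisation(cameras: int, mbps_per_camera: float,
                     link_mbps: float = 1000.0) -> float:
    """Fraction of the link consumed (1.0 means the link is saturated)."""
    return aggregate_mbps(cameras, mbps_per_camera) / link_mbps


if __name__ == "__main__":
    # ASSUMPTION: 8 Mbps per camera is a placeholder, not a measured value.
    cams, per_cam = 60, 8.0
    print(f"{aggregate_mbps(cams, per_cam):.0f} Mbps total, "
          f"{link_utilisation(cams, per_cam):.0%} of a 1000 Mbps link")
```

The higher the real per-camera bitrate, the less headroom is left, so it’s worth checking against the actual configured streams.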

What storage do you have on the box?

60+ cameras at 30 fps = 1800 IOPS (or say, 24 SATA disks in RAID0, one SATA disk being capable of ~75 random 4 KB IOPS) even at only 1 disk operation per frame. And I’ll wager there is significantly more than one disk operation per frame. And that doesn’t count any reads of the data once it has been written.

You may find that it isn’t network, but storage throughput… unless you’re on a fairly decent disk array or flash storage, I’d look seriously at your storage performance.

Throughput is one thing, but IO operations (on the server) are another… you might need to talk to the vendor to determine how many disk operations per camera are required at X resolution by Y frame-rate; and then verify that your disk subsystem can keep up (with some headroom left over for people to connect to it and read/review the footage, backups to happen if required, etc.).

The numbers might shock you. There’s a reason a lot of security footage is black and white and 10 fps.

Drives sound like the issue with that many cameras, not the network.
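The back-of-envelope numbers above, as a small sketch. The one-operation-per-frame and ~75 IOPS-per-disk figures are the rough assumptions from this post, not vendor numbers:

```python
import math


def required_iops(cameras: int, fps: int, ops_per_frame: int = 1) -> int:
    """Disk operations per second if every frame costs ops_per_frame IOs."""
    return cameras * fps * ops_per_frame


def disks_needed(iops: int, iops_per_disk: int = 75) -> int:
    """Spindles needed in a plain stripe (RAID0), rounded up."""
    return math.ceil(iops / iops_per_disk)


if __name__ == "__main__":
    iops = required_iops(cameras=60, fps=30)
    print(iops, "IOPS ->", disks_needed(iops), "disks")
```

Bump `ops_per_frame` to whatever the vendor tells you and the disk count scales with it.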

Yeah, video storage is no joke.

There may be some significant smarts in the capture software to reduce the disk IO required and/or sequence it into large IO bundles, but as per my above post, even assuming some extremely optimal write workload, you’re still looking at a lot of spinning rust (in terms of number of drives, not capacity), or a flash back end to keep up, purely due to the number of operations, no matter how big they are.

Here’s a good primer on how IOPs can be calculated and why they matter, etc.

VM focused, but the same idea applies.

edit:

Ah, I thought that article had more substance. But definitely, google for IOPS and do some reading. Pay special attention to the RAID penalty given you’re storing video. If you’re spending most of your time writing to RAID5 for example, you need 4x as many disks to keep up as if you were doing RAID0… array configuration really does matter.

With large numbers of machines or users writing to the storage concurrently, it often becomes more about getting enough drives to keep up rather than having enough drives for storage capacity reasons, and in this application you may find it more efficient to go with RAID0, or striped mirrors (i.e., RAID10) if redundancy is required, to get the speed with fewer spindles… Or a small number of high capacity SSDs.

Like I said, check with the software/camera vendor for the IOPS required if you can, and then measure/monitor performance on the server to determine whether or not that’s where your bottleneck is. This may necessitate a re-evaluation by the customer as to whether or not they REALLY need 4K at 30 fps for this particular video application, or if, say, 1080p at 30 fps or a lower frame rate will suffice (if the budget is not there for the disks). Because they will certainly pay for it in terms of hardware if required.
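To put rough numbers on the RAID penalty, here is a sketch using the commonly quoted write penalties (1 for RAID0, 2 for RAID10, 4 for RAID5, 6 for RAID6). It assumes a pure write workload and ~75 IOPS per disk, both simplifications, so treat the output as a ballpark only:

```python
import math

# Commonly quoted back-end IOs per front-end write for each RAID level.
WRITE_PENALTY = {"RAID0": 1, "RAID10": 2, "RAID5": 4, "RAID6": 6}


def disks_for_writes(write_iops: int, raid_level: str,
                     iops_per_disk: int = 75) -> int:
    """Disks needed to absorb write_iops at the given RAID level."""
    backend_iops = write_iops * WRITE_PENALTY[raid_level]
    return math.ceil(backend_iops / iops_per_disk)


if __name__ == "__main__":
    for level in WRITE_PENALTY:
        print(level, disks_for_writes(1800, level))
```

Real arrays with write caching or large sequential writes can do better than this, which is why measuring on the actual server still matters.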

Unless this is open source and you’re both talented programmers with enough time to figure this out and make life better for everyone… just buy another server?

Btw, are you transcoding camera video by any chance? What’s your setup?

They did just buy another server, I think twice? Or do you mean offloading onto 2 servers? That was one thought of mine. I’ll have to double check they aren’t already doing so.

What’s weird is at work we use Exacq vision and we have over 70 cameras all on 1 server, in a VM too! No issues.

Yes, half of the cameras on one, and the other half on the other.