Hey Everyone,

I’m hoping I can get some clarification on IOMMU passthrough and host isolation. I’ve searched around Linux boards and YouTube videos, and while most of the content revolves around GPU passthrough, I imagine the process is the same for other PCI devices. Nothing I’ve found answers my specific question.

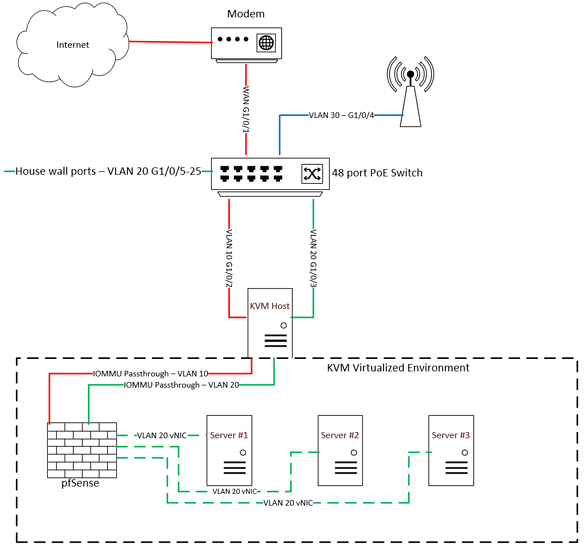

Here is a network diagram to better illustrate what I want to do:

Basically, it goes like this: I’ve got a modem going to a managed switch, which then goes to a KVM host (Debian 10). In that KVM environment I have a pfSense VM and a couple of other VMs (Pi-hole, dev environment, etc.). I want pfSense to be my network edge firewall, with everything else behind it. Nothing should reach either the network or the internet without first going through pfSense. With that in mind, some questions:

- How can I do that when the host OS is physically connected to the network?

- If I use IOMMU to pass through both physical NICs, will the host OS still have access to them?

- If doing the passthrough means that the host OS no longer has access to the NICs, how do I manage the VMs?

- Should I pass through only the WAN-side NIC to pfSense and then use a network bridge, a vSwitch, or something similar on the host OS for the LAN side of pfSense?

- Is there a better way to do this?

I’ve read articles and watched YouTube videos on GPU passthrough, and all of them show the host OS still having access to the GPU, but the Red Hat Virtual Administration Guide states:

> Device assignment allows virtual machines exclusive access to PCI devices for a range of tasks, and allows PCI devices to appear and behave as if they were physically attached to the guest operating system

Which to me sounds like the host OS loses access to that device.

I’ve got the host OS installed with IOMMU and KVM set up, but I haven’t built any of the VMs yet. So if I need to tear the whole thing down and re-architect it, I don’t mind doing so.

You manage your host using its motherboard-based NIC. You can then pass through one or more add-on NICs to your guests.
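In libvirt terms, passing an add-on NIC to a guest is a `<hostdev>` entry in the guest's domain XML. Here is a minimal Python sketch that builds one from a PCI address. The address 0000:03:00.0 is hypothetical (check yours with `lspci -D`), and `managed='yes'` asks libvirt to detach the card from its host driver when the guest starts.

```python
"""Build a libvirt <hostdev> element that passes one PCI NIC to a guest.

Assumption: 0000:03:00.0 is a hypothetical address for the add-on NIC.
"""
import xml.etree.ElementTree as ET


def hostdev_xml(pci_address: str) -> str:
    """Return a <hostdev> snippet for an address like '0000:03:00.0'."""
    domain, bus, slot_func = pci_address.split(":")
    slot, function = slot_func.split(".")
    # managed="yes": libvirt handles detaching/reattaching the host driver.
    hostdev = ET.Element("hostdev", mode="subsystem", type="pci", managed="yes")
    source = ET.SubElement(hostdev, "source")
    ET.SubElement(
        source,
        "address",
        domain=f"0x{domain}",
        bus=f"0x{bus}",
        slot=f"0x{slot}",
        function=f"0x{function}",
    )
    return ET.tostring(hostdev, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical address of the NIC destined for pfSense's WAN side.
    print(hostdev_xml("0000:03:00.0"))
```

The output would go inside the `<devices>` section of the pfSense domain, for example via `virsh edit`.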

The host will briefly have access to the card, only for the purpose of attaching it to the VFIO stub driver. It won’t have access long enough to initialize any sort of network stack on it.
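For the curious, this is roughly what the hand-off to the stub driver looks like when done at runtime through sysfs. It is a minimal sketch, assuming root on the host, the vfio-pci module already loaded, and a hypothetical device at 0000:03:00.0; `sysfs_root` is a parameter only so the logic can be exercised against a scratch directory. Note that a runtime rebind like this does let the host driver claim the card first; having vfio-pci claim it at boot is what keeps the host's network stack off it entirely.

```python
"""Hand one PCI device to vfio-pci via the sysfs driver_override mechanism."""
from pathlib import Path


def bind_to_vfio(pci_address: str, sysfs_root: str = "/sys") -> None:
    dev = Path(sysfs_root, "bus", "pci", "devices", pci_address)
    # 1. Tell the PCI core that only vfio-pci may claim this device.
    (dev / "driver_override").write_text("vfio-pci")
    # 2. Release it from whatever host driver currently holds it, if any.
    unbind = dev / "driver" / "unbind"
    if unbind.exists():
        unbind.write_text(pci_address)
    # 3. Ask the kernel to re-probe; the override routes it to vfio-pci.
    Path(sysfs_root, "bus", "pci", "drivers_probe").write_text(pci_address)
```

Binding by address rather than by vendor:device ID only touches the one card, even if another NIC uses the same chip.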

You could also break out the VLANs as virtual adapters on the host and pass those through to the guests using virtio (not VFIO). This would let you overload a single adapter, such as the one on the motherboard: the management VLAN would talk to the host, and the other VLANs would go to the guests, for example.
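On a Debian 10 host using ifupdown, one way to lay that out is a VLAN sub-interface per VLAN, each wrapped in its own Linux bridge that virtio guests can plug into. This minimal sketch just renders the `/etc/network/interfaces` text; the NIC name eno1 and the VLAN IDs are hypothetical, and it assumes the `vlan` and `bridge-utils` packages are installed so ifupdown understands `eno1.20` style names and the `bridge_*` options.

```python
"""Render ifupdown stanzas: one 802.1Q sub-interface plus one bridge per VLAN."""
from typing import Iterable


def vlan_bridge_stanzas(nic: str, vlan_ids: Iterable[int]) -> str:
    blocks = []
    for vid in vlan_ids:
        sub = f"{nic}.{vid}"  # tagged sub-interface for this VLAN
        br = f"br{vid}"       # bridge the guests' vNICs attach to
        blocks.append(
            f"auto {sub}\n"
            f"iface {sub} inet manual\n"
            f"\n"
            f"auto {br}\n"
            f"iface {br} inet manual\n"
            f"    bridge_ports {sub}\n"
            f"    bridge_stp off\n"
            f"    bridge_fd 0\n"
        )
    return "\n".join(blocks)


if __name__ == "__main__":
    # Hypothetical VLANs; review the output before pasting it anywhere.
    print(vlan_bridge_stanzas("eno1", [10, 20, 30]))
```

Every bridge here is `inet manual`, so the host has no address on it; the management VLAN's bridge would be the one switched to `inet static` or `inet dhcp`.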

So, something like one vNIC for VLAN 20, one for VLAN 30, etc., and then attach those vNICs to the VMs as needed? That could certainly work. Thank you for the suggestion.
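On the guest side, attaching a VM to one of those per-VLAN bridges is an `<interface type='bridge'>` entry with a virtio model in libvirt. A minimal sketch, assuming host bridges named br10 and br20 (hypothetical names):

```python
"""Build libvirt <interface> snippets: one virtio vNIC per host bridge."""
import xml.etree.ElementTree as ET
from typing import List


def virtio_interfaces(bridges: List[str]) -> List[str]:
    snippets = []
    for bridge in bridges:
        iface = ET.Element("interface", type="bridge")
        ET.SubElement(iface, "source", bridge=bridge)  # host-side bridge
        ET.SubElement(iface, "model", type="virtio")   # paravirtual NIC
        snippets.append(ET.tostring(iface, encoding="unicode"))
    return snippets


if __name__ == "__main__":
    # Hypothetical: pfSense joins a WAN-VLAN bridge and a LAN-VLAN bridge.
    for snippet in virtio_interfaces(["br10", "br20"]):
        print(snippet)
```

A VM that should only see the LAN would get just the LAN bridge; which guest joins which VLAN is decided entirely by this list.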

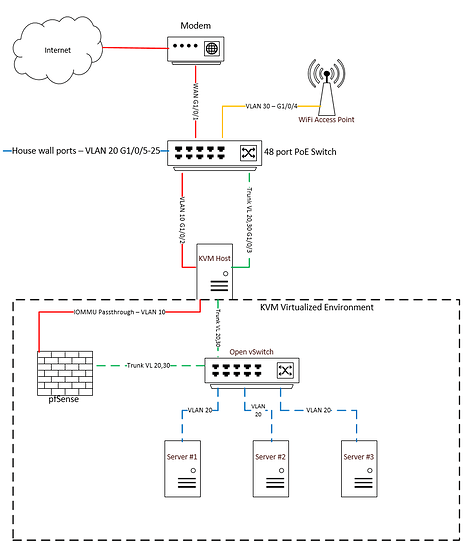

I was also thinking about using Open vSwitch in a configuration something like this:

Do you think this would work just as well? This way if I needed access to the Host, I can just assign it an IP on vlan 20.
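As a rough illustration of that Open vSwitch layout, this sketch prints `ovs-vsctl` commands for one bridge trunking the physical NIC, plus an internal port tagged for VLAN 20 so the host gets an interface only on that VLAN. The names ovsbr0, eno1, and mgmt20 are hypothetical, and the commands are printed rather than executed.

```python
"""Print ovs-vsctl commands: a trunked uplink plus a host port on one VLAN."""
import shlex
from typing import List


def ovs_commands(bridge: str, uplink: str, mgmt_vlan: int) -> List[List[str]]:
    mgmt_port = f"mgmt{mgmt_vlan}"
    return [
        ["ovs-vsctl", "add-br", bridge],
        # No tag on the uplink, so it carries all VLANs (a trunk).
        ["ovs-vsctl", "add-port", bridge, uplink],
        # An internal port is a host network interface on the switch;
        # tag= makes it an access port on the management VLAN only.
        ["ovs-vsctl", "add-port", bridge, mgmt_port, f"tag={mgmt_vlan}",
         "--", "set", "interface", mgmt_port, "type=internal"],
    ]


if __name__ == "__main__":
    for argv in ovs_commands("ovsbr0", "eno1", 20):
        print(shlex.join(argv))
```

The host's IP would then go on mgmt20, and guests would join ovsbr0 through libvirt with `<virtualport type='openvswitch'/>` and a `<vlan><tag id='30'/></vlan>` element (VLAN 30 being just an example).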

I’ve never worked with Open vSwitch, but your plan sounds reasonable; I don’t see why it wouldn’t work. It would take more effort to set up, but maybe that’s not so bad, since learning the technology could be useful.

Depending on your IOMMU groups, VFIO passthrough of NICs might be difficult, but attaching guests to vNICs will always work. I’m not up to speed on the isolation between these different layers. I suspect that if someone compromised your pfSense guest, they could compromise the host through vectors other than the network interface.

The concern isn’t really a compromise of the pfSense guest, but rather a compromise of the host OS, since it is “sitting in front of” pfSense. Without passing the WAN NIC through to pfSense, the host OS will receive a public IP address from the modem and will just be hanging out on the open internet.

Thank you for all of your help. I’m going to try this setup tonight.

Disable DHCP on that interface for the host, or unbind TCP/IP from it altogether.
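A minimal sketch of the "no DHCP" option on a Debian 10 host using ifupdown, assuming the modem-facing NIC is named enp3s0 (hypothetical). It only renders text. IPv6 is handled separately from DHCP: without the sysctl lines, the interface could still pick up an IPv6 address from router advertisements.

```python
"""Render host settings that leave the modem-facing NIC with no IP at all."""
from typing import Dict


def no_ip_config(nic: str) -> Dict[str, str]:
    # ifupdown: "inet manual" means no DHCP client and no static address.
    interfaces = (
        f"auto {nic}\n"
        f"iface {nic} inet manual\n"
    )
    # sysctl: no IPv6 on this NIC, so neither SLAAC nor a link-local address.
    sysctl = (
        f"net.ipv6.conf.{nic}.disable_ipv6 = 1\n"
        f"net.ipv6.conf.{nic}.accept_ra = 0\n"
    )
    return {"interfaces": interfaces, "sysctl": sysctl}


if __name__ == "__main__":
    cfg = no_ip_config("enp3s0")  # hypothetical WAN-facing NIC name
    print(cfg["interfaces"])      # belongs in /etc/network/interfaces
    print(cfg["sysctl"])          # belongs in a file under /etc/sysctl.d/
```

If the WAN NIC is instead passed through with VFIO, none of this is needed, since the host never configures it.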

It turns out that my onboard NIC and my PCI NIC are in the same IOMMU group, which means I would have to pass through both devices to get passthrough working at all.

To solve this, I found out about the linux-acs-kernel patch, which breaks the devices out into separate IOMMU groups. I downloaded a patched kernel, set `pcie_acs_override=downstream,multifunction` in `/etc/default/grub`, applied it with `sudo update-grub`, and rebooted.
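The grub edit amounts to adding parameters to `GRUB_CMDLINE_LINUX_DEFAULT`. This sketch does that on the file's text and skips parameters that are already present. It assumes an Intel CPU (hence `intel_iommu=on`; AMD systems differ) and a kernel that actually carries the ACS override patch, since a stock Debian kernel does not act on `pcie_acs_override`. `update-grub` and a reboot are still needed afterwards.

```python
"""Add kernel parameters to GRUB_CMDLINE_LINUX_DEFAULT without duplicates."""
import re
from typing import List


def add_kernel_params(grub_text: str, params: List[str]) -> str:
    pattern = re.compile(r'^(GRUB_CMDLINE_LINUX_DEFAULT=")([^"]*)(")', re.MULTILINE)

    def extend(match):
        current = match.group(2).split()
        current += [p for p in params if p not in current]
        return match.group(1) + " ".join(current) + match.group(3)

    return pattern.sub(extend, grub_text, count=1)


if __name__ == "__main__":
    sample = 'GRUB_DEFAULT=0\nGRUB_CMDLINE_LINUX_DEFAULT="quiet"\n'
    print(add_kernel_params(sample, [
        "intel_iommu=on",                              # assumption: Intel CPU
        "pcie_acs_override=downstream,multifunction",  # needs the patched kernel
    ]))
```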

Running `find /sys/kernel/iommu_groups/ -type l` after the reboot shows that the two NICs are now in different IOMMU groups. Great.
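The raw `find` output lists one symlink per device. This sketch reads the same directory, groups PCI addresses by IOMMU group, and flags groups with more than one member. Those members generally have to be handed to VFIO together, although bridges and multi-function siblings in a group are not always a problem. `root` is a parameter only so the function can be pointed at a scratch directory.

```python
"""Group PCI addresses by IOMMU group, mirroring the sysfs directory layout."""
from collections import defaultdict
from pathlib import Path
from typing import Dict, List


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> Dict[int, List[str]]:
    groups = defaultdict(list)
    # Layout: <root>/<group number>/devices/<PCI address>
    for link in Path(root).glob("*/devices/*"):
        groups[int(link.parent.parent.name)].append(link.name)
    return {g: sorted(devs) for g, devs in sorted(groups.items())}


def shared_groups(groups: Dict[int, List[str]]) -> Dict[int, List[str]]:
    """Groups with more than one device: candidates for trouble."""
    return {g: devs for g, devs in groups.items() if len(devs) > 1}


if __name__ == "__main__":
    for group, devices in iommu_groups().items():
        print(group, " ".join(devices))
```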

However, when I now try to pass through the onboard NIC by following a guide I found (apparently I can’t post links), `lspci -nnv` shows `Kernel driver in use: r8165` (or whatever it is). Before applying the ACS patch, the exact same process from the guide gave me `Kernel driver in use: vfio-pci`. I cannot for the life of me get this to work with the ACS patch applied, and I’m about ready to scrap the whole project and instead isolate the raw internet traffic with VLANs and virtio in private mode, though I have no idea what that means for the security of the host OS.
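When iterating on this, a quick way to see which driver owns each device after a reboot is to pull the "Kernel driver in use" lines out of `lspci -nnk` (or `-nnv`) output. This minimal sketch assumes the usual lspci layout, where each device starts on an unindented line beginning with its slot and the detail lines are indented.

```python
"""Map each PCI slot in lspci -k/-v output to its bound driver (or None)."""
from typing import Dict, Optional


def drivers_in_use(lspci_text: str) -> Dict[str, Optional[str]]:
    drivers: Dict[str, Optional[str]] = {}
    slot = None
    for line in lspci_text.splitlines():
        if line and not line[0].isspace():
            slot = line.split()[0]  # e.g. "03:00.0"
            drivers[slot] = None    # no driver bound unless a line says so
        elif slot and "Kernel driver in use:" in line:
            drivers[slot] = line.split(":", 1)[1].strip()
    return drivers
```

If the host NIC driver keeps winning, one thing people commonly check is load order; a `softdep <driver> pre: vfio-pci` line in `/etc/modprobe.d/` is one way to make vfio-pci load first. Whether that is the cause here is unverified.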

Are there large security implications for going this route?