I think this is in the correct category.

Running:

Windows 8.1 Pro w/ Media Center

Intel RST 15.9.0.1015 (AFAIK latest with support for Win 8)

Hardware:

ASUS Z97 Deluxe WiFi/NFC

i7 4790k at stock, MCE on.

Noctua NH-U9S w/ 2x NF-A9

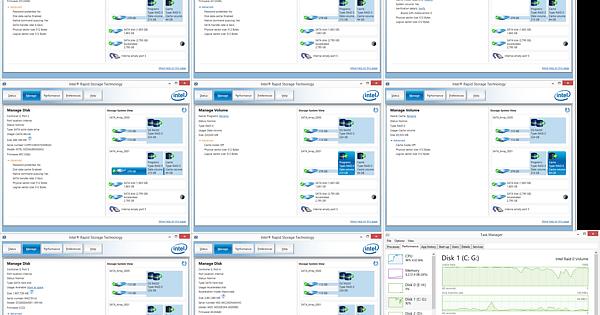

Sapphire RX 580 Nitro+

Storage: see image.

EVGA 80+ White 800W

Custom SFF case (~ 20 Litres), 2x NF-A8 intake, PSU exhaust.

Description:

The Task Manager screenshot above was taken not long after boot, just after I’d restored a Chrome session with a bunch of tabs. Even so, latencies over 1 s are common. Between POST and the Windows boot menu (where I choose between 8.1 and 10), the spinny circles are on screen for ~2 minutes. This is considerably slower than when I was running just a single 250 GB drive; see the migration process paragraph below.

I should add that when little IO activity is taking place, I have seen latencies as low as 0.1 ms. It’s only under significant IO load that latencies climb, which is exactly where RAID 0 is supposed to be about twice as good as a single drive.

My thoughts and potential diagnoses, ordered by how likely I think each is to fix the issue:

(1) The stripe size is sub-optimal (though I followed what appeared to be the recommended configuration from Intel)

(2) I am running in an SFF case with not brilliant airflow, so the PCH could be overheating and throttling

(3) A reinstall would fix this, because of the way I migrated my install onto the RAID (see next paragraph)
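To make diagnosis (1) a bit more concrete, here is a minimal Python sketch of how a 2-disk RAID 0 might split one logical IO across its member drives. The 128 KiB stripe size and the function name `split_io` are assumptions for illustration only; the actual stripe size of this array is not stated above, and this is not how Intel RST is implemented internally.

```python
def split_io(offset, length, stripe_size, n_disks=2):
    """Split one logical IO into per-disk chunks for a simple RAID 0 layout.

    Returns a list of (disk, offset_on_disk, chunk_length) tuples.
    All values are in bytes. Hypothetical helper, for illustration only.
    """
    chunks = []
    end = offset + length
    while offset < end:
        stripe_index = offset // stripe_size   # which stripe the byte falls in
        within = offset % stripe_size          # position inside that stripe
        chunk_len = min(stripe_size - within, end - offset)
        disk = stripe_index % n_disks          # stripes alternate between disks
        disk_offset = (stripe_index // n_disks) * stripe_size + within
        chunks.append((disk, disk_offset, chunk_len))
        offset += chunk_len
    return chunks


STRIPE = 128 * 1024  # assumed stripe size, not taken from the post

# A small 4 KiB IO inside one stripe touches a single disk.
small = split_io(0, 4096, STRIPE)

# The same 4 KiB IO straddling a stripe boundary touches both disks.
straddling = split_io(STRIPE - 2048, 4096, STRIPE)
```

Under these assumptions, small IOs only benefit from striping when many of them are in flight at once, and any IO that straddles a stripe boundary costs a request on each drive.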

Migration process:

I RAID’d the two SanDisks inside Windows 8.1, which was running on my single Samsung 850 Evo 250 GB. (I did a bunch of upgrades across multiple machines and ended up with 2x 120 GB drives that I figured I’d RAID 0 in place.) I then booted a live Ubuntu Linux, installed mdadm, and used gparted to clone all partitions across, though I had to move the start of my Linux home partition forward because I was migrating from a 250 GB to a 240 GB volume.
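Since partitions were cloned and one was moved, one thing that might be worth checking is whether each partition still starts on an aligned boundary. The sketch below is only a rough check, assuming 512-byte logical sectors and a 1 MiB alignment boundary; the start sectors in the example are hypothetical and are not taken from this system.

```python
SECTOR_SIZE = 512            # assumed logical sector size in bytes
BOUNDARY = 1024 * 1024       # assumed alignment boundary (1 MiB)


def misalignment(start_sector, sector_size=SECTOR_SIZE, boundary=BOUNDARY):
    """Return how many bytes a partition start is past the last boundary."""
    return (start_sector * sector_size) % boundary


def is_aligned(start_sector, sector_size=SECTOR_SIZE, boundary=BOUNDARY):
    """True if the partition's first byte sits exactly on a boundary."""
    return misalignment(start_sector, sector_size, boundary) == 0


# Hypothetical start sectors, e.g. as a partitioning tool would list them.
examples = {"aligned example": 2048, "old-style example": 63}
report = {name: is_aligned(sector) for name, sector in examples.items()}
```

A partition that fails this check is not proof of the cause of the slowdown, but a misaligned start means more IOs can straddle SSD page and stripe boundaries.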

The above text is duplicated in the imgur post.

I forgot to explicitly ask a question: what could be causing such slow response times, and what could be done to fix it?