Looks like BFQ is multi-actuator aware as of the 6.3 kernel.
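If you want to try BFQ on these drives, it helps to first confirm which scheduler a disk is actually using. Here is a minimal sketch. It assumes the standard sysfs file `/sys/block/<disk>/queue/scheduler`, where the active scheduler is the bracketed entry. The optional root argument exists only so the function can be tested against a fake tree. To switch, write the name into the same file as root (for example `echo bfq > /sys/block/sda/queue/scheduler`, with `sda` as a placeholder). This only works if the kernel has BFQ available.

```sh
# Print the active I/O scheduler for a disk, i.e. the bracketed entry in
# /sys/block/<disk>/queue/scheduler (e.g. "mq-deadline kyber [bfq] none").
# The optional second argument overrides /sys (useful for testing).
active_scheduler() {
    disk=$1
    root=${2:-/sys}
    # Keep only the text between "[" and "]"; print nothing if no match.
    sed -n 's/.*\[\([^]]*\)].*/\1/p' "$root/block/$disk/queue/scheduler"
}
```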

The document doesn’t suggest using LUN aggregation with ZFS… they give a few examples of different pool topologies, all using raw LUNs from what I can recall.

The new-generation dual-actuator drives have a lot of quality-of-life improvements, fwiw.

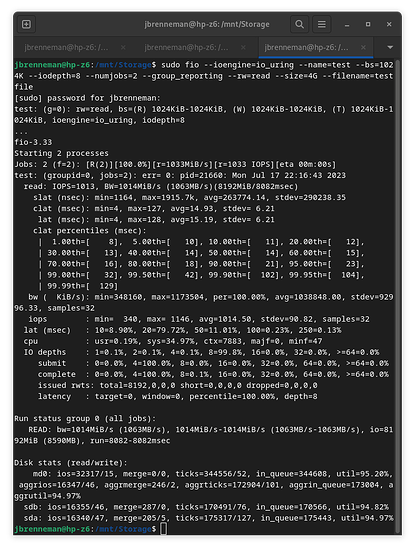

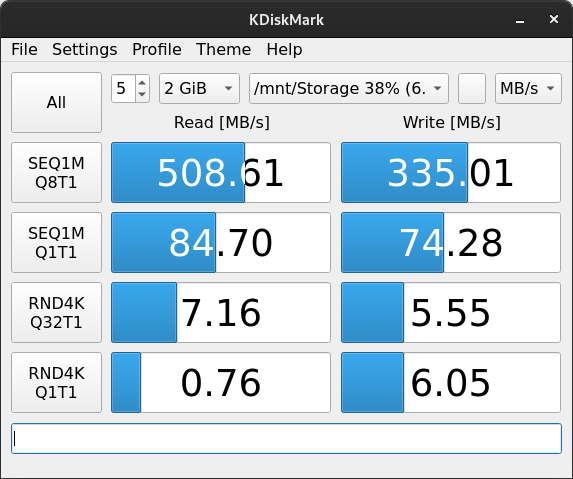

Yeah, I was just referring to the beginning of his comment here: How to ZFS on Dual-Actuator Mach2 drives from Seagate without Worry - #14 by John-S. For me, simplicity beats the potentially "optimal" performance of splitting it all up, and I see little to no performance degradation in fio tests.

For the SAS variant, what kind of advantages? I'm definitely excited about them being bigger, at least… once they're more affordable.

Generally speaking you never want anything sitting in-between ZFS and your disks. You don’t want any middlemen that can “lie” to ZFS about where the blocks are and what state they’re in. That’s why there’s such an incessant push for known-good SAS HBAs presenting raw LUNs to the operating system in IT mode, or (worst case) single-disk “JBODs” in IR mode. No RAID, no volume managers, no off-brand SATA HBAs, no nothing.

It might perform fine—and you might never experience data corruption directly attributable to this configuration—but in this case you have done extra work and introduced additional variables and uncertainties into your storage design for (IMO) no good reason. You say it’s for “simplicity,” which I suppose is subjective, but in my experience adding extra moving parts tends to make things less simple.

I wanted to create a zpool in TrueNAS SCALE 22.12.3.1 (virtualized on XCP-ng 8.3 beta) using the SAS versions of the Mach.2. The GUI wouldn't recognize half of each drive, showing 4 drives with 6.37 TiB available instead of 8. Some light research indicates that TrueNAS can't distinguish between two drives sharing a serial number, even if they have different LUNs.

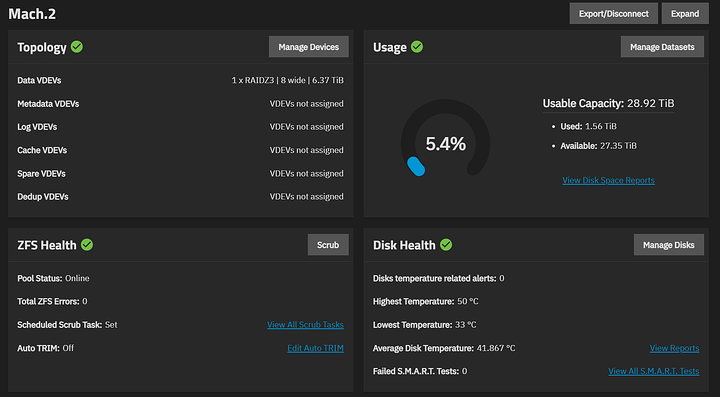

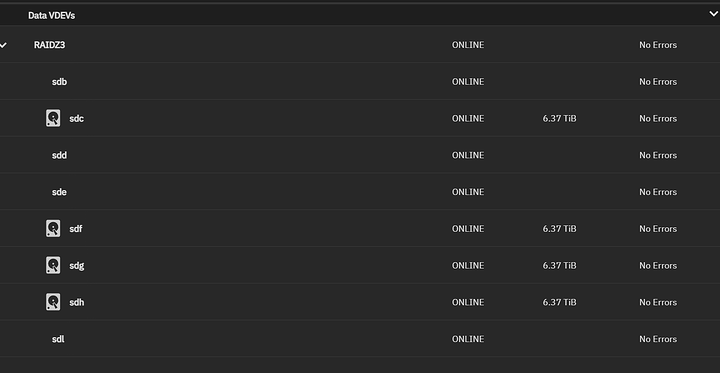

I went into the Linux CLI and ran lsblk, which detected all 8 drives, and then used zpool create with sdb etc… to create a RAIDZ3 pool. I imported that pool via the TrueNAS GUI and now have an 8-drive RAIDZ3 pool with about 29 TiB of available storage, consistent with what you might expect. I transferred a 10 GB ISO from TrueNAS to an Alma Linux 9.2 VM at a rate of 475 MiB/s.

When looking at the VDEV from the Storage menu, I see 4 drives listed with their available space, and the drives that the GUI wouldn't recognize show up as ONLINE but without capacity.

I’m a novice at all of this and wanted to make sure what I did is not unacceptably risky for a reason I might not understand.

My hardware is: 7900x, 64GB of DDR5 SuperMicro RAM, an Imagr 9300-8i HBA in IT mode flashed to 16.x firmware.

I probably wouldn't do a single raidz3 vdev with all eight LUNs. You're losing three LUNs' worth of space to parity, but you can only withstand a single drive failure (because if you lose a second drive, that's four LUNs total, which is one too many). And pool IOPS will be bounded by the peak IOPS of your slowest actuator.

I think two raidz1 or raidz2 vdevs (or mirrored pairs across the board) makes more sense:

raidz1 vdevs: double IOPS, lose two LUNs worth of space (vs three), can withstand a single drive failure.

raidz2 vdevs: double IOPS, lose four LUNs worth of space (vs three), can withstand two drive failures.

mirrored pairs: quadruple IOPS, lose four LUNs worth of space (vs three), can withstand at least one drive failure, possibly two if you get lucky.
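Here is a minimal sketch of the two-vdev raidz layout described above. It assumes the SAS drives expose each actuator as its own device node. The function only prints the `zpool create` command and does not run it. It builds two raidz vdevs that each take exactly one LUN from every physical drive, so losing a whole drive costs each vdev only one member. The device paths in the test are placeholders: `/dev/sda,/dev/sdb` is assumed to be the LUN pair of one drive, and so on.

```sh
# Print (but do not run) a zpool create command with two raidz vdevs.
# Each argument is "lun0,lun1" for ONE physical drive; vdev 1 gets every
# drive's first LUN and vdev 2 gets every drive's second LUN.
raidz_split_cmd() {
    level=$1   # raidz1, raidz2 or raidz3
    pool=$2
    shift 2
    first=""
    second=""
    for pair in "$@"; do
        first="$first ${pair%%,*}"    # text before the comma
        second="$second ${pair#*,}"   # text after the comma
    done
    echo "zpool create $pool $level$first $level$second"
}
```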

I haven’t had my morning coffee yet but I think that’s right.

Thanks for the feedback! I agree on the raid setup. I was doing it in the wee hours of the night and realized afterwards it didn’t make sense for the reasons you stated.

I think I was really asking (apparently not well) whether it's okay to build pools from the Linux CLI with these drives. I'm more concerned that TrueNAS features like scrubs/snapshots/SMART won't work correctly.

I think the drives are niche/rare enough, that maybe we don’t yet know?

But, try it and feed back!

I just picked up a couple from

@ $101/unit (try to make an offer).

Just bought two 16TB Exos drives

…but these are SATA so I’ll have to jump on a pair!

Hey all, so I did in fact buy a pair.

While both drives "function" perfectly, the maximum read/write speed I've been able to observe in benchmarking or otherwise is just over 270 MB/s. That is to say, the same speed as a single-actuator drive.

My belief was that I should be seeing about double the data rate for sequential reads; i.e. from Seagate’s user manual: “MACH.2 dual actuator technology enables up to 2X the sequential data rate and up to 1.9X the random performance of a single actuator drive.”

I have the drives hooked to the standard Intel C622 SATA controller, although I don’t know if this makes a difference. The drives show as having negotiated the full 6Gbps connection rate.

Any ideas?

So for SAS, each drive shows up as two devices (one LUN per actuator), as explained above. For SATA, it can only be one device, so you have to partition it to use each half of the disk at the same time.

What you will want to do is create two partitions, one on each half of the disk. Each half of the disk can run independently.

Thanks for the explanation. Is there an exact methodology for doing this, or should I just split the disk into two equal-sized partitions in gparted?

What shows up in /sys/block/<device>/queue/independent_access_ranges?

See the following for where that comes from

Q: How do you identify these LBA ranges in Linux?

A: In Linux Kernel 5.19, the independent ranges can be found in /sys/block/<device>/queue/independent_access_ranges. There is one subdirectory per actuator, starting with the primary at "0". The "nr_sectors" field reports how many sectors are managed by this actuator, and "sector" is the offset of the first sector. Sectors then run contiguously to the start of the next actuator's range.
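The lookup described in the FAQ can be scripted. Here is a minimal sketch. It assumes the sysfs layout above: one numbered subdirectory per actuator, each with `sector` and `nr_sectors` files. Note that the directory the kernel creates is named `independent_access_ranges` (plural). Like other block-layer sysfs values, the numbers are in 512-byte sectors. The optional root argument is only there so the function can be pointed at a fake tree for testing.

```sh
# List each independent access range (one per actuator) of a disk as
# "<index> <first_sector> <last_sector>", read from sysfs.
print_access_ranges() {
    disk=$1
    root=${2:-/sys}
    dir="$root/block/$disk/queue/independent_access_ranges"
    if [ ! -d "$dir" ]; then
        echo "$disk: no independent_access_ranges directory" >&2
        return 1
    fi
    for r in "$dir"/*/; do
        [ -d "$r" ] || continue
        start=$(cat "$r/sector")
        count=$(cat "$r/nr_sectors")
        # Last sector of the range is inclusive: start + count - 1.
        echo "$(basename "$r") $start $((start + count - 1))"
    done
}
```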

See also

# Linux: Partitioning Each Actuator of an Exos 2X SATA

```sh
parted /dev/sda mklabel gpt
parted --align optimal /dev/sda mkpart primary 0% 50%
# replace "optimal" with option "min" to save space
parted --align optimal /dev/sda mkpart primary 50% 100%
```
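Percent-based boundaries like `0% 50%` leave it to parted's rounding and alignment to decide where the split lands, so it may not fall exactly on the actuator boundary. Here is a minimal sketch that instead places the boundary at the first sector of actuator range 1, as reported in sysfs. It only prints the commands. It rests on three assumptions. First, there are exactly two ranges. Second, the drive uses 512-byte logical sectors, so parted's `s` unit matches the kernel's sector numbers; this would not hold on a 4Kn drive. Third, the first partition starts at 2048s (1 MiB).

```sh
# Print (but do not run) parted commands that split a SATA dual-actuator
# disk exactly at the start of actuator range 1.
# ASSUMES: two ranges, 512-byte logical sectors, partition 1 starting at 2048s.
split_at_actuator_cmds() {
    disk=$1
    root=${2:-/sys}
    ranges="$root/block/$disk/queue/independent_access_ranges"
    boundary=$(cat "$ranges/1/sector") || return 1   # first sector of actuator 1
    if [ $((boundary % 8)) -ne 0 ]; then
        echo "warning: boundary $boundary is not 4 KiB aligned" >&2
    fi
    echo "parted -s /dev/$disk mklabel gpt"
    echo "parted -s /dev/$disk mkpart primary 2048s $((boundary - 1))s"
    echo "parted -s /dev/$disk mkpart primary ${boundary}s 100%"
}
```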

# Linux: Create a LVM Striped Partition Using Both Actuators of an Exos 2X SATA

```sh
parted /dev/sda mklabel gpt
parted --align min /dev/sda mkpart primary 0% 50%
parted --align min /dev/sda mkpart primary 50% 100%
# create two PVs
pvcreate /dev/sda1 /dev/sda2
# create a VG named demo_test
vgcreate demo_test /dev/sda1 /dev/sda2
# create a 2-stripe LV using all of the VG's space, located at /dev/demo_test/lv_demo
lvcreate -n lv_demo --stripes 2 -l 100%FREE demo_test
```

Thanks for the link to their FAQ; I hadn't seen this on the page for these particular X18s.

Here’s a single threaded benchmark of the RAID 10, created with…

```sh
sudo mdadm --create /dev/md0 --run --level=10 --layout=n2 --chunk=128 --assume-clean --raid-devices=4 /dev/sda1 /dev/sda2 /dev/sdb1 /dev/sdb2
```

And here’s a 2-threaded benchmark. Simultaneously reading two threads from the RAID 10 allows the system to read from all four actuators, demonstrating an impressive ~1.1 GB/s for reads!
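The device order in the `mdadm` command above is worth checking. The md(4) man page says that with the near layout (`--layout=n2`), the two copies of a chunk are likely placed at the same offset on two adjacent devices in the list. Listing `/dev/sda1 /dev/sda2` next to each other therefore appears to put both copies of those chunks on the same physical drive. That makes no difference for a benchmark, but the array would not survive losing that drive. Here is a minimal sketch that prints the same command with an interleaved order, so each mirror pair spans both drives. It assumes two disks, each split into one partition per actuator and named `<disk>1` and `<disk>2`.

```sh
# Print (but do not run) the RAID 10 (near-2) command with the device list
# alternating between the two disks, so each adjacent pair (one mirror)
# spans both physical drives. Partition naming <disk>1/<disk>2 is assumed.
raid10_interleaved_cmd() {
    a=$1
    b=$2
    echo "mdadm --create /dev/md0 --run --level=10 --layout=n2 --chunk=128 --assume-clean --raid-devices=4 ${a}1 ${b}1 ${a}2 ${b}2"
}
```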

I just bought very similar drives (2X18). So I'm guessing the optimal way to set up a pair in ZFS is to create 2 mirrors and then merge the 2 vdevs together:

Union of m1 and m2, where

m1: drive A partition 1 mirrored with drive B partition 1

m2: A2 mirrored with B2

Can I accomplish something similar in Linux with Btrfs? I know how to create M1 and M2. How do I union them at a block level for performance?

EDIT: figured it out. Use LVM to RAID 0 A1 + A2 and B1 + B2, then Btrfs RAID 1 across the two resulting LVM volumes… or go ZFS on these 2 drives. I don't need to expand for a while. Good info above, thanks.
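For the ZFS option, the "union" of m1 and m2 is simply a pool with two mirror vdevs, because ZFS spreads data across its top-level vdevs on its own. Here is a minimal sketch that prints (but does not run) that command. It assumes each drive has been split into two per-actuator partitions named `<disk>1` and `<disk>2`. The pool name `tank` in the test is a placeholder.

```sh
# Print (but do not run) a zpool create command with two mirror vdevs:
# m1 = first partition of each drive, m2 = second partition of each drive.
# ZFS stripes data across both mirrors (dynamic striping).
zfs_mirror_pair_cmd() {
    pool=$1
    a=$2
    b=$3
    echo "zpool create $pool mirror ${a}1 ${b}1 mirror ${a}2 ${b}2"
}
```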

Howdy folks, now that I've ordered for my own needs, I wanted to share that Newegg has refurbished SATA Exos 2X18TB drives available for $219 each.


The SAS version is a bit easier to configure, as each actuator is presented as a unique LUN. The SATA version presents a linear block address range where the "split" between actuators is precisely at MAX_LBA/2. Even though I have to create two properly aligned GPT partitions while accounting for GPT partition-table overhead, I find the SATA versions far more versatile and performant, and they negate the need for complicated/expensive 12G SAS topologies and HBAs.
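Since the SATA split sits at MAX_LBA/2, an existing partition table can be sanity-checked against it. Here is a minimal sketch that reads the standard sysfs `size` and `start` attributes (512-byte sectors). It reports which actuator half each partition falls in, or flags one that straddles the boundary. It assumes the second actuator's first sector is exactly half the disk's total sector count, which is one reading of the MAX_LBA/2 description above. The optional root argument is only for testing against a fake tree.

```sh
# Report which actuator half a partition lies in. ASSUMPTION: the boundary
# is total_sectors / 2. Values come from sysfs, in 512-byte sectors.
# Returns 2 if the partition straddles the boundary.
check_partition_half() {
    disk=$1
    part=$2
    root=${3:-/sys}
    total=$(cat "$root/block/$disk/size") || return 1
    start=$(cat "$root/block/$disk/$part/start") || return 1
    size=$(cat "$root/block/$disk/$part/size") || return 1
    boundary=$((total / 2))
    end=$((start + size - 1))
    if [ "$end" -lt "$boundary" ]; then
        echo "$part: actuator 0"
    elif [ "$start" -ge "$boundary" ]; then
        echo "$part: actuator 1"
    else
        echo "$part: STRADDLES boundary at sector $boundary"
        return 2
    fi
}
```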

The precise “magic” to get the provisioning right is here:

That script also happens to be a relatively complete TUI for prepping SATA DA drives for ZFS.

In other news, I have a 45Drives chassis on the way, and I hope to add some modules to Houston/Cockpit to provision SATA dual-actuator drives.