Tune everything back to defaults and take a gander at zpool iostat -vl 10 while you’re doing an iSCSI transfer and then an SMB transfer, to get a set of baselines for comparison.
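
One way to keep those two baselines around for later comparison is to save the raw output per protocol. This is a minimal sketch, not a tested tool: the helper names, the `baselines` directory and the sample count of 6 are assumptions for illustration; only the `zpool iostat -vl 10` invocation comes from the post above.

```python
import subprocess
from pathlib import Path


def iostat_command(interval_s: int = 10, samples: int = 6) -> list[str]:
    """Build the argument list for `zpool iostat -vl <interval> <count>`."""
    return ["zpool", "iostat", "-vl", str(interval_s), str(samples)]


def capture_baseline(label: str, out_dir: Path,
                     interval_s: int = 10, samples: int = 6) -> Path:
    """Run zpool iostat while a transfer is in flight and save the raw output."""
    out_dir.mkdir(parents=True, exist_ok=True)
    out_file = out_dir / f"iostat-{label}.txt"
    result = subprocess.run(iostat_command(interval_s, samples),
                            capture_output=True, text=True, check=True)
    out_file.write_text(result.stdout)
    return out_file


# Usage (start the transfer first, then run one capture per protocol):
#   capture_baseline("iscsi", Path("baselines"))
#   capture_baseline("smb", Path("baselines"))
```

With the defaults each capture runs for about a minute, so the transfer needs to be long enough to cover it.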

If I were you I’d drop the cache discs and just run a RAID setup.

You’re wayyy overgearing the RAID setup, since you’re already running it over a network connection.

8 drives could saturate a 10Gbit connection without even breaking a sweat, e.g. 8x160MB/s, so there’s no need for the 4GB/s+ cache read speeds on top.
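
The arithmetic behind that claim, as a quick sketch. It assumes the per-drive figure means 160 megabytes per second, uses decimal units, ignores protocol overhead, and treats all 8 drives as contributing in parallel (a parity layout has fewer data drives). The function names are made up for the example.

```python
def link_capacity_mb_s(gbit_per_s: float) -> float:
    """Raw line rate in MB/s (decimal units, protocol overhead ignored)."""
    return gbit_per_s * 1000 / 8


def aggregate_mb_s(drives: int, per_drive_mb_s: float) -> float:
    """Best-case combined throughput if every drive streams in parallel."""
    return drives * per_drive_mb_s


link = link_capacity_mb_s(10)    # 10 Gbit/s link
disks = aggregate_mb_s(8, 160)   # 8 drives at an assumed 160 MB/s each
print(f"10GbE line rate: {link:.0f} MB/s, 8 drives: {disks:.0f} MB/s")
```

On those assumptions the drives come out marginally ahead of the link (1280 vs 1250 MB/s), which is the point being made.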

I’m guessing this is because either the client simply cannot cache the datastream, or the server is doing redundant reads/writes because of the cache.

Your current setup has 1Gb cache, meaning once that cache is used up, it has to write old cache to disc, delete old cache from the cache discs, receive new data, rinse and repeat.

On top of that, add ~1Gb/s of data bandwidth (over the network), and your cache disc solution can only keep up for so long; from there on it only creates overhead.
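
To put a rough number on "can only keep up for so long", here is a toy model of a buffer that fills faster than it drains. The figures are hypothetical and only illustrate the shape of the problem; they are not measurements from this system.

```python
def seconds_until_full(buffer_gb: float, ingest_gb_s: float,
                       drain_gb_s: float) -> float:
    """Time until a write buffer fills when data arrives faster than it drains."""
    net = ingest_gb_s - drain_gb_s
    if net <= 0:
        return float("inf")  # the backing discs keep up, so the buffer never fills
    return buffer_gb / net


# Hypothetical numbers: 1 GB buffer, ~1 GB/s arriving, pool absorbing 0.2 GB/s.
t = seconds_until_full(1.0, 1.0, 0.2)
print(f"buffer full after {t:.2f} s; sustained rate afterwards: 0.2 GB/s")
```

Once the buffer is full, the transfer can only run as fast as the drain rate, however fast the buffer device itself is.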

Also, is your client running Windows? MS has a misleading way of pumping up performance numbers: they cache a lot of the stream in memory, giving you a LARGE bump to begin with, but it levels out for larger files.

I’ve seen file transfers start at 600Mb/s and level out at 50-100Mb/s before done.

Thanks for the reply. If you’d like to take a look, here: https://www.reddit.com/r/zfs/comments/bkv5rd/improving_sequential_write_performance_on_a/

Those two screenshots in my post are actually using the default settings.

It’s also a cleaner post detailing my problem and what I’d like to achieve.

To recap, I’m just looking for a way to increase the usage of RAM and delay the commits to pool. The server is power protected so I’m not too worried about data loss from outages.

I just want the file transfer to be seen as “completed” more quickly on the client side, even if it means caching all that data in RAM and letting ZFS do its commit more slowly at a later time.

What is your Arc Max set to?

With 4x 970s… I’d use two for log and two for cache.

I have read this thread. The images on reddit aren’t loading, but I suspect they’re the same as the ones posted here. I haven’t seen any measurements indicating a ZFS write performance issue. It’s time to start observing utilization, saturation, and errors so we don’t waste any time working off false assumptions. This is how you tune a system’s performance, not by guessing what the problem is and tweaking random knobs until it looks fast enough.

no one’s seemed to mention this yet so:

make sure deduplication (dedup) is off

Any ideas where I could start?

Even though “tweaking random knobs” is a pretty solid description of what I’ve been doing for the last few hours, it seems that the problem is with ZVOLs and iSCSI.

I’ve gotten to the point where long writes over Samba can sustain 900MB/s, but there are multiple sources on the net which address the problem of ZVOLs and iSCSI having poor write performance. They all describe a similar issue where the transfer starts out great, but drops down to sub-gigabit speeds, where it remains for the duration of the transfer.
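
The "starts great, then drops" pattern is easier to see when throughput is logged per window instead of as one average. A rough sketch, with the function name, chunk size and window size all made up for illustration: it writes random data to an ordinary file and forces it to disk after each window, so the page cache does not flatter the numbers. Point it at a scratch file only (on the dataset, or on a filesystem that lives on the zvol), never at a device that holds data. The same random chunk is reused for every write, so the result would be skewed if dedup were enabled.

```python
import os
import time


def timed_sequential_write(path: str, total_mb: int,
                           chunk_mb: int = 8, window_mb: int = 64) -> list[float]:
    """Write total_mb of random data to path; return MB/s per window_mb written."""
    chunk = os.urandom(chunk_mb * 1024 * 1024)  # incompressible payload
    rates = []
    written_in_window = 0
    window_start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(total_mb // chunk_mb):
            f.write(chunk)
            written_in_window += chunk_mb
            if written_in_window >= window_mb:
                f.flush()
                os.fsync(f.fileno())  # push this window to stable storage
                elapsed = time.perf_counter() - window_start
                rates.append(written_in_window / elapsed)
                written_in_window = 0
                window_start = time.perf_counter()
    return rates


# Usage (hypothetical path on a test dataset):
#   for i, r in enumerate(timed_sequential_write("/mnt/tank/test/seq.bin", 8192)):
#       print(f"window {i}: {r:.0f} MB/s")
```

Running it locally on the server and then from the client gives a first hint at whether the drop comes from the pool or from the network path.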

Are zvols slow locally?

How do you export the zvols?

Targetcli?

Wendell said he was ‘SATURATING’ 10GbE with SPINNING drives using disk shelves. AFAIK, he never bothered to create a thread explaining how it was that he accomplished that.

Is the IOM6 + DS*** just … FASTER than a computer which isn’t purpose built as a NAS / SAN setup?

Or was there some other wizardry that caused this level of performance?

Was it a matter of using Netapp DS + IOM6

Was it a matter of using 20 HDs …

Was he striping the shelves?

It seems like people can get ~400 MB/s if they use SSD (!) drives.

Thanks

1GB/s isn’t terribly difficult to achieve.

assuming 120MB/s sequential read on rust (I see approx 150 on my WD Reds), you can pretty much accomplish this with 8 disks.

For SATA SSDs that’s true. For NVMe, you can get much higher.

I’d assume either mirrors or several raidz2 volumes “striped” together.

Looking through this thread, I think the issue is that you’re expecting sustained 10GbE out of a single raidz3 volume. You can throw ram, l2arc and slog at it all you want, but the underlying pool is not fast. If you need reliable, sustained 10GbE saturation, you should reconfigure your pool as mirrors.

Also, did you ever mention what OS you’re using? If you’re on Linux and ZoL version is <0.8, I believe you won’t have TRIM on your SSDs.

350MB/s would be reasonable.

What’d be funny is if there’re people who’ve never even DEALT with trying to figure out why they can barely crack 200MB/s with 8 drives.

Because I’ve only ever seen this like, 10-20x … and only seen a device perform faster a couple of times (it was a RAID card, surprisingly).

I hope it’s possible. But I don’t understand why (as of yet) it never has been for any device I’ve seen do NETWORK … RAID. Local device? Yes. NAS … just never see it.

No no, I’m happy to add an L2ARC/SLOG … but before adding ‘tunables’ I’d like to get the base working as well as it’s going to work. And, I’d SERIOUSLY like to figure out what the hell the problem is once and for all.

This is NOT cheap / crappy equipment. The drives are GOOD … HGSTs, minimal usage. 10TB (which means quite dense per rotation).

LSI controller, etc. Xeon (yes, it could be higher clock speed but that CANNOT be the bottleneck … yet! lol. No modern CPU is ‘taxed’ when dealing with a whole 200MB/s).

Yes, I understand (and agree) that mirrored would improve R and mixed R/W … and striped will also improve writes. I get that.

This cannot yet be the bottleneck. I barely get the speed of ONE drive. I don’t mean sustained yet … I mean at all.

Dell PowerEdge T320

Xeon E5-2403 v2 Quad 1.80GHz

48GB 1333MHz DDR3 ECC

LSI SAS 9205-8i

8x 7.2K IBM/HGST SAS

10TB x 8 in RAIDZ-2 = approx 50TB

Configuration: RAIDZ2

Network - SFP+ (10GbE)
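
A quick sanity check on the "10TB x 8 in RAIDZ-2 = approx 50TB" figure in the spec above. This only subtracts the two parity drives and converts decimal TB to binary TiB; it ignores ZFS padding, metadata and reserved space, so it is an upper bound rather than what the pool will actually report. The function names are made up for the example.

```python
def raidz_usable_tb(drives: int, parity: int, drive_tb: float) -> float:
    """Data capacity in decimal TB: parity drives removed, no other overhead."""
    return (drives - parity) * drive_tb


def tb_to_tib(tb: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return tb * 1e12 / 2**40


usable_tb = raidz_usable_tb(8, 2, 10)
usable_tib = tb_to_tib(usable_tb)
print(f"{usable_tb:.0f} TB = {usable_tib:.1f} TiB before ZFS overhead")
```

That lands in the mid-50s of TiB before overhead, so roughly 50TB usable is in the expected range.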

DDR4 doesn’t seem necessary

Bc DDR3 is cheap so I chose a v2 Xeon.

What’s the weak link though ? You know?

What’s more, the whole thing is under an AC that I keep at 68.

And this is the ONLY thing I have that array doing. Just makes no sense.

I’ve only seen CRAPPY performance (30MB/s) like 20x over the years with QNAP / Synology, etc…

[I owned a Mac retail store, 85% of the laptops required repair; desktops were often ‘complex configs’. I fixed and diagnosed over 10k laptops in the last 9 years. That isn’t to ‘brag’ – I need help (if help exists).  I’m just annoyed that despite my troubleshooting background … either “I’m below the competency threshold” … or a lot of these products are not likely to work for most people. ]

Granted: Local RAIDs 2-drive in 0 and 1 even … but also RAID-5 I’ve seen reach 900MB/s (empty).

But NETWORKED … RAIDs? Never once have I seen them exceed the performance of a striped pair.

It feels like I’m going to find a switch inside of them labelled, “You’re stupid!!”

Regardless, I thank ANYONE who can help me … and apologize for being so frustrated with the status quo.

Try these tunables for 10g networking (I am assuming you’re running FreeNAS, sorry if this is not the case): http://45drives.blogspot.com/2016/05/how-to-tune-nas-for-direct-from-server.html

The amount of performance increase when tuned for 10g network cards is pretty freaking high.

It’s this:

A pool of one raidz2 will get writes equivalent to a single drive. You need many vdevs in a pool to achieve the performance you’re looking for.

It’s really only bad for random writes or many write requests concurrently. If it’s low queue sequential it’ll still give fairly decent write performance (more than he has)
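
The distinction between the two posts above can be sketched with the usual rules of thumb: a raidz vdev gives roughly one drive’s worth of random IOPS, while large sequential I/O can scale with the number of data drives. These are theoretical ceilings under ideal conditions, not predictions. The 150 MB/s per-drive figure is borrowed from the WD Red number mentioned earlier in the thread, and the 100 IOPS per drive is a hypothetical placeholder.

```python
def raidz_streaming_mb_s(drives: int, parity: int, per_drive_mb_s: float) -> float:
    """Rule-of-thumb ceiling for large sequential I/O on one raidz vdev."""
    return (drives - parity) * per_drive_mb_s


def pool_random_iops(vdevs: int, per_drive_iops: float) -> float:
    """Rule of thumb: each raidz vdev delivers about one drive's random IOPS."""
    return vdevs * per_drive_iops


seq = raidz_streaming_mb_s(8, 2, 150)   # one 8-wide raidz2 vdev
iops = pool_random_iops(1, 100)         # same pool, random workload
print(f"sequential ceiling: {seq:.0f} MB/s, random ceiling: {iops:.0f} IOPS")
```

By this estimate a single 8-wide raidz2 is not the reason for ~200MB/s sequential; adding vdevs matters mostly for the random and concurrent case.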

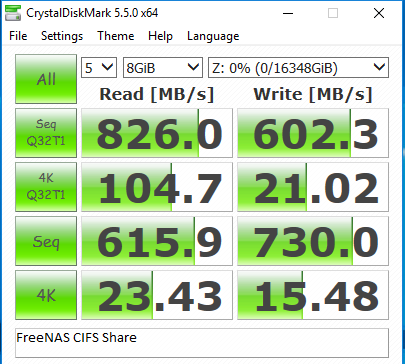

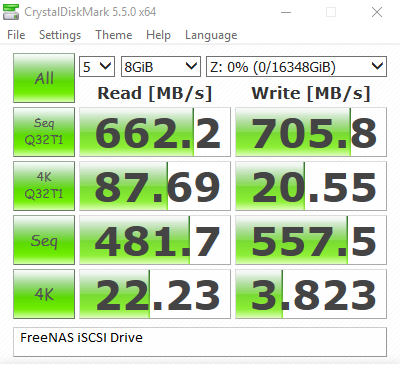

For reference, here’s some 8 x 4TB RAIDZ2 tests I did with Samba and iSCSI

You don’t have a zil or anything? I never get those numbers on a single vdev.

Nope, no ZIL. Unfortunately away from my NAS atm, so can’t give more current numbers with the current version of FreeNAS and the new Ryzen CPU, but mostly I just applied the 45drives tunables and that was it

When I get back I’ll post more recent screen shots (those were taken 2 years ago)

Yeah, the 45drives tunables are definitely legit.

I swear those speeds are much faster than what I see on single vdevs… Is crystal definitely using incompressible data for the tests? I know it’s a very common tool, but I usually use the Aja disk test (coming from Apple content creation environment).
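
Why the compressibility question matters: if the pool has compression enabled and the benchmark writes highly compressible data, far less actually hits the disks than the tool thinks it wrote. A small sketch of the difference, using Python’s `zlib` purely as a stand-in (ZFS typically uses other algorithms such as lz4, so exact ratios will differ):

```python
import os
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (about 1.0 means incompressible)."""
    return len(zlib.compress(data)) / len(data)


size = 1024 * 1024
zeros = bytes(size)              # what a naive benchmark might write
random_bytes = os.urandom(size)  # effectively incompressible

print(f"zeros:  {compression_ratio(zeros):.4f}")
print(f"random: {compression_ratio(random_bytes):.4f}")
```

If a benchmark’s test file compresses like the first case, its numbers say more about the CPU and RAM than about the vdev.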