This is of particular interest to Pascal GPU owners because LFC (Low Framerate Compensation) is incorrectly implemented on these cards on Linux. If a monitor can physically go below its advertised Freesync range, it should not blank out when the refresh rate drops just below that range. Unfortunately, my BenQ EX2510 does exactly that on Pascal GPUs on Linux.

This problem is so common that the official Blur Buster himself had to put out a statement on it recently: Firmware Fixes To Fix for VRR Blank Out Issues (Monitors Randomly Going Black For 2 Seconds) - Blur Busters Forums

The statement itself lists two minimum refresh rates that others have set with ToastyX CRU to fix it: 55 Hz and 65 Hz.

65 Hz is impractical because the majority of content has a 60 fps frame rate cap, which sits below a 65 Hz minimum and will suffer. That leaves us with 55 Hz.
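The arithmetic behind that choice can be sketched in a few lines. This is only a simplified model: it checks whether a frame rate fits inside the VRR window and ignores LFC entirely. The 144 Hz maximum is an assumption for illustration, so substitute your own panel's value.

```python
def in_vrr_range(fps: float, vmin: float, vmax: float) -> bool:
    """True if the frame rate can be displayed 1:1 inside the VRR window."""
    return vmin <= fps <= vmax


VMAX = 144  # assumed panel maximum, change for your monitor

for vmin in (55, 65):
    # 60 fps content only stays inside the window with the 55 Hz minimum
    print(f"min {vmin} Hz: 60 fps in range = {in_vrr_range(60, vmin, VMAX)}")
```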

How do we do this on Ubuntu 20.04 without using Wine or CRU? There’s a project that has evaded all but the ArchWiki’s attention and that’s wxEDID.

Unfortunately, it’s hosted on Sourceforge. *vomits*

But it’s the only tool we can use natively on Linux to change the limits properly. (Seriously, someone port this to something like Qt5 and GitLab, please. CRU is already an important enough Windows tool, we need something easily accessible on Linux like in an AppImage using Qt5.)

I am presuming with this guide that you are running an NVIDIA Pascal GPU and want to fix this once and for all.

You will need to build from source as there is no Debian package. (This means you may need gcc or clang, or simply build-essential.) You will also need to find the correct wxWidgets development package version using apt list 'libwx*'.

For Ubuntu 20.04, you will need the libwxbase3.0-dev and libwxgtk3.0-gtk3-dev packages. Both are required; the README in the source states that the GTK3 package applies to Debian systems.

Get the source from *vomits* sourceforge:

cd into the extracted directory and run these to build:

./configure

make

sudo make install

Unfortunately, it’s at such an early stage that you have to launch the program from a terminal. (Yup, that’s right, a GUI program with no .desktop file that has to be run from a terminal.)

Just type wxedid into any terminal (doesn’t have to be root) to run.

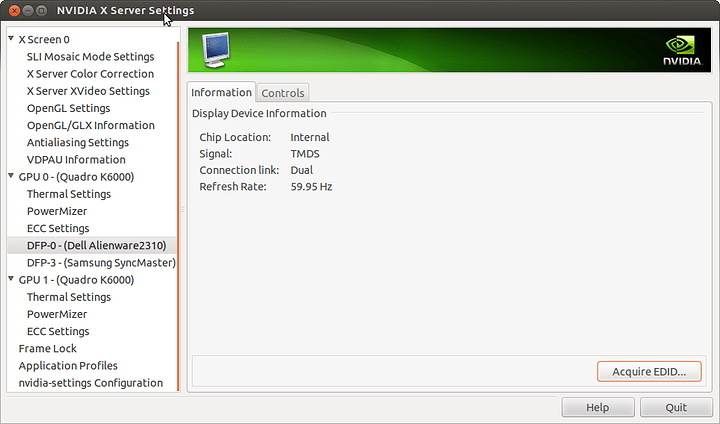

To dump your EDID, use nvidia-settings and click on the display you’re currently running (likely DP-0).

Nvidia have made a convenient page for this here: Managing a Display EDID on Linux | NVIDIA

But the relevant image from it:

To be absolutely sure you’re grabbing the Monitor’s current EDID, turn Blur Reduction/DyAC off (on BenQ monitors) and have no xorg.conf or xorg.conf.d. Believe it or not, if you pre-specify a custom EDID file in xorg.conf, this function in the nvidia-settings utility will grab from the custom EDID FILE instead of your monitor EDID. Having no xorg.conf fool-proofs it from grabbing the wrong EDID.

Save this as any name (no spaces or special characters) with a .bin extension. The ASCII part of this is outdated.
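Before opening the dump in wxEDID, it may be worth checking that the file really is a binary EDID. The sketch below is not part of the original workflow. It relies only on the general EDID layout: 128-byte blocks, a fixed 8-byte header, and an extension count in byte 126. The function name and the file name in the usage comment are made up for illustration.

```python
EDID_HEADER = bytes([0x00, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0xFF, 0x00])


def check_edid_dump(data: bytes) -> list:
    """Return a list of problems; an empty list means the dump looks sane."""
    if len(data) == 0 or len(data) % 128 != 0:
        return [f"size {len(data)} is not a non-zero multiple of 128 bytes"]
    problems = []
    if data[:8] != EDID_HEADER:
        problems.append("missing the fixed 8-byte EDID header")
    announced = 1 + data[126]      # byte 126 = number of extension blocks
    present = len(data) // 128
    if announced != present:
        problems.append(f"EDID announces {announced} block(s), file has {present}")
    return problems


# Usage (hypothetical file name):
#   from pathlib import Path
#   print(check_edid_dump(Path("ex2510.bin").read_bytes()) or "looks fine")
```

If the header check fails, the likely cause is that the file was saved in the ASCII format rather than as binary.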

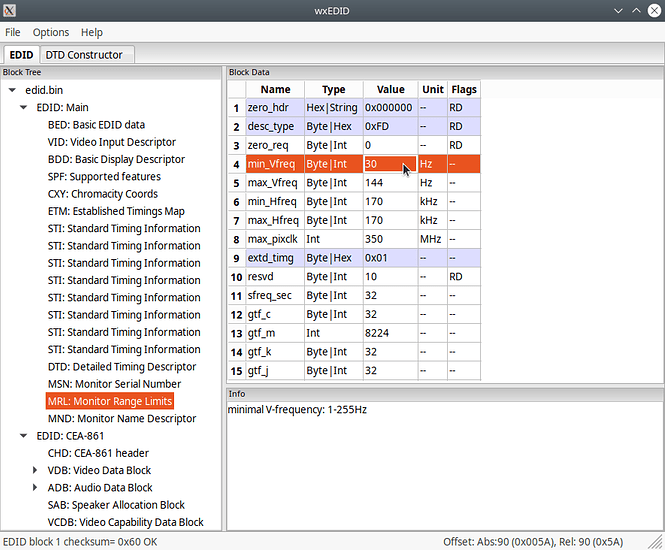

Open wxedid with Terminal, then open the .bin file you just dumped from nvidia-settings under File > Open EDID binary.

The key option here is the MRL: Monitor Range Limits in the left list. Open that part of your EDID.
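For reference, the range limits live in one of the four 18-byte descriptor slots of the base EDID block, marked with the tag byte 0xFD. Here is a minimal sketch for reading the vertical range without the GUI. It assumes a standard EDID 1.3/1.4 base block, and the function name is my own, not something from wxEDID.

```python
def read_range_limits(edid: bytes):
    """Return (min_v, max_v) in Hz from the base block, or None if absent."""
    for off in (54, 72, 90, 108):            # the four descriptor slots
        d = edid[off:off + 18]
        # Display descriptors start with 00 00 00, followed by a tag byte.
        if len(d) == 18 and d[0:3] == b"\x00\x00\x00" and d[3] == 0xFD:
            flags = d[4]                      # EDID 1.4 rate offset flags
            min_v = d[5] + (255 if flags & 0x03 == 0x03 else 0)
            max_v = d[6] + (255 if flags & 0x02 else 0)
            return min_v, max_v
    return None
```

On a dump like the one in this guide, the first value would be the same number wxEDID shows as min_Vfreq.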

On this EX2510, you’ll notice the EDID specifies a minimum of 30 Hz when it should be 48 Hz. We’re not setting 48; we’re setting 55 Hz. Click on the Value column in the row where the Name column says min_Vfreq.

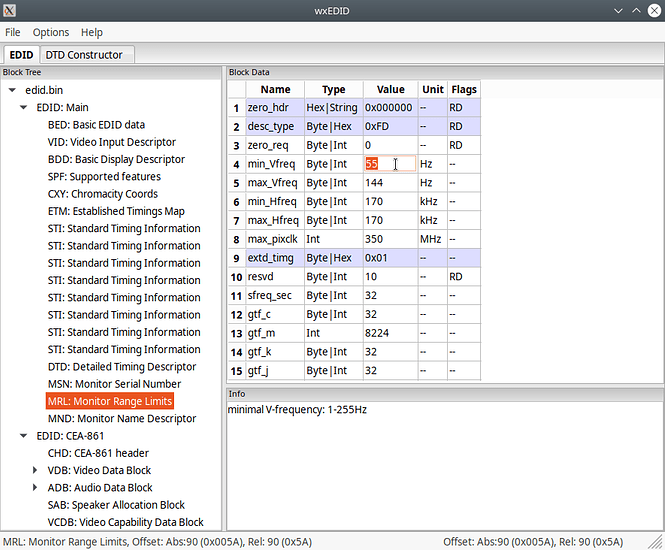

Change this to 55.
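If you would rather script this edit than click through the GUI, the same change can be sketched in Python. To be clear, this is an alternative to the wxEDID steps in this guide, not what wxEDID does internally. It assumes the range limits descriptor sits in the base block and that the minimum rate does not use the EDID 1.4 +255 offset. It also recomputes the base block checksum so the result stays valid.

```python
def set_min_vfreq(edid: bytes, new_min_hz: int) -> bytes:
    """Return a copy of the EDID with the minimum vertical rate replaced."""
    if len(edid) < 128:
        raise ValueError("EDID base block must be at least 128 bytes")
    if not 1 <= new_min_hz <= 255:
        raise ValueError("minimum rate must fit in one byte")
    out = bytearray(edid)
    for off in (54, 72, 90, 108):             # the four descriptor slots
        if out[off:off + 3] == b"\x00\x00\x00" and out[off + 3] == 0xFD:
            if out[off + 4] & 0x03 == 0x03:
                raise ValueError("minimum rate uses the +255 offset, not handled here")
            out[off + 5] = new_min_hz         # minimum vertical rate in Hz
            # Byte 127 makes the 128 bytes of the base block sum to 0 mod 256.
            out[127] = (-sum(out[:127])) % 256
            return bytes(out)
    raise ValueError("no range limits descriptor in the base block")
```

Write the returned bytes to a new file rather than overwriting the original dump, so you can always go back.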

Under the Options menu at the top, click Assemble EDID. The result should be EDID block 1 checksum= 0x60 OK in the status bar. If it isn’t, something else in the EDID is stopping the checksum from coming out valid, and the checksum has to be valid for the NVIDIA driver to read the file.

Save the EDID file under File > Save EDID Binary. Choose a new name (once again, without spaces or special characters) and save it somewhere you have adequate permissions (the top level of your /home/user folder, for example).
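What "OK" means here can be checked independently. In every 128-byte EDID block the last byte is a checksum, chosen so that all 128 bytes sum to 0 modulo 256. The sketch below reports the stored checksum and its validity for each block. The block index it prints is zero-based, which may not match how wxEDID numbers blocks.

```python
def block_checksums(edid: bytes) -> list:
    """Return (block_index, stored_checksum, is_valid) for each full block."""
    results = []
    usable = len(edid) - len(edid) % 128      # ignore any trailing partial block
    for start in range(0, usable, 128):
        block = edid[start:start + 128]
        results.append((start // 128, block[127], sum(block) % 256 == 0))
    return results


# Usage (hypothetical file name):
#   from pathlib import Path
#   for idx, chk, ok in block_checksums(Path("ex2510_55hz.bin").read_bytes()):
#       print(f"block {idx}: checksum 0x{chk:02X} {'OK' if ok else 'BAD'}")
```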

Next, use the ArchWiki guide on how to add a Custom EDID to a Nvidia xorg.conf: Variable refresh rate - ArchWiki

One extra option can potentially help:

Option "UseEDIDFreqs" "Off"
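To make the target concrete, here is a small helper that prints what the relevant xorg.conf section might look like. CustomEDID and UseEDIDFreqs are real NVIDIA driver options. The Identifier, connector name, and file path are placeholders I made up. If your xorg.conf already has a Device section for the NVIDIA card, add the two Option lines there instead of creating a second section.

```python
def custom_edid_section(connector: str, edid_path: str) -> str:
    """Build an xorg.conf Device section pointing the driver at a custom EDID."""
    lines = [
        'Section "Device"',
        '    Identifier "nvidia-custom-edid"',    # placeholder name
        '    Driver "nvidia"',
        f'    Option "CustomEDID" "{connector}:{edid_path}"',
        '    Option "UseEDIDFreqs" "Off"',
        'EndSection',
    ]
    return "\n".join(lines) + "\n"


# Placeholder connector and path; use the ones from your own setup.
print(custom_edid_section("DP-0", "/home/user/ex2510_55hz.bin"))
```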

Save the changes in xorg.conf. Reboot, and get Unigine Superposition:

Install it by making the .run file executable. Run a benchmark. The initial loading screen of the benchmark is a consistent test of the minimum Freesync refresh rate. If your Freesync refresh rate is 55-57 Hz, you’ve succeeded! If it’s 30 Hz, the NVIDIA driver is still parsing the display’s own EDID, and you may have to check xorg.conf or defeat some of the driver’s safeties using Option "ModeValidation" settings.

I go into ModeValidation settings in my other thread for getting custom refresh rates working. The same technique can help you increase your Vertical Total pixels for BenQ Blur Reduction/DyAC while not using Freesync:

A better primer on Vertical Total pixels is on the Blur Busters site itself:

However, in my own experience, high vertical totals don’t play nice with Freesync enabled. Not to mention what it could do to a Ninja recorder’s HDMI receiver chip. (I would highly advise anyone using a Ninja recorder to use Reduced Blanking timings.) Only do this at fixed refresh rates and if backlight strobing is your primary goal.

Pascal owners, report back if this has fixed your Freesync issues. If this has successfully removed the need for ToastyX CRU on Linux and you want to help, pretty please port wxEDID to Qt5 and make it available on GitLab as an AppImage. That would be a huge help to people looking for a CRU alternative. Or maybe even rebuild it in Qt from the existing code to function like CRU, and we’d have a legit CRU clone on Linux.

Oh and, leave a nice reply if this has once and for all fixed your Freesync issues on Pascal and NVIDIA.