Hey, sorry to respond so many months later.

I’ve figured out what I want, and it requires I rethink my data storage strategy on this NAS.

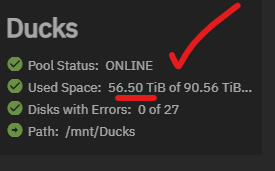

Secondary NAS is fine (for now)

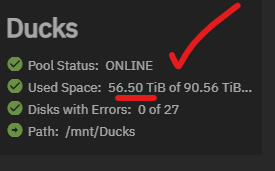

My secondary NAS, an HDD cluster, is all mirrors, but it has 100TB of space. So far, that’s enough:

Data filling up

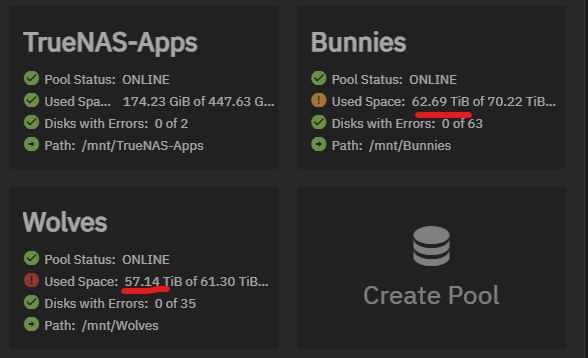

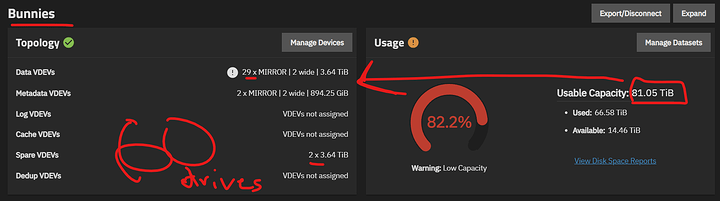

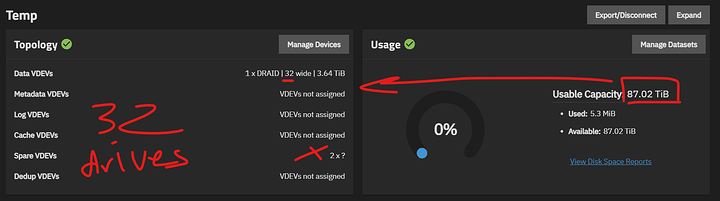

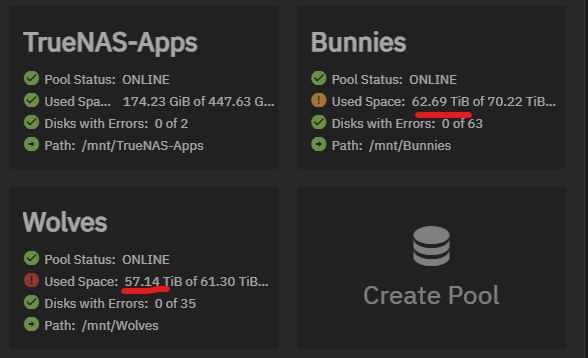

But my main NAS is the problem, and I need a solution quickly:

While it might look like “wow, 5TB will take some time to fill”, I’ve been ripping every movie, show, and CD I buy (into MKVs, not whole ISOs :P), and as you can see, that takes up quite a bit of space; only ~7TB is snapshots.

Bunnies is my main pool and Wolves is the every-6-hours backup.

Rethinking my strategy

Instead of buying another Storinator XL60 or continually buying more 4TB SSDs, I think a new strategy is in order.

I went with mirrors because:

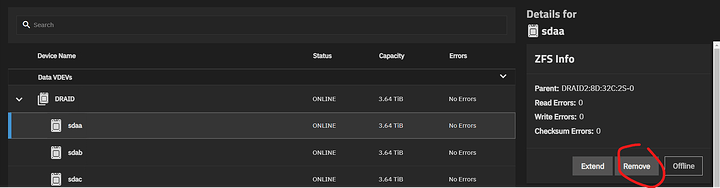

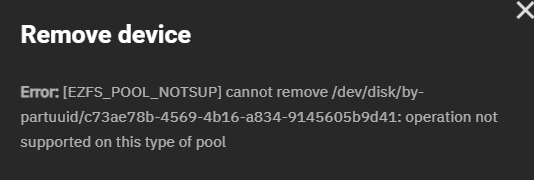

- They’re more flexible. You can add and remove drives for redundancy at will.

- You can easily upgrade the capacity of a mirror by purchasing only 2 drives. Adding or upgrading a RAID-Z vdev requires buying 10-15+ drives at a time, and I typically only buy drives in sets of 2-4.

- You don’t even need to remove any existing drives from the mirror until after resilvering is complete.

- You can add more mirrors with a minimum of 2 drive purchases.

- You can remove mirror vdevs very quickly. Is this the case with RAID-Z?

- Mirrors can fully maximize the speed of ZFS.

- 2-drive mirrors can only sustain 1 drive death, but they’re also much faster to resilver, so the risk of data loss is minimized.

Still, mirrors are only good if you have a ton of money and enough drive slots.

I have more physical ports on my SAS expanders and more SAS controllers, but I don’t have enough slots in my case.

Mirrors simply don’t scale for home use. I shouldn’t have to buy another Storinator XL60 this soon after buying the other two. I really wanna rethink my strategy.

Using what I have

RAID-Z seems like the best option. Without even buying new drives, I can go from 50% usable capacity from mirrors to 80% with RAID-Z!

Which should I choose?

I’m thinking either of these:

- 10 drives in RAID-Z2

- 15 drives in RAID-Z3
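As a quick sanity check on the 50% versus 80% figure, here is a minimal sketch of the arithmetic. It assumes usable space is simply (drives minus parity drives) divided by drives, and it ignores ZFS metadata, padding, and TB/TiB differences. The function name `usable_fraction` is just for illustration.

```python
def usable_fraction(width: int, parity: int) -> float:
    """Fraction of raw capacity left after redundancy (ignores ZFS overhead)."""
    return (width - parity) / width

# (drives per vdev, drives' worth of redundancy per vdev)
layouts = {
    "2-drive mirror": (2, 1),
    "10-drive RAID-Z2": (10, 2),
    "15-drive RAID-Z3": (15, 3),
}

for name, (width, parity) in layouts.items():
    print(f"{name}: {usable_fraction(width, parity):.0%} usable, "
          f"survives {parity} drive failure(s) per vdev")
```

Both RAID-Z options land on the same 80%, so the choice between them comes down to vdev width and how many failures each vdev should tolerate, not raw efficiency.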

vdevs are nice because they don’t need to match in size or capacity, so I can always mix 'n match based on your suggestions.

Should I do more or less in either case?

Which RAID-Z should I go with?

My current drives

- I have 38 2TB drives and 22 4TB drives in the top case using all 60 slots.

- I have 34 4TB drives in the bottom case. That leaves me with 26 slots for this transition.

- I also have 15 4TB drives in shipping to my house right now. Exactly enough for a single RAID-Z3 vdev.
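To keep the inventory straight, here is a small sketch that tallies the drives and free slots listed above. It assumes both cases have 60 slots (the top case uses "all 60", and 34 drives leaving 26 free implies the same for the bottom one). The variable names are made up for this example.

```python
SLOTS_PER_CASE = 60  # assumption: both cases have 60 slots

# drive size in TB -> number of drives
top_case = {2: 38, 4: 22}
bottom_case = {4: 34}
in_transit = {4: 15}

def drive_count(drives: dict) -> int:
    return sum(drives.values())

def raw_tb(drives: dict) -> int:
    return sum(size_tb * count for size_tb, count in drives.items())

free_top = SLOTS_PER_CASE - drive_count(top_case)
free_bottom = SLOTS_PER_CASE - drive_count(bottom_case)
total_4tb = top_case[4] + bottom_case[4] + in_transit[4]

print(f"top case: {drive_count(top_case)} drives, {raw_tb(top_case)}TB raw, {free_top} free slots")
print(f"bottom case: {drive_count(bottom_case)} drives, {raw_tb(bottom_case)}TB raw, {free_bottom} free slots")
print(f"4TB drives once the shipment arrives: {total_4tb}")
```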

Rethinking hotspares

I dedicate 2 drives as hotspares in each zpool. Those need to be 4TB drives. I’m wondering if I even need hotspares if I go RAID-Z3.

At that point, cold spares make more sense and allow more total capacity and full utilization of every slot in the case.

Steps to upgrade

I have two ideas on how to upgrade.

- Add vdevs to the main pool first and eventually move all 2TB drives to the backup pool.

- Add vdevs to the backup pool first and, once that’s complete, start adding vdevs to the main pool, slowly removing all mirrors.

Which should I do?

I’m thinking the first one is better because it makes it possible to remove all the 2TB drives. Eventually, I’d like to have all 4TB drives, but maybe that won’t matter if I move to RAID-Z3.

Steps for the first idea

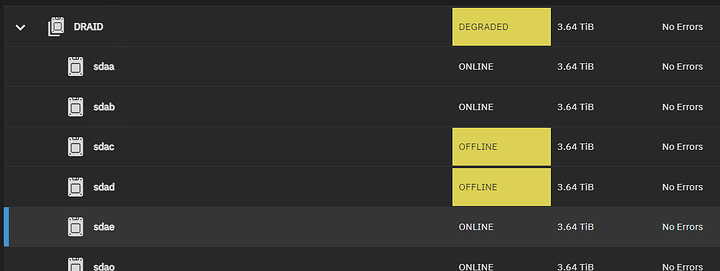

- With the 15 4TB drives on the way, I will make a single RAID-Z3 vdev and add it to the main pool.

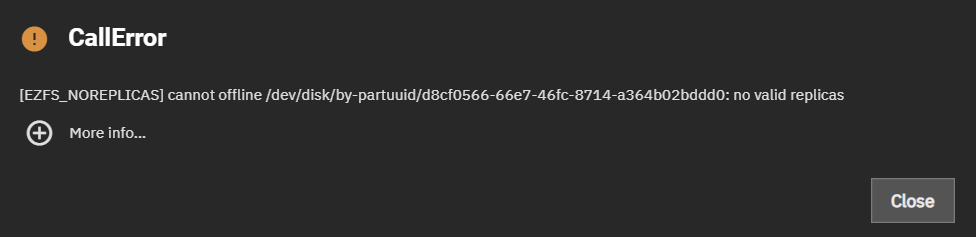

- I’ll start removing 4TB mirrors from the pool until I have enough to make another 15-drive RAID-Z3. Then add that back to the main zpool. I’ll probably need to take my four 4TB hotspares to have enough drives.

- Just two 4TB RAID-Z3 vdevs are enough to keep this pool happy for a while at 96TB.

- I can start removing every 2TB mirror from the main zpool now.

- Once I’ve removed all those 2TB mirrors, I can work on upgrading the backup pool.

- I can add four 10-drive RAID-Z2 vdevs of 2TB drives to the backup pool temporarily (I’ll need to borrow two 4TB drives since I only have 38 2TB drives). That’s 64TB of space which is more than the backup pool’s total capacity today.

- Once those are created, I can remove all 4TB mirrors from the backup pool.

- With only 30 of those 34 4TB drives, I’ll make two new 15-drive 4TB RAID-Z3 vdevs in the backup pool. That’s another 96TB.

- Now, I can remove all four 2TB RAID-Z2 vdevs from the backup pool, and I’m done for now.
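The capacity numbers in these steps can be checked with a short sketch. It only covers the arithmetic, not whether each add or remove step is supported by ZFS. It assumes usable space is (width minus parity) times drive size, with no ZFS overhead, and that the two borrowed 4TB drives only contribute 2TB each inside a 2TB vdev. `raidz_usable_tb` is a hypothetical helper.

```python
def raidz_usable_tb(width: int, parity: int, drive_tb: int, vdevs: int = 1) -> int:
    """Usable TB for identical RAID-Z vdevs, ignoring ZFS overhead."""
    return (width - parity) * drive_tb * vdevs

# Main pool: two 15-drive RAID-Z3 vdevs of 4TB drives
main_final_tb = raidz_usable_tb(15, 3, 4, vdevs=2)

# Backup pool, temporary: four 10-drive RAID-Z2 vdevs sized as 2TB drives
backup_temp_tb = raidz_usable_tb(10, 2, 2, vdevs=4)
temp_drives_needed = 10 * 4
borrowed_4tb = temp_drives_needed - 38  # only 38 2TB drives on hand

# Backup pool, final: two 15-drive RAID-Z3 vdevs of 4TB drives
backup_final_tb = raidz_usable_tb(15, 3, 4, vdevs=2)

print(f"main pool after migration: {main_final_tb}TB")
print(f"backup pool, temporary RAID-Z2: {backup_temp_tb}TB "
      f"({borrowed_4tb} borrowed 4TB drives)")
print(f"backup pool after migration: {backup_final_tb}TB")
```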

All in all, this will result in 96TB of usable capacity in each zpool with 30 slots remaining in each case. I’ll have 11 4TB and 38 2TB drives just lying around at this point, ready to be used in case I need more capacity. Having extra 4TB drives is nice for cold spares.

With 38 2TB drives, I can also add two more 15-drive RAID-Z3 vdevs. Not bad considering each one is another 24TB of usable capacity (48TB for both).
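For anyone checking the leftovers, here is a rough tally under the same simplified arithmetic (no ZFS overhead, 60 slots per case assumed). The names are illustrative only.

```python
SLOTS_PER_CASE = 60  # assumption: both cases have 60 slots

total_4tb = 22 + 34 + 15        # top case + bottom case + shipment
used_4tb = 4 * 15               # two 15-drive RAID-Z3 vdevs per pool
spare_4tb = total_4tb - used_4tb
spare_2tb = 38

slots_free_per_case = SLOTS_PER_CASE - 2 * 15

# Optional extra vdevs built from the spare 2TB drives
extra_vdevs = spare_2tb // 15
leftover_2tb = spare_2tb % 15
tb_per_extra_vdev = (15 - 3) * 2

print(f"spare drives: {spare_4tb} x 4TB, {spare_2tb} x 2TB")
print(f"free slots per case: {slots_free_per_case}")
print(f"extra 2TB RAID-Z3 vdevs possible: {extra_vdevs} "
      f"({tb_per_extra_vdev}TB each, {leftover_2tb} drives left over)")
```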

What do you think of this new strategy?
