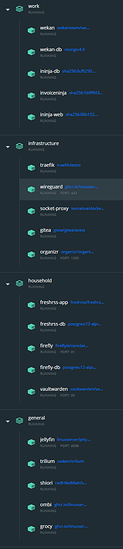

So it’s time for a fairly major update on how the server project is going. Let’s start with a look at Docker.

As you can see, the following containers (listed below in case you can’t see the image) are running, along with any associated dependencies like the […]-db containers. It’s been a long time since my last major progress update, but this is it: we’ve made some real progress.

- Wekan

- Invoice Ninja

- Traefik

- Wireguard

- DSP

- Gitea

- Organizr

- FreshRSS

- Firefly III

- Vaultwarden

- Jellyfin

- Trilium Notes

- Shiori

- Ombi

- Grocy

As @TheCakeIsNaOH noted … almost a year ago (gee, I’ve been working on or planning for this a long time now, haven’t I? Well, lucky me that I’m the primary client here, I guess), multiple compose files are much easier to manage, especially with Gitea, since the files are smaller and easier to navigate. Past about 8 to 10 containers in total, this approach is just better.

We currently have 4 stacks (Grocy ended up in the wrong stack at some point, and fixing that is most definitely on the list). The containers in the ‘work’ stack mostly relate to my business and will move to their own, more appropriate domain in the near future. I have also obtained a third domain for the game servers and the Matrix homeserver.
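Splitting services into stacks raises one practical question: how containers in separate compose files reach the same Traefik network. A common pattern, sketched below, is to create the shared network once (for example with `docker network create t2_proxy`) and have every stack join it as `external`. This assumes the shared network is the `t2_proxy` network used in the Vaultwarden snippet later in this post; the `whoami` service and the `traefik/whoami` test image are placeholders, not part of the actual setup.

```yaml
# Hypothetical compose file for one stack (e.g. work/compose.yml).
# Assumes the t2_proxy network already exists, created outside this stack.
services:
  whoami:                      # placeholder service, stands in for any app
    image: traefik/whoami
    restart: unless-stopped
    networks:
      - t2_proxy               # shared with Traefik so it can route here
      - default                # this stack's private network (e.g. for its db)
    labels:
      # Container is on two networks, so tell Traefik which one to use
      - "traefik.docker.network=t2_proxy"

networks:
  t2_proxy:
    external: true             # join the existing network instead of creating a project-prefixed one
```

With this layout, each stack can be brought up or down on its own without touching the Traefik stack.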

Databases, even without multi-tenancy, are still our largest single problem. Any time a container depends on another container in some way (other than, for example, everything here depending on Traefik and Traefik depending on DSP, since that’s all contextual), it creates first-boot problems. Even with .env files and configuration taken directly from the creators’ GitHub pages, something always breaks on that first boot. Eventually, though, we get there.
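One way to soften those first-boot dependency problems is to have Compose wait until a database actually accepts connections rather than merely having started. The sketch below combines a `healthcheck` with `depends_on` using `condition: service_healthy`. The `app` / `app-db` names, the `example/app` image, and the env file are hypothetical, and it assumes a Postgres backend; a MariaDB container would need a different health test.

```yaml
services:
  app-db:
    image: postgres:16
    restart: unless-stopped
    env_file:
      - ./app-db.env           # assumed to set POSTGRES_USER, POSTGRES_PASSWORD, POSTGRES_DB
    volumes:
      - app-db-data:/var/lib/postgresql/data
    healthcheck:
      # pg_isready ships in the official postgres image; $$ stops Compose
      # from interpolating, so the container's shell expands the variables
      test: ["CMD-SHELL", "pg_isready -U $${POSTGRES_USER} -d $${POSTGRES_DB}"]
      interval: 10s
      timeout: 5s
      retries: 5
      start_period: 30s

  app:
    image: example/app:latest  # hypothetical application image
    restart: unless-stopped
    depends_on:
      app-db:
        condition: service_healthy   # wait for the healthcheck, not just container start

volumes:
  app-db-data:
```

This only fixes startup ordering; an app that still fails during its own first-run setup (migrations, admin bootstrap) needs a separate fix.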

SSL is coming along well, and I think it now basically works across the server for both local and remote users (the inevitable bug reports will come in… eventually). Unfortunately, the rsync method recommended by @PhaseLockedLoop for keeping the SSL files on the gateway server and the local server in sync is unlikely to be implemented in the near future.

Hardware acquisition is very much in the research phase. There is a lot to learn about the 5- to 10-year-old “complete units” (that is, a server case plus PSU(s), boards, drive bays, CPUs, and sometimes even RAM) available on eBay for $300-$500 apiece or less, and I have no foundation in this area. I do know that I don’t need all that much in terms of performance, but of course that’s not an objective, binary evaluation, is it?

There’s still a WordPress blog hosted elsewhere, separate from the server, and I’ve been considering whether it’s worth moving it over (probably converting it to Ghost along the way). For now, I’ve concluded that the answer is probably not, though that may change later on.

As you can see in the list, we have Gitea running. Not only that, but Git is managing the actual live server folders, so we have version control (VC) directly in production. That’s probably a scary statement to some of you. The repository isn’t currently public, because secrets still have to live in ENV files. There are likely two or three workarounds for this, but Gitea’s primary purpose for us is VC, and it’s doing that, so further changes are relatively low priority. We’ll get there.
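For the secrets-in-ENV-files problem, one candidate workaround is Compose’s `secrets` support: the sensitive value lives in an untracked file on the host and is mounted into the container at `/run/secrets/<name>`. This sketch assumes the image can read a value from a file, as the official `postgres` image does through `POSTGRES_PASSWORD_FILE`; the service, secret, and file names are illustrative.

```yaml
services:
  app-db:
    image: postgres:16
    restart: unless-stopped
    environment:
      POSTGRES_USER: app
      POSTGRES_DB: app
      # The official postgres image reads the password from this file at startup
      POSTGRES_PASSWORD_FILE: /run/secrets/app_db_password
    secrets:
      - app_db_password        # mounted at /run/secrets/app_db_password

secrets:
  app_db_password:
    file: ./secrets/app_db_password.txt   # host file kept out of Git
```

The compose file itself can then be committed to Gitea, with `secrets/` listed in `.gitignore`. Images without `*_FILE` support still need the value in their environment, so this doesn’t cover every container.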

Grocy’s been a bit bothersome to implement, and we’re not 100% there yet. I really need to get a cheap Chinese nonsense tablet for the kitchen, something I can deny internet access to so I don’t have to actually trust it. That will help a lot, as long as the device can run the Grocy web interface and/or mobile client, plus Barcode Buddy, which really, truly should not be a separate piece of software; its functionality is core to what Grocy is trying to achieve, after all. Even so, Grocy is already proving quite useful. The only problem is that it doesn’t seem to want to store its data in the volumes I’ve provided, but that’s an issue for a later pass, when we implement automated container and data backups. The household members haven’t really complained about it yet, but they haven’t fully built the habit of using it yet either.
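When a container ignores the volume it’s given, a frequent cause is a mismatch between the mount path and the path the image actually writes to. The post doesn’t say which Grocy image is in use; this sketch assumes the linuxserver.io image, which keeps its data under `/config`. For any image, `docker image inspect --format '{{json .Config.Volumes}}' <image>` lists the paths it declares as volumes. The PUID, PGID, and TZ values are placeholders.

```yaml
services:
  grocy:
    image: lscr.io/linuxserver/grocy:latest   # assumption: linuxserver.io image
    container_name: grocy
    restart: unless-stopped
    environment:
      - PUID=1000              # placeholder: user that should own the data
      - PGID=1000              # placeholder: group that should own the data
      - TZ=Etc/UTC
    volumes:
      # Must target the path the image writes to; otherwise data stays
      # inside the container's writable layer and is lost on recreation
      - grocy-config:/config

volumes:
  grocy-config:
```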

Firefly III isn’t useful yet. It runs, and the functionality we’re looking for is absolutely there (for the most part, anyway, and certainly above the minimum bar). Many of the alternatives fall short for a number of reasons, chief among them, ironically or not, monetization. What Firefly needs is time and use to fully come into its own, much like Grocy in that respect.

Vaultwarden, meanwhile, was useful right out of the gate. I’ve had no major issues with it since we got it running. I cannot recommend Vaultwarden enough, and I mean to everyone, which is why I picked it for this post’s code snippet. It’s fairly basic, but the simplicity of its requirements is a good thing.

```yaml
vaultwarden: # Alternative bitwarden server
  image: vaultwarden/server:latest
  container_name: vaultwarden
  restart: unless-stopped
  networks:
    - t2_proxy
  # Usually I'd put ports right about here, but with Traefik they're unnecessary.
  env_file:
    - ./defaults.env
  # No need for an env for Vaultwarden - or any db container, for that matter. I call that a well-maintained image, myself.
  labels:
    - "traefik.http.routers.vaultwarden.rule=Host(`vw.$DOMAINNAME`)"
    # Typing out the entirety of 'vaultwarden' as the subdomain seemed a bit much when I'm trying to encourage better security habits in users.
  volumes:
    - vault-data:/data
    # Single volume requirement - nice!
  security_opt:
    - no-new-privileges:true
```
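The Vaultwarden service entry relies on a couple of things outside itself. The sketch below assumes the entry sits under the usual top-level `services:` key and that Traefik uses its Docker provider. The named volume `vault-data` has to be declared at the top level, and it can help to tell Traefik explicitly which container port to route to: the Vaultwarden image listens on port 80 by default, and depending on the version it may expose more than one port. The `traefik.enable=true` label only matters if Traefik runs with `exposedByDefault` set to false. The `t2_proxy` network likewise needs a top-level entry, marked `external` if another stack creates it.

```yaml
services:
  vaultwarden:
    # ...the keys from the entry above stay as they are...
    labels:
      - "traefik.enable=true"   # only needed when exposedByDefault=false
      - "traefik.http.routers.vaultwarden.rule=Host(`vw.$DOMAINNAME`)"
      # Pin the backend port so Traefik doesn't have to guess
      - "traefik.http.services.vaultwarden.loadbalancer.server.port=80"

volumes:
  vault-data:                   # named volume holding Vaultwarden's /data
```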

Invoice Ninja ended up replacing Akaunting for a number of reasons. Both were difficult to get running, but Akaunting’s locking of several basic features behind paywalls, plus its integration with central servers, was too much for me. Invoice Ninja has monetization problems of its own, but they matter less for the most part. Besides, Invoice Ninja seems to be much more of a direct Wave competitor, so I’m already comfortable with many of its preconceptions and assumptions. It also has time tracking, and trying to find a container with just time tracking was a nightmare. So that’s nifty.

FreshRSS would make me happier if my RSS feed collection (along with my books, music, and audiobooks) hadn’t been lost during that several-month break from working on the server. Still, it’s working, so I’m building that feed collection back up.

Anyway, that’s where we’re at. Work is ongoing, and we should have a Synapse container running as a Matrix homeserver soon.

The private key that Certbot generates alongside the certificate is also not password-protected, and for good reason: it only needs to be protected by filesystem permissions and shouldn’t be shared. This is up to you, but there is no good reason to share it, and no sound reason to encrypt it on top of the permissions it already sits behind. If your system is compromised to that level, your data is already done for, whether or not this key is protected.

You are beholden to them. And guess what? A signature does not make something trustworthy. When a message X is signed and the signature is successfully verified with public key XKp, cryptography tells you that X is exactly as it was when the owner of the corresponding private key XKpriv computed that signature. It does not automatically tell you that the contents of X are true. Now, moving on to what the certificate does: it moves the key distribution problem. Initially, your problem was knowing the server’s public key, right? I said this earlier