So I got my KVM set up and everything seems to be working fine, and my latency is in a good range on the standard LatencyMon test.

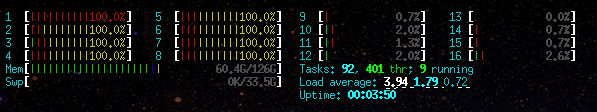

But when I run the in-depth test, I get a few latency spikes on specific cores after the program has been running for a while.

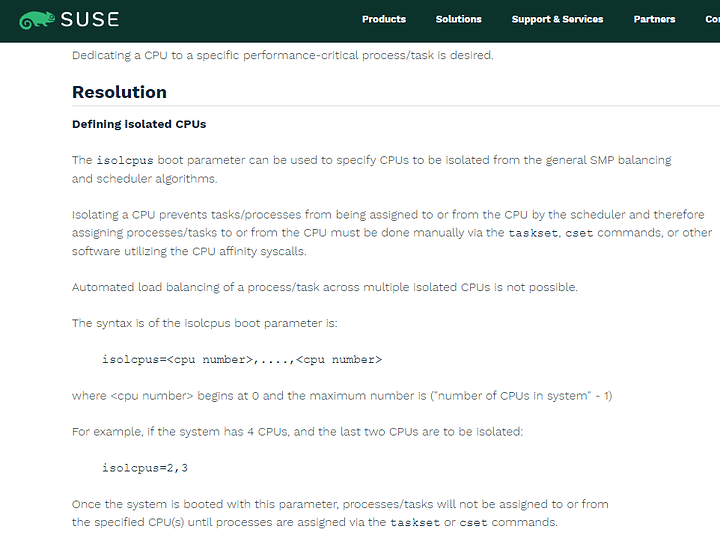

I was reading that if you use isolcpus, the QEMU processes are no longer balanced across the isolated cores, which is why all the usage goes to core 0?

Am I understanding this correctly? I was also reading that you have to use taskset to spread the QEMU processes across the isolated cores.
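To make the taskset idea concrete, here is a rough Python sketch of pinning each thread of a process to one CPU in round-robin order. As far as I understand it, the scheduler does not load-balance across isolcpus cores, so a thread that is allowed on several isolated cores tends to stay on the first one. `os.sched_setaffinity` is the standard-library call that does per thread what `taskset -p` does; `parse_cpu_list`, `round_robin` and `pin_threads` are hypothetical helper names. It assumes Linux and enough privileges to change another process's affinity.

```python
import os


def parse_cpu_list(spec):
    """Expand a kernel-style CPU list such as "0-3,6" into a sorted list of ints."""
    cpus = set()
    for part in spec.strip().split(","):
        if not part:
            continue
        if "-" in part:
            low, high = part.split("-")
            cpus.update(range(int(low), int(high) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def round_robin(thread_ids, cpus):
    """Map each thread ID to exactly one CPU, cycling through the CPU list."""
    return {tid: cpus[i % len(cpus)] for i, tid in enumerate(sorted(thread_ids))}


def pin_threads(thread_ids, cpus):
    """Pin each given thread to a single CPU (Linux only, needs privileges)."""
    plan = round_robin(thread_ids, cpus)
    for tid, cpu in plan.items():
        # On Linux, sched_setaffinity accepts a thread ID as well as a PID.
        os.sched_setaffinity(tid, {cpu})
    return plan


def list_threads(pid):
    """Return the thread IDs of a process by reading /proc/<pid>/task."""
    return [int(name) for name in os.listdir(f"/proc/{pid}/task")]
```

Calling `pin_threads(list_threads(pid), parse_cpu_list("4-5"))` would re-pin every thread of the process, including vCPU threads that libvirt has already pinned, so in practice the thread list should be filtered first.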

This seems to be something most people miss from my research…

I also tested my same setup without isolcpus, and the kernel usage is spread across the CPU cores (the red bars in htop).

My Windows 10 KVM guest is running on CPU0. I have done everything possible to make sure the host and guest share no hardware except the USB controller, to reduce latency as much as possible. Passing through an NVMe drive helps latency a lot from what I have seen, as you are not using a virtual hard drive file shared with the host. This also stopped my audio crackling issues.

From what I have seen, my latency is better than most on the standard latency test, and I have no issues playing games at bare-metal performance.

Here is my system setup.

Precision T7610

TSC Clocksource - Stable

HT Off

Kernel compiled with performance governor

Spectre / Meltdown mitigations disabled

Hardware on CPU1 (Gentoo Linux - Realtime kernel)

E5-2687w v2 Xeon

64GB ram

4x 1.6 TB HGST SAS SSDs, RAID 10, btrfs

Quadro M4400

10Gb Broadcom SFP fiber card

Hardware on CPU0 (Windows 10 KVM)

E5-2687w v2 Xeon

64GB ram (Hugepages)

2TB NVME

GTX 1080

10Gb Broadcom SFP fiber card

I have spent a month benchmarking libvirt XML configs and reading the Red Hat and libvirt documentation to create what is probably one of the best virtualized setups possible. I just seem to be stuck on isolcpus not properly balancing the QEMU host processes…

My libvirt XML:

```xml
<domain type="kvm">
  <name>win10</name>
  <uuid>185f91df-a679-4672-9c11-27c3334762e0</uuid>
  <metadata>
    <libosinfo:libosinfo xmlns:libosinfo="http://libosinfo.org/xmlns/libvirt/domain/1.0">
      <libosinfo:os id="http://microsoft.com/win/10"/>
    </libosinfo:libosinfo>
  </metadata>
  <memory unit="KiB">50331648</memory>
  <currentMemory unit="KiB">50331648</currentMemory>
  <memoryBacking>
    <hugepages>
      <page size="2048" unit="KiB" nodeset="0"/>
    </hugepages>
    <nosharepages/>
    <locked/>
    <access mode="private"/>
    <allocation mode="immediate"/>
  </memoryBacking>
  <vcpu placement="static" cpuset="0-7">8</vcpu>
  <iothreads>1</iothreads>
  <cputune>
    <vcpupin vcpu="0" cpuset="0"/>
    <vcpupin vcpu="1" cpuset="1"/>
    <vcpupin vcpu="2" cpuset="2"/>
    <vcpupin vcpu="3" cpuset="3"/>
    <vcpupin vcpu="4" cpuset="4"/>
    <vcpupin vcpu="5" cpuset="5"/>
    <vcpupin vcpu="6" cpuset="6"/>
    <vcpupin vcpu="7" cpuset="7"/>
    <emulatorpin cpuset="4-5"/>
    <iothreadpin iothread="1" cpuset="6-7"/>
  </cputune>
  <numatune>
    <memory mode="strict" nodeset="0"/>
    <memnode cellid="0" mode="strict" nodeset="0"/>
  </numatune>
  <os>
    <type arch="x86_64" machine="pc-q35-5.1">hvm</type>
    <loader readonly="yes" type="pflash">/usr/share/qemu/edk2-x86_64-code.fd</loader>
    <nvram>/var/lib/libvirt/qemu/nvram/win10_VARS.fd</nvram>
  </os>
  <features>
    <acpi/>
    <apic/>
    <hyperv>
      <relaxed state="on"/>
      <vapic state="on"/>
      <spinlocks state="on" retries="16384"/>
      <vpindex state="on"/>
      <synic state="on"/>
      <stimer state="on"/>
      <reset state="on"/>
      <vendor_id state="on" value="elitekvm"/>
      <frequencies state="on"/>
      <reenlightenment state="on"/>
      <tlbflush state="on"/>
      <ipi state="off"/>
      <evmcs state="off"/>
    </hyperv>
    <kvm>
      <hidden state="on"/>
    </kvm>
    <pmu state="off"/>
    <vmport state="off"/>
    <ioapic driver="kvm"/>
  </features>
  <cpu mode="host-passthrough" check="full" migratable="on">
    <topology sockets="1" dies="1" cores="8" threads="1"/>
    <cache mode="passthrough"/>
    <feature policy="require" name="rdtscp"/>
    <feature policy="require" name="x2apic"/>
    <numa>
      <cell id="0" cpus="0-7" memory="50331648" unit="KiB"/>
    </numa>
  </cpu>
  <clock offset="localtime">
    <timer name="hypervclock" present="yes"/>
    <timer name="rtc" tickpolicy="catchup"/>
    <timer name="pit" tickpolicy="delay"/>
    <timer name="hpet" present="no"/>
    <timer name="tsc" present="yes"/>
  </clock>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>destroy</on_crash>
  <pm>
    <suspend-to-mem enabled="no"/>
    <suspend-to-disk enabled="no"/>
  </pm>
  <devices>
    <emulator>/usr/bin/qemu-system-x86_64</emulator>
    <controller type="usb" index="0" model="qemu-xhci" ports="15">
      <address type="pci" domain="0x0000" bus="0x01" slot="0x00" function="0x0"/>
    </controller>
    <controller type="sata" index="0">
      <address type="pci" domain="0x0000" bus="0x00" slot="0x1f" function="0x2"/>
    </controller>
    <controller type="pci" index="0" model="pcie-root"/>
    <controller type="pci" index="1" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="1" port="0x8"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x01" function="0x0" multifunction="on"/>
    </controller>
    <controller type="pci" index="2" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="2" port="0x9"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x01" function="0x1"/>
    </controller>
    <controller type="pci" index="3" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="3" port="0xa"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x01" function="0x2"/>
    </controller>
    <controller type="pci" index="4" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="4" port="0xb"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x01" function="0x3"/>
    </controller>
    <controller type="pci" index="5" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="5" port="0xc"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x01" function="0x4"/>
    </controller>
    <controller type="pci" index="6" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="6" port="0xd"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x01" function="0x5"/>
    </controller>
    <controller type="pci" index="7" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="7" port="0xe"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x01" function="0x6"/>
    </controller>
    <controller type="pci" index="8" model="pcie-root-port">
      <model name="pcie-root-port"/>
      <target chassis="8" port="0xf"/>
      <address type="pci" domain="0x0000" bus="0x00" slot="0x01" function="0x7"/>
    </controller>
    <input type="mouse" bus="ps2"/>
    <input type="keyboard" bus="ps2"/>
    <hostdev mode="subsystem" type="usb" managed="yes">
      <source>
        <vendor id="0x046d"/>
        <product id="0xc077"/>
      </source>
      <address type="usb" bus="0" port="1"/>
    </hostdev>
    <hostdev mode="subsystem" type="usb" managed="yes">
      <source>
        <vendor id="0x0c45"/>
        <product id="0x5004"/>
      </source>
      <address type="usb" bus="0" port="2"/>
    </hostdev>
    <hostdev mode="subsystem" type="pci" managed="yes">
      <source>
        <address domain="0x0000" bus="0x03" slot="0x00" function="0x0"/>
      </source>
      <address type="pci" domain="0x0000" bus="0x02" slot="0x00" function="0x0"/>
    </hostdev>
    <hostdev mode="subsystem" type="pci" managed="yes">
      <source>
        <address domain="0x0000" bus="0x03" slot="0x00" function="0x1"/>
      </source>
      <address type="pci" domain="0x0000" bus="0x03" slot="0x00" function="0x0"/>
    </hostdev>
    <hostdev mode="subsystem" type="pci" managed="yes">
      <source>
        <address domain="0x0000" bus="0x04" slot="0x00" function="0x0"/>
      </source>
      <address type="pci" domain="0x0000" bus="0x04" slot="0x00" function="0x0"/>
    </hostdev>
    <hostdev mode="subsystem" type="pci" managed="yes">
      <source>
        <address domain="0x0000" bus="0x04" slot="0x00" function="0x1"/>
      </source>
      <address type="pci" domain="0x0000" bus="0x05" slot="0x00" function="0x0"/>
    </hostdev>
    <hostdev mode="subsystem" type="pci" managed="yes">
      <source>
        <address domain="0x0000" bus="0x02" slot="0x00" function="0x0"/>
      </source>
      <boot order="1"/>
      <address type="pci" domain="0x0000" bus="0x06" slot="0x00" function="0x0"/>
    </hostdev>
    <memballoon model="none"/>
  </devices>
</domain>
```
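
One thing that can be checked mechanically in a config like this is whether the emulator and iothread pins land on cores that are also pinned to vCPUs. The following is only a sketch using Python's standard `xml.etree.ElementTree`; `pinning_overlaps` and `cpu_set` are hypothetical helper names, and whether such an overlap explains the spikes on specific cores is an assumption I have not verified.

```python
import xml.etree.ElementTree as ET


def cpu_set(spec):
    """Expand a cpuset string such as "4-5" or "0,2-3" into a set of ints."""
    cpus = set()
    for part in spec.strip().split(","):
        if not part:
            continue
        if "-" in part:
            low, high = part.split("-")
            cpus.update(range(int(low), int(high) + 1))
        else:
            cpus.add(int(part))
    return cpus


def pinning_overlaps(domain_xml):
    """Report host CPUs shared between vCPU pins and emulator/iothread pins."""
    root = ET.fromstring(domain_xml)
    cputune = root.find("cputune")
    if cputune is None:
        return {}

    # Collect every host CPU that some vCPU is pinned to.
    vcpu_cpus = set()
    for pin in cputune.findall("vcpupin"):
        vcpu_cpus |= cpu_set(pin.get("cpuset"))

    overlaps = {}
    emulator = cputune.find("emulatorpin")
    if emulator is not None:
        overlaps["emulatorpin"] = sorted(vcpu_cpus & cpu_set(emulator.get("cpuset")))
    for io in cputune.findall("iothreadpin"):
        key = f"iothreadpin {io.get('iothread')}"
        overlaps[key] = sorted(vcpu_cpus & cpu_set(io.get("cpuset")))
    return overlaps


if __name__ == "__main__":
    import sys

    # Usage: python check_pins.py win10.xml  (the file name is just an example)
    with open(sys.argv[1]) as handle:
        print(pinning_overlaps(handle.read()))
```

For the `<cputune>` block in my XML, the emulator threads (4-5) and the iothread (6-7) share cores with vCPUs 4 to 7, so the host-side QEMU work competes with the guest on those cores.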

But don’t get your hopes up, because it seems way too easy, so there may be some quirk that prevents it from working.
