It appears the voices in my head that have been forcing me to read arcane specifications and datasheets for the better part of 40 years know what they are on about. I’m happy to report some success:

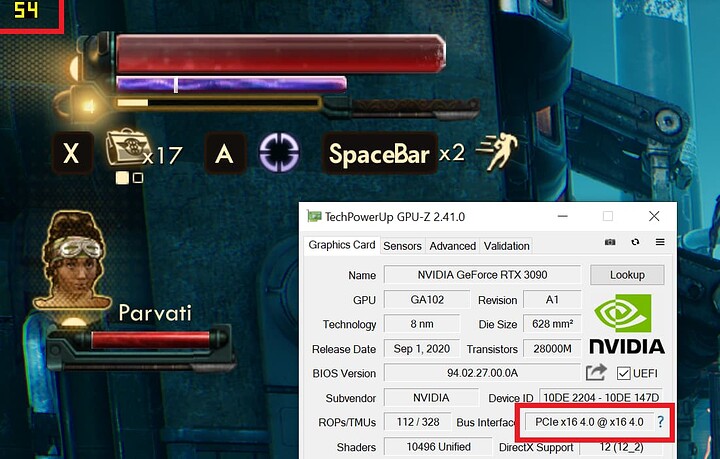

This is my “benchmarking tool”, Outer Worlds, running in 4K with every setting maxed out and with the GPU linked at PCIe 4.0. The frame rate is almost where it should be.

Most importantly, the way to that result was exactly what I anticipated it should be.

First, I raised the gains for slot 7’s TX redrivers by one step, from 2.1 / 3.3 / 4.8 / 8.5 dB to 3.0 / 4.2 / 5.8 / 9.4 dB. The machine booted normally, but the game still stuttered. However, instead of running at around 10-15 FPS, it was now running at 15-30 FPS, with less jitter on those figures. Clearly this was a step in the right direction.

So I went back to the BIOS and raised those gains by another single step, to 3.2 / 4.6 / 6.5 / 10.4 dB. This led to the result posted above: 54-60 FPS.
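To see what these “single steps” mean in terms of signal amplitude, the dB figures can be converted to linear voltage ratios (ratio = 10^(dB/20)). Below is a minimal Python sketch that uses only the settings quoted above. It assumes the BIOS figures are voltage (amplitude) gains and makes no assumption about what each of the four positions controls.

```python
# Redriver gain settings (dB) for slot 7's TX path, as quoted in the post.
# Assumption: these are voltage (amplitude) gains, so 20*log10 applies.
# What each of the four positions controls is deliberately not assumed.
SETTINGS_DB = {
    "default": [2.1, 3.3, 4.8, 8.5],
    "step 1": [3.0, 4.2, 5.8, 9.4],
    "step 2": [3.2, 4.6, 6.5, 10.4],
}


def db_to_voltage_ratio(db: float) -> float:
    """Convert a gain in dB to a linear amplitude (voltage) ratio."""
    return 10 ** (db / 20)


def gain_delta_db(new: list[float], base: list[float]) -> list[float]:
    """Per-position gain increase in dB relative to a baseline setting."""
    return [round(n - b, 1) for n, b in zip(new, base)]


if __name__ == "__main__":
    base = SETTINGS_DB["default"]
    for name, gains in SETTINGS_DB.items():
        ratios = ", ".join(f"x{db_to_voltage_ratio(g):.2f}" for g in gains)
        print(f"{name:8s} {ratios}  (+dB vs default: {gain_delta_db(gains, base)})")
```

Comparing "step 2" against "step 1" with `gain_delta_db` shows that the second “single step” added only 0.2 dB on the first position but 1.0 dB on the last. A BIOS “step” is therefore not a fixed dB increment across positions.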

In case you’re wondering, raising those gains cannot damage your hardware: the actual line voltages are constrained by the output buffer swing settings, which I have left at their default of 1000 mV peak-to-peak. Let me be clear on this: changing those voltages should be the absolute last thing you try, when all else has failed.

Increasing voltages will lead to increased power consumption (each redriver IC burns up to 1.35 W nominal, and if you go that far without a heatsink, I don’t know what the long-term result would be). It may also degrade signal integrity, as higher voltages affect rise/fall times.

Going back to my earlier call for naked photos of motherboards: I’m still in the market for those, but this experiment already hints that signal quality is in fact worse on the CPU-to-redriver segment than on the redriver-to-GPU segment, since I did not have to boost signals on the RX path. The way I see it, we’ve got horrible insertion loss around the CPU socket area, so the CPU can’t talk “loud enough” to reach the far end of the motherboard. Whatever comes out of the redrivers, however, is “loud enough” for the CPU to read clearly.

We’re not done with this just yet. This is definitely a path to solving our PCIe issues, but I don’t know how good my solution is. I suspect that increasing the gain reduces the BER (bit error rate), but I don’t know by how much. For all I know, my GPU is still wasting a lot of time going through PCIe recovery many times a second. That might not matter much while playing a game, but for applications like networking and machine learning, it’s a different story.
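To put the BER question into numbers: the expected number of bit errors per second is BER × raw bit rate. PCIe 4.0 signals at 16 GT/s per lane, and the PCIe Base Specification targets a BER of 10^-12 or better. The Python sketch below assumes an x16 link. The BER values are illustrative, not measurements from my board.

```python
# Expected bit errors per second on a PCIe link, from BER x raw bit rate.
# PCIe 4.0 signals at 16 GT/s per lane (one bit per transfer on the wire);
# the x16 width and the BER values below are illustrative, not measured.
PCIE4_RATE_GTPS = 16.0


def bit_errors_per_second(ber: float, lanes: int = 16,
                          rate_gtps: float = PCIE4_RATE_GTPS) -> float:
    """Average number of bit errors per second summed over all lanes."""
    bits_per_second = rate_gtps * 1e9 * lanes
    return ber * bits_per_second


if __name__ == "__main__":
    for ber in (1e-12, 1e-10, 1e-8):
        eps = bit_errors_per_second(ber)
        print(f"BER {ber:.0e}: {eps:,.3f} errors/s "
              f"(one every {1 / eps:.3g} s on average)")
```

Even at the 10^-12 target, an x16 PCIe 4.0 link averages roughly one bit error every 4 seconds. At 10^-8 it would be thousands per second, and errors the link layer detects must be handled by replaying packets or, in worse cases, by retraining the link.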

My intuition is that if I keep boosting the gains, eventually I’ll get back to crappy frame rates, signifying that I’m saturating the receivers on the GPU. Then, in theory, picking gains in the middle of the “it seems to work” band should give the best result in terms of BER and overall link stability.
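The “pick the middle of the band” idea boils down to this: sweep the gain, record which steps pass, and park the setting in the centre of the widest passing window. Below is a minimal Python sketch. It assumes each gain step has already been tested and marked pass/fail. The pass/fail criterion (frame rate being the crude one available here), the step indices and the sample sweep are all hypothetical.

```python
from typing import Optional, Sequence


def centre_of_passing_window(results: Sequence[bool]) -> Optional[int]:
    """Index of the middle of the longest contiguous run of passing settings.

    results[i] is True if gain step i "seems to work" under whatever
    pass/fail test is used. Returns None if nothing passed. For an
    even-width window, the lower of the two middle steps is chosen.
    """
    best_start, best_len = None, 0
    run_start = None
    for i, ok in enumerate(results):
        if not ok:
            run_start = None  # a failure ends the current run
            continue
        if run_start is None:
            run_start = i
        run_len = i - run_start + 1
        if run_len > best_len:
            best_start, best_len = run_start, run_len
    if best_start is None:
        return None
    return best_start + (best_len - 1) // 2


if __name__ == "__main__":
    # Hypothetical sweep: steps 0..6, True = passed. Not real data.
    sweep = [False, False, True, True, True, True, False]
    print(centre_of_passing_window(sweep))  # → 3
```

Rounding down on even-width windows biases the choice toward less amplification, which fits the goal of using no more gain than necessary.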

I also don’t know for sure how good the signals really look on the RX path, so I’d be tempted to apply the same treatment there. But since it appears to work so far, I may reduce the gains first, just to see how little amplification I can get away with.

By the way, if you’re interested, that process is called “characterization”. It’s in the same field as calibration, certification and metrology, and normally you’re supposed to do it with instruments that cost more than a sports car. Much more. I have some at work… sadly, I have been told in no uncertain terms that I would not be allowed to borrow the 33 GHz oscilloscope for my weekend projects. Bummer. If you have one lying around, though, I’d appreciate a loan!