Good evening, guys. The adventure continues. I apologize in advance, because this is gonna get technical and possibly boring. Plus, this is “stream of thought”, so it’s not Shakespeare.

I’ve come up with a dodgy method for testing PCIe 4.0 stability on our motherboards. It involves:

- Forcing PCIe 4.0 on the slot that is vexing us

- For multiple-GPU users, using only one GPU at a time

- Booting your OS of choice (Win10 in my case)

- Running a graphics-intensive test (Outer Worlds in 4K, for me)

I’ve run that test using the Asus “vanilla” redriver settings. The machine takes longer to boot, and in Outer Worlds I get 10 to 15 FPS instead of 60 (my monitor is 60 Hz; I’m not a gamer).

The FPS number doesn’t tell the whole story, however. In a typical scenario where an underpowered GPU runs a game, those 10-15 FPS would be “smooth”: a roughly constant interval between frames, so it feels like the world is slowing down. In this case, however, imagine a very jerky frame rate: a smooth 60 FPS for half a second, then 5 frames here and there, then nothing for half a second, in a completely random, jittery way.
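
To put numbers on that difference, here’s a minimal Python sketch, assuming you can export per-frame render times in milliseconds from some frame-time capture tool. The two captures below are made-up illustrations, not measurements from my machine: both average 12 FPS, and only the spread of frame times and the worst frame reveal the stutter.

```python
from statistics import mean, pstdev

def frame_stats(frame_times_ms):
    """Summarize per-frame render times (milliseconds) for one capture."""
    total_s = sum(frame_times_ms) / 1000.0
    return {
        "avg_fps": len(frame_times_ms) / total_s,  # what an FPS counter shows
        "mean_ms": mean(frame_times_ms),
        "stdev_ms": pstdev(frame_times_ms),        # spread of frame intervals
        "worst_ms": max(frame_times_ms),           # longest stall
    }

# Hypothetical one-second captures, 12 frames each.
smooth = [1000 / 12] * 12                    # evenly spaced frames, ~83 ms apart
jerky = [1000 / 60] * 10 + [416.67, 416.66]  # ten 60 FPS frames, then two long stalls

for name, capture in (("smooth", smooth), ("jerky", jerky)):
    s = frame_stats(capture)
    print(f"{name}: {s['avg_fps']:.1f} FPS, "
          f"stdev {s['stdev_ms']:.1f} ms, worst {s['worst_ms']:.1f} ms")
```

Same average, wildly different experience: the standard deviation and the worst frame time are what a stuttering link actually shows up in.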

My intuition is that I’m looking at a link that’s borderline. It works at some PCIe speeds, not so well at others.

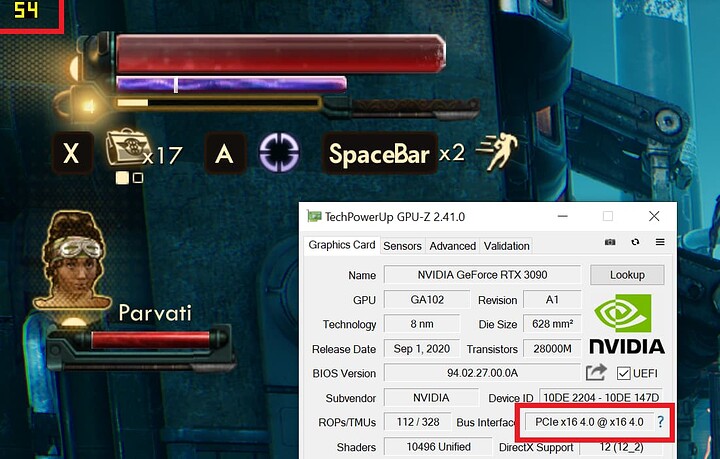

As you may or may not know, PCIe links don’t usually operate in a single mode from power-on to power-off. A tool like GPU-Z will show you that your GPU’s interface switches through all flavors of PCIe depending on workload, down to 1.0 when you’re on the Windows desktop doing nothing. That is why a dodgy GPU connection might still let you boot: you don’t need PCIe 4.0 graphics to display the Windows login screen.
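
GPU-Z is the Windows way to watch this. On Linux, the kernel exposes similar information as the `current_link_speed` and `max_link_speed` attributes of each PCI device under `/sys/bus/pci/devices/`. Here’s a minimal sketch that reads them and maps the transfer rate back to a PCIe generation. The device address in the usage line is a placeholder, and since the exact text in those files has varied between kernel versions, the parser only trusts the leading number.

```python
from pathlib import Path

# PCIe generation keyed by per-lane transfer rate in GT/s.
RATE_TO_GEN = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4, 32.0: 5, 64.0: 6}

def _gen_from_file(path):
    """Parse a sysfs link-speed file such as '16.0 GT/s PCIe' into a generation."""
    fields = path.read_text().split()
    try:
        return RATE_TO_GEN.get(float(fields[0]))
    except (IndexError, ValueError):  # empty file, or a non-numeric value like 'Unknown'
        return None

def read_link_speed(device_dir):
    """Return (current_gen, max_gen) for one PCI device directory in sysfs."""
    d = Path(device_dir)
    return _gen_from_file(d / "current_link_speed"), _gen_from_file(d / "max_link_speed")

# Usage (placeholder address; find your GPU's with `lspci`):
# print(read_link_speed("/sys/bus/pci/devices/0000:01:00.0"))
```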

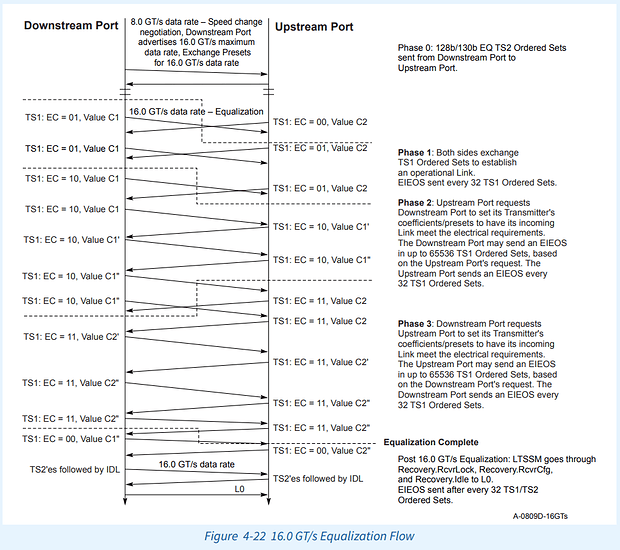

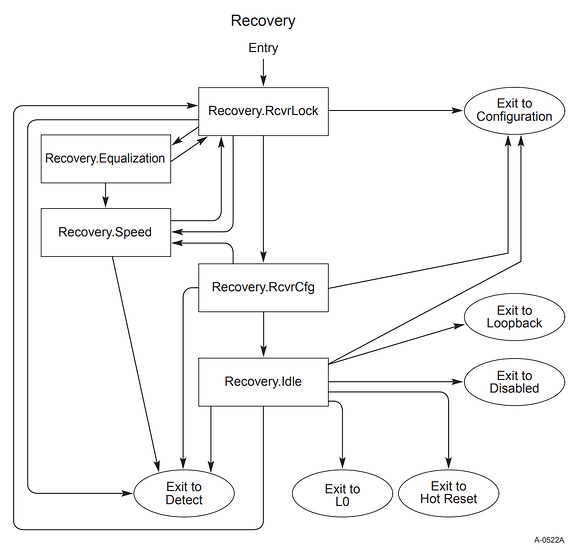

The process by which PCIe links dynamically change speed is called “recovery”. Here’s the state machine:

Yeah, I know, it’s Klingon to most people. Basically, this diagram tells you that recovery (where speed changes happen) can occur for any number of reasons, one of which is an “electrical idle” state on the link. That triggers a speed change (in this case to a lower speed) with a new equalization phase. In cases of abject failure, recovery can lead to the link being disabled, or to a reset. “L0” is the “perfect ending”: it’s the state where your PCIe link just works.
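
In the spec, that diagram is the LTSSM (Link Training and Status State Machine). Here’s a heavily simplified Python sketch of its top-level states, written from memory of the spec’s diagram rather than copied from it, so double-check before relying on it. Power-management states (L0s, L1, L2) and Loopback are left out, and all the sub-states inside Recovery, where the actual speed change and equalization happen, are collapsed into one state. It only checks whether a sequence of states is a legal walk.

```python
from enum import Enum, auto

class Ltssm(Enum):
    DETECT = auto()
    POLLING = auto()
    CONFIGURATION = auto()
    L0 = auto()
    RECOVERY = auto()
    HOT_RESET = auto()
    DISABLED = auto()

# Simplified top-level transitions (no L0s/L1/L2, no Loopback, no sub-states).
TRANSITIONS = {
    Ltssm.DETECT: {Ltssm.POLLING},
    Ltssm.POLLING: {Ltssm.CONFIGURATION, Ltssm.DETECT},
    Ltssm.CONFIGURATION: {Ltssm.L0, Ltssm.RECOVERY, Ltssm.DETECT, Ltssm.DISABLED},
    Ltssm.L0: {Ltssm.RECOVERY},
    Ltssm.RECOVERY: {Ltssm.L0, Ltssm.CONFIGURATION, Ltssm.DETECT,
                     Ltssm.HOT_RESET, Ltssm.DISABLED},
    Ltssm.HOT_RESET: {Ltssm.DETECT},
    Ltssm.DISABLED: {Ltssm.DETECT},
}

def is_legal_path(states):
    """True if every consecutive pair of states is an allowed transition."""
    return all(b in TRANSITIONS[a] for a, b in zip(states, states[1:]))

# A normal boot, then one speed change through Recovery, back to L0.
boot_and_retrain = [Ltssm.DETECT, Ltssm.POLLING, Ltssm.CONFIGURATION,
                    Ltssm.L0, Ltssm.RECOVERY, Ltssm.L0]
# The "abject failure" case: Recovery ends in a reset, back to Detect.
failure = [Ltssm.L0, Ltssm.RECOVERY, Ltssm.HOT_RESET, Ltssm.DETECT]
print(is_legal_path(boot_and_retrain), is_legal_path(failure))
```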

My guess is that by forcing PCIe 4.0 and then starting a video game, I cause the system to attempt to reach PCIe 4.0 (even though 3.0 would be more than enough). At 4.0 the signal quality is so bad that the GPU and/or CPU sees what looks like an electrical idle state, for long enough (128 µs, per the spec) to trigger the recovery state machine.
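
As I understand the spec, the 128 µs figure comes from electrical idle inference: in L0, a receiver that sees no flow-control update or SKP ordered set within a 128 µs window may infer that the link has gone idle. Here’s a toy Python model of that rule, assuming you had a list of timestamps (in µs) at which good traffic was received. Real hardware does this in the PHY and link layer, not in software, and the trace below is invented.

```python
IDLE_WINDOW_US = 128.0  # the 128 µs window mentioned above

def idle_gaps(rx_timestamps_us, window_us=IDLE_WINDOW_US):
    """Return (last_good, next_good) pairs separated by more than window_us.

    In this toy model, each gap is a stretch where the receiver would
    infer electrical idle and the link would head into Recovery.
    """
    ts = sorted(rx_timestamps_us)
    return [(a, b) for a, b in zip(ts, ts[1:]) if b - a > window_us]

# Hypothetical trace: traffic every 50 µs, then a 200 µs hole while the eye is closed.
trace = [0, 50, 100, 300, 350]
print(idle_gaps(trace))  # → [(100, 300)]
```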

My system ends up in a stuttering loop of:

- Equalizing at 8 GHz (PCIe 4.0)

- Working for a few video frames

- Recovering to 4 GHz (PCIe 3.0)

- Recovering to 8 GHz again, immediately
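
If you can log the link generation at regular intervals, that loop shows up as repeated 4 → 3 → 4 round trips. A minimal Python sketch, assuming a list of sampled generations (the log below is invented). Keep in mind that software polling is far slower than microsecond-scale recovery events, so a real log would only catch a fraction of them.

```python
def speed_changes(samples):
    """List (from_gen, to_gen) pairs wherever consecutive samples differ."""
    return [(a, b) for a, b in zip(samples, samples[1:]) if a != b]

def count_bounces(samples, high=4, low=3):
    """Count high -> low -> high round trips, i.e. the stutter loop."""
    changes = speed_changes(samples)
    return sum(
        1
        for first, second in zip(changes, changes[1:])
        if first == (high, low) and second == (low, high)
    )

# Invented log: idle desktop at Gen1, game starts, link bounces between Gen4 and Gen3.
log = [1, 1, 4, 4, 3, 4, 4, 3, 4, 1]
print(speed_changes(log))
print(count_bounces(log))  # → 2
```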

The answer is clear: I need to fix signal integrity at 8 GHz, and 8 GHz only.
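
For reference, those GHz figures are Nyquist frequencies. PCIe 1.0 to 4.0 use two-level NRZ signaling, one bit per unit interval, so the fundamental of an alternating 1010 pattern is half the transfer rate. A small Python table of the four generations, also showing usable per-lane bandwidth after line encoding (8b/10b for 1.0 and 2.0, 128b/130b for 3.0 and 4.0), makes the “8 GHz means PCIe 4.0” mapping explicit:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PcieGen:
    gen: int
    rate_gt_s: float   # transfer rate per lane, GT/s
    payload_bits: int  # line encoding: payload bits per block
    block_bits: int    # line encoding: total bits per block

    @property
    def nyquist_ghz(self):
        # NRZ: one bit per unit interval, so the fundamental is half the bit rate.
        return self.rate_gt_s / 2

    @property
    def lane_gbytes_s(self):
        # Usable GB/s per lane, per direction, after line-encoding overhead.
        return self.rate_gt_s * self.payload_bits / self.block_bits / 8

GENS = [
    PcieGen(1, 2.5, 8, 10),
    PcieGen(2, 5.0, 8, 10),
    PcieGen(3, 8.0, 128, 130),
    PcieGen(4, 16.0, 128, 130),
]

for g in GENS:
    print(f"PCIe {g.gen}.0: {g.rate_gt_s:g} GT/s, Nyquist {g.nyquist_ghz:g} GHz, "
          f"{g.lane_gbytes_s:.3f} GB/s per lane per direction")
```

By the same arithmetic, a 3.0 x16 link still carries about 15.75 GB/s in each direction.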

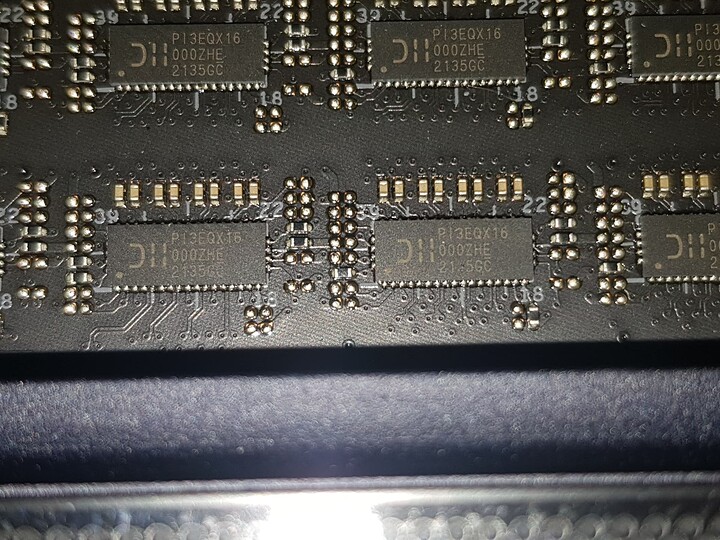

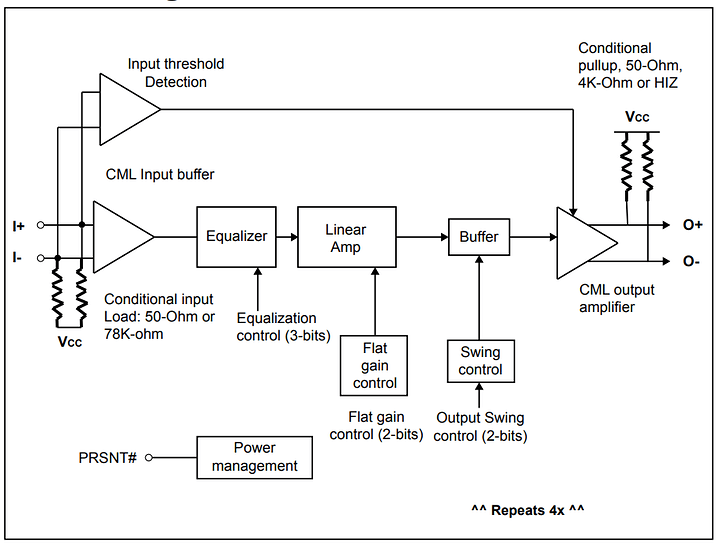

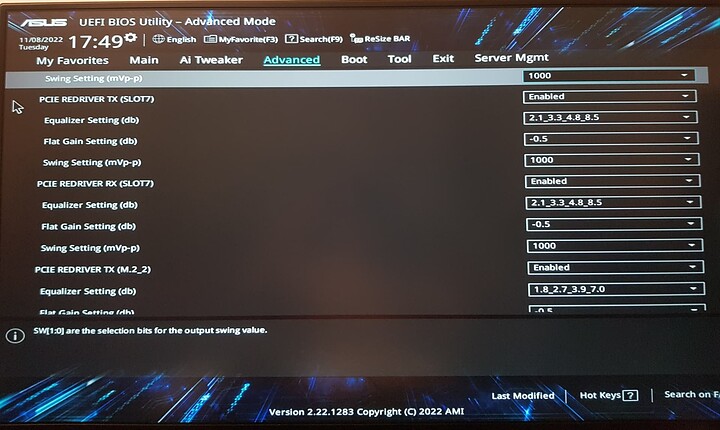

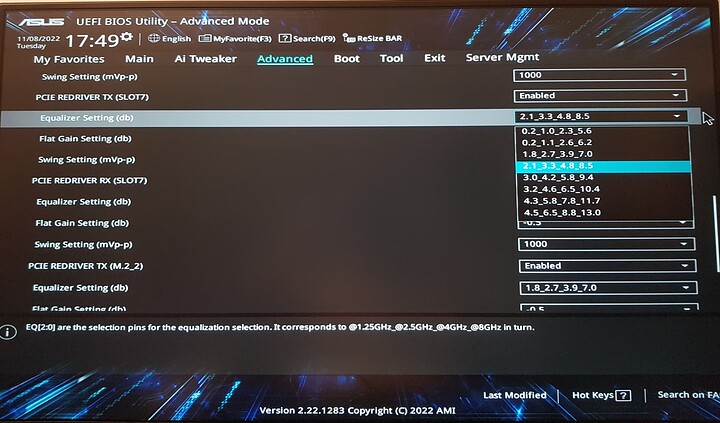

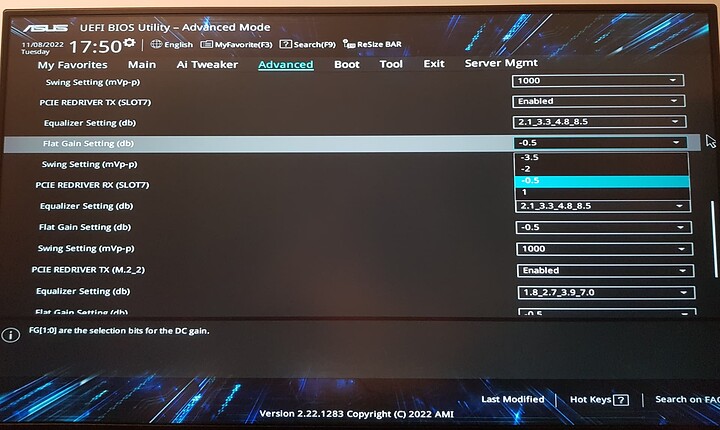

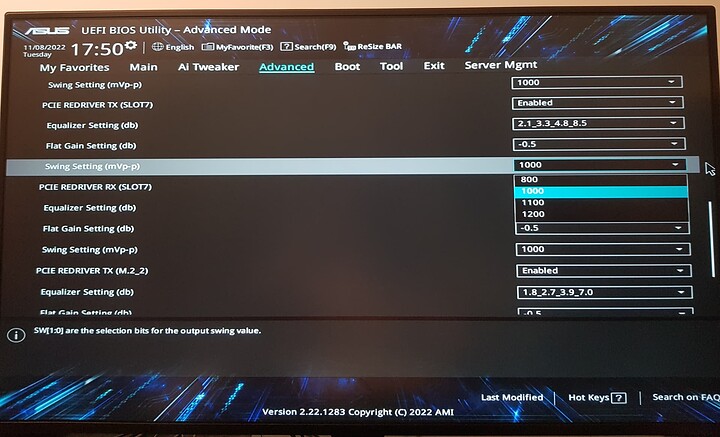

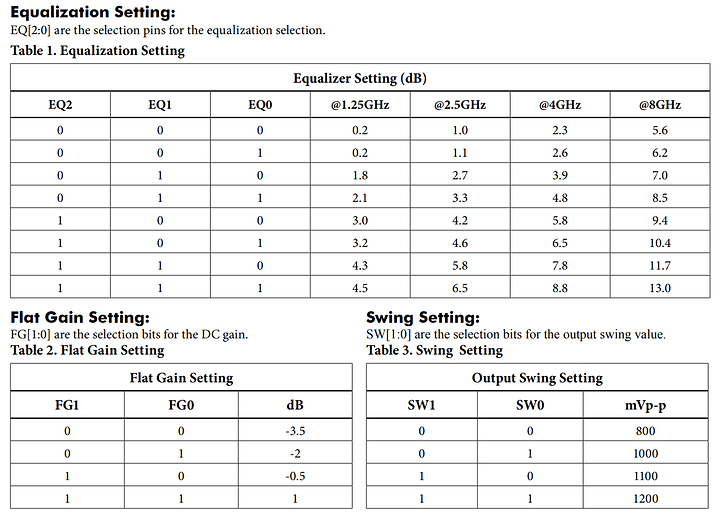

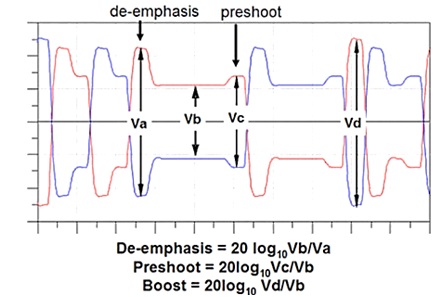

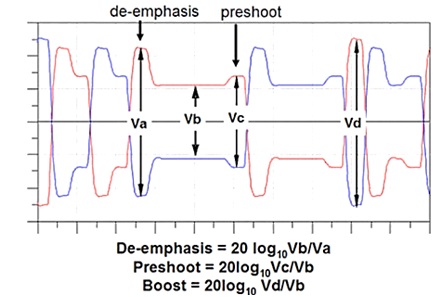

That’s why our redrivers have multiple filtering coefficients. Each one applies to one PCIe speed, from 1.0 to 4.0, whose Nyquist frequencies are 1.25 GHz, 2.5 GHz, 4 GHz and 8 GHz. A redriver lets us apply a different level of amplification to each frequency band. That matters because slower signals are much more tolerant of line loss, and you don’t want to saturate them. And you don’t want to saturate them because PCIe signals are analog: they aren’t exactly “ones and zeroes”. They look like this:

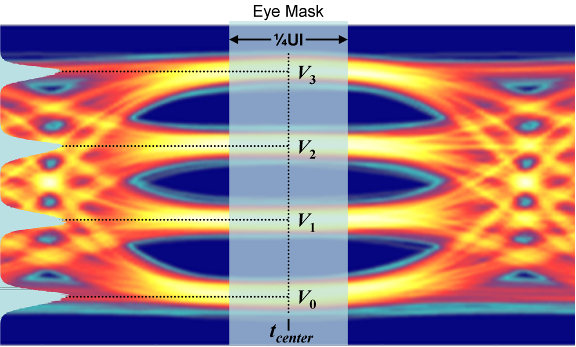

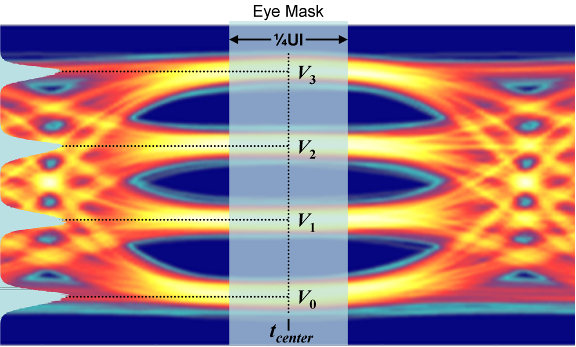

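That’s for PCIe 3.0 and 4.0 (5.0 is still two-level NRZ too), whereas PCIe 6.0 uses PAM4 signaling: four voltage levels, so each symbol carries two bits. On the old eye-diagram scope, that looks like three eyes stacked on top of each other.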
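
To make the difference concrete, here’s a minimal Python sketch of NRZ versus PAM4 symbol mapping. The Gray-coded table (neighboring levels differ by a single bit) is a common textbook choice and an assumption here; the real PCIe 6.0 PHY adds precoding and forward error correction that this ignores. It also computes why PAM4 eyes look so squashed: with the same peak-to-peak swing, each of the three PAM4 eyes is one third the height of the single NRZ eye, roughly a 9.5 dB penalty.

```python
import math

# Gray-coded PAM4 (relative levels): adjacent levels differ by one bit,
# so a one-level slicing error flips only one bit.
GRAY_PAM4 = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}

def pam4_encode(bits):
    """Map an even-length bit sequence to PAM4 levels, two bits per symbol."""
    if len(bits) % 2:
        raise ValueError("PAM4 needs an even number of bits")
    return [GRAY_PAM4[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]

def nrz_encode(bits):
    """Map bits to NRZ levels, one bit per symbol."""
    return [1 if b else -1 for b in bits]

# Same swing split into three eyes instead of one: eye height drops to 1/3.
EYE_HEIGHT_PENALTY_DB = 20 * math.log10(3)

print(nrz_encode([0, 0, 0, 1, 1, 1, 1, 0]))   # 8 symbols
print(pam4_encode([0, 0, 0, 1, 1, 1, 1, 0]))  # 4 symbols
print(f"{EYE_HEIGHT_PENALTY_DB:.2f} dB")
```

Half as many symbols for the same bits is the whole appeal of PAM4; the smaller eyes are the price.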

Because these signals are analog, the solution to our problem, as I said earlier, isn’t going to be as easy as cranking everything up to the maxxx. If we overshoot, we might actually degrade signal integrity at the lower speeds, and that would suck, because we actually need PCIe 1.0: it’s the starting point, just as you can’t start your car in fourth gear. If we mess up PCIe 1.0, we’ll be in a worse place than we are now.
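
Here’s a back-of-the-envelope Python model of that trade-off. Everything in it is hypothetical: I’m treating the redriver’s boost and the channel’s insertion loss at each Nyquist frequency as plain dB numbers that subtract, with an arbitrary ±3 dB comfort zone, and the loss figures are placeholders, not measurements from this board. Real equalization is messier, but the shape of the problem survives: a boost sized for the 8 GHz loss is far too much at 1.25 GHz.

```python
def residual_db(channel_loss_db, boost_db):
    """Net gain per band (GHz -> dB): boost minus channel loss.

    Positive: over-equalized (overshoot, risk of saturation).
    Negative: under-equalized (the eye is still closed by loss).
    """
    return {band: boost_db[band] - loss for band, loss in channel_loss_db.items()}

def classify(residual, tolerance_db=3.0):
    """Label each band 'over', 'under' or 'ok' against an arbitrary tolerance."""
    labels = {}
    for band, r in residual.items():
        if r > tolerance_db:
            labels[band] = "over"
        elif r < -tolerance_db:
            labels[band] = "under"
        else:
            labels[band] = "ok"
    return labels

# Placeholder numbers, NOT measurements from this board.
loss = {1.25: 3.0, 2.5: 5.0, 4.0: 9.0, 8.0: 16.0}
max_everything = {band: 16.0 for band in loss}         # "crank it to the maxxx"
per_band = {1.25: 3.0, 2.5: 5.0, 4.0: 9.0, 8.0: 15.0}  # tuned band by band

print(classify(residual_db(loss, max_everything)))
print(classify(residual_db(loss, per_band)))
```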

BUT WAIT, there’s another wrinkle. PCIe is a full-duplex interface. There’s every chance that our signal integrity issues are asymmetrical, meaning they are worse on the outgoing lanes than on the incoming lanes (or vice versa). So we can’t just use the same settings on the TX and RX redrivers: once again, we might saturate the signal at the lower frequencies and shoot ourselves in the foot.
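
In data-structure terms, the settings therefore need two axes: direction and PCIe generation. A minimal Python sketch, where the gain codes, their 0 to 7 range and the meaning of `tx` (CPU to GPU) and `rx` (GPU to CPU) are all my assumptions, not the real redriver’s register map:

```python
from dataclasses import dataclass, field

GENERATIONS = (1, 2, 3, 4)
MAX_CODE = 7  # hypothetical top of the gain-code range

def _baseline():
    # Hypothetical starting point: one gain code per PCIe generation.
    return {gen: 0 for gen in GENERATIONS}

@dataclass
class RedriverSettings:
    tx: dict = field(default_factory=_baseline)  # assumed CPU -> GPU lanes
    rx: dict = field(default_factory=_baseline)  # assumed GPU -> CPU lanes

    def bump(self, direction, gen, step=1):
        """Raise one generation's gain in one direction only, clamped to MAX_CODE."""
        gains = getattr(self, direction)
        gains[gen] = min(gains[gen] + step, MAX_CODE)
        return gains[gen]

settings = RedriverSettings()
settings.bump("tx", 4)  # touch only the 8 GHz (PCIe 4.0) band, assumed CPU -> GPU side
print(settings.tx, settings.rx)
```

Keeping each direction’s dictionary separate means boosting the PCIe 4.0 band on one side never drags the 1.0 band or the other direction along with it.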

So here’s my plan for the next step :

- Find out as much as I can about the topology of the motherboard’s PCIe lanes. Unfortunately, Asus does not provide CAD files for their products, for some reason (cough capitalism cough).

- Based on that, determine which direction(s) require which amount of signal boost.

- Increase the gain in that direction, little by little. That is another complicated aspect.

- Test each setting with a round of Outer Worlds. Parvati is the cute engineer girlfriend I’ve never had.
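
The last two steps boil down to a simple sweep. Here’s a Python sketch where `apply_gain` and `run_test` are hypothetical callbacks: the first would program one PCIe 4.0 gain code for one direction (however that ends up being done on this board), the second stands in for a round of Outer Worlds and returns True if the frame rate stayed smooth. Stopping at the first passing code keeps the boost as low as possible, which is the whole point of not cranking things to the max.

```python
def sweep_gain(apply_gain, run_test, start=0, max_code=7):
    """Raise one gain code step by step; return the first code that passes, else None.

    apply_gain(code): hypothetical callback that programs the gain code.
    run_test(): hypothetical callback, True if the stress test ran without stutter.
    """
    for code in range(start, max_code + 1):
        apply_gain(code)
        if run_test():
            return code  # lowest passing gain: stop before overshooting
    return None          # nothing in range worked

# Usage with stand-ins: pretend the link becomes stable from code 3 upward.
current = {"code": None}

def fake_apply(code):
    current["code"] = code

def fake_test():
    return current["code"] >= 3

print(sweep_gain(fake_apply, fake_test))  # → 3
```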

Long post again. Time to eat and get to work. I’ll see you on the other side.