Stop right there.

Snapshots are a feature of either the file system, the virtual disk, or the NAS / SAN. Let’s start with the FS.

Take ZFS, Btrfs and LVM as examples. ZFS and Btrfs have built-in snapshot capabilities, and taking a snapshot of a ZFS file system is easy. LVM is just a logical volume manager (it’s in the name, duh), so you put other file systems on top of it, like ext4 or XFS. When you take an LVM snapshot, LVM keeps the data as it was at that moment and uses free space in the volume group (VG), outside the logical volume itself, to track the blocks that change afterwards (copy-on-write, or COW), with pointers tying the two together. That only works if the VG has enough free space. Once you delete a snapshot, its space becomes free for the taking, either by a new logical volume in the volume group or by a new snapshot.
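For the LVM case, a minimal sketch of what that looks like on the command line. The volume group `vg0`, the logical volume `data`, the snapshot name and the 1G size are all made-up names for illustration, and the `run` wrapper is just a hypothetical helper that prints commands instead of executing them unless you set `DRY_RUN=0`:

```shell
#!/bin/sh
# Dry-run by default: commands are printed, not executed.
# Set DRY_RUN=0 to actually run them (needs root and a real volume group).
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Create a snapshot of /dev/VG/LV, reserving SIZE of free VG space
# to hold the blocks that change after the snapshot is taken.
lvm_snap_create() {  # usage: lvm_snap_create VG LV SNAPNAME SIZE
    run lvcreate --size "$4" --snapshot --name "$3" "/dev/$1/$2"
}

# Remove the snapshot; its space goes back to the volume group.
lvm_snap_remove() {  # usage: lvm_snap_remove VG SNAPNAME
    run lvremove --yes "/dev/$1/$2"
}

# Hypothetical names, adjust to your own setup.
lvm_snap_create vg0 data data_snap 1G
lvm_snap_remove vg0 data_snap
```

Checking `vgs` first tells you how much free space the volume group has left for the snapshot.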

Let’s get into virtual disks. VMware’s VMDKs and KVM’s qcow2 disk format have built-in support for snapshotting. Much like LVM, these work on the copy-on-write principle: changed data is written to another location and pointed to. qcow2 literally stands for QEMU Copy On Write (version 2). Keep in mind KVM also has the raw disk format, which is just a plain image of the disk with no extra metadata. It has better performance, and if you don’t need snapshots you can select that, but by default Proxmox uses qcow2 in the absence of another snapshot engine. If you use Proxmox’s built-in storage, it will use LVM(-thin), which is basically LVM, except it only tells the FS on top that it has a certain size without reserving all of it up front, so you can overprovision a server (I hate overprovisioning).
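Outside of Proxmox, qcow2 internal snapshots can be handled directly with `qemu-img snapshot`. A rough sketch, assuming a disk image called `vm-disk.qcow2` and a snapshot called `before-upgrade` (both invented for the example), and assuming the VM using the image is shut down while you do this. The `run` wrapper is again a hypothetical dry-run helper:

```shell
#!/bin/sh
# Dry-run by default: commands are printed, not executed.
# Set DRY_RUN=0 to run them against a real qcow2 image.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# All functions take the image file first, then the snapshot name.
qcow2_snap_create() { run qemu-img snapshot -c "$2" "$1"; }  # create
qcow2_snap_list()   { run qemu-img snapshot -l "$1"; }       # list
qcow2_snap_revert() { run qemu-img snapshot -a "$2" "$1"; }  # apply (revert)
qcow2_snap_delete() { run qemu-img snapshot -d "$2" "$1"; }  # delete

# Hypothetical image and snapshot names.
qcow2_snap_create vm-disk.qcow2 before-upgrade
qcow2_snap_list   vm-disk.qcow2
```

None of this works on a raw image, since raw has nowhere to store the snapshot metadata.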

The last snapshot type is the NAS / SAN one. To make it easy, imagine that you have another Proxmox or a TrueNAS server running ZFS and exporting an NFS share. You give the NFS share to another server, and if you want to take a snapshot of it, you just take a ZFS snapshot on the NAS itself. If you are sharing an entire partition, you have to snap it in full. If you have your NFS shares split into multiple smaller chunks, you may only need to snap the particular one you care about.
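As a sketch of the NAS case: the snapshot is taken on the NAS, not on the server that mounts the share. The host name `nas`, the dataset `tank/nfs/media` and the snapshot label below are invented for the example, and root SSH access to the NAS is an assumption too. The `run` wrapper is a hypothetical dry-run helper that only prints the command unless `DRY_RUN=0`:

```shell
#!/bin/sh
# Dry-run by default: commands are printed, not executed.
# Set DRY_RUN=0 to actually connect to the NAS.
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Snapshot a single dataset on the NAS over SSH.
# If each NFS share is its own dataset, only that share gets snapped.
nas_snapshot() {  # usage: nas_snapshot HOST DATASET SNAPNAME
    run ssh "root@$1" zfs snapshot "$2@$3"
}

# Hypothetical host, dataset and label.
nas_snapshot nas tank/nfs/media 2022-07-02_10am
```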

Now, to LXC. Proxmox by default, if I’m not mistaken, doesn’t use any of the above for LXC. I believe it’s just a folder inside one of Proxmox’s partitions, which is why you cannot take a snapshot of it. It’s not that you are unable to: you can do either an LVM or a ZFS snapshot, depending on what you installed Proxmox on, but you would have to snapshot the entire Proxmox FS, not just the one LXC. Looking at Proxmox’s LXC creation dialog, it seems you can only select the storage pool (local LVM, your ZFS pool or your NFS share). You cannot create a virtual disk in a format of your choice, for example qcow2, like you can with VMs.

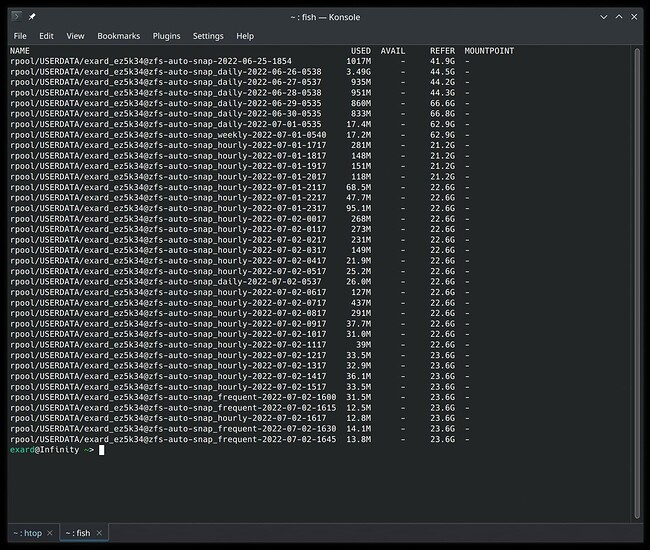

This is the reason why you cannot take a snapshot in Proxmox. However, interestingly enough, my last Proxmox box standing has 2 containers on its internal ZFS pool, and each container’s storage is a subvolume of the main pool. In theory, I should be able to take a snapshot of the LXC container. The GUI doesn’t seem to allow it, but lo and behold:

    root@ps12:~# zfs list
    NAME                        USED  AVAIL  REFER  MOUNTPOINT
    zastoru                    2.93T   595G   112K  /zastoru
    zastoru/data                 96K   595G    96K  /zastoru/data
    zastoru/subvol-115-disk-0   653M  11.4G   653M  /zastoru/subvol-115-disk-0
    zastoru/subvol-116-disk-0  57.1M  7.94G  57.1M  /zastoru/subvol-116-disk-0
    zastoru/vm-104-disk-0      22.6G   595G  17.5G  -
    zastoru/vm-109-disk-0      74.1G   662G  6.99G  -
    zastoru/vm-109-disk-1      1.60T   595G  1.60T  -
    zastoru/vm-110-disk-0      31.9G   595G  31.9G  -
    zastoru/vm-111-disk-0      1.16T  1.33T   422G  -
    zastoru/vm-112-disk-0      10.8G   605G   506M  -
    zastoru/vm-114-disk-0      12.3G   605G  1.96G  -
    zastoru/vm-117-disk-0      10.3G   598G  6.90G  -
    zastoru/vm-118-disk-0      10.3G   599G  5.97G  -
    zastoru/vm-119-disk-0      6.19G   598G  2.69G  -
    zastoru/vm-119-disk-1      2.06G   597G    60K  -
    root@ps12:~# zfs snapshot -r zastoru/subvol-115-disk-0@2022-07-02_10am
    root@ps12:~# zfs list -t snapshot
    NAME                                        USED  AVAIL  REFER  MOUNTPOINT
    zastoru/subvol-115-disk-0@2022-07-02_10am     0B      -   653M  -
    zastoru/vm-104-disk-0@--head--             5.05G      -  16.0G  -

To revert to a snapshot (careful: -r also destroys any snapshots newer than the one you roll back to):

    root@ps12:~# zfs rollback -r zastoru/subvol-115-disk-0@2022-07-02_10am

And to destroy it:

    root@ps12:~# zfs destroy zastoru/subvol-115-disk-0@2022-07-02_10am
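If you end up doing this regularly, the three commands above can be wrapped in a small script. This is only a sketch: the function names and the `run` dry-run wrapper are made up for the example, and the default label format just mimics the date-style name I used above. It prints the commands unless you set `DRY_RUN=0`:

```shell
#!/bin/sh
# Dry-run by default: commands are printed, not executed.
# Set DRY_RUN=0 to run them for real (as root on the Proxmox host).
DRY_RUN="${DRY_RUN:-1}"

run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Take a snapshot; if no label is given, use the current date and time.
ct_snap_create() {  # usage: ct_snap_create DATASET [LABEL]
    label="${2:-$(date +%Y-%m-%d_%H%M)}"
    run zfs snapshot "$1@$label"
}

# Roll back to a snapshot. -r also destroys any newer snapshots.
ct_snap_rollback() {  # usage: ct_snap_rollback DATASET LABEL
    run zfs rollback -r "$1@$2"
}

# Destroy a snapshot.
ct_snap_destroy() {  # usage: ct_snap_destroy DATASET LABEL
    run zfs destroy "$1@$2"
}

ct_snap_create zastoru/subvol-115-disk-0 2022-07-02_10am
```

Stopping the container before a rollback is probably the safer option, since the file system changes underneath it.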

All hail ZFS.