When searching around I don’t find many GPU passthrough success stories from the Coffee Lake platform, so I figured I should share what I’ve tested so far.

TL;DR

Bottom line: it works, with no notable surprises. IOMMU grouping is really good on my ASRock Z370M Pro4. Passthrough of an MSI GeForce GTX 970 to Win8.1 Pro N works fine with KVM and vfio, given the standard nvidia-spoofing procedures (see below).

Here follow some setup details, tests, and notes.

Basics

Hardware

- ASRock Z370M pro4

- Intel Core i7 8700 (non-K), 3.2GHz (4.3-4.6GHz turbo)

- Corsair Vengeance LPX white DDR4-2666, 32GB (2x16), CL16

- MSI GeForce GTX 970, 4GB

- Samsung SM961 256GB NVMe x4 on M2_2 (Xubuntu 17.10)

- OCZ Vertex4 256GB on SATA3 (Windows 8 Pro N updated to 8.1)

- BeQuiet Dark Rock 3

- Seasonic X-750 (yes, kinda overkill for this build.)

- Fractal Design Define Mini C

Linux + KVM subsystem versions

- Xubuntu 17.10, vanilla everything (no special PPAs)

- Linux 4.13.0-16-generic

- libvirtd (libvirt) 3.6.0

- QEMU emulator version 2.10.1 (Debian 1:2.10+dfsg-0ubuntu3)

- OVMF version 0~20170911.5dfba97c-1

IOMMU groups

IOMMU Group 0 00:00.0 Host bridge [0600]: Intel Corporation Device [8086:3ec2] (rev 07)

IOMMU Group 10 00:1f.0 ISA bridge [0601]: Intel Corporation Device [8086:a2c9]

IOMMU Group 10 00:1f.2 Memory controller [0580]: Intel Corporation 200 Series PCH PMC [8086:a2a1]

IOMMU Group 10 00:1f.3 Audio device [0403]: Intel Corporation 200 Series PCH HD Audio [8086:a2f0]

IOMMU Group 10 00:1f.4 SMBus [0c05]: Intel Corporation 200 Series PCH SMBus Controller [8086:a2a3]

IOMMU Group 11 00:1f.6 Ethernet controller [0200]: Intel Corporation Ethernet Connection (2) I219-V [8086:15b8]

IOMMU Group 12 04:00.0 Network controller [0280]: Intel Corporation Wireless 8260 [8086:24f3] (rev 3a)

IOMMU Group 13 05:00.0 Non-Volatile memory controller [0108]: Samsung Electronics Co Ltd NVMe SSD Controller SM961/PM961 [144d:a804]

IOMMU Group 1 00:01.0 PCI bridge [0604]: Intel Corporation Skylake PCIe Controller (x16) [8086:1901] (rev 07)

IOMMU Group 1 01:00.0 VGA compatible controller [0300]: NVIDIA Corporation GM204 [GeForce GTX 970] [10de:13c2] (rev a1)

IOMMU Group 1 01:00.1 Audio device [0403]: NVIDIA Corporation GM204 High Definition Audio Controller [10de:0fbb] (rev a1)

IOMMU Group 2 00:02.0 VGA compatible controller [0300]: Intel Corporation Device [8086:3e92]

IOMMU Group 3 00:14.0 USB controller [0c03]: Intel Corporation 200 Series PCH USB 3.0 xHCI Controller [8086:a2af]

IOMMU Group 3 00:14.2 Signal processing controller [1180]: Intel Corporation 200 Series PCH Thermal Subsystem [8086:a2b1]

IOMMU Group 4 00:16.0 Communication controller [0780]: Intel Corporation 200 Series PCH CSME HECI #1 [8086:a2ba]

IOMMU Group 5 00:17.0 SATA controller [0106]: Intel Corporation 200 Series PCH SATA controller [AHCI mode] [8086:a282]

IOMMU Group 6 00:1b.0 PCI bridge [0604]: Intel Corporation 200 Series PCH PCI Express Root Port #17 [8086:a2e7] (rev f0)

IOMMU Group 7 00:1b.2 PCI bridge [0604]: Intel Corporation 200 Series PCH PCI Express Root Port #19 [8086:a2e9] (rev f0)

IOMMU Group 8 00:1b.3 PCI bridge [0604]: Intel Corporation 200 Series PCH PCI Express Root Port #20 [8086:a2ea] (rev f0)

IOMMU Group 9 00:1b.4 PCI bridge [0604]: Intel Corporation 200 Series PCH PCI Express Root Port #21 [8086:a2eb] (rev f0)

I have tried all PCIe slots except the uppermost x1 slot (as it is shadowed by the GTX 970 cooler). Each of these slots gets its own IOMMU group, even the x4 one (in an x16 physical slot). I suppose this means that all slots except the main x16 one sit on the chipset. I also suppose it means that a second external GPU could sit in the x16 (x4) slot and get its own IOMMU group, though working at chipset-constrained speed, but I have not tested this.
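For anyone who wants to compare their own board, here is a minimal sketch of how a grouping like the one above can be read out of sysfs. It assumes the kernel exposes groups under /sys/kernel/iommu_groups (which it does when the IOMMU is enabled); the function name list_iommu_groups is just my own label, and the output only shows PCI addresses, not the lspci descriptions in the listing above.

```python
from pathlib import Path


def list_iommu_groups(root="/sys/kernel/iommu_groups"):
    """Return {group_number: [pci_address, ...]} as found under `root`."""
    groups = {}
    base = Path(root)
    if not base.is_dir():
        # IOMMU disabled in firmware/kernel, or path not present
        return groups
    for group_dir in base.iterdir():
        if not group_dir.name.isdigit():
            continue
        devices_dir = group_dir / "devices"
        if not devices_dir.is_dir():
            continue
        # Each entry in devices/ is named after a PCI address, e.g. 0000:01:00.0
        groups[int(group_dir.name)] = sorted(p.name for p in devices_dir.iterdir())
    # Sort numerically so group 2 comes before group 10
    return dict(sorted(groups.items()))


if __name__ == "__main__":
    for group, devices in list_iommu_groups().items():
        for address in devices:
            print(f"IOMMU Group {group} {address}")
```

Devices that share a group number have to be passed through together, which is why the GTX 970 and its HDMI audio function sitting alone with the x16 root port in group 1 is the result you want to see.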

Hardware notes

I use IGD for host graphics on Linux, GTX970 for passthrough. This needs IGD to be primary graphics in UEFI together with multigpu = enabled. The option of using IGD for host graphics is IMO the main selling point to choose Coffee Lake over other architectures for a Linux+Windows work/gamestation. It allows a silent and power-efficient system while still providing (barely) enough cores.

Currently I have no good way of organising input, as I use a single keyboard and mouse with a KVM switch. I have a PCIe USB controller from a former passthrough machine, but its ports are misaligned with the chassis back plate, so I had to order a new one. I expect this will be straightforward to add. Host and Windows VM will then have exclusive physical USB ports, so no emulation of peripherals will be needed. USB will also handle VM sound; I have not tested qemu's emulated sound.

The eagle-eyed reader spots a WiFi card in the device list. It is a Gigabyte add-on card which sits in a PCIe x1 slot, so it's not part of the motherboard.

VM setup notes

I used virt-manager to create VMs, followed by 'virsh edit' for final tweaks. I have not optimised much yet (no vCPU pinning or hugepages), but hyperv extensions are enabled. Red Hat's virtio drivers are installed in Windows. Most testing below has been carried out with 4 real cores passed through to the VM (host-passthrough, sockets = 1, cores = 4, threads = 2).

In principle I could give the VM the entire SATA controller or the entire nvme ssd, as both are in their own IOMMU groups and the Vertex4 ssd is the only SATA device. I have not tested this though. Instead the Vertex4 (sda) is sent to the VM like this:

<disk type='block' device='disk'>

<driver name='qemu' type='raw' cache='none' io='native' discard='unmap'/>

<source dev='/dev/sda'/>

<target dev='sda' bus='scsi'/>

<boot order='1'/>

<address type='drive' controller='0' bus='0' target='0' unit='0'/>

</disk>

...

<controller type='scsi' index='0' model='virtio-scsi'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x0a' function='0x0'/>

</controller>

Note that the disk itself is not defined as a virtio device; it only becomes one indirectly, through the emulated SCSI controller it is attached to. Virtio-scsi is AFAIK the only way to get Windows TRIM to work through the virtualisation layers down to the hardware, unless (presumably) you do PCIe passthrough of a physical controller.

EDIT 2017-11-18: Forgot the option discard='unmap' in the driver element of the above XML. This is needed for TRIM commands from the VM to properly reach the SSD. I have updated this now. /END EDIT
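Since I managed to forget this option myself, here is a small sketch for checking it. It assumes you feed it the domain XML as a string (for example the output of 'virsh dumpxml' for your VM); the function name disks_missing_discard is made up for illustration, and it only looks at the discard attribute, not at whether the disk actually sits on a virtio-scsi controller.

```python
import xml.etree.ElementTree as ET


def disks_missing_discard(domain_xml):
    """Return target names of disks whose <driver> lacks discard='unmap'."""
    root = ET.fromstring(domain_xml)
    missing = []
    for disk in root.iter("disk"):
        # Skip cdrom/floppy entries; only real disks are interesting for TRIM
        if disk.get("device") != "disk":
            continue
        driver = disk.find("driver")
        target = disk.find("target")
        name = target.get("dev") if target is not None else "?"
        if driver is None or driver.get("discard") != "unmap":
            missing.append(name)
    return missing
```

An empty list means every disk in the domain has discard='unmap' set. Whether TRIM then really reaches the SSD still has to be verified from inside Windows.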

For getting Geforce drivers to work in Windows while still using hyperv optimisations I have to add the following to the libvirt config file:

<hyperv>

...

<vendor_id state='on' value='nvidia_sucks'/> <!-- 'value' can be almost any string -->

</hyperv>

<kvm>

<hidden state='on'/>

</kvm>

I include this note as there has been some confusion recently as to which workarounds are needed to fool the NVIDIA drivers into starting despite passthrough. (This is an ongoing arms race and a reason for caution before buying a GeForce card for passthrough: future drivers may block this usage in new ways.) Both of the above additions together constitute the only way AFAIK to have any hyperv extensions active while still having a working GeForce passed through. Note that doing this purely in XML as above requires a recent libvirt; not long ago it was possible only with qemu command line injections.
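As both additions are needed together, a quick sanity check can save some head-scratching. The following is a rough sketch, assuming the domain XML is available as a string (for example from 'virsh dumpxml') and that the hyperv and kvm elements sit under the domain's features element, as they do in libvirt. The function name nvidia_workarounds_present is my own invention.

```python
import xml.etree.ElementTree as ET


def nvidia_workarounds_present(domain_xml):
    """Report whether both spoofing elements are enabled in a domain XML."""
    root = ET.fromstring(domain_xml)
    vendor = root.find("./features/hyperv/vendor_id")
    hidden = root.find("./features/kvm/hidden")
    return {
        # Custom Hyper-V vendor string must be switched on and non-empty
        "hyperv_vendor_id": (
            vendor is not None
            and vendor.get("state") == "on"
            and bool(vendor.get("value"))
        ),
        # KVM signature must be hidden from the guest
        "kvm_hidden": hidden is not None and hidden.get("state") == "on",
    }
```

Both values should be True for the setup described above. This only inspects the config; it cannot tell whether a given driver version will accept it.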

(basic) Performance

Some very initial performance numbers, baremetal vs virtual Win 8.1 pro N. Baremetal and virtual performance are recorded from the same installed Win8.1 image (see note on “duel booting” below).

Unigine Heaven 4 ultra settings, 1080p: no discernible difference between virtual and baremetal - around 1300 points, ~25-110fps (average ~55) in both cases

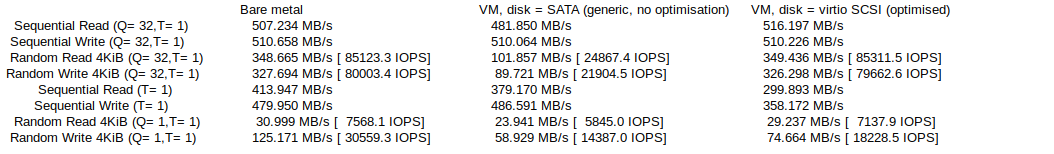

Flash performance (CrystalDiskMark), Baremetal vs VM (generic disk model) vs VM (optimised disk model)

(OCZ Vertex4 256Gb / SATA3)

Notable differences primarily in IOPS, where the generic SATA (emulated) device model suffers. But there is still room for optimisation relative to baremetal, and curiously there are areas where emulated SATA out-performs virtio-SCSI. Note again that many “best practices” optimisations (such as hugepages) are still not configured.

“Duel” booting - some caveats

I tried @wendell's recently suggested "duel booting" procedure: first I installed Win8.1 to the Vertex4 SSD on baremetal, then passed the SSD through as a raw block device to a VM. It works sort of as expected, but:

- Booting the VM triggers re-activation of Win8.1, given that it was already activated on baremetal. I have not tried activating Windows in the virtualized state, as I suppose that will just require a new re-activation on the next baremetal boot… Any advice on this? I am suspecting this is MS-intended behaviour and in line with the license, unfortunately.

- Every time I change between VM boot / baremetal boot, Win8 stays at "Windows is reconfiguring your devices" for some time. In addition, if this happens while on baremetal, Windows changes the UEFI boot order so that its disk is first next time, bypassing the GRUB loader on the other disk. (This issue alone is reason enough to never let Windows near the real hardware again!)

Because of these issues I will probably give up duel booting after I’m satisfied with my testing, and run Windows as a VM only. I am also considering adding some spinning storage and back Windows with ZFS sparse volumes, using the ssd as cache. This would simplify backups and be more disk-space efficient.

These are my notes so far from this setup. Note that I am far from done testing, but I see enough critical parts working to consider Coffee Lake a viable GPU passthrough platform.