Hi everyone! Long-time lurker, first-time poster.

I’ve got a replicated GlusterFS volume running on Proxmox: two data bricks plus an arbiter brick on another node. This Gluster cluster provides storage for my three-node Kubernetes cluster.

The Gluster nodes run GlusterFS 10.1 on Ubuntu 22.04.1, each with 1 TB of HDD storage and a 19 GB NVMe cache.

Let me give you some specs:

Proxmox nodes:

- CPU: i5-6500T
- RAM: 16 GB
- HDD (pve01): Seagate ST2000LM007 (2 TB, 2.5")
- SSD (pve01): Crucial CT500P2SSD8 (500 GB NVMe M.2)
- HDD (pve02): WD WDC_WD20SPZX (Blue, 2 TB, 2.5")
- SSD (pve02): Samsung 970 EVO Plus (500 GB NVMe M.2)

Each Gluster VM has 2 GB of RAM, 1 vCPU, 1000 GB of HDD and 20 GB of SSD.

I'm using LVM caching to speed up the volume.

`lvs` output on gluster01 (pve01):

    LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert
    data gluster Cwi-aoC--- <1000.00g [lv_cache_cpool] [data_corig] 15.41 0.50 0.18
    ubuntu-lv ubuntu-vg -wi-ao---- <15.00g

`lvs` output on gluster02 (pve02):

    LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert
    data gluster Cwi-aoC--- <1000.00g [lv_cache_cpool] [data_corig] 99.99 0.74 0.01
    logs ubuntu-vg -wi-ao---- 10.00g
    ubuntu-lv ubuntu-vg -wi-ao---- <15.00g

I've noticed that the Gluster node on pve01 (gluster01) has much higher latency than the one on pve02.

pve01 also has iowait consistently above 10%, while pve02 usually stays under 5%.

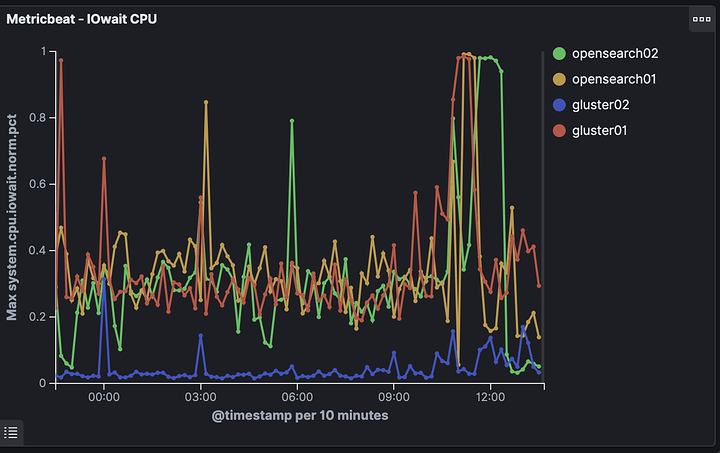
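To cross-check these iowait figures independently of the monitoring stack, iowait can be computed from two samples of the aggregate `cpu` line in `/proc/stat`. This is a minimal Python sketch, assuming the field order documented in proc(5) (user, nice, system, idle, iowait, irq, softirq, steal, guest, guest_nice); `read_cpu_line` and `iowait_pct` are hypothetical helper names. proc(5) itself notes that the iowait value is not fully reliable, so treat it as a rough signal.

```python
import time

# Field order of the aggregate "cpu" line in /proc/stat, per proc(5):
# user nice system idle iowait irq softirq steal guest guest_nice
IOWAIT_INDEX = 4
# guest and guest_nice are already included in user and nice,
# so only the first eight fields are summed as total CPU time.
TOTAL_FIELDS = 8


def read_cpu_line(path="/proc/stat"):
    """Return the aggregate 'cpu ' line (hypothetical helper)."""
    with open(path) as f:
        for line in f:
            if line.startswith("cpu "):
                return line
    raise RuntimeError("no aggregate cpu line found")


def iowait_pct(before, after):
    """Percentage of CPU time spent in iowait between two 'cpu' lines."""
    b = [int(x) for x in before.split()[1:]]
    a = [int(x) for x in after.split()[1:]]
    total = sum(a[:TOTAL_FIELDS]) - sum(b[:TOTAL_FIELDS])
    if total <= 0:
        return 0.0  # no elapsed ticks between samples
    return 100.0 * (a[IOWAIT_INDEX] - b[IOWAIT_INDEX]) / total


if __name__ == "__main__":
    first = read_cpu_line()
    time.sleep(5)  # sampling interval in seconds
    print(f"iowait: {iowait_pct(first, read_cpu_line()):.1f}%")
```

Running it both on each Proxmox host and inside each Gluster VM would show whether the wait is visible at the hypervisor level or only in the guest.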

These Proxmox nodes also run some OpenSearch nodes; here are their iowait metrics:

My questions are:

- I understand that the SSD on pve01 is slower than the one on pve02, but can that alone explain the difference in performance?

- Why would gluster01 have its cache used blocks at 15.59% while gluster02 is at 99%?