Hey guys,

I’ve got a bit of a problem. A friend brought me a “NETEYE surveillance computer”, and after turning it on, it looks like it’s running CentOS and software called Netavis Observer.

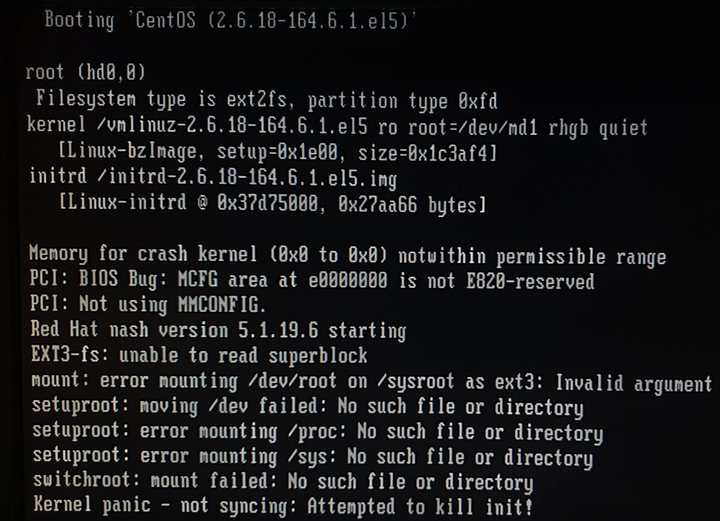

It won’t boot, and it shows errors about superblocks being unreadable.

It won’t let me edit any of the boot options without a password, so I put CentOS on a USB drive, booted from it and opened the disk utility. It showed two disks, several partitions and four degraded RAID devices.

I went in and edited the RAID components, and it now shows as “running” instead of degraded, with the components reported as “Fully Synchronized”. A filesystem check reported problems, and after a while it said that it had made filesystem modifications. The filesystem now shows up as clean.
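In case anyone wants the array state from a terminal rather than the disk utility, here is a minimal Python sketch of how it could be read from `/proc/mdstat`. It assumes the arrays are Linux software RAID (md) and that Python 3 is available on the live system. The `parse_mdstat` helper and the `SAMPLE` text are made up for illustration; the sample is not output from this machine.

```python
import os
import re

def parse_mdstat(text):
    """Return {array: {"state": str, "degraded": bool}} from /proc/mdstat text."""
    arrays = {}
    current = None
    for line in text.splitlines():
        # Array header line, e.g. "md0 : active raid1 sda1[0] sdb1[1]"
        m = re.match(r"^(md\d+)\s*:\s*(\S+)", line)
        if m:
            current = m.group(1)
            arrays[current] = {"state": m.group(2), "degraded": False}
            continue
        # Status line ends with e.g. "[2/2] [UU]"; "_" marks a missing member.
        m = re.search(r"\[([U_]+)\]", line)
        if m and current:
            arrays[current]["degraded"] = "_" in m.group(1)
    return arrays

# Made-up sample in the usual /proc/mdstat layout (NOT from this machine).
SAMPLE = """\
Personalities : [raid1]
md0 : active raid1 sda1[0] sdb1[1]
      104320 blocks [2/2] [UU]

md1 : active raid1 sda2[0]
      2096384 blocks [2/1] [U_]

unused devices: <none>
"""

if __name__ == "__main__":
    # Fall back to the sample when the file does not exist.
    if os.path.exists("/proc/mdstat"):
        with open("/proc/mdstat") as fh:
            text = fh.read()
    else:
        text = SAMPLE
    for name, info in sorted(parse_mdstat(text).items()):
        print(name, info["state"], "DEGRADED" if info["degraded"] else "ok")
```

If the arrays really are fully synchronized, every one of them should come back as not degraded here too.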

Next up were the two big partitions (not part of a RAID array as far as I can tell) named “images” (where the surveillance footage is saved, I guess), and those are XFS. The filesystem check just reported that they aren’t clean instead of repairing them. One short DuckDuckGo session later, I found out about xfs_repair, but either it isn’t on my live disk or I just can’t find it from the terminal. I’m not really sure which.
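One way to tell “not installed” apart from “not on my PATH” is sketched below. xfs_repair ships in the xfsprogs package, and admin tools like it usually sit in an sbin directory that a regular user’s PATH may not include. The `find_tool` helper and the `SBIN_DIRS` list are my own names and guesses, not anything specific to this live disk, and the sketch assumes Python 3.

```python
import os
import shutil

# Directories where admin tools usually live. That they are missing from
# PATH on this particular live session is an assumption, not a known fact.
SBIN_DIRS = ["/sbin", "/usr/sbin", "/usr/local/sbin"]

def find_tool(name, extra_dirs=SBIN_DIRS):
    """Return the full path of `name`, searching PATH plus extra_dirs, or None."""
    path_dirs = [d for d in os.environ.get("PATH", "").split(os.pathsep) if d]
    search = os.pathsep.join(path_dirs + list(extra_dirs))
    return shutil.which(name, path=search)

if __name__ == "__main__":
    found = find_tool("xfs_repair")
    if found:
        print("found:", found)
    else:
        print("xfs_repair not found; xfsprogs is probably not installed")
```

If it does turn up, xfs_repair has to be run as root on an unmounted partition, and `xfs_repair -n` does a check-only pass without modifying anything.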

A quick note: the disk utility is also nagging about misaligned partitions and lower performance because of them.

So, I was happy about the filesystem and RAID repair earlier and went on to reboot, thinking it might start now and run xfs_repair on its own, but I was greeted with the same superblock errors as before.

Back in the USB boot, the disk utility shows a running array, and I can mount and muck around in those partitions. They have lots of Linux stuff on them: directories, GRUB, that kind of thing - even some netavis directories.

At this point I’m fresh out of ideas, and I don’t really know where to go from here or what the hell is wrong with the OS. And while I’m not your average “click here to go online” guy, I’m not really an expert when it comes to Linux, filesystems or RAIDs.

Any help would be greatly appreciated!