Just after the last update, I realized a silly mistake I made: the op-amps need to be fully differential for the actual audio input, so that’s now a TI OPA1632, while the 1611s will serve as buffers in various places around the circuit.

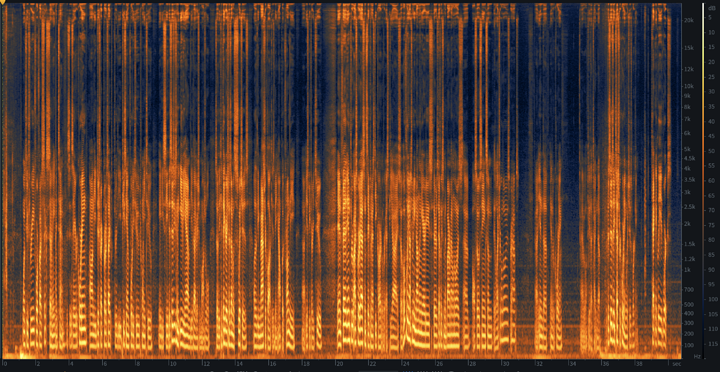

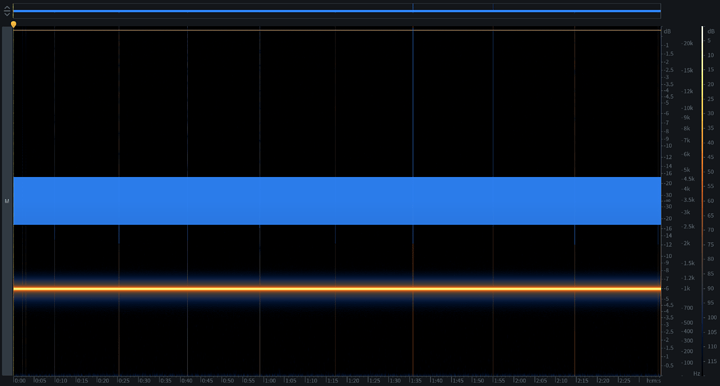

Yesterday I revisited an LTC library (also ESP32) I had worked on a while back. I’ve learned enough since then to see that it needs a brutal refactor, and I finally figured out how to (hopefully) generate reliable LTC output.

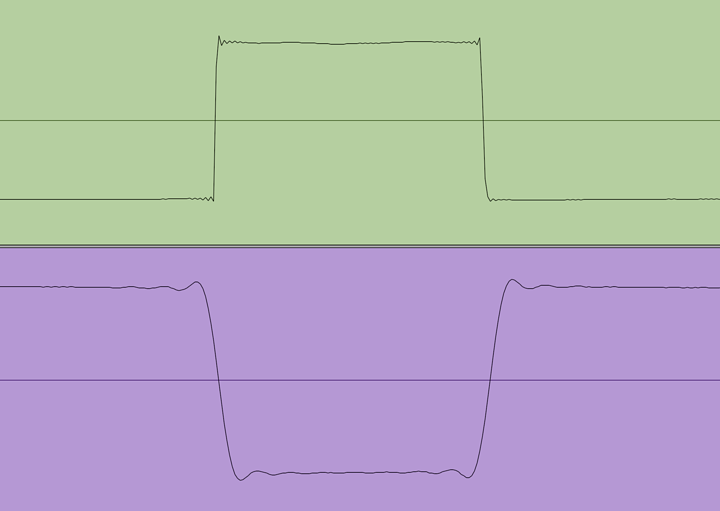

Previously, I was using the onboard DAC with interrupts to output each bit. The clock would stay synced over long periods of time, but would jitter forward and back every one or two seconds.

What I didn’t realize is that writing to the I2S DMA buffer on the Espressif chips is a blocking operation, so it doesn’t need to be driven by interrupts or delays. I was stuck in profiling hell before: measuring the duration of each function so that the edges would rise and fall at exactly the right moment, then writing directly to the DAC output while only buffering the next bit to be written.

Now, I have a ring buffer that I can write to, and a separate task dumps its available data into the DMA. This is the same method that writes data to the SD card from the DMA as it comes in for dishrec.
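To make the pattern concrete, here is a rough model of it in Python rather than firmware code. Everything in it is hypothetical: `RingBuffer`, `drain_to_dma`, and the `dma_write` callback are illustration names, not the library's classes or any ESP32 API. On the real chip the drain step would run in its own task and `dma_write` would be the blocking I2S write, and this sketch does no locking between producer and consumer.

```python
class RingBuffer:
    """Fixed-size FIFO of samples (illustrative, not thread-safe)."""

    def __init__(self, capacity: int) -> None:
        self._data = [0] * capacity
        self._capacity = capacity
        self._read = 0    # index of the oldest stored sample
        self._count = 0   # number of samples currently stored

    def available(self) -> int:
        return self._count

    def write(self, samples) -> int:
        """Store as many samples as fit; return how many were stored."""
        written = 0
        for sample in samples:
            if self._count == self._capacity:
                break  # full: the producer has to try again later
            self._data[(self._read + self._count) % self._capacity] = sample
            self._count += 1
            written += 1
        return written

    def read(self, max_samples: int) -> list:
        """Remove and return up to max_samples, oldest first."""
        n = min(max_samples, self._count)
        out = [self._data[(self._read + i) % self._capacity] for i in range(n)]
        self._read = (self._read + n) % self._capacity
        self._count -= n
        return out


def drain_to_dma(ring: RingBuffer, dma_write, chunk: int = 64) -> int:
    """Consumer step: hand everything currently buffered to dma_write."""
    total = 0
    while ring.available():
        block = ring.read(chunk)
        dma_write(block)  # stand-in for the blocking I2S DMA write
        total += len(block)
    return total
```

The point of the split is that the code generating LTC bits only ever calls `write`, and the pacing comes entirely from the blocking write on the consumer side.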

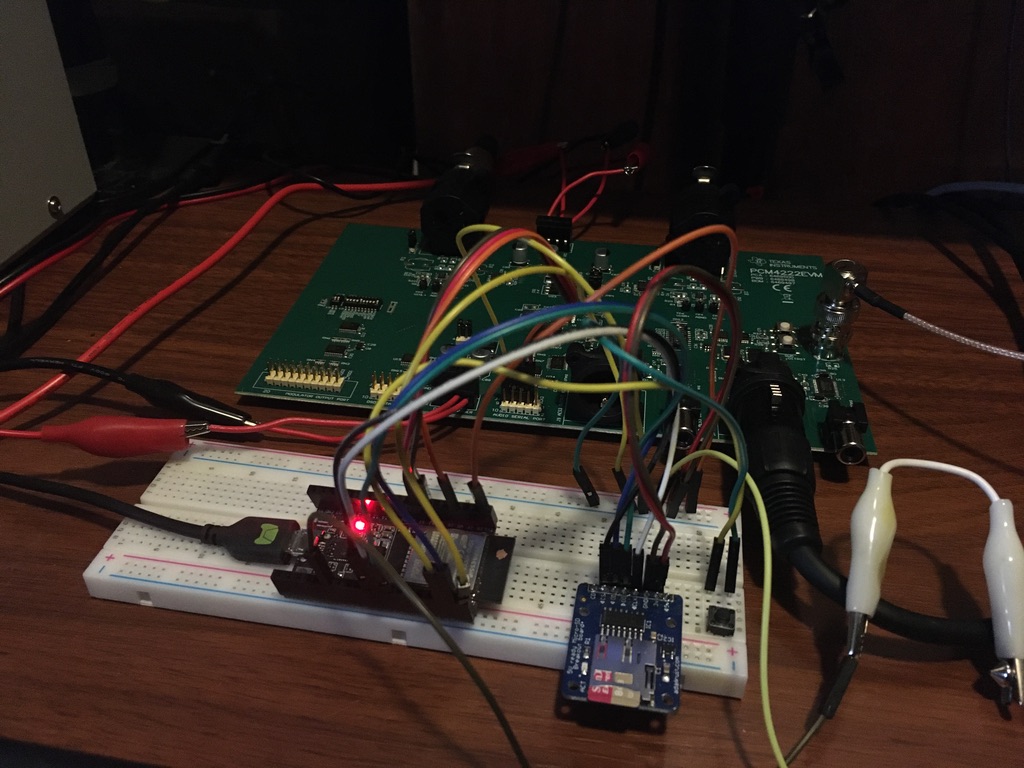

And speaking of dishrec: I have the EVM board in and powered for the PCM4222 ADC, with a barebones class written to interface with it. I’m also switching the production chips to the PCM4220, since it is pin-compatible with the 4222. The only reason I chose the 4222 before is that it’s the one on the EVM.

OK, back to timecode:

While writing the BWF iXML portion of the wav header code, I had more realizations. CD audio is 44.1kHz, broadcast is 48kHz. The difference is historical, but why 48k? I suspect it’s because it’s evenly divisible by 24, 25, and 30. The timecode we are familiar with reading in any editing suite looks like this:

hours:minutes:seconds:frames

The metadata that an NLE or DAW should read is the more ambiguously named samples since midnight (SSM). Though we use framerates that are 0.1% slowdowns like 23.98 and 29.97, the TC value still runs from 0-23 and 0-29 frames in each “timecode second.” So, because samples since midnight must be a whole number, the sample rate must be divisible by the rounded framerate. 44100sps / 24fps = 1837.5 samples per frame. That won’t work.

48000 / 24 = 2000 samples per frame, which means that at TC 00:00:04:11, samples since midnight (SSM) will read 214000: (4 × 24) + 11 = 107 total frames, and 107 × 2000 samples per frame = 214000.
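The same arithmetic as a small Python sketch, in case it helps to poke at other values. The function name and its arguments are made up for illustration, and it assumes non-drop-frame timecode and the whole-frames-times-samples-per-frame mapping described above.

```python
def samples_since_midnight(timecode: str, nominal_fps: int,
                           sample_rate: int = 48000) -> int:
    """Convert hh:mm:ss:ff (non-drop) timecode to a sample count."""
    hours, minutes, seconds, frames = (int(p) for p in timecode.split(":"))
    if sample_rate % nominal_fps != 0:
        # e.g. 44100 / 24 = 1837.5, so no whole number of samples per frame
        raise ValueError(f"{sample_rate} is not divisible by {nominal_fps}")
    if not 0 <= frames < nominal_fps:
        raise ValueError(f"frame value {frames} is out of range")
    total_frames = ((hours * 60 + minutes) * 60 + seconds) * nominal_fps + frames
    return total_frames * (sample_rate // nominal_fps)


print(samples_since_midnight("00:00:04:11", 24))  # 214000
```

Calling it with `sample_rate=44100` and 24 fps raises the `ValueError`, which is the 1837.5 problem from earlier.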

After finally getting all that through my skull, it became clear that to correctly time LTC output at 23.98 and 29.97, I should probably just insert a one-sample delay every 1000 samples to account for the 0.1% slowdown, since LTC output is real-time.
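A minimal sketch of that idea in Python, assuming the "delay" is done by holding the current level for one extra sample period (which is harmless for a square-ish LTC waveform). `stretch_for_pulldown` is a hypothetical name, not something from the library.

```python
from fractions import Fraction
from typing import Iterable, Iterator


def stretch_for_pulldown(samples: Iterable[int],
                         period: int = 1000) -> Iterator[int]:
    """Yield every input sample, repeating each period-th one once."""
    for index, sample in enumerate(samples, start=1):
        yield sample
        if index % period == 0:
            yield sample  # hold the level for one extra sample period


# One second of 24 fps LTC at 48 kHz is 48000 samples before stretching.
stretched = list(stretch_for_pulldown(range(48000)))
samples_per_frame = Fraction(len(stretched), 24)
print(len(stretched), Fraction(48000) / samples_per_frame)
```

1000 input samples come out as 1001, so a 2000-sample frame becomes 2002 samples, and 48000 / 2002 works out to exactly 24000/1001 frames per second.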

While I’m rambling, here’s another tidbit if anyone is interested: while we often abbreviate 23.976 as 23.98, the actual metadata representation of frame rate is a string expressed as a fraction, i.e., “24000/1001”, “24/1”, “25/1”, “30000/1001”, and “30/1”. This is stuff I’ve used forever but didn’t know. Plus, I always wondered what samples since midnight meant.
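For the curious, those fraction strings can be read exactly with Python's standard `fractions` module, which avoids the rounding baked into "23.98". This is only a sketch of the arithmetic; `describe_rate` is a made-up helper and has nothing to do with how any NLE actually parses the field.

```python
from fractions import Fraction


def describe_rate(text: str) -> tuple:
    """Return (exact rate, nominal whole-number rate) for an 'n/d' string."""
    exact = Fraction(text)   # Fraction accepts "numerator/denominator" strings
    nominal = round(exact)   # the frame count used in the TC display
    return exact, nominal


for text in ["24000/1001", "24/1", "25/1", "30000/1001", "30/1"]:
    exact, nominal = describe_rate(text)
    print(f"{text:>10}  {float(exact):.3f} fps, counts 0-{nominal - 1} frames")
```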
