Parallel Computing!

Intel has been trying to get into the CUDA space for a while. They have just failed at getting GPUs to the point where they could compete on compute. Knights Landing was essentially a cash fire, and now with Arc... not enough traction yet. Too soon to tell.

AMD has made attempts, but AMD is a hardware company “first”, one that in recent history (nearly) went bankrupt, crawled back out, sold off its fabrication facilities and focused on working for its customers: consoles (Sony PlayStation more so than Xbox), mining (geological, not crypto) and other more niche solutions.

So for 15 years, Nvidia has shoved CUDA down the throats of scientists, college students, creatives (video, audio, 3D modeling) and finance nerds, which has fostered solutions that are very popular in the space.

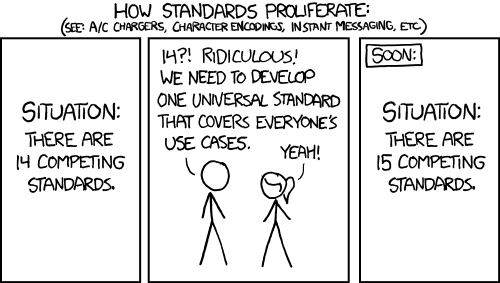

PyTorch, SciPy, the Adobe suite and AutoCAD have all benefited from being “Works with CUDA” or “Accelerated with CUDA”, while competing platforms like OpenCL suffered from bad decisions: OpenCL 2.x flopped, and the Khronos Group essentially scrapped it and went back to OpenCL 1.2 as the baseline for OpenCL 3.0.

So it’s not that AMD and Intel are not interested in parallel computing. They are, in fact; it’s just that they are approaching it differently, and the open standard that should have competed was a mess. AMD introduced HSA into their APUs with Kaveri. It worked great, but no one used it. The problem was that AMD was in a bad place financially and not popular in any space. It was/is a great technology that arrived at a bad time for the company. BTW, LibreOffice with hardware acceleration could fly through large spreadsheets because it could leverage the GPU to do the math as well. Now with ROCm, AMD is really showing it can do these tasks. Time will tell.


This is not a Linux-specific thing. Will it? Of course it will, if it is used. OpenCL making a comeback has a far larger role to play. I look at Darktable, Inkscape, GIMP and Krita as the main users of what a great OpenCL implementation can provide on Linux. GIMP just spent a couple of years converting their NDE extensions and filters to work with OpenCL. Inkscape could leverage OpenCL better for LPEs, along with OpenGL for redraws and for handling images with tons of paths. We all know Blender needs OpenCL/ROCm/HSA for Cycles rendering.


Well, getting PyTorch working on AMD will be great, and it’ll get more people to buy AMD, but there is still a lot of work to be done. Having teams working on code to get application-specific optimizations is expensive. AMD is not “rolling in cash”; although they are better off now than they were 10 years ago, they are clearly far behind. Open standards and open collaboration are how AMD can compete.
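For anyone wondering whether their PyTorch install is actually talking to ROCm, here is a rough sketch of how I would check. It assumes a reasonably recent PyTorch, where ROCm builds set `torch.version.hip` and reuse the `torch.cuda` API; the function name `describe_torch_backend` is just something I made up for this example.

```python
import importlib.util


def describe_torch_backend():
    """Return a short description of the GPU backend PyTorch was built for."""
    # Bail out early if PyTorch is not installed at all.
    if importlib.util.find_spec("torch") is None:
        return "PyTorch is not installed"

    import torch

    # ROCm builds set torch.version.hip; CUDA builds set torch.version.cuda.
    hip = getattr(torch.version, "hip", None)
    cuda = getattr(torch.version, "cuda", None)

    if hip:
        backend = f"ROCm/HIP {hip}"
    elif cuda:
        backend = f"CUDA {cuda}"
    else:
        backend = "CPU only"

    # On ROCm builds the torch.cuda API is reused, so this also covers AMD GPUs.
    usable = torch.cuda.is_available()
    return f"{backend}, GPU usable: {usable}"


print(describe_torch_backend())
```

If it reports a ROCm/HIP build but the GPU is not usable, the problem is most likely in the driver or ROCm install rather than in PyTorch itself.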


Weeeeeell... not really, seeing as the X11 team are the developers of Wayland, and X11 is not getting anything outside of security patches at this point. And PipeWire is not a replacement for PulseAudio or JACK but a middleman/broker for both.


You have some valid points. I still do not understand the business model for Instinct cards. They should be selling to consumers; creatives would jump on them if they were available, IMO.

ROCm on Linux is readily available now, but the caveat is that without a properly supported card it will take jumping through some hoops to get going. I have been on other forums recently helping people get ROCm <5.x working on APUs and GPUs. Any AMD card pre-gfx9xx is a dice roll. ROCm 3.x works, but you first need to find it on the web and compile it yourself... Look at what this guy did with an APU