Yo, forums! It's the Fox Loli again :DDDDD

Ever since the Xeon E5-2670 dropped to $60 apiece, I have wanted to have a crack at building my very own overkill over-the-top beastmeister of a machine, something to tear the socks off my old 2600K computer. This is something that a lot of others have done in months past, and it took me a little while to muster the courage (and the funds, hehe) to pull this off.

The machine will consist of the following:

• An Asus Z9PE-D8 WS

• Two Intel Xeon E5-2670s (the C2 stepping, SR0KX, so as to retain the ability to use VT-d)

• The EVGA Supernova 750G2 that was in my old machine

• Two Xigmatek Dark Knights (I will explain in a bit)

• 24GB of DDR3-1333 (I will be replacing it with 32/64GB ECC memory later)

• A Sapphire Radeon 7850 OC edition that I have lying around.

• Windows 10 Professional (I need to be able to use all the RAM I plan to put in this machine)

• Crucial BX200 256GB SSD (Hard drives will be added later.)

And last but probably most importantly:

• My heavily, heavily modded Fractal Design Define R4.

What is this build going to be used for?

• Video editing

• 3D Rendering

• Video encoding with 3 or 4 instances of Handbrake running simultaneously

• Lots and lots and lots of virtual machines :D

• All-around workstation badassery.
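
For the Handbrake part, here is a rough sketch of how running a few encodes side by side could be scripted. It assumes `HandBrakeCLI` is installed and on the PATH. The preset name, the folder names, and the helper names `build_command` and `encode_all` are made up for illustration, so swap in whatever your HandBrake version actually offers.

```python
import subprocess
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

# Assumption: this preset exists in your HandBrake version; change it if not.
PRESET = "Fast 1080p30"


def build_command(src: Path, out_dir: Path, preset: str = PRESET) -> list[str]:
    """Build the HandBrakeCLI command line for one source file."""
    dst = out_dir / (src.stem + ".mp4")
    return ["HandBrakeCLI", "-i", str(src), "-o", str(dst), "--preset", preset]


def encode_all(sources: list[Path], out_dir: Path, instances: int = 4) -> list[int]:
    """Run up to `instances` encodes at once and return their exit codes."""
    out_dir.mkdir(parents=True, exist_ok=True)
    commands = [build_command(src, out_dir) for src in sources]
    # Each worker thread just waits on one HandBrakeCLI process.
    with ThreadPoolExecutor(max_workers=instances) as pool:
        return list(pool.map(lambda cmd: subprocess.run(cmd).returncode, commands))


if __name__ == "__main__":
    # Hypothetical folders: every .mkv in ./rips gets encoded into ./encoded.
    clips = sorted(Path("rips").glob("*.mkv"))
    print(encode_all(clips, Path("encoded"), instances=4))
```

Two E5-2670s give 16 cores and 32 threads in total, so 3 or 4 instances should each get a decent share of the machine. How well that scales in practice is something the build will have to show.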

Now, I promised ghetto-ness and here are the points where it is required; keep these things in mind if you ever plan to do something similar.

Firstly, the case I shall be putting this in is my good old Fractal Design Define R4. This is a case that is very dear to my heart, but it's not the Define XL that was in the Dual Xeon workstation video that Tek Syndicate put out 3 years ago (which they mistakenly called an R4). The EEB motherboard I'm putting in this case is so wide that, to get it to fit at all, I had to remove both drive cages and hacksaw off about a quarter of the 5¼in bay and the plastic drive cage rail. That is far easier said than done - a normal hacksaw can't get to the angle necessary to carve out the bay. You'll have more luck with an angle grinder.

Second of all, in terms of mounting holes, SSI EEB is not supported by the Define R4 or by any mid-tower case I am aware of. In the picture below, the holes marked in yellow are native ATX holes. The red ones need standoff holes drilled into the motherboard tray. The green ones need standoffs too, but they fall beyond the width of the mobo tray on the R4, so the motherboard will have to be held up by a spacer of some description on the far right-hand side.

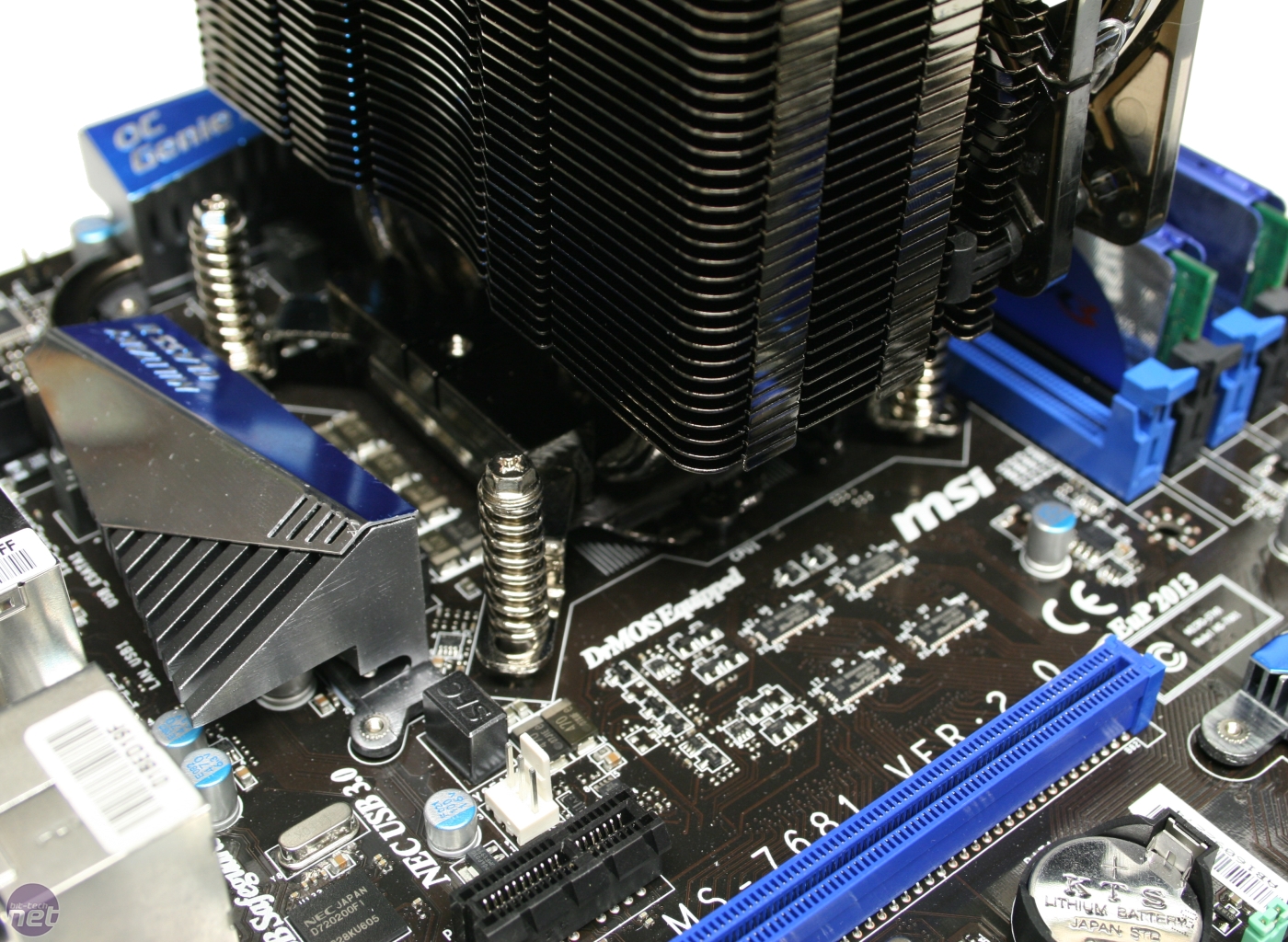

Third and last of all, the coolers. I picked up the two Xigmatek Dark Knights from eBay because they were cheap ($17 apiece) and because they'll handily beat a Hyper 212 Evo any day of the week.

Now, these are version 1 of the Dark Knight, which was released before Socket 2011 was introduced. So they don't have out-of-the-box support for Socket 2011 motherboards like the Dark Knight II has.

Thankfully, because of the nature of their retention mechanism, and because Socket 1366 has the same footprint as 2011, the retention screws can easily be replaced with ones that match the mounting bracket threads on Socket 2011. Each cooler needs four M4-threaded screws between 26 and 28mm in length. Note that these must be M4 screws - DO NOT USE 6-32 SCREWS FOR THIS, even though they appear to have similar threads - you will damage the threads and the mount will not be secure.
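
For anyone wondering why 6-32 is a no-go, here is a quick back-of-the-envelope comparison. It uses the standard nominal dimensions for M4 coarse (4.0mm major diameter, 0.7mm pitch) and 6-32 UNC (0.138in major diameter, 32 threads per inch). The constant and function names are made up for illustration.

```python
MM_PER_INCH = 25.4

# M4 coarse thread: nominal values, already in millimetres.
M4_MAJOR_MM = 4.0
M4_PITCH_MM = 0.7

# 6-32 UNC: 0.138 in major diameter, 32 threads per inch, converted to mm.
UNC_6_32_MAJOR_MM = 0.138 * MM_PER_INCH
UNC_6_32_PITCH_MM = MM_PER_INCH / 32


def thread_mismatch() -> dict[str, float]:
    """Return how far a 6-32 screw is off from an M4 thread, in mm."""
    return {
        "diameter_gap_mm": M4_MAJOR_MM - UNC_6_32_MAJOR_MM,
        "pitch_gap_mm": UNC_6_32_PITCH_MM - M4_PITCH_MM,
    }


if __name__ == "__main__":
    gaps = thread_mismatch()
    print(f"6-32 major diameter: {UNC_6_32_MAJOR_MM:.3f} mm (M4: {M4_MAJOR_MM} mm)")
    print(f"6-32 pitch:          {UNC_6_32_PITCH_MM:.3f} mm (M4: {M4_PITCH_MM} mm)")
    print(f"Diameter gap: {gaps['diameter_gap_mm']:.3f} mm")
    print(f"Pitch gap:    {gaps['pitch_gap_mm']:.3f} mm per turn")
```

So the 6-32 screw is roughly half a millimetre thinner and its threads are spaced slightly wider apart. It will start in the hole, which is why it looks like it fits, but the pitch error adds up with every turn.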

I decided to call this Darknut because it's clad in white armour (the R4 is white) and it has Dark Knights in it. It makes me think of the Darknuts from The Legend of Zelda: The Wind Waker.

Now, I haven't started this quite yet because I need to pick up the screws and I'm also planning on turning this into a build video as well... But I will update as progress is made, with pictures! :DDD

Until then, have fun! ;3