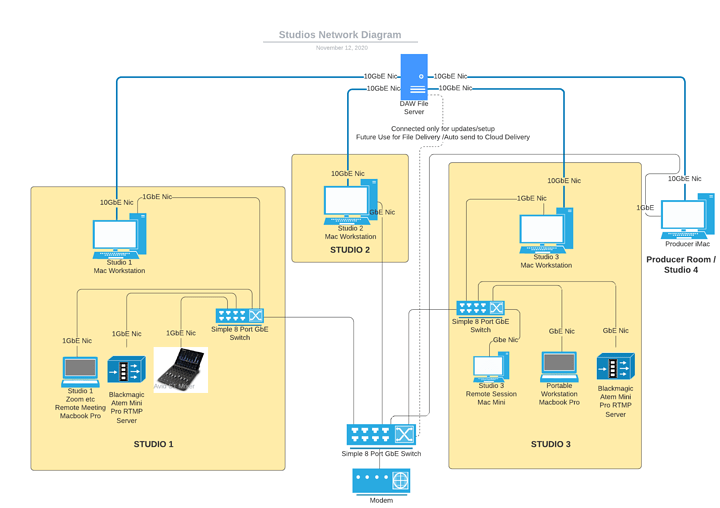

I literally just started upgrading to 10Gb here. I originally wanted a couple of direct connections because I’m only using 2 systems going to 1 with storage, but in the end I don’t think it cost much more to add a $250 Mikrotik 309 with 8 ports, and that gives me way more options later.

Also, I’m sure you realize you don’t get 40Gb speeds with that setup. Unless I’m just missing something? (You mentioned dizzying 40Gb speeds.) With 4x 10Gb NICs on the NAS, one going to each workstation, you only get a 1x 10Gb link to each, right? Unless you are setting up 4x bonded ports on each workstation. And on a switched connection, you still only get the 10Gb between any two systems, one at a time. I’d put in a switch. The 309 was easy and I only had to adjust the couple of 1Gb links I put in. Plus I mixed fiber and copper, depending on the systems. My Mac had 10Gb copper, the new Ryzen also had copper, but the storage server needed an add-on, so I put in an SFP fiber link to the switch since it was cheaper.
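To put rough numbers on that, here’s a quick back-of-the-envelope sketch. The function names are made up for illustration, and it assumes ideal links with no protocol overhead and a NAS that can actually fill every port, which disks often can’t.

```python
def direct_attach(num_workstations: int, link_gbps: float) -> dict:
    """One dedicated NAS port per workstation, no bonding (assumed layout)."""
    return {
        # Each workstation only ever sees its own single link.
        "per_workstation_max_gbps": link_gbps,
        # The 40Gb figure only exists as a sum across all NAS ports.
        "nas_aggregate_max_gbps": num_workstations * link_gbps,
    }


def switched_single_uplink(num_workstations: int, link_gbps: float, active: int = 1) -> dict:
    """NAS has one link into a switch, shared by the workstations busy at that moment."""
    active = max(1, min(active, num_workstations))
    return {
        # Ideal fair share of the NAS uplink among the active workstations.
        "per_workstation_max_gbps": link_gbps / active,
        "nas_aggregate_max_gbps": link_gbps,
    }


if __name__ == "__main__":
    print("direct, 4 workstations:", direct_attach(4, 10))
    print("switched, 1 active:   ", switched_single_uplink(4, 10, active=1))
    print("switched, 4 active:   ", switched_single_uplink(4, 10, active=4))
```

Either way, no single workstation gets more than 10Gb unless you bond ports on the workstation side too.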

So someone above mentioned IOPS. I’m sure it totally depends on how your software does writes. I have 2 SSD arrays in a couple of servers here. One has 6 disks (3 mirrors) and the other has 4 (2 mirrors). When using 4k writes, just doing some quick dd tests, there’s a big difference. I think that’s partly due to the disks too, because of their internal caching. But on the 4-disk array I only get 4Mbps on a 400Mb file when it does a sync on the writes. On the 6-disk, it’s 13Mbps. There’s no way a 1Gb connection will be bothered by that. Now, if I do 1Mb block writes, that’s way different. Or, if I turn off sync on the test, I get speeds in the Gb’s per second. FYI, I use ZFS, which does weird caching things, so that’s a factor too. (Personally, I would only do ZFS, but that’s just me.)
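If you want to try the same kind of comparison without dd, here’s a minimal Python sketch. It is not the exact test I ran: `write_test` is a made-up helper, and calling `os.fsync` after every block is only my stand-in for dd’s synchronous write mode. It writes fixed-size blocks to a file, with or without a flush to disk after each one, and reports megabytes per second.

```python
import os
import tempfile
import time


def write_test(path: str, block_size: int, total_bytes: int, sync: bool) -> float:
    """Write total_bytes in block_size chunks; return throughput in MB/s (10^6 bytes)."""
    block = os.urandom(block_size)  # random data so compression doesn't flatter the result
    count = total_bytes // block_size
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    start = time.perf_counter()
    try:
        for _ in range(count):
            os.write(fd, block)
            if sync:
                os.fsync(fd)  # force this block to stable storage before continuing
    finally:
        os.close(fd)
    elapsed = max(time.perf_counter() - start, 1e-9)
    return count * block_size / elapsed / 1e6


if __name__ == "__main__":
    # Tiny sizes so the demo finishes quickly; use hundreds of MB on the
    # array you actually care about for a meaningful number.
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "writetest.bin")
        for bs in (4096, 1024 * 1024):
            for sync in (True, False):
                rate = write_test(target, bs, 1024 * 1024, sync)
                print(f"block={bs:>8} sync={sync!s:<5} {rate:10.1f} MB/s")
```

With sync off you are mostly measuring RAM caching, which is why those numbers look so much bigger. Point `target` at a path on the pool you want to test rather than a temp directory.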

Honestly, if I had one of my clients asking me all this, I’d literally bring in some decent, but not enterprisey, SSDs in a 6-disk (3 mirrors) ZFS setup in an older Xeon server and just test it out. I’d even test it on 1Gb ethernet and see what it does. Of course, I’d be happy to do that myself, since if it doesn’t work I’d use the SSDs elsewhere (and I have a spare older Xeon).

I may be way off on this, but I’ve got a hunch that even with a RAID10 of SSDs you’ll be hitting some bottlenecks because of all the files. Hundreds of small files read in per project, and all those accesses from multiple systems, that’s going to go slower. And just guessing, but there are probably way more reads from those systems, and then a single large 300+ Mb file write every few minutes. If the software does small blocks and issues syncs, it’s going to go slower than 1Gbps, I bet. And that’s why I’d just get 6x cheap (not too cheap) 1TB SSDs and see what it does. I used 4x WD Blue 1TB drives on my 4-disk and 6x Micron 9100s on the 6-disk. ZFS seems pretty happy with them. For regular RAID, only do RAID10 for IOPS. If you have a system with 16G of memory you could spare for tests, load up Unraid or Proxmox or FreeNAS and test it out.
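Just to show why I think the disks matter more than the wire for that big save, here’s some rough arithmetic in Python. Big assumptions: I’m treating the project file as 300 megabytes, treating my 4 and 13 figures from the sync tests as megabytes per second (that’s how dd reports), and ignoring all network and protocol overhead. The helper names are made up.

```python
def link_mb_per_s(gbps: float) -> float:
    """Raw line rate of a link in megabytes per second (no overhead counted)."""
    return gbps * 1000 / 8


def seconds_to_write(size_mb: float, rate_mb_per_s: float) -> float:
    """How long a file of size_mb takes at a sustained rate."""
    return size_mb / rate_mb_per_s


if __name__ == "__main__":
    size_mb = 300  # assumed size of one project save
    cases = [
        ("4-disk array, 4k sync writes (assumed 4 MB/s)", 4.0),
        ("6-disk array, 4k sync writes (assumed 13 MB/s)", 13.0),
        ("1Gb link at raw line rate", link_mb_per_s(1)),
        ("10Gb link at raw line rate", link_mb_per_s(10)),
    ]
    for label, rate in cases:
        print(f"{label:<48} {seconds_to_write(size_mb, rate):8.1f} s")
```

If the software really does write in small synced blocks, the save time is set by the array and a faster network wouldn’t change it. If it writes big blocks or skips the syncs, the picture flips, which is why I’d test before buying anything.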

This is super interesting… you totally derailed my work here! LOL. I’m partly interested because years back I wanted to go into recording engineering, started schooling, then left, then ended up in PCs and networking. But my son plays in Logic Pro a lot and I tinker with it once in a while. He’s always finding ways of using math to build music; he’s fascinated by it. And he just graduated with a BS in Math, yay! I bet he’d go nuts hearing what you do.

Anyway, I hope some of my info helps. I mainly work with small biz networks and do development, and have never needed 10G anywhere yet, except my home lab. But I have built a few ZFS servers for VMs for clients. There are much smarter people here with specifics on these bottlenecks and disk array performance.