Ok, so I’ve spoken about this in the past at length with several members, but I’d like to open a round table discussion on building a truly universal PC. Viz: a PC that can utilize any commercially available CPU and RAM packet (as era-dependent pairings from 1979 to the present day) in a robust plug-and-play manner.

For the CPU, I imagine something like a pin toy: a grid array that can be raised and lowered to make contact, individually, with either pins or land grid arrays. For RAM, I’d just include multiple socket types, as they can be clearly labeled on the silk screen and easily grouped in a relatively tight area on an oversized motherboard (think 286 dev boards, pictures of which are what sparked my initial idea).

I’ve also floated the idea of an SoC handling the initial POST, leading to a BIOS-style options screen where you can select any CPU you have the pinout for. At that point, the SoC would soft power off and leave the variable CPU and RAM combination as the functional ‘computer’ electrically. An FPGA has also been considered. I’d like as much functionality as possible to remain on the main board, rather than using daughterboards or even Pentium II style packet CPUs.

I’ve got a lot of random old hardware just sitting around gathering dust. I’d like to change that. Your thoughts? Give as much detail as you like; I may or may not be able to follow all of it, being a self-taught nerd, but I like to learn. @wendell, your thoughts in particular would be greatly appreciated in this matter.

This isn’t a commercially viable product and should not be designed as such. A secondary goal would be to make otherwise impossible pairings to further development in classical computing (think something like a Pentium MMX CPU sending instructions to a modern PCIe x16 GPU). It may not be possible, but the fact that I could TRY to do it fascinates me.
This has been an overriding obsession of mine for many years, and I’d like to leverage this forum in order to make it happen.

Believe it or not, there was a tremendous amount of innovation in precision that happened in manufacturing that, in turn, enabled new kinds of CPUs and RAM and other things to exist.

E.g. consider the power supply on the motherboard, i.e. the VRM: it basically PWMs power anywhere between 20W and 300W on average, many times a second, as needed.

What do you think was required in order to make those VRMs such that they won’t catch fire?

Given they’re usually controlled using I2C, how much digital logic do you think each one contains? Do they have general purpose compute cores? An 8051 or some other kind of microcontroller core?

How complex do you think that is?

What if you used those products to enable smarter manufacturing, so you could build some kind of strange MOSFET array attached to a controller in a single package that would be both crazy efficient and cheap at scale?

Perhaps looking at your problem from this perspective, extrapolating how these things work, might give you appreciation for the fact we do have some “standards” that somehow against all odds manage to last more than a year or two sometimes.

Not only is precision in manufacturing enabling things, but I’m also on to something with DDR5… CLEANING the memory contacts and not touching them with fingers may resolve a lot of the training issues around DDR5.

Yes, I can’t believe it, testing now. That’s the level of precision we’re currently dealing with.

Fiber connected DDR6 when

Famous last words:

Before the end of the decade.

I didn’t say which decade

“All I heard was an excuse to solder memory into all desktop motherboards.”

t. ASUS

Only read the title, sounds large.

yes. apparently no one actually reads here. see above.

Signal integrity hell awaits.

how will signal integrity be degraded if only one group of ram banks would be populated at a time?

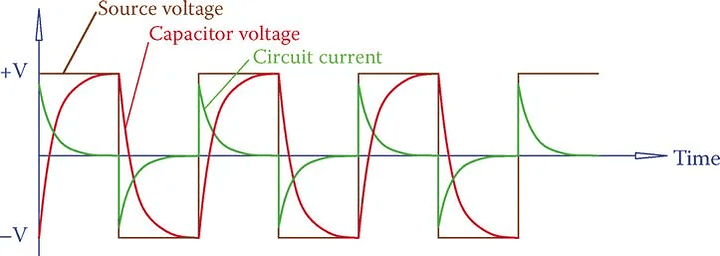

Because any two conductors in proximity form a capacitor. And capacitors will then turn nice crisp edges into a mess:
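To put rough numbers on that, here is a minimal sketch that treats the driver and the stray capacitance as a single lumped RC low-pass. The 50 ohm and 5 pF values are assumptions picked purely for illustration, not measurements of any real board, and a real trace behaves as a transmission line rather than one lumped capacitor.

```python
import math

def rc_rise_time(resistance_ohms, capacitance_farads):
    """10%-90% rise time of a first-order RC low-pass: t_r = ln(9) * R * C."""
    return math.log(9) * resistance_ohms * capacitance_farads

def step_response(t_seconds, resistance_ohms, capacitance_farads):
    """Fraction of the final voltage reached t seconds after an ideal step."""
    tau = resistance_ohms * capacitance_farads
    return 1.0 - math.exp(-t_seconds / tau)

# Assumed, illustrative values (not taken from any datasheet):
R_DRIVER = 50.0      # ohms of source resistance
C_STRAY = 5e-12      # farads of stray capacitance from nearby conductors

rise = rc_rise_time(R_DRIVER, C_STRAY)

# One bit period at 4800 MT/s, used here only as a yardstick.
unit_interval = 1.0 / 4.8e9

print(f"10-90% rise time: {rise * 1e12:.0f} ps")
print(f"bit period at 4800 MT/s: {unit_interval * 1e12:.0f} ps")
print(f"level reached after one bit period: "
      f"{step_response(unit_interval, R_DRIVER, C_STRAY):.0%}")
```

Under these assumed values the edge needs longer than a whole bit period to get from 10% to 90%, which is the "mess" in question: the signal never settles before the next bit arrives.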

can you not isolate them?

To reduce capacitance, you can reduce the area of the traces or increase the distance between them.
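As a rough sketch of why area and distance are the two knobs, the ideal parallel-plate formula C = ε0 · εr · A / d can be evaluated directly. Real traces have fringing fields and odd geometry, so this is only a first-order approximation; the dimensions and the FR-4 permittivity of 4.4 below are assumed values for illustration.

```python
EPSILON_0 = 8.854e-12  # vacuum permittivity in F/m

def parallel_plate_capacitance(area_m2, distance_m, relative_permittivity=1.0):
    """Ideal parallel-plate capacitance, ignoring fringing fields."""
    return EPSILON_0 * relative_permittivity * area_m2 / distance_m

# Assumed geometry: 10 mm of overlap between two 0.1 mm wide traces,
# separated by 0.1 mm of FR-4 (relative permittivity assumed to be 4.4).
area = 10e-3 * 0.1e-3
gap = 0.1e-3
er_fr4 = 4.4

base = parallel_plate_capacitance(area, gap, er_fr4)
half_area = parallel_plate_capacitance(area / 2, gap, er_fr4)
double_gap = parallel_plate_capacitance(area, gap * 2, er_fr4)

print(f"baseline:       {base * 1e12:.3f} pF")
print(f"half the area:  {half_area * 1e12:.3f} pF")
print(f"twice the gap:  {double_gap * 1e12:.3f} pF")
```

Halving the overlapping area or doubling the spacing each halves the coupling capacitance in this model.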

There are also ways to put a “guard” or “shield” between the two conductors, but that is an area I am woefully undereducated in beyond knowing the words.

LMAO I only know computer words.

Electrons don’t behave like photons, but we are really bending the laws of physics with fiber optics. Look into a physics-based explanation of how fiber optics work and then think about how literally nothing in nature works like that and also how the hell is it so many orders of magnitude lower signal loss over distance than literally any other technology we have?

“the fix” is not to use electrons, probably, or figure out some sort of equivalent keyhole in the laws of physics through which we can improve computing… but it’s going to take some fundamental low level physics to beat optics.

Then lament that we haven’t progressed further into fully integrated photonic circuits. Scientists in the 80s were worried about this; hence a lot of the way stuff was written in the Star Trek technical manual, talking about optical interconnects but also as-yet-undiscovered physics loopholes that enabled FTL information processing.

Maybe someday something like this would be possible with some sort of fluid nano gel filled with microscopic machines that assemble optical conduits and optical-to-electron bridges, to be able to just plug in whatever…

This might help explain that somewhat:

PCI Express: Is 85 Ohms Really Needed? - The Samtec Blog.

Literally the air is minutely oxidizing pads, causing increased impedance… our fingers are probably worse.

Also this article might explain the wheelie bin inferno that is PCIe 4.0 passive cabling.

TL;DR: cable manufacturers are extrapolating an impedance spec for their cabling that causes signal integrity problems in the real world, because they unrealistically think 85 ohms should be applied to all components.

hey can we stay on topic please?

This is on topic, it is central to the reason why you would not want to build a PC with very deep backwards parts compatibility on impedance controlled items like CPU and memory.

Older parts had much looser and different impedance specifications for all of their buses and IO, which means you can’t mix circuits supporting them with modern components without extreme measures… so you’re going to need to have separate boards to accommodate the different hardware eras… separate motherboards.

If you absolutely needed a board that could house many different components with wildly varying electrical characteristics, you’d need to look into active impedance tuning on a per-trace basis. This is fairly cutting edge stuff, and a board designed in such a manner could easily cost more than a house. You’d also need a control system to oversee impedance tuning.

You’d be better off fully characterizing all target hardware and emulating it in something like an UltraScale, a massive undertaking of likely many hundreds of thousands of man hours to even get a few dozen systems from the last several decades.
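For a feel of why mismatched impedances matter, here is a small sketch using the standard reflection coefficient Γ = (Z_load − Z_0) / (Z_load + Z_0). The impedance values below are assumptions chosen for illustration, not specs for any particular CPU or memory bus.

```python
def reflection_coefficient(z_load_ohms, z_line_ohms):
    """Fraction of the incident voltage wave reflected at an impedance step."""
    return (z_load_ohms - z_line_ohms) / (z_load_ohms + z_line_ohms)

# Assumed example pairings of (line impedance, load impedance) in ohms.
cases = {
    "matched 85 -> 85": (85.0, 85.0),
    "85 ohm line into 100 ohm load": (85.0, 100.0),
    "50 ohm line into 120 ohm load": (50.0, 120.0),
}

for label, (z_line, z_load) in cases.items():
    gamma = reflection_coefficient(z_load, z_line)
    print(f"{label}: {gamma:+.3f} of the incident voltage is reflected")
```

A matched line reflects nothing, while every mismatch sends part of each edge back toward the driver, where it lands on top of later bits. The looser the tolerances of the older part, the bigger that reflected fraction can get.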

" it is central to the reason why you would not want to build a PC with very deep backwards parts compatibility on impedance controlled items like CPU and memory." Wrong. I want it cause I want it. No other reason, it’s not practical, nor sellable, or lots of things. BUT I WANT IT. Like I said, obsession.

I’ve encountered this kind of theoretical PC before in an article, but in an even more universal form: a scrap computer (not necessarily a PC) that will survive the apocalypse. It would be designed to use all manner of scrap microcontrollers from all sorts of scrap electronic devices like microwave ovens, beverage dispensers, etc.

Let me go look for that…