Hi,

I talked a bit about my setup in the forbidden router topic.

Unfortunately, after coming back from summer vacation I had trouble copying the pictures back… The transfer would stall and never complete.

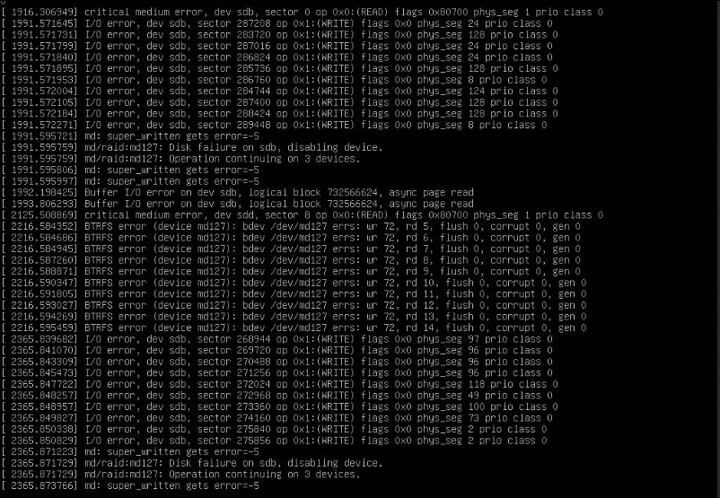

After some debugging I got some scary messages:

I then discovered that one of my drives had dropped out of the mdadm RAID5 array:

    root@vault:/srv/dev-disk-by-label-FiRed/dati# mdadm --detail /dev/md127
    /dev/md127:
    Version : 1.2
    Creation Time : Wed Jan 22 02:19:47 2020
    Raid Level : raid5
    Array Size : 8790405120 (8383.18 GiB 9001.37 GB)
    Used Dev Size : 2930135040 (2794.39 GiB 3000.46 GB)
    Raid Devices : 4
    Total Devices : 3
    Persistence : Superblock is persistent
    Intent Bitmap : Internal
    Update Time : Fri Aug 28 12:00:41 2022
    State : clean, degraded
    Active Devices : 3
    Working Devices : 3
    Failed Devices : 0
    Spare Devices : 0
    Layout : left-symmetric
    Chunk Size : 512K
    Consistency Policy : bitmap
    Name : microserver:FiRed
    UUID : f52d1704:6a78c341:0afa7732:c0073c0c
    Events : 20203
    Number Major Minor RaidDevice State
    0 8 80 0 active sync /dev/sdf
    1 8 48 1 active sync /dev/sdd
    2 8 96 2 active sync /dev/sdg
    - 0 0 3 removed
    root@vault:/srv/dev-disk-by-label-FiRed/dati#

I tried to bring it back into the array, but the resync would fail.
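The degraded state in the `mdadm --detail` output above (4 RAID devices, only 3 active) can also be detected from a script. This is a minimal sketch, assuming the output format shown above; the function name `md_array_health` is made up for illustration.

```shell
# Read `mdadm --detail` output on stdin and print:
#   "degraded" when fewer devices are active than the array expects,
#   "ok"       when all expected devices are active,
#   "unknown"  when no "Raid Devices" line was seen.
md_array_health() {
    awk -F' : ' '
        /Raid Devices/   { want = $2 + 0 }
        /Active Devices/ { have = $2 + 0 }
        END {
            if (want == 0)        print "unknown"
            else if (have < want) print "degraded"
            else                  print "ok"
        }
    '
}

# Usage (needs root and a real array):
#   mdadm --detail /dev/md127 | md_array_health
```

For the array above this would print `degraded`, since `Raid Devices` is 4 and `Active Devices` is 3.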

Until then, reading files was not a problem (though I can't be completely sure, since I don't access every file on the drive)… but dumb me probably made things worse…

Since the error could be caused by the HBA controller or a faulty cable (SMART self-tests are fine), I decided to remove the drives from the machine and put them into another one to retry the resync there. The resync went fine!

But then I decided to make a backup of the data just to be sure, and the issue became even more evident: read errors, write errors. Things were looking really bad. Even mounting the volume would hang the machine for minutes!

Mounting the raid as read-only allowed me to back up most of the data.
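To find out which files cannot be read from a read-only mount, every file can simply be read once and the failures listed. This is a rough sketch, not the exact procedure used here; the function name `list_unreadable` and the mount point `/mnt/rescue` are hypothetical.

```shell
# Try to read every regular file under a directory and print the paths
# that fail, so the unreadable ones can be listed after a backup run.
list_unreadable() {
    find "$1" -type f -exec sh -c '
        for f in "$@"; do
            cat -- "$f" > /dev/null 2>&1 || printf "%s\n" "$f"
        done
    ' sh {} +
}

# Usage, with a hypothetical read-only mount point (needs root):
#   mount -o ro /dev/md127 /mnt/rescue
#   list_unreadable /mnt/rescue > unreadable.txt
```

On a failing array this can take a long time, because each bad read may stall before the kernel gives up.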

There are only 4 files/directories that I cannot read, and they are (probably) important.

But I’m still getting a lot of errors:

    [48370.703233] btrfs_dev_stat_print_on_error: 7698 callbacks suppressed
    [48370.703245] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459341, flush 0, corrupt 1341993, gen 0
    [48370.703482] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459342, flush 0, corrupt 1341993, gen 0
    [48370.705325] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459343, flush 0, corrupt 1341993, gen 0
    [48370.705985] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459344, flush 0, corrupt 1341993, gen 0
    [48375.794710] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459345, flush 0, corrupt 1341993, gen 0
    [48375.794875] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459346, flush 0, corrupt 1341993, gen 0
    [48375.795457] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459347, flush 0, corrupt 1341993, gen 0
    [48375.795591] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459348, flush 0, corrupt 1341993, gen 0
    [48375.797340] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459349, flush 0, corrupt 1341993, gen 0
    [48375.797464] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459350, flush 0, corrupt 1341993, gen 0
    [48375.797701] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459351, flush 0, corrupt 1341993, gen 0
    [48375.798009] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459352, flush 0, corrupt 1341993, gen 0
    [48375.798731] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459353, flush 0, corrupt 1341993, gen 0
    [48375.798955] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459354, flush 0, corrupt 1341993, gen 0
    [48381.575497] btrfs_dev_stat_print_on_error: 12 callbacks suppressed
    [48381.575508] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459367, flush 0, corrupt 1341993, gen 0
    [48381.575789] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459368, flush 0, corrupt 1341993, gen 0
    [48381.576068] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459369, flush 0, corrupt 1341993, gen 0
    [48381.576301] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459370, flush 0, corrupt 1341993, gen 0
    [48381.580913] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459371, flush 0, corrupt 1341993, gen 0
    [48381.581183] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459372, flush 0, corrupt 1341993, gen 0
    [48381.582052] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459373, flush 0, corrupt 1341993, gen 0
    [48381.582896] BTRFS error (device md127): bdev /dev/md127 errs: wr 0, rd 131459374, flush 0, corrupt 1341993, gen 0
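In the log above only the `rd` counter keeps climbing, while `corrupt` stays at 1341993. These are the cumulative per-device error counters, which `btrfs device stats <mountpoint>` also reports. To watch them without scrolling through the kernel log, the numbers can be pulled out of each line. This is a small sketch that assumes the message format shown above; the function name `btrfs_err_counts` is made up for illustration.

```shell
# Read kernel log lines on stdin and print the btrfs error counters
# from every "errs:" message; all other lines are ignored.
btrfs_err_counts() {
    sed -n 's/.*errs: wr \([0-9]*\), rd \([0-9]*\), flush \([0-9]*\), corrupt \([0-9]*\), gen \([0-9]*\).*/wr=\1 rd=\2 flush=\3 corrupt=\4 gen=\5/p'
}

# Usage: show only the most recent counters
#   dmesg | btrfs_err_counts | tail -n 1
```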

Now that I have saved most of the data, I was wondering if there is a way to check whether a file is corrupted, especially pictures and videos!
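One possible starting point, sketched under the assumption that an older backup of some of the files is still around: compare checksums of the files that exist in both places. Pictures and videos rarely change after they are taken, so a mismatch is suspicious (a file that was edited on purpose after the old backup will also show up, though). The function name `compare_with_backup` is hypothetical, and file names containing newlines are not handled.

```shell
# Print files present in both trees whose SHA-256 checksums differ.
#   $1 = older backup (assumed good)
#   $2 = freshly recovered copy
# Files that exist in only one of the two trees are skipped.
compare_with_backup() {
    ( cd "$1" && find . -type f ) | while IFS= read -r rel; do
        [ -f "$2/$rel" ] || continue
        a=$(sha256sum < "$1/$rel")
        b=$(sha256sum < "$2/$rel")
        [ "$a" = "$b" ] || printf '%s\n' "$rel"
    done
}

# Usage, with hypothetical paths:
#   compare_with_backup /mnt/old-backup /mnt/recovered > suspicious.txt
```

This says nothing about files that are not in the old backup; those would need a format-aware check, such as decoding each picture or video from start to end.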

(Yes, I had backups, but they were neither recent nor complete.)

Has anyone had any experience in trying to recover a BTRFS partition?

I have not run any scrub/test so far. I wanted to copy as much data as possible first.