So I finished building a server with the following relevant specs:

- Motherboard: ASRock X570 Steel Legend (BIOS P5.01)

- CPU: AMD 5700G

- 4-Port M.2 NVMe Adapter: Amazon link

- NVMe: Tried both a ZP1000GM3A023 & ZP1000GM3A013

- OS: TrueNAS 22.12.2

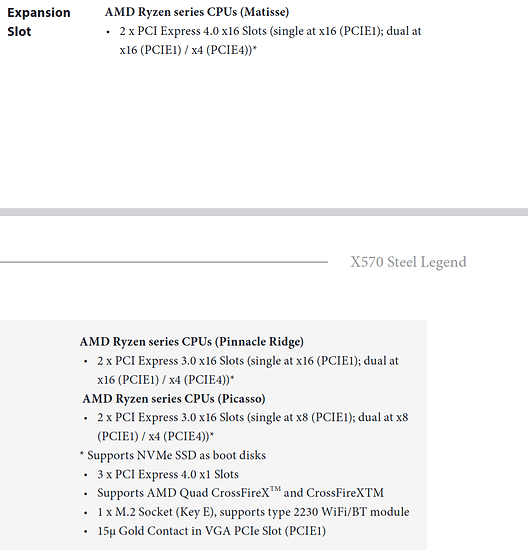

Figuring out before the purchase whether I could get x4x4x4x4 bifurcation from the x16 slot is a bit of a crapshoot, but the CPU does provide 16 lanes to the x16 slot (unlike some previous APUs, a difference that seems to have confused some folks and first-line support), so I went for it.

Unfortunately, the BIOS only lists `auto` and `2x4` for the PCIe/GFX Lanes Configuration setting:

- `auto`: only detected 1 or 2 drives, if I recall correctly.

- `2x4`: to my surprise, this seems to run in `x8x4x4` mode, as 3 of the 4 drives on the PCIe card are detected. I don't know if that's intended, or if I'm misunderstanding what `2x4` means.

I was content with 3 drives, but sadly the NVMe drive in the x8 part of the card seems to randomly get removed by TrueNAS. It used to happen every few days, but this time the drive lasted a month. This could be unrelated to the BIOS/motherboard, but since it only happens on the mystery slot, even with different drives, I'm a bit suspicious.
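To see how each drive actually trained, the negotiated PCIe link of every NVMe controller can be read from sysfs (the same information `lspci -vv` shows as `LnkSta`). Each drive is x4 by itself, so this won't reveal the slot split directly, but a drive that came up narrower than x4, or at a different speed than its siblings, could hint at a marginal connection on that slot. Note the 5700G only does PCIe 3.0, so 8.0 GT/s on these Gen4 drives is expected. Below is a minimal, read-only Python sketch, assuming a standard Linux sysfs layout where `/sys/class/nvme/<ctrl>/device` links to the PCI device; `nvme_link_report` and `read_attr` are hypothetical helper names, not an existing tool.

```python
import os
from pathlib import Path


def read_attr(path: Path) -> str:
    """Return a sysfs attribute's contents, or '?' if it is missing/unreadable."""
    try:
        return path.read_text().strip()
    except OSError:
        return "?"


def nvme_link_report(sysfs_root: str = "/sys") -> dict:
    """Map each NVMe controller (e.g. 'nvme1') to its PCI address and link status."""
    report = {}
    nvme_class = Path(sysfs_root) / "class" / "nvme"
    if not nvme_class.is_dir():
        return report
    for ctrl in sorted(nvme_class.iterdir()):
        # 'device' is a symlink to the controller's PCI device directory,
        # whose directory name is the PCI address (e.g. 0000:0d:00.0).
        pci_dir = Path(os.path.realpath(ctrl / "device"))
        report[ctrl.name] = {
            "pci_address": pci_dir.name,
            "width": read_attr(pci_dir / "current_link_width"),   # negotiated width
            "max_width": read_attr(pci_dir / "max_link_width"),   # device capability
            "speed": read_attr(pci_dir / "current_link_speed"),
        }
    return report


if __name__ == "__main__":
    # Read-only: prints nothing if the system has no NVMe controllers.
    for name, info in nvme_link_report().items():
        print(f"{name}: {info['pci_address']} x{info['width']} "
              f"(max x{info['max_width']}) @ {info['speed']}")
```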

I’ll try the TrueNAS forums and probably shoot some emails to ASRock/AMD too, but figured I’d ask here to see if anyone’s encountered anything like this before, or has a few ideas I could try to resolve this.

If reattaching the drive without a reboot is possible, that’d be great too.
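Reattaching without a reboot may be possible through the kernel's sysfs PCI interface: remove the stale device, ask the kernel to rescan the bus, and, if the drive reappears, bring the vdev back in ZFS (e.g. `zpool online SHODAN <device>`). Here is a minimal, untested Python sketch of the sysfs part, assuming a standard Linux `/sys` and root access; `rescan_pci_device` is a hypothetical helper name, and `0000:0d:00.0` is taken from the log in this post. If the link dropped because of a signal-integrity problem, the drive may not come back at all, or may drop again shortly after.

```python
from pathlib import Path


def rescan_pci_device(pci_address: str, sysfs_root: str = "/sys") -> None:
    """Detach a PCI device (if the kernel still lists it), then rescan all PCI buses.

    Must run as root on a real system. Writing "1" to
    /sys/bus/pci/devices/<addr>/remove detaches the device; writing "1" to
    /sys/bus/pci/rescan makes the kernel re-enumerate devices and re-probe drivers.
    """
    pci_bus = Path(sysfs_root) / "bus" / "pci"
    remove_node = pci_bus / "devices" / pci_address / "remove"
    if remove_node.exists():
        remove_node.write_text("1")
    (pci_bus / "rescan").write_text("1")


# Example (as root; 0000:0d:00.0 is the address from the dmesg output below):
# rescan_pci_device("0000:0d:00.0")
```

The example call is left commented out on purpose, since running it against the real `/sys` detaches hardware.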

PCIe/GFX Lanes Configuration Description

This is the BIOS's description of the setting. To be honest, I'm pretty confused by it:

Configure J10 & J3600 Slot PCIe Lanes.

- Auto - If J3600 Slot is connected device, J10 and J3600 both are x8, otherwise J10 is x16;

- x8x4x4 - J10: X8, J3600: 4x4;

- x4x4x4x4 - J10: x4x4x4x4 (J3600 Slot can't connect any device).

Errors

CRITICAL

Pool SHODAN state is DEGRADED: One or more devices has been removed by the administrator. Sufficient replicas exist for the pool to continue functioning in a degraded state.

The following devices are not healthy:

- Disk Seagate FireCuda 530 ZP1000GM3A013 is REMOVED

[16901.207108] nvme nvme1: controller is down; will reset: CSTS=0xffffffff, PCI_STATUS=0x10

[16901.303103] nvme 0000:0d:00.0: enabling device (0000 -> 0002)

[16901.303663] nvme nvme1: Removing after probe failure status: -19

[16901.331131] nvme1n1: detected capacity change from 1953525168 to 0

[16901.331136] blk_update_request: I/O error, dev nvme1n1, sector 259144416 op 0x1:(WRITE) flags 0x0 phys_seg 11 prio class 0

[16901.331139] blk_update_request: I/O error, dev nvme1n1, sector 259144656 op 0x1:(WRITE) flags 0x0 phys_seg 8 prio class 0

[16901.331149] zio pool=SHODAN vdev=/dev/disk/by-partuuid/59020ebe-79db-4852-b3d9-adaecce835e0 error=5 type=2 offset=130534514688 size=131072 flags=40080c80

[16901.331193] zio pool=SHODAN vdev=/dev/disk/by-partuuid/59020ebe-79db-4852-b3d9-adaecce835e0 error=5 type=2 offset=130534645760 size=131072 flags=40080c80
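The log can also be checked programmatically, e.g. to catch the next drop-out early. `CSTS=0xffffffff` means the controller status register read back as all ones, which usually indicates the device stopped responding on the PCIe bus altogether rather than reporting an error itself, and status `-19` is `-ENODEV`. Below is a minimal Python sketch, assuming the standard `[seconds] nvme <dev>: <message>` dmesg format; `NvmeEvent`, `nvme_events` and `looks_like_link_drop` are hypothetical names.

```python
import re
from typing import List, NamedTuple


class NvmeEvent(NamedTuple):
    timestamp: float  # seconds since boot, from the dmesg prefix
    device: str       # controller name (nvme1) or PCI address (0000:0d:00.0)
    message: str


# "[16901.207108] nvme nvme1: controller is down; ..." -> timestamp, device, text
LINE_RE = re.compile(r"^\[\s*(\d+\.\d+)\]\s+nvme\s+(\S+):\s+(.*)$")


def nvme_events(dmesg_text: str) -> List[NvmeEvent]:
    """Extract lines logged by the nvme driver itself from raw dmesg output."""
    events = []
    for line in dmesg_text.splitlines():
        m = LINE_RE.match(line.strip())
        if m:
            events.append(NvmeEvent(float(m.group(1)), m.group(2), m.group(3)))
    return events


def looks_like_link_drop(event: NvmeEvent) -> bool:
    """All-ones CSTS usually means the device no longer answers on the bus."""
    return "CSTS=0xffffffff" in event.message
```

Lines from the block layer (`nvme1n1: ...`) and ZFS (`zio ...`) are deliberately skipped, so only the driver's own view of the controller is kept.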