That is interesting. I’ve never actually done time-domain EM; I always left that to the wave optics guys, but I suppose it’s pretty important for GR.

Looks like MEEP uses BiCGSTAB (BiCGSTAB-L, to be precise) for its frequency-domain solver, which is actually one of the most memory-efficient iterative solvers, if a little less stable than GMRES (which, by iterative-solver standards, is a memory hog).
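To put rough numbers on that memory difference: restarted GMRES(m) has to keep m+1 Krylov basis vectors (flexible GMRES, FGMRES, keeps another m preconditioned vectors on top of that), while BiCGSTAB needs only a fixed handful of work vectors no matter how many iterations it runs. The sketch below is back-of-the-envelope arithmetic, assuming real double-precision unknowns, a restart length of 50 (a common default, but an assumption here), and 9 work vectors for preconditioned BiCGSTAB. It counts only the Krylov vectors, not the matrix or the preconditioner, which usually dominate total memory.

```python
def krylov_storage_gb(n_dof, n_vectors, bytes_per_entry=8):
    """Memory (GB) for n_vectors dense vectors of length n_dof."""
    return n_dof * n_vectors * bytes_per_entry / 1e9


N_DOF = 43_351_744   # degrees of freedom reported in the COMSOL logs
RESTART = 50         # assumed GMRES restart length

solvers = {
    # Preconditioned BiCGSTAB work vectors: x, r, r_hat, p, v, s, t, p_hat, s_hat.
    "BiCGSTAB": 9,
    # GMRES(m) stores m + 1 orthonormal basis vectors.
    "GMRES(50)": RESTART + 1,
    # FGMRES(m) additionally stores m preconditioned vectors.
    "FGMRES(50)": 2 * RESTART + 1,
}

for name, n_vec in solvers.items():
    print(f"{name:>11}: {krylov_storage_gb(N_DOF, n_vec):6.1f} GB")
```

That works out to roughly 3.1 GB for BiCGSTAB versus 17.7 GB for GMRES(50) and 35.0 GB for FGMRES(50) at this problem size.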

In my very limited reading of MEEP, it uses the finite-difference time-domain (FDTD) method rather than the finite element method (FEM) that most other simulation software uses. FDTD lends itself really well to time-domain problems, but it is restricted to fairly uniform structured grids, which don’t handle irregular geometry as efficiently as the tetrahedral meshes an FEM program would use.
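For anyone who, like me, hasn’t seen FDTD up close: the core of it is a leapfrog update of E and H on a staggered (Yee) grid. Below is a minimal 1D sketch in normalized units, assuming free space, a soft Gaussian source, and simple reflecting ends; it is the textbook scheme, not how MEEP is implemented internally. Every cell shares the same spacing dx, so one `courant` coefficient applies everywhere, which is exactly the uniform-grid constraint: resolving a small feature means refining the whole grid.

```python
import math


def fdtd_1d(n_cells=200, n_steps=150, courant=0.5, src_index=100):
    """Minimal 1D FDTD (Yee) sketch in normalized units.

    Ez sits on integer grid points and Hy on half-integer points. Both are
    updated explicitly in leapfrog fashion on a uniform spacing dx, so the
    same coefficient (the Courant number c*dt/dx) applies to every cell.
    """
    ez = [0.0] * n_cells
    hy = [0.0] * n_cells
    for t in range(n_steps):
        # Update H from the spatial difference (1D curl) of E.
        for i in range(n_cells - 1):
            hy[i] += courant * (ez[i + 1] - ez[i])
        # Update E from the spatial difference of H; ez[0] stays 0 (PEC end).
        for i in range(1, n_cells):
            ez[i] += courant * (hy[i] - hy[i - 1])
        # Soft Gaussian source added on top of the field at one point.
        ez[src_index] += math.exp(-((t - 30) / 10) ** 2)
    return ez


field = fdtd_1d()
print(max(abs(v) for v in field))
```

Note that there is no linear solve anywhere in the loop; each step is a cheap explicit stencil update, with the time step bounded by the Courant condition (courant <= 1 in 1D).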

OpenFOAM is an okay proxy, although most of the benchmarks for it online are fairly small problems compared to what we’re talking about, so the memory subsystem isn’t taxed nearly as much.
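A rough way to see why small benchmarks don’t stress memory: the dominant kernel in an iterative solve is a sparse matrix-vector product (SpMV), and if the matrix plus vectors fit in last-level cache, DRAM bandwidth barely matters. The sketch below assumes CSR storage with 8-byte values and 4-byte indices and a hypothetical average of 30 nonzeros per row (real FEM matrices vary a lot with element order and physics). The cache figure assumes two sockets with 40 MB of L3 each, as on the E5-2697A v4 machine.

```python
def csr_spmv_bytes(n_rows, nnz, value_bytes=8, index_bytes=4):
    """Approximate bytes streamed by one CSR sparse matrix-vector product y = A @ x.

    Counts values, column indices and row pointers, plus one read of x and one
    write of y; ignores cache reuse of x and any preconditioner work.
    """
    matrix = nnz * (value_bytes + index_bytes) + (n_rows + 1) * index_bytes
    vectors = 2 * n_rows * value_bytes
    return matrix + vectors


def fits_in_cache(n_rows, nnz, cache_bytes):
    return csr_spmv_bytes(n_rows, nnz) <= cache_bytes


NNZ_PER_ROW = 30            # hypothetical average nonzeros per row
L3_BYTES = 2 * 40 * 1024**2  # two sockets x 40 MB L3 (E5-2697A v4)

# A small benchmark-sized problem vs. the DOF count from the COMSOL logs.
for n in (100_000, 43_351_744):
    nnz = n * NNZ_PER_ROW
    total = csr_spmv_bytes(n, nnz)
    print(f"{n:>12,} rows: {total / 1e9:7.3f} GB per SpMV, "
          f"fits in L3: {fits_in_cache(n, nnz, L3_BYTES)}")
```

Under these assumptions the small case streams about 38 MB per SpMV and fits in cache, while the 43-million-DOF case streams about 16.5 GB per SpMV and has to come from DRAM every time.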

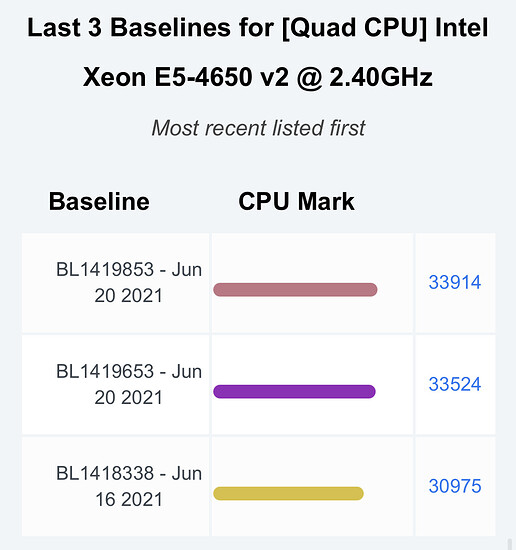

The following logs are probably closer benchmark approximations: one from a dual-socket E5-2697A v4 (32 cores total @ 3.1 GHz) with 256 GB of 2400 MHz RAM, and one from a dual-socket E5-2650 v2 (16 cores total @ 3.0 GHz) with 256 GB of 1866 MHz RAM. Both use an FGMRES solver, and the solve times are on the "Solution time" lines (8490 s vs. 11965 s). That’s roughly a 30% reduction in solve time going from Ivy Bridge to Broadwell, and I’m pretty sure most of that improvement comes from the faster RAM rather than the doubled core count.
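Putting those numbers side by side: the solve times come straight from the logs, while the bandwidth figures are theoretical peaks, assuming all four memory channels per socket are populated on both machines (an assumption) and 8 bytes per transfer.

```python
def peak_dram_bandwidth_gbs(mt_per_s, channels_per_socket=4, sockets=2, bytes_per_transfer=8):
    """Theoretical peak DRAM bandwidth in GB/s (assumes every channel is populated)."""
    return mt_per_s * 1e6 * bytes_per_transfer * channels_per_socket * sockets / 1e9


ivy_solve_s = 11965   # 2x E5-2650 v2, 1866 MHz RAM ("Solution time" in the log)
bdw_solve_s = 8490    # 2x E5-2697A v4, 2400 MHz RAM ("Solution time" in the log)

reduction = 1 - bdw_solve_s / ivy_solve_s
speedup = ivy_solve_s / bdw_solve_s
bw_ratio = peak_dram_bandwidth_gbs(2400) / peak_dram_bandwidth_gbs(1866)
core_ratio = 32 / 16

print(f"solve-time reduction: {reduction:.1%}")   # 29.0%
print(f"speedup:              {speedup:.2f}x")     # 1.41x
print(f"peak bandwidth ratio: {bw_ratio:.2f}x")    # 1.29x
print(f"core-count ratio:     {core_ratio:.2f}x")  # 2.00x
```

The 1.41x speedup sits much closer to the 1.29x bandwidth ratio than to the 2x core ratio, which fits the memory-bound explanation, though it doesn’t rule out contributions from clock speed or microarchitecture.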

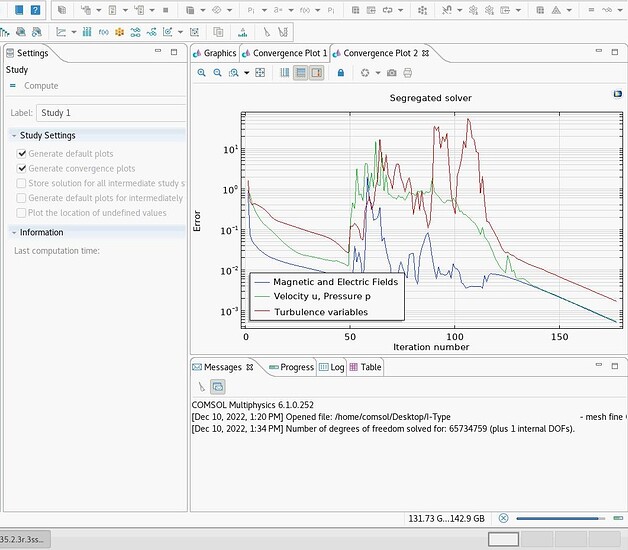

<---- Compile Equations: EM 1 in Study 1/Solution 1 (sol1) ---------------------

Started at Oct 29, 2022, 12:38:41 PM.

Geometry shape function: Linear Lagrange

Running on 2 x Intel(R) Xeon(R) CPU E5-2697A v4 at 2.60 GHz.

Using 2 sockets with 32 cores in total on WIN256-PC.

Available memory: 262.04 GB.

Time: 108 s. (1 minute, 48 seconds)

Physical memory: 7.61 GB

Virtual memory: 7.94 GB

Ended at Oct 29, 2022, 12:40:29 PM.

----- Compile Equations: EM 1 in Study 1/Solution 1 (sol1) -------------------->

<---- Stationary Solver 5 in Study 1/Solution 1 (sol1) -------------------------

Started at Oct 29, 2022, 7:32:38 PM.

Linear solver

Number of degrees of freedom solved for: 43351744.

Nonsymmetric matrix found.

Scales for dependent variables:

Magnetic vector potential (comp1.A): 3.9

Electric potential (comp1.V): 0.95

Terminal voltage (comp1.mef.mi1.term1.V0_ode): 12

Orthonormal null-space function used.

Iter SolEst Damping Stepsize #Res #Jac #Sol LinIt LinErr LinRes

1 0.093 1.0000000 0.093 1 1 1 203 0.00095 9.5e-08

Solution time: 8490 s. (2 hours, 21 minutes, 30 seconds)

Physical memory: 166.43 GB

Virtual memory: 168.9 GB

Ended at Oct 29, 2022, 9:54:08 PM.

----- Stationary Solver 5 in Study 1/Solution 1 (sol1) ------------------------>

<---- Compile Equations: EM 1 in Study 1/Solution 1 (sol1) ---------------------

Started at Nov 7, 2022, 5:13:12 PM.

Geometry shape function: Linear Lagrange

Running on 2 x Intel(R) Xeon(R) CPU E5-2650 v2 at 2.60 GHz.

Using 2 sockets with 16 cores in total on DESKTOP-TEH272H.

Available memory: 262.08 GB.

Time: 111 s. (1 minute, 51 seconds)

Physical memory: 7.6 GB

Virtual memory: 7.92 GB

Ended at Nov 7, 2022, 5:15:03 PM.

----- Compile Equations: EM 1 in Study 1/Solution 1 (sol1) -------------------->

<---- Stationary Solver 5 in Study 1/Solution 1 (sol1) -------------------------

Started at Nov 8, 2022, 1:57:03 AM.

Linear solver

Number of degrees of freedom solved for: 43351744.

Nonsymmetric matrix found.

Scales for dependent variables:

Magnetic vector potential (comp1.A): 3.8

Electric potential (comp1.V): 0.91

Terminal voltage (comp1.mef.mi1.term1.V0_ode): 11

Orthonormal null-space function used.

Iter SolEst Damping Stepsize #Res #Jac #Sol LinIt LinErr LinRes

1 0.062 1.0000000 0.062 1 1 1 208 0.00093 9.3e-08

Solution time: 11965 s. (3 hours, 19 minutes, 25 seconds)

Physical memory: 162.77 GB

Virtual memory: 163.75 GB

Ended at Nov 8, 2022, 5:16:28 AM.

----- Stationary Solver 5 in Study 1/Solution 1 (sol1) ------------------------>

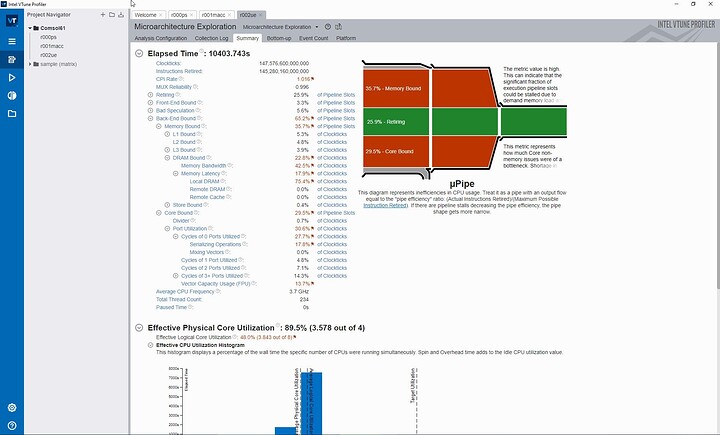

And here’s what VTune says is going on during simulation (this is actually from a Skylake system but it’s largely the same as the previous generations):