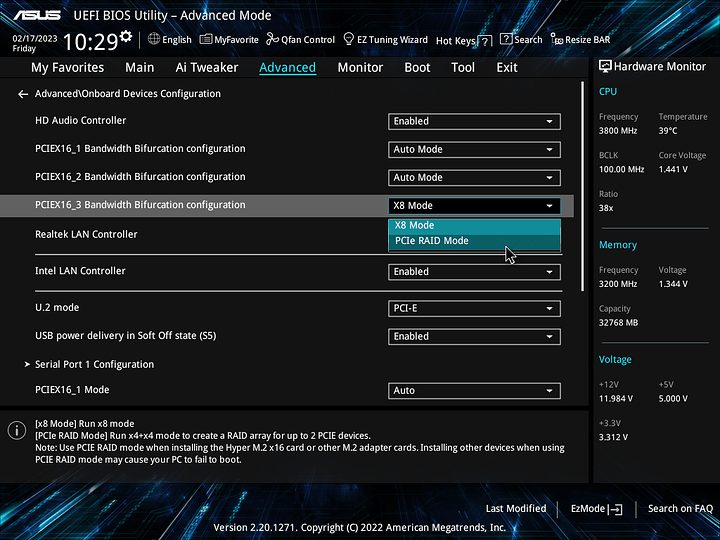

I finally updated the BIOS recently, and while browsing the options I noticed it is now possible to bifurcate the third PCIe slot into an x4x4 configuration! I’m pretty sure it wasn’t possible before, and a Google search seems to confirm it. It would be great if it really worked: two Gen3 drives could get close to full bandwidth in the chipset slot.
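For rough context on "close to full bandwidth", the raw link arithmetic can be sketched in shell. This is a minimal sketch assuming the standard PCIe line rates (8 GT/s per lane for Gen3, 16 GT/s for Gen4, both with 128b/130b encoding) and ignoring packet/protocol overhead, so real throughput lands somewhat lower. `pcie_mbps` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: raw PCIe link bandwidth in MB/s (10^6 bytes/s)
# for a given generation and lane count. Assumes 128b/130b encoding
# (Gen3/Gen4) and ignores protocol overhead.
pcie_mbps() {
  awk -v gen="$1" -v lanes="$2" 'BEGIN {
    if (gen == 3)      gts = 8    # GT/s per lane
    else if (gen == 4) gts = 16
    else { print "unsupported PCIe generation: " gen > "/dev/stderr"; exit 1 }
    # GT/s -> MT/s, remove encoding overhead, 8 bits per byte
    printf "%.0f\n", gts * 1000 * 128 / 130 / 8 * lanes
  }'
}

# One Gen3 x4 drive, two of them together (8 Gen3 lanes), and the
# Gen4 x4 link between the CPU and the chipset
echo "one Gen3 x4 drive:  $(pcie_mbps 3 4) MB/s"
echo "two Gen3 x4 drives: $(pcie_mbps 3 8) MB/s"
echo "Gen4 x4 uplink:     $(pcie_mbps 4 4) MB/s"
```

Two Gen3 x4 drives together ask for about the same raw bandwidth as the Gen4 x4 uplink, so they can only approach full speed while the other devices behind the chipset (SATA, USB, networking) are mostly idle.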

The option has been in the BIOS for a while. It’s great to have it.

At the cost of additional latency introduced by the hop through the chipset.

Yeah, that feature has been there for years; it’s one of the reasons I’ve been shilling for this motherboard model since late 2019.

It’s probably overlooked because ASUS calls PCIe bifurcation “PCIe RAID Mode”, as you can see in your screenshot. That’s quite misleading: it has nothing to do with the NVMe RAID option, which is obviously (!) located in the SATA menu.

Latency doesn’t suffer too much (tested with an Optane 905P). Maximum sequential speeds drop to about 6,500 MB/s through the chipset, compared with 7,300 MB/s directly off the CPU’s PCIe lanes (tested with Samsung PM1733 U.2 SSDs). Of course, there is the risk of saturating the PCIe Gen4 x4 link between the CPU and the chipset, and then things get worse.
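The drop from 7,300 MB/s to 6,500 MB/s quoted above works out to roughly an 11% sequential penalty. A minimal sketch of that calculation; `pct_drop` is a hypothetical helper, and the inputs are just the two figures from the paragraph.

```shell
#!/bin/sh
# Hypothetical helper: percentage drop from a baseline to a new value,
# rounded to one decimal place.
pct_drop() {
  awk -v base="$1" -v new="$2" 'BEGIN { printf "%.1f\n", (base - new) / base * 100 }'
}

# 7,300 MB/s off the CPU lanes vs. 6,500 MB/s through the chipset
echo "chipset penalty: $(pct_drop 7300 6500)%"
```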

It’s still my favorite home server motherboard; in my opinion, AM5 offerings are pretty meh here.

This mobo, when paired with fast RAM and a 5900X/5950X, lets you build a well-balanced system that packs quite a punch.

For this reason, I have several of them.

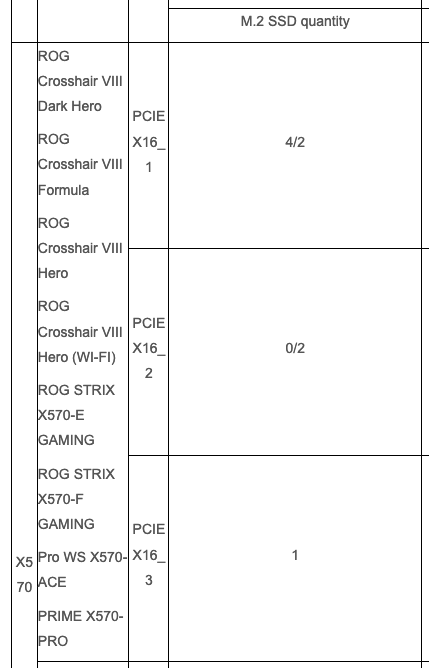

The reason I finally upgraded the BIOS and checked the setting is that I found a great deal on two 3.84 TB PM983 drives, and I want to put them on an extension card like the ASUS Hyper M.2 X16 V2 (they can be had for ~45 euro, now that’s good value!). But ASUS’ own docs say you can only have one drive in the third slot, as if it couldn’t be bifurcated.
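Once the card is installed, a quick way to tell whether bifurcation is actually active is to list the NVMe controllers the kernel sees. With a working x4x4 split, both drives on a passive card should enumerate; if the slot isn’t bifurcated, typically only the drive in the first M.2 position shows up. A minimal sketch, assuming Linux sysfs (`/sys/class/nvme`); `list_nvme` and its optional root argument (useful for pointing it at a copy of the tree) are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: list NVMe controllers known to the kernel and the
# PCI address of each one. The sysfs root defaults to /sys.
list_nvme() {
  sysfs=${1:-/sys}
  for ctrl in "$sysfs"/class/nvme/nvme*; do
    [ -e "$ctrl" ] || continue            # glob matched nothing
    # "device" links to the controller's PCI function, e.g. .../0000:05:00.0
    pci=$(basename "$(readlink -f "$ctrl/device")")
    echo "$(basename "$ctrl") $pci"
  done
}

list_nvme
echo "controllers found: $(list_nvme | wc -l)"
```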

Anyway I’ll post some results when I get the drives and the card later this week.

Instead of the Asus Hyper card, which is HUGE, see if you can find something similar to the following in Euroland:

Cheaper, smaller, and 2x m.2 is sufficient for the chipset PCIe slot.

Yeah, I was considering it, but I also read somewhere that these PM983s tend to run hot… So if I get the cheaper card and two 22110 heatsinks, the total price is again close to 40 pounds.

Or would you say the heatsinks are not really necessary?

Instead of going M.2, I’d recommend using U.2 SSDs in this carrier AIC:

https://www.delock.de/produkt/90091/merkmale.html?f=s

You can use three of those cards in the ASUS Pro WS X570-ACE.

Micron 7450 SSDs (the 3.84 TB and larger models) perform very nicely, and in contrast to Samsung, Micron offers proper end-customer support, such as publicly available firmware updates and warranty service.

Also, since Samsung has recently become the worst offender against Right-to-Repair, it should be boycotted.

My key issue is the size of the card. I have the smaller cards in 2U rack chassis as well as in desktop/4U-sized chassis.

Why not both? I like my m.2s next to my U.2 Optanes…

In my personal experience, U.2 SSDs offer…

- Often better price/capacity, especially for SSDs >= 4 TB

- Higher real-world performance than M.2 (consumer M.2 SSDs often advertise their best-case performance values, while enterprise U.2 SSDs advertise their worst-case numbers)

- Higher TBW

- Power-loss protection for data in flight

I like U.2 as much as the next person, and the PM983 wouldn’t be my first choice anyway, but I can get them really, really cheap (150 euro per unused drive), which I think makes it worthwhile to put up with some inconveniences. Just trying not to run into any major pitfalls!

Okay, took me a while to find some time to run the benchmarks, but for what it’s worth, here they are.

(By the way, the drives turned out to be Hynix PE6110, not Samsung after all.)

I used KDiskMark just to keep things simple.

Test partition was formatted with XFS.
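KDiskMark is a front end for fio, so a single test from its default profile can be approximated on the command line. This is a rough sketch, not KDiskMark’s exact invocation: the job parameters are assumptions mapped from the labels in the results (block size, queue depth `Q`, thread count `T`, 1 GiB test size, 5-second measurement), the target file path is hypothetical, and `kdm_fio` is a hypothetical wrapper where `DRY_RUN=1` only prints the command.

```shell
#!/bin/sh
# Hypothetical wrapper approximating one KDiskMark-style test with fio.
# Args: target file, fio rw mode, block size, queue depth (Q), jobs (T).
kdm_fio() {
  target=$1 rw=$2 bs=$3 qd=$4 jobs=$5
  set -- fio --name="${rw}-${bs}-q${qd}t${jobs}" --filename="$target" \
    --rw="$rw" --bs="$bs" --iodepth="$qd" --numjobs="$jobs" \
    --size=1g --runtime=5 --time_based \
    --ioengine=libaio --direct=1 --group_reporting
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"      # print the command only
  else
    "$@"           # actually run fio
  fi
}

# "Random 4 KiB (Q= 32, T=16)" read against a file on the XFS test partition
DRY_RUN=1 kdm_fio /mnt/test/fio.dat randread 4k 32 16
```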

**Single drive in the PCIE2 slot (CPU lanes)**

```
[Read]
Sequential 1 MiB (Q= 8, T= 1): 3202.744 MB/s [ 3127.7 IOPS] < 2532.28 us>
Sequential 128 KiB (Q= 32, T= 1): 3200.789 MB/s [ 25006.2 IOPS] < 1274.40 us>
Random 4 KiB (Q= 32, T=16): 1292.216 MB/s [ 323054.8 IOPS] < 395.80 us>
Random 4 KiB (Q= 1, T= 1): 50.610 MB/s [ 12652.6 IOPS] < 78.69 us>

[Write]
Sequential 1 MiB (Q= 8, T= 1): 2043.337 MB/s [ 1995.4 IOPS] < 3796.25 us>
Sequential 128 KiB (Q= 32, T= 1): 2029.608 MB/s [ 15856.3 IOPS] < 1988.75 us>
Random 4 KiB (Q= 32, T=16): 899.734 MB/s [ 224934.5 IOPS] < 568.37 us>
Random 4 KiB (Q= 1, T= 1): 249.639 MB/s [ 62409.8 IOPS] < 14.92 us>

Profile: Default
Test: 1 GiB (x5) [Measure: 5 sec / Interval: 5 sec]
Date: 2023-05-25 19:36:43
OS: arch unknown [linux 6.3.3-zen1-1-zen]
```

**Single drive in the PCIE3 slot (Chipset lanes)**

```
[Read]
Sequential 1 MiB (Q= 8, T= 1): 2920.824 MB/s [ 2852.4 IOPS] < 2786.76 us>
Sequential 128 KiB (Q= 32, T= 1): 2922.456 MB/s [ 22831.7 IOPS] < 1396.33 us>
Random 4 KiB (Q= 32, T=16): 1100.643 MB/s [ 275161.8 IOPS] < 464.95 us>
Random 4 KiB (Q= 1, T= 1): 46.711 MB/s [ 11677.9 IOPS] < 87.17 us>

[Write]
Sequential 1 MiB (Q= 8, T= 1): 1958.828 MB/s [ 1912.9 IOPS] < 3965.14 us>
Sequential 128 KiB (Q= 32, T= 1): 1969.667 MB/s [ 15388.0 IOPS] < 2050.65 us>
Random 4 KiB (Q= 32, T=16): 861.860 MB/s [ 215466.1 IOPS] < 593.44 us>
Random 4 KiB (Q= 1, T= 1): 218.142 MB/s [ 54535.6 IOPS] < 17.23 us>

Profile: Default
Test: 1 GiB (x5) [Measure: 5 sec / Interval: 5 sec]
```

Now, to test two drives simultaneously, I created an mdadm RAID0 array as follows:

```
# mdadm --create --verbose /dev/md0 --level=0 --raid-devices=2 /dev/nvme1n1 /dev/nvme2n1
```
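Before benchmarking, it’s worth confirming that the array really came up as RAID0 with both members. A minimal sketch that reads `/proc/mdstat`; the parsing assumes the usual `md0 : active raid0 nvme2n1[1] nvme1n1[0]` line format, and `md_check` is a hypothetical helper. The test filesystem would then go on top of the array with something like `mkfs.xfs /dev/md0`.

```shell
#!/bin/sh
# Hypothetical helper: print the RAID level and member devices of an md array.
# Reads mdstat-formatted text on stdin (normally /proc/mdstat).
md_check() {
  awk -v md="$1" '$1 == md && $2 == ":" {
    level = ""; members = ""
    for (i = 3; i <= NF; i++) {
      if ($i ~ /^raid[0-9]+$/) level = $i
      else if ($i ~ /\[[0-9]+\]/) {
        dev = $i
        sub(/\[.*$/, "", dev)          # strip "[n]" and any "(F)"-style flag
        members = members (members == "" ? "" : " ") dev
      }
    }
    print level ": " members
  }'
}

# For the array above, expect raid0 with nvme1n1 and nvme2n1 (order may vary)
if [ -r /proc/mdstat ]; then
  md_check md0 < /proc/mdstat
fi
```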

**2 drives in RAID0, PCIE2 slot (CPU lanes) set to Gen4 in BIOS**

```
[Read]
Sequential 1 MiB (Q= 8, T= 1): 5591.009 MB/s [ 5460.0 IOPS] < 1442.61 us>
Sequential 128 KiB (Q= 32, T= 1): 5625.446 MB/s [ 43948.8 IOPS] < 723.65 us>
Random 4 KiB (Q= 32, T=16): 2502.134 MB/s [ 625534.4 IOPS] < 204.24 us>
Random 4 KiB (Q= 1, T= 1): 50.046 MB/s [ 12511.7 IOPS] < 79.57 us>

[Write]
Sequential 1 MiB (Q= 8, T= 1): 4047.232 MB/s [ 3952.4 IOPS] < 1806.80 us>
Sequential 128 KiB (Q= 32, T= 1): 4016.320 MB/s [ 31377.5 IOPS] < 988.28 us>
Random 4 KiB (Q= 32, T=16): 1813.596 MB/s [ 453400.1 IOPS] < 281.28 us>
Random 4 KiB (Q= 1, T= 1): 248.187 MB/s [ 62047.0 IOPS] < 15.00 us>

Profile: Default
Test: 1 GiB (x5) [Measure: 5 sec / Interval: 5 sec]
```

**2 drives in RAID0, PCIE2 slot (CPU lanes) set to Gen3 in BIOS**

```
[Read]
Sequential 1 MiB (Q= 8, T= 1): 6226.750 MB/s [ 6080.8 IOPS] < 1294.68 us>
Sequential 128 KiB (Q= 32, T= 1): 6204.591 MB/s [ 48473.4 IOPS] < 654.22 us>
Random 4 KiB (Q= 32, T=16): 2543.412 MB/s [ 635854.3 IOPS] < 200.93 us>
Random 4 KiB (Q= 1, T= 1): 50.103 MB/s [ 12526.1 IOPS] < 79.48 us>

[Write]
Sequential 1 MiB (Q= 8, T= 1): 4065.177 MB/s [ 3969.9 IOPS] < 1797.92 us>
Sequential 128 KiB (Q= 32, T= 1): 4086.927 MB/s [ 31929.1 IOPS] < 974.78 us>
Random 4 KiB (Q= 32, T=16): 1818.282 MB/s [ 454571.3 IOPS] < 280.55 us>
Random 4 KiB (Q= 1, T= 1): 249.364 MB/s [ 62341.2 IOPS] < 14.93 us>

Profile: Default
Test: 1 GiB (x5) [Measure: 5 sec / Interval: 5 sec]
```

**2 drives in RAID0, PCIE3 slot (Chipset lanes) set to Gen3 in BIOS**

```
[Read]
Sequential 1 MiB (Q= 8, T= 1): 5864.548 MB/s [ 5727.1 IOPS] < 1372.90 us>
Sequential 128 KiB (Q= 32, T= 1): 5877.712 MB/s [ 45919.6 IOPS] < 691.93 us>
Random 4 KiB (Q= 32, T=16): 2234.582 MB/s [ 558646.4 IOPS] < 228.78 us>
Random 4 KiB (Q= 1, T= 1): 49.423 MB/s [ 12355.8 IOPS] < 80.62 us>

[Write]
Sequential 1 MiB (Q= 8, T= 1): 3843.885 MB/s [ 3753.8 IOPS] < 1916.53 us>
Sequential 128 KiB (Q= 32, T= 1): 3813.872 MB/s [ 29795.9 IOPS] < 1043.89 us>
Random 4 KiB (Q= 32, T=16): 1743.795 MB/s [ 435949.7 IOPS] < 292.58 us>
Random 4 KiB (Q= 1, T= 1): 215.968 MB/s [ 53992.1 IOPS] < 17.41 us>

Profile: Default
Test: 1 GiB (x5) [Measure: 5 sec / Interval: 5 sec]
```

I got the ASUS Hyper M.2 V2 (Gen3) card. As it turns out, it was a great choice because it comes with a massive heatsink, and these drives run very hot. They actually start to throttle after one or two benchmark runs, and they keep a permanent record of it in their SMART data. So it seems a heatsink is a must when using these enterprise drives.
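The temperature history the drives keep can be pulled out of `smartctl -a` output. A minimal sketch, assuming smartmontools’ NVMe output format with lines such as `Warning  Comp. Temperature Time:` and `Critical Comp. Temperature Time:` (labels may differ between versions); `thermal_counters` is a hypothetical helper and the device path in the usage comment is only an example.

```shell
#!/bin/sh
# Hypothetical helper: extract the NVMe composite-temperature counters from
# `smartctl -a` output on stdin. Per the NVMe spec these count minutes spent
# above the drive's warning and critical composite temperature thresholds.
thermal_counters() {
  awk -F':' '/Comp\. Temperature Time/ {
    label = $1; value = $2
    gsub(/ +/, " ", label)                 # collapse repeated spaces
    gsub(/^ +| +$/, "", value)             # trim the value
    print label "=" value
  }'
}

# Example usage (needs root and smartmontools):
# smartctl -a /dev/nvme1 | thermal_counters
```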

Thanks for the data. Quite interesting.

Going through the chipset incurs about a 10% performance penalty in these tests.

Also, setting the slot to the wrong PCIe link speed (Gen4 for these Gen3 drives) incurs a penalty.
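Both penalties can be computed straight from the posted KDiskMark read results. A minimal sketch: the rows below are copied from the results above, while the row labels and the `penalties` helper are hypothetical, just for illustration. By this arithmetic the chipset penalty ranges from about 6% to 15% depending on the test, and the Gen4 setting costs about 10% in sequential reads but under 2% in random reads.

```shell
#!/bin/sh
# Hypothetical helper: for each row "label baseline other" (MB/s), print the
# percentage by which "other" falls short of "baseline".
penalties() {
  awk '{ printf "%s %.1f%%\n", $1, ($2 - $3) / $2 * 100 }'
}

# CPU lanes vs. chipset lanes (read results from this thread)
penalties <<'EOF'
single_seq1M_Q8T1       3202.744 2920.824
single_rnd4K_Q32T16     1292.216 1100.643
single_rnd4K_Q1T1       50.610   46.711
raid0_seq1M_Q8T1        6226.750 5864.548
raid0_rnd4K_Q32T16      2543.412 2234.582
EOF

# RAID0 on CPU lanes: slot set to Gen3 (baseline) vs. slot set to Gen4
penalties <<'EOF'
gen4_seq1M_Q8T1         6226.750 5591.009
gen4_rnd4K_Q32T16       2543.412 2502.134
EOF
```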

Thanks! I just realised I made a copy-paste error and pasted the chipset results under the CPU/Gen4 header. This edit box is tiny! Fixed now.

The effect of the link speed setting was the biggest surprise for me. I thought the link would be configured as Gen3 anyway when a Gen3 device is detected, but there seem to be some additional complexities.
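One way to check what each drive actually negotiated is to compare its current and maximum link speed and width. This won’t explain the Gen4-setting penalty by itself, but it at least confirms that each drive trained at the expected Gen3 x4 rather than a degraded speed or width. A minimal sketch, assuming Linux sysfs, which exposes `current_link_speed`, `current_link_width`, `max_link_speed` and `max_link_width` for PCI devices; `link_status` is a hypothetical helper and the address in the usage comment is a placeholder (find yours with `lspci -D | grep -i 'non-volatile'`). `lspci -vv` reports the same information in its `LnkCap`/`LnkSta` lines.

```shell
#!/bin/sh
# Hypothetical helper: print negotiated vs. maximum PCIe link for one device.
# Args: PCI address (e.g. 0000:05:00.0), optional sysfs root (default /sys).
link_status() {
  dev="${2:-/sys}/bus/pci/devices/$1"
  if [ ! -d "$dev" ]; then
    echo "no such PCI device: $1" >&2
    return 1
  fi
  cur_speed=$(cat "$dev/current_link_speed")
  cur_width=$(cat "$dev/current_link_width")
  max_speed=$(cat "$dev/max_link_speed")
  max_width=$(cat "$dev/max_link_width")
  echo "$1: running ${cur_speed} x${cur_width}, capable of ${max_speed} x${max_width}"
}

# Example (placeholder address):
# link_status 0000:05:00.0
```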

I’ll run a few more experiments if time permits, but for the time being I suppose it could be something to keep in mind when using those M.2 extension cards.
