Hi, first time posting here, long time reader.

So I have an ASUS Prime X399-A, 2950X, 32GB RAM, set up with this card.

I can get all 4 of the NVMe drives to show up in a 4x4x4x4 setting. But when I actually create the RAID in Windows using striped (just looking for raw speed here), I get on average 1.5 GB/s using a Mellanox ConnectX-3 Pro Ethernet card. Updated to the latest firmware, all tuning done per the manual on NVIDIA’s site, NUMA set to the closest processor. I have tested multiple OS configs: Unraid, TrueNAS, Debian, Windows. I still can’t get the Threadripper to push this network card. I had another AM5 system lying around, and for kicks I installed the NIC there, and Windows to Windows I got around 2.7 GB/s with the same NVMe drives and NIC. I know it’s probably something I’m doing wrong.

Hi and Welcome!

I wasn’t sure from your post if you are having issues with the network card or the nvme…

Sorry for the confusion, but I think neither, just maybe settings on the network card. Oddly enough, I installed Windows Server 2022 and made sure RDMA was enabled, then got Windows 10 Pro for Workstations and enabled RDMA there, and now I’m getting a consistent 3GB/s over network transfers…

RDMA will help a lot, as you saw, because it bypasses much of the standard kernel network stack.

Sounds to me like you were using a basic SMB/Samba transfer method and the standard Ethernet frame size?

Test enabling jumbo frames on the NICs and the switch (if using one) and see how the transfer speed changes. Also test other methods like NFS and iSCSI. I believe iSCSI with RDMA should be the fastest. 10gb networks can usually gain a couple Gb/s by enabling jumbo frames on a large file transfer, and for 25gb and up you should always have jumbo frames on. Note that 40gb NICs also have some extra processing overhead because they bond together 4x10gb lanes internally. 56gb mode uses the same four lanes but runs them at the FDR rate (roughly 14gb per lane); on the InfiniBand side the move from QDR to FDR also changed the encoding from 8b/10b to 64b/66b, which removes most of the encoding overhead. I mention this because the Mellanox ConnectX-3 40gb NICs can often run in 56gb mode.
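For a rough sense of what jumbo frames change on the wire, here is a minimal sketch. It assumes IPv4 and TCP with no options (40 bytes of headers inside the MTU) and 38 bytes of Ethernet framing per frame; the function names are made up for illustration, and real traffic (SMB, RDMA, TCP options) will differ.

```sh
#!/bin/sh
# Percentage of on-the-wire bytes that is TCP payload for a given MTU.
# Assumption: 20 B IPv4 + 20 B TCP inside the MTU, plus 38 B of Ethernet
# framing outside it (8 preamble/SFD + 14 header + 4 FCS + 12 inter-frame gap).
payload_efficiency() {
    awk -v mtu="$1" 'BEGIN { printf "%.2f\n", (mtu - 40) / (mtu + 38) * 100 }'
}

# Full-size frames per second needed to fill a link of the given rate (Gb/s).
frames_per_second() {
    awk -v gbit="$1" -v mtu="$2" \
        'BEGIN { printf "%.0f\n", gbit * 1000000000 / 8 / (mtu + 38) }'
}

for mtu in 1500 9000; do
    echo "MTU $mtu: $(payload_efficiency "$mtu")% payload," \
         "$(frames_per_second 40 "$mtu") frames/s at 40gb"
done
```

Under these assumptions the payload share only moves from about 95% to about 99%, but the number of frames each side has to process per second at 40gb drops from roughly 3.25 million to roughly 0.55 million, which is usually where the CPU relief comes from.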

Wait, so my 40gb Mellanox can run 56 too? Hmm, let me get you the exact model #.

MCX314A-BCCT. I don’t use a switch, just an active DAC between the two PCs. As far as MTU goes, when I change it to, say, 9000, performance drops by a mile. I tried 9128 as well on both ends; I forget how I calculated that long ago.

It’s been way too long since I’ve done it myself and I don’t use the cards anymore, but yes, I believe it should. You will need to use a DAC cable capable of the FDR bitrate rather than a 40gb DAC, though.

Try this thread for some info

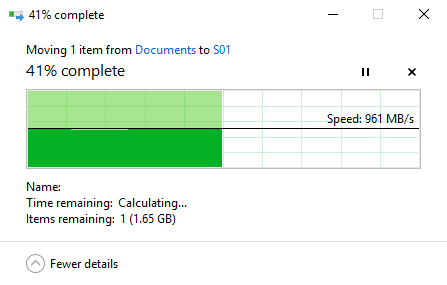

As for jumbo frames dropping performance, that seems really strange, as that is normally the solution to not being able to get full bandwidth. When I used CX3 NICs and ran tests, I had these results with the cards completely stock and a direct transfer between two PCs using SMB:

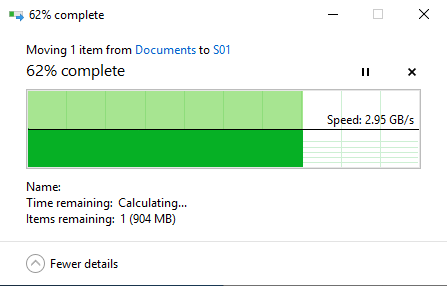

And the exact same config but with 9k jumbo frames enabled:

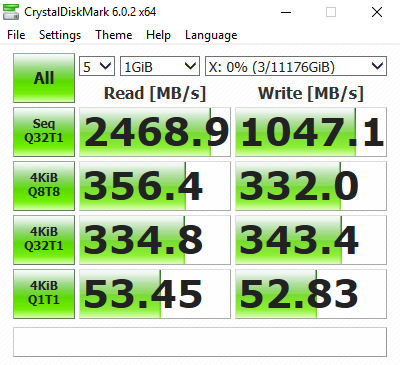

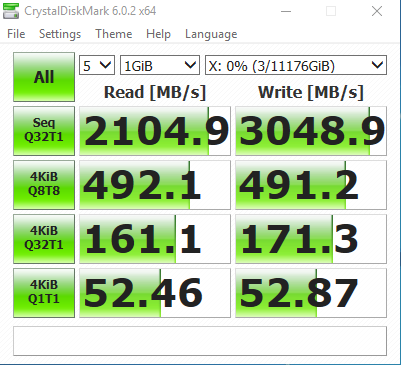

These were tested with Crystal Disk on the network shared drive over the connection, with normal and jumbo frames.

Tested with a mounted RAM disk on the server PC to eliminate disk bottlenecks, so the only limits are the network and the sharing protocol.

Interesting read for sure! I wonder if the AMD processor is the bottleneck… or only having 32GB of memory… I still think it’s settings inside the Mellanox adapter panel. I’ve followed NVIDIA’s manual on this and still can’t seem to get over 3GB/s, and it only holds 3GB/s for around 20GB of data, then drops to 2GB/s. Feels like a RAM issue or a send buffering issue. I could, I suppose, do a nttcp test. This is one of the fiber cables I’ve tried

Just created two 15GB RAM disks using ImDisk, and only got 1.9-2.1GB/s transfers.

Can’t help with the Windows side as I’m only using Linux.

However, through a lot of trial and error I found that changing the following settings will allow iPerf3 to pass 4GB/s through a Mellanox SX6012 switch.

/usr/sbin/ip link set mtu 9000 ${mlx_dev}

/usr/sbin/ip link set txqueuelen 20000 ${mlx_dev}

/usr/sbin/ethtool -G ${mlx_dev} rx 8192 tx 8192
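The three commands above expect `${mlx_dev}` to already hold the interface name. A minimal sketch of wrapping them so they can be previewed before running: `tune_mlx`, `DRY_RUN`, and the interface name `enp65s0` are made up for illustration, and the full `/usr/sbin/` paths are dropped on the assumption that `ip` and `ethtool` are on the PATH.

```sh
#!/bin/sh
# Print (DRY_RUN=1, the default) or run the three tuning commands
# for one interface. Running them for real needs root.
tune_mlx() {
    mlx_dev="$1"
    for cmd in \
        "ip link set mtu 9000 $mlx_dev" \
        "ip link set txqueuelen 20000 $mlx_dev" \
        "ethtool -G $mlx_dev rx 8192 tx 8192"
    do
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "$cmd"          # preview only
        else
            $cmd || return 1     # stop at the first command that fails
        fi
    done
}

# "enp65s0" is a placeholder; substitute the name shown by `ip link`.
tune_mlx enp65s0
```

Calling it as `DRY_RUN=0 tune_mlx <your interface>` would apply the settings. The values themselves are the ones from this post, not general recommendations, and the ring sizes only apply if the driver accepts them.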

On Linux the cards default to a 40gb connection, but either via ethtool or, in my case, via settings on the switch, you can make the connections negotiate 56gb.

Please note that the cables need to support 56gb (look for FDR cables). eBay is your friend.

There is a ton of protocol overhead when using these cards. As said above, RDMA is required for optimal performance.

Finally, I have never seen actual file transfer speeds >3GB/s. I don’t know if it’s a limitation of the network connection or simply that the file system/single thread copy (on either end) is the bottleneck.

So basically if I am getting 3GB/s file transfers I need to stfu and enjoy? lol

No, keep pushing and let me know what you did to improve the situation!

Theoretical maximum for 40gb is 5GB/s, but after some overhead probably more like 4.5GB/s. Then you get limited by things like CPU, memory, kernel stack, network protocol, drive speed, other network utilization.

The network protocol part can be changed by using something besides SMB, such as NFS or iSCSI.

The kernel stack can be bypassed with things like RDMA.

Drive speed is often plenty fast now with NVMe drives or a big RAM cache set up on the share.

I’d love to see more than 3GB/s, but that is where I topped out with a basic setup, nothing special, on the standard SMB protocol, and I just left it at that because it was the easiest.
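The 5GB/s and 4.5GB/s figures above are just unit conversion plus a rough derating, which can be sketched as follows. The 10% overhead is the estimate from this post rather than a measured value, and the function names are made up for illustration.

```sh
#!/bin/sh
# Convert a link rate in Gb/s to GB/s, optionally derated by an
# overhead percentage (second argument, default 0).
gbit_to_gbyte() {
    awk -v gbit="$1" -v overhead_pct="${2:-0}" \
        'BEGIN { printf "%.2f\n", gbit / 8 * (1 - overhead_pct / 100) }'
}

# How much of the raw line rate (Gb/s) a measured transfer speed (GB/s) uses.
percent_of_line_rate() {
    awk -v gbyte="$1" -v gbit="$2" \
        'BEGIN { printf "%.0f\n", gbyte / (gbit / 8) * 100 }'
}

echo "40gb raw:           $(gbit_to_gbyte 40) GB/s"
echo "40gb, 10% overhead: $(gbit_to_gbyte 40 10) GB/s"
echo "3 GB/s measured:    $(percent_of_line_rate 3 40)% of 40gb line rate"
```

So a steady 3GB/s file copy is about 60% of what a 40gb link could carry in theory, which leaves the rest to the CPU, memory, protocol, and disk limits listed above.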