https://teksyndicate.com/forum/pc-gaming/amds-strategy-mantle-trueaudio-steamos-and-more/155972

(This started off as a forum topic, but a friend of mine who's a bit more experienced with the Tek forum recommended I put the topic in the blog area. So here it is.)

This is pretty much me babbling on about my own thoughts and opinions regarding AMD's recent decisions, and my attempts to explain why they've made certain decisions. (Don't ask me for the references and links. There are too many, it's nearly 7AM and I've been working all night and haven't slept, and I'm lazy enough I could make Homer Simpson blush.)

First, let me start off by explaining some of the background info regarding their recent decisions:

AMD has attained the "holy trinity" of Videogame consoles: Sony Playstation (PS4), Microsoft XBox (XBox One), and Nintendo WiiU. This gives them a HUGE advantage in the gaming market, since this allows cross-platform games that run on consoles and PCs to have the already-added benefit of being optimized for AMD hardware.

Since the two main consoles (XBox One and PS4) both use an eight-core design with a GPU based on AMD's GCN architecture (the same one found in the HD-7000 series and R7 / R9 200 series), it's safe to speculate that we might see OpenCL programming taking place for the GPU (well, it's basically a glorified APU, but whatever...), and we'll also see optimizations done for more cores, meaning better multi-threaded coding should be included.

Also worth noting is that Windows 8 has really cooled the enthusiasm for the PC Market. It's been predicted that Windows 7 will become the next Windows XP, since Windows 8 isn't really living up to the hype. Windows 8.1 is promising to solve this issue, but AMD can't wait around. Even companies like Lenovo are including a Start Menu program to help users navigate through Windows 8 better. This means that even Microsoft's OEM partners have seen what a horrible idea MetroUI/ModernUI is, and how badly it's affecting their sales.

AMD has been in financial trouble lately, and is hemorrhaging money like there's no tomorrow. The GPU and APU markets are what AMD does seem to "get" right now, and you can bet they're counting on that to make a comeback against Intel and nVidia.

AMD has always been a big supporter of OpenSource. They've had good drivers for Linux for some time now. But Windows 8 has really shown that Windows might end up being the pitfall that ruins the PC Desktop industry; sales are down and they keep falling, except in the high-end gaming area. AMD would be absolutely ruined if the PC Desktop market crashed, since it's been known for a while that AMD doesn't perform well on laptops/notebooks, and AMD can't make a good ultrabook-like competitor to go head-to-head with Intel right now.

And well, that's the background info. It's a lot, I know, but let me get into what AMD is choosing to do right now, and why:

With the announcement of SteamOS, AMD has a big advantage: they don't have to do a lot of coding to make great drivers for Linux, which gives them a short-term advantage. And with the "console trinity" under the AMD belt, that means they can deliver a new API to help bring gamers (their core consumer market) to Linux in order to better replace DirectX (which is Microsoft Windows exclusive, for those who don't know and might have been living under a rock in Bikini Bottom for the past decade).

This is where AMD Mantle comes in. AMD Mantle means that Game Devs can get better performance, and it'll be more stable across different platforms (such as consoles and PCs), which means less time developing, which means cheaper games to develop (which means lower sale prices, or more profit... no points for guessing what EA execs will pick).

But AMD made Mantle an open thing... why? Well, first, it'll be great marketing down the road, which is a great long-term strategy for AMD to help boost sales. It also gives them a great short-term advantage, because now nVidia will have to figure out a way to optimize their drivers to work with Mantle... but AMD made it, so they might have a slight advantage, and this has probably been in their skunkworks for a while now as well, meaning there's probably a lot of optimization that's been going on behind the scenes... but there's another, much more important reason:

AMD wants to attract developers to Mantle. If they made it closed-platform, it would make companies feel locked in, which is bad. It's the opposite of the CUDA philosophy, and it's one of the reasons Adobe went with OpenCL (as did other content creation software). By making Mantle open, they've created something very nice: a platform for developers, especially Game Developers. This means AMD now has a whole eco-system for GameDevs to work on that's fully-featured and ready for console development and PC development. It means less work, less time wasted, and it'll be faster (hopefully). By attracting more developers to use their technologies, it means more developers will optimize their code for AMD products, which gives AMD better performance in benchmarks and (more importantly) real-world usage, which will give a long-term boost in performance and (consequently) sales (which will be driven by marketing pamphlets featuring big graphs with benchmark results of AMD products beating the competition).

With their TrueAudio technology, they can now make games more immersive. Sure, it doesn't sound like much, but it'll be that extra little touch PC gamers and PC Hardware enthusiasts will really like, not to mention those audiophile guys (stay away from my music! keep your ears to yourself! mute means mute!... - I had to get that out of my system, sorry guys). It's also a great way to take some of the burden off of the less-powerful single-threaded performance of AMD CPU cores, by passing said burden to the GPU.

With this, now AMD has a technology which is (hopefully!) better than DirectX. AMD seems to want to make Mantle a technology which can improve performance for multi-core CPUs (like those glorified 8-core console APUs that the PS4 and XBox One are using). This means AMD FX CPUs and AMD GPUs are now going to have a slight advantage.

And by supporting SteamOS from the get-go, we'll soon see a lot of the performance bottleneck from Windows go away. This might even (at some point) make 4K gaming at 60fps (or more!) possible using an R9 290X, or maybe even an R9 280X!

4K gaming might be a very, very interesting thing indeed. We haven't been able to go that route for some time because, until now, we haven't had 4K-compatible standards, whether for cables, VESA standards or monitors. However now we have VESA 1.3, HDMI 2.0, and with the new VESA standard we'll also see automatic 4K detection and configuration, so it'll be transparent to the end user.

4K gaming won't come to consoles anytime soon, though, because the consoles just don't have the horsepower and they also don't have HDMI 2.0 or DisplayPort 1.2 connectors available. That means that Microsoft and Sony would have to release updated consoles. That's not to mention the performance drop in framerate and frame latencies if we did see them jump to 4K by putting on new connectors without increasing the amount of GPU horsepower on their consoles.
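To put some rough numbers on the connector and horsepower point above, here's a back-of-the-envelope sketch. It assumes 24-bit color and ignores blanking intervals (so real requirements are a bit higher), the link capacities are the approximate usable video bandwidths commonly quoted for each standard, and the function names are just mine for illustration:

```python
# Back-of-the-envelope check: uncompressed pixel data rate vs. link capacity.
# Assumptions: 24 bits per pixel, blanking intervals ignored, so the real
# requirement is somewhat higher than what is computed here.

def pixel_rate_gbps(width, height, fps, bits_per_pixel=24):
    """Raw (active pixels only) video data rate in Gbit/s."""
    return width * height * fps * bits_per_pixel / 1e9

# Approximate usable video bandwidth after 8b/10b encoding, in Gbit/s.
LINKS = {
    "HDMI 1.4": 8.16,
    "HDMI 2.0": 14.4,
    "DisplayPort 1.2": 17.28,
}

def links_that_fit(width, height, fps):
    """Names of the links whose usable bandwidth covers the raw pixel rate."""
    need = pixel_rate_gbps(width, height, fps)
    return [name for name, cap in LINKS.items() if cap >= need]

if __name__ == "__main__":
    modes = {"1080p": (1920, 1080), "4K UHD": (3840, 2160)}
    for label, (w, h) in modes.items():
        rate = round(pixel_rate_gbps(w, h, 60), 2)
        print(label, "@60:", rate, "Gbit/s ->", links_that_fit(w, h, 60))
    # 4K has four times the pixels of 1080p, so the GPU has roughly
    # four times as many pixels to shade per frame.
    print("pixel ratio:", (3840 * 2160) / (1920 * 1080))
```

So 4K at 60fps needs roughly 12 Gbit/s of raw pixel data, which is more than HDMI 1.4 can carry, and the GPU has to push four times the pixels of 1080p on top of that. 4K at 30fps does fit in these numbers, but that's not exactly what gamers are after.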

AMD decided to do what they did for a very precise, calculated, clinical reason. They saw Windows bottlenecking the performance. Many games weren't going to appear on PCs because of the development cost, now and in the future. And lastly, they also saw the drop in sales of desktops due to Windows 8 and MetroUI/ModernUI. After all that, going to Linux might have helped them, but if Linux had nothing to offer gamers and users in terms of games and/or software, it would have been a tremendous flop (like the new Blackberry OS, which, despite being a great mobile OS, had almost no apps due to almost no userbase).

So Valve and AMD helped scratch each other's backs. Valve made an OS that was meant for AMD's core audience, and AMD made drivers and the development platform needed for an en-masse migration to Linux, in an effort to increase Desktop PC sales and game sales. And it seems very promising, at least so far.

I'm hopeful this strategy works out. AMD has put a lot of effort into Mantle, and TrueAudio seems like a good idea.

If game engines decide to support Mantle en-masse (like Frostbite has already done, Unity from what I've heard, and others), this might be a very interesting thing. And if we see OpenCL middleware for things like in-game physics, we might also see a lot of improvements in gaming performance.

Where AMD goes from here is anyone's guess. AMD seems to be holding out on developing new GPUs right now because it just needs to have a product to compete with the GTX Titan and the GTX 780 at a more reasonable price. If it can do so in the meantime and hold out until 22nm and 20nm are here (in Q2 2014 from what I've heard, or maybe Q3 2014), then we'll see AMD come out with new APUs, new GPUs, and maybe even new CPUs.

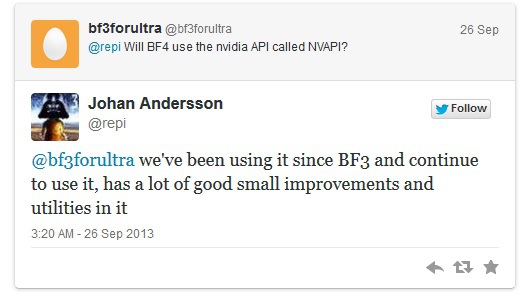

Here's what I think Intel and/or nVidia might prepare as a counter-offensive measure. First, Intel might rethink a lot of their strategy of keeping us stuck at four cores forever. If AMD can put on enough pressure to force Intel to make that change and add more cores to their mainstream line of CPUs, it might take away from their Workstation buyers, which might hurt them in terms of profitability (even if it's only a dent). nVidia might try to entice game developers and game publishers by giving them millions of dollars in game deals for nVidia-exclusive giveaway codes (since AMD has been known to offer great game deals thus far), or they might even give game developers or game publishers money to only let nVidia optimize their drivers before the game is released... or possibly to get the coders to put in in-code optimizations for nVidia-based GPUs. This kind of thing has been well-known for some time now, and we've seen it time and time again.

Not to mention that Sony and Microsoft may feel betrayed by AMD letting PC users get access to Mantle technology, too, and it will be interesting to see whether Sony or Microsoft will allow GameDevs to use Mantle or not in their own proprietary, console-specific OS's.

What intrigues me is that right now most AMD GPU and CPU users are running Windows, which raises the question: how will Microsoft react to Mantle, SteamOS and AMD's new-found alternative to Windows? Will Microsoft play dirty or not? Only time will tell. But we do live in interesting times, at least for news regarding PC Hardware and PC Software right now anyways.

Anyways, that's all for now. Did I forget anything? (Except the references and links; don't bother asking me for those, it'd take a bajillion-and-a-half milliseconds for me to look that up and who has the time anyways?... pay no attention to the man behind the screen who just typed a metric butt-ton of text.)

Sorry for the colossal text, guys. But in the words of the eternal bard Shakespeare: "To be, or not to be? tl;dr yolo"

** UPDATE ** : AMD recently announced that Mantle is *not* going to work with XBox One. It's meant for PCs, but since AMD has not announced whether or not Mantle works with Sony's PS4, we might just get a surprise, although I wouldn't hold my breath.

http://www.techpowerup.com/192549/xbox-one-doesnt-support-amd-mantle-api.html

http://www.techpowerup.com/192552/amd-explains-why-mantle-doesnt-work-on-xbox-one.html