Where are the pics + benchmarks!

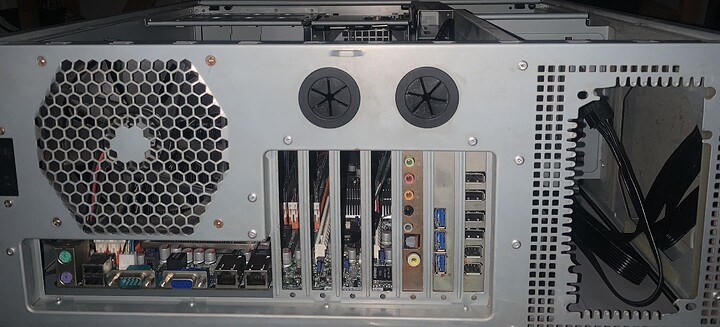

I picked my full tower case up from the climate controlled storage today. The aluminum side panels have a dusting of oxidized aluminum. Now to pull the old dual Xeon with 24gb of ddr3 so I can install the new MB.

I need to move some motherboard standoffs in order to install the motherboard. Mine are round with no flat spots. I have some channel locks that may work to remove them, but it is nearly midnight here so that is a project for another day.

Probably the soonest I can work on this is 5PM CDT on Saturday.

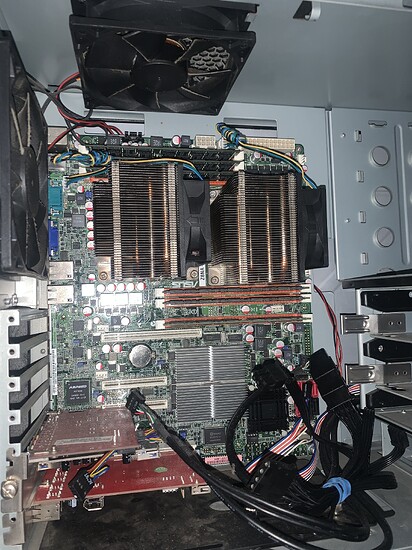

Motherboard installed.

The standoffs were riveted in place. Channel lock pliers pried them off.

Now one of the 8 pin motherboard connectors doesn’t match the 8 pin connectors from my power supply. The other one does. ATX+4 is connected. I need to find the additional power lines I last used in 2009 (or order spares).

I should have done this while waiting for the cpu…

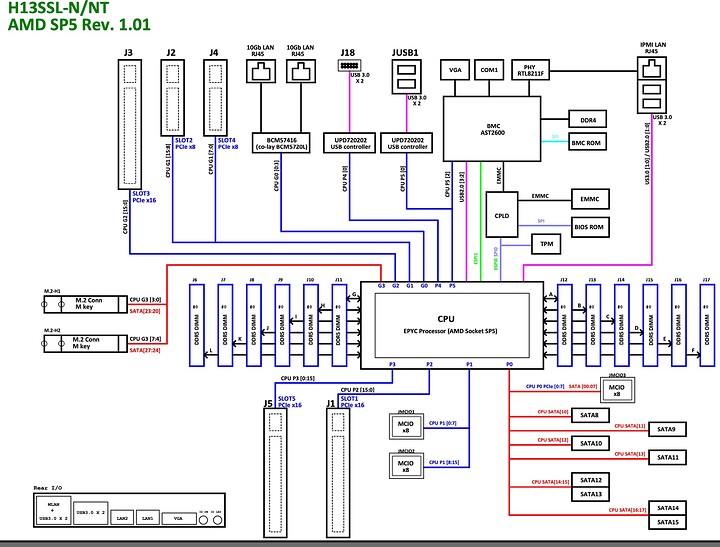

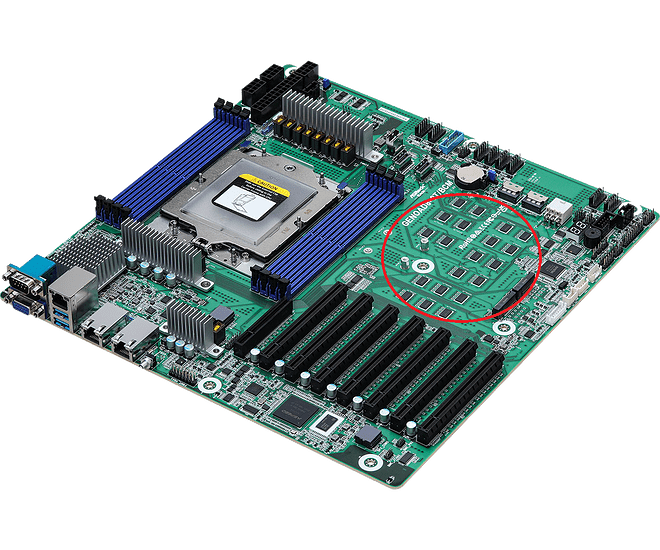

What’s up with the PCI express slots for these boards? I count 5 slots total, only 3 of which are 16 lanes? Threadripper boards usually have 7 full-length PCIe x16 slots, though often one or two are x8 lanes.

Do these boards use a lot of U.2 or other things that spend the PCIe lanes?

So I searched for some specs and found the board here: H13SSL-N | Motherboards | Products | Supermicro

So it has:

- 64 lanes spread over 5 PCIe ports, only 3 of which are full length x16.

- Only two M.2 slots, PCIe 4 rather than 5, and neither appears to be cooled at all.

- Only 6 USB3 ports (including all internal headers).

- Dual 1Gbit LAN (broadcom) + 1 IPMI management LAN.

- 8x SATA ports.

However, it also sports MCIO connectors, which I have never heard of. It appears to be PCI Express via cable?

So where did all the PCI express lanes go? Unused on this motherboard? Genoa has 128 PCIe 5.0 lanes for single socket systems, so why use PCIe 4.0 on the M.2 slots?

All very confusing to me. This stuff is stupid expensive, but even the lowest B650 AM5 board has M.2 with PCIe 5.0, so I am a bit confused…

Some more bizarre things I stumbled upon:

Please note - many Supermicro parts are only sold in combination with a complete tested system order to protect our own assembly line due to the shortages.

Also, some Supermicro barebone systems require a minimum amount of CPU/memory/HDD/SSD/NVMe.

For these reasons, some orders might be cancelled as they are not accepted by Supermicro or Ahead-IT.

Can anybody confirm this? Or is this EU only and in US you can actually just buy this board?

Running total of lanes, with what each step adds:

- 64: 64 lanes for slots

- 88: +24 lanes for MCIO

- 96: +8 for 2 M.2

- 100: +4, 10GbE is PCIe 3, probably 4 lanes

- 102: +2 IPMI

- 110: +8, 2x PCIe 3 USB3 controllers with 2 USB3 ports each

18 lanes unaccounted for.
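For anyone who wants to redo this tally with different guesses, here is a minimal Python sketch of the same running total. The per-item lane counts are the estimates from the post above (several are explicitly guesses), and the names `estimates` and `tally` are just made up for illustration.

```python
# Running tally of PCIe lanes using the estimates from the post above.
# The lane counts for 10GbE, IPMI and USB3 are guesses, not block-diagram figures.
TOTAL_LANES = 128  # single-socket Genoa, as stated earlier in the thread

estimates = [
    ("PCIe slots", 64),
    ("MCIO connectors (3 x 8)", 24),
    ("two M.2 slots (2 x 4)", 8),
    ("10GbE controller (guess)", 4),
    ("IPMI (guess)", 2),
    ("two USB3 controllers (guess)", 8),
]


def tally(items, total=TOTAL_LANES):
    """Return the running totals and the number of lanes left over."""
    running = []
    used = 0
    for name, lanes in items:
        used += lanes
        running.append((name, lanes, used))
    return running, total - used


running, unaccounted = tally(estimates)
for name, lanes, used in running:
    print(f"{used:>4}  +{lanes:<3} {name}")
print(f"{unaccounted} lanes unaccounted for")
```

Changing any of the guessed entries shows right away how the unaccounted number moves.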

The 3 mcio ports each handle 8 lanes of pcie.

I got a $20 double ended symmetrical cable good for pcie4

and a $16 mcio to dual x4 m.2 adapter card.

With these, each mcio can give me a cheap pair of m.2 ports.

Unlike am4 and am5, there is not the choice between pcie and sata, I get both.

EPYC does bifurcation whatever way I want it, and the motherboard supports that.

I got the NT model so I also got dual 10g ethernet.

I NEED ECC. I have lost 1.5 years of my professional career to RAM errors that would have been prevented with ECC RAM. If I have a choice in the matter, I am going to use ECC RAM in my personal computers. The AM5 motherboards that support DDR5 ECC are not yet shipping.

And there are 12 channels of ddr5 with ecc. So far I am using 2x 32GB each, but in the future after they become more common I can get larger pieces. And 12x 32GB is 384GB, which is still 3 to 6 maxed out AM5 systems. 64GB pieces are only 120% the price of 32GB pieces, so 768GB is still a reasonable price, especially compared to buying 6-12 AM5 PCs.
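The capacity comparison works out like this in a quick Python sketch. It assumes one DIMM per channel and an AM5 ceiling of either 128GB or 64GB per system; those two ceilings are only what the "3 to 6" and "6-12" figures imply, not something looked up, and `equivalents` is a made-up helper name.

```python
# Capacity arithmetic for 12 memory channels, one DIMM per channel (assumption).
CHANNELS = 12
# Assumed AM5 per-system maximums, inferred from the "3 to 6" and "6-12" figures.
AM5_MAX_GB = (128, 64)


def equivalents(dimm_gb):
    """Return total capacity in GB and how many maxed-out AM5 systems it equals."""
    total = CHANNELS * dimm_gb
    return total, [total // am5_max for am5_max in AM5_MAX_GB]


for dimm_gb in (32, 64):
    total, systems = equivalents(dimm_gb)
    print(f"12 x {dimm_gb}GB = {total}GB, about {systems[0]} to {systems[1]} AM5 systems")
```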

My last PC was an intel NUC. That computer was so frustrating in its limited expansion. The computer before that was a dual socket 1366(I think) xeon workstation, which was much less frustrating to work with.

I had an urgent need to deploy a computing cluster in Jan 2023 in order to show a working proof of concept, and ran face first into the wall of unsupported hardware due to microcode updates to CPUs (lots of Intel security holes). Basically all of my PCs were unusable for even a proof of concept. I could have gone AM4 or AM5, but I tend to use a lot of RAM, and this build lets me add RAM as needed without also having to build additional systems. I eventually got the proof of concept working, but my aging hardware held me back about 9 days. This gets me current. I have usually been in server administration. I have been supporting FreeBSD, Linux etc. since 1996. For my work, CPU usage has not been a limitation. IO and RAM capacity have always been the limiting factors. This system will effectively remove those limitations.

I also have an Apple M2 MacBook Air 24GB 2TB, which complements the server. I can do most things on my laptop. There are some things a laptop sucks at. For those, this computer should excel.

I am having an atx 8 pin cable shipped here by Thursday, and full pack of cables by the following Thursday. The first cable should be enough for me to turn it on.

My power supply only shipped with one 8 pin power connector. The other one I bought arrived yesterday. Today the package with an assortment of computer screws arrived, so I can now install the motherboard standoffs.

I think I have all of the components now.

Still to be installed:

Motherboard stand-offs

More than 2 screws connected to standoffs

Power supply,

the second 8 pin power connector

Gpu 6-8 pin (gpu is installed)

Heatsink

Ram

A significant portion of the household income comes in during an event that runs from Thursday two days ago through Sunday the day after tomorrow. And then I am out for 4 days. I will get stuff done as I have time.

A bit closer,

Motherboard stand-offs installed

All screws for motherboard installed, even the one under slot 1 m.2

Heat sink installed

All power connections connected

Both optane 118GB installed

gpu installed and powered

Ubuntu 22.10 downloaded and boot disk created

Now where did I put the ram…

Probably more progress tomorrow.

The cpu lanes can be configured to be PCIe lanes or sata, so that will account for at least 8 more of the 18 lanes

Please let us know the temps in a full load. I am looking to build a similar system, but with a “9374F” and I am struggling to find an appropriate cooler for it, the temps are worrying me.

Also if you are interested to add some more fans for the VRM look into these “https://www.aliexpress.com/item/1005003469260210.html?spm=a2g0o.order_list.order_list_main.5.21ef1802iy37T4”.

Thank you!

I counted 8 of the lanes as PCIe. There are another 6 motherboard SATA ports, which makes sense alongside the 2 PCIe lanes for IPMI.

Below is a probable 16x set of pcie/sata

2 ipmi, already counted

6 Sata

8 pcie or Sata, already counted

Now 12 lanes are unaccounted for.

TPM is in there somewhere, I have the module for it.

Don’t want to derail the thread too much, but I just came across an interesting SP5 board from ASRockRack with 8 (!) PCIe slots but only 8 RAM channels:

https://www.asrockrack.com/general/productdetail.asp?Model=GENOAD8X-2T/BCM#Specifications

I saw that they added that board earlier this week too. I don’t think I’ve ever seen a motherboard with that many pcie switches on it before.

Where are the PCIe switches? Stock, all EPYC 9004 CPUs have 128 PCIe 5 lanes, which translates to eight x16 PCIe 5 slots. In this case they only offer 7, with the 8th usually being x8, and the other 8 lanes providing motherboard services.

All these guys:

I’m assuming it’s because of the extra M.2 slots and the MCIO connectors that account for more PCIe lanes than Genoa has; no block diagram to confirm yet though.

How’s the build going, @MikeGrok? I just got the H13SSL-NT in; newegg was back-ordered. If anyone has a lead on MCIO cables, I’d like to go 74pin to 2x NVME, but I can’t find them anywhere.

Glad you’re feeling better!

To clarify the lane routing, Genoa has 8 main multi-function I/O interfaces, G0-G3 and P0-P3. Each is 16 lanes and can run PCIe 5. P0 and G3 can have any of their lanes configured for SATA.

There are two supplementary interfaces, P4 and P5, each with 4 lanes of PCIe 3.

So from the H13SSL-NT block diagram:

- G0 - 12 unused: 4 PCIe3 lanes for the BCM57416 ethernet controller

- G1 - fully allocated: 8 lanes for PCIe slot 4, 8 lanes for slot 2

- G2 - fully allocated: 16 lanes for slot 3

- G3 - 8 unused: 4 lanes each for M2-H1 and M2-H2

- P0 - fully allocated: 8 lanes PCIe/SATA for JMCIO3, 8 for SATA ports

- P1 - fully allocated: 8 lanes each for JMCIO1 and JMCIO2

- P2 - fully allocated: 16 lanes for slot 1

- P3 - fully allocated: 16 lanes for slot 5

So that’s 108/128 allocated, 20 free. It’s not unusual to have many free, the board density required to run so many lanes in an ATX form factor is challenging.
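The totals above can be double-checked with a short Python sketch. The per-interface numbers are copied straight from the list in this post; the dictionary layout and names such as `allocation` are just one hypothetical way to write it down.

```python
# Lane allocation per Genoa I/O interface, copied from the list above.
# Each main interface (G0-G3, P0-P3) has 16 lanes.
LANES_PER_INTERFACE = 16

allocation = {
    "G0": {"BCM57416 ethernet": 4},
    "G1": {"slot 4": 8, "slot 2": 8},
    "G2": {"slot 3": 16},
    "G3": {"M2-H1": 4, "M2-H2": 4},
    "P0": {"JMCIO3": 8, "SATA ports": 8},
    "P1": {"JMCIO1": 8, "JMCIO2": 8},
    "P2": {"slot 1": 16},
    "P3": {"slot 5": 16},
}

# Lanes used and left free on each interface.
used = {name: sum(devices.values()) for name, devices in allocation.items()}
free = {name: LANES_PER_INTERFACE - lanes for name, lanes in used.items()}

total_lanes = LANES_PER_INTERFACE * len(allocation)
total_used = sum(used.values())
total_free = sum(free.values())

for name in allocation:
    print(f"{name}: {used[name]:>2} used, {free[name]:>2} free")
print(f"{total_used}/{total_lanes} allocated, {total_free} free")
```

Only G0 and G3 show free lanes, which matches the 12 and 8 unused listed above.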

The supplementary interfaces P4 and P5 are used for PCIe 2 lanes: 1 lane each to a couple of USB3 controllers, and 1 lane to the BMC.