Hello guys, does anyone here use the Gigabyte MZ31-AR0?

Since there are so many ROMED8-2T owners around here: any idea how many amps I can draw from each individual fan header? I am having clearance issues with a PCI card (technically, I am playing Tetris with a few). Consolidating some chassis fans onto one header (with Y splitters) would geometrically solve the problem …

I’ve never seen the spec on the fan header amperage limits anywhere.

Since these boards use the proprietary 6-pin fan headers meant for 2U-4U server fans, I bet they can handle a lot, considering someone might fit a Dynatron or Delta fan cooler.

A standard motherboard fan header can supply 1A @ 12V. Lots of other headers are rated for 2A or even 3A for water cooling or AIO coolers.

So I don’t think that putting a couple of standard PC fans on a fan splitter is going to cause any issues.

Just for future reference, 4 fans on one header, staying well below 1A in total, is not an issue at all. Tried it, it worked. Thanks @KeithMyers

@s-m-e

We folks in the X570D4U-2L2T thread have confirmation from ASRock Rack support that those 6-pin headers are rated for 4A. I’m not sure if they’re all the same across all their boards though, and SP3 boards might have even more juice on the headers.

4A is plenty unless you’re going full jet engine with 15k rpm fans.
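To put numbers on the splitter question, here is a minimal sketch of the budget check. The per-fan currents are hypothetical placeholders (read the real rating off each fan's label), the 80% safety margin is my own assumption to leave room for spin-up current, and the 1 A and 4 A limits are simply the figures quoted above, not something verified for any particular board.

```python
# Rough current budget for fans sharing one header via a splitter.
# All per-fan currents below are hypothetical; use the amp rating on each fan's label.

def header_load(fan_currents_a, header_limit_a, margin=0.8):
    """Return (total current in A, True if the total fits within margin * limit)."""
    total = sum(fan_currents_a)
    return total, total <= header_limit_a * margin

# Four quiet chassis fans on a header assumed to be rated for 1 A.
quiet_total, quiet_ok = header_load([0.15, 0.15, 0.15, 0.15], header_limit_a=1.0)

# Three high-rpm server fans on a header assumed to be rated for 4 A.
loud_total, loud_ok = header_load([1.5, 1.5, 1.5], header_limit_a=4.0)

for label, total, ok in (("quiet", quiet_total, quiet_ok), ("loud", loud_total, loud_ok)):
    print(f"{label}: {total:.2f} A -> {'fits' if ok else 'over budget'}")
```

With these made-up numbers the four quiet fans fit comfortably, while three 1.5 A fans would exceed even a 4 A header.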

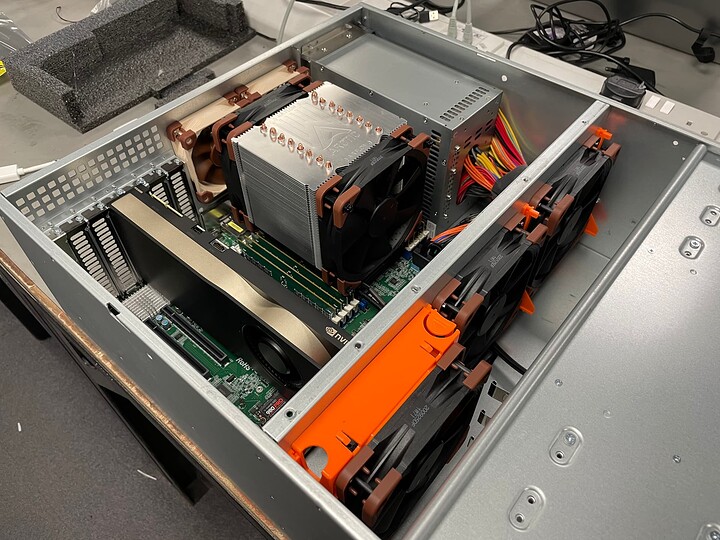

Latest iteration of 7443P build, this time using the Arctic Freezer 4U SP3 cooler.

The fans it came with are dogsh!t, they went straight in the bin. I strapped 2x Noctua Industrial 3000rpm to it instead, running at 1800rpm.

Runs at ~51degC with all 48 threads maxed out running Prime95, 3100 MHz all-core boost, ~205W package power.

This is in contrast to the ~72degC of my previous builds using Noctua SP3 92mm coolers. Incredible difference.

Thanks for the review of the Arctic Freezer 4U solution. That is a big difference over the Noctua solution, much more than just the additional heatpipes (6 vs. 8). More finstack surface area and the great fans are probably the biggest factors.

Such a pretty build! Still debating going for 2nd gen Rome now or waiting for Intel’s new HEDT release or whatever it is… although I’m tempted to bite the bullet now and upgrade and not worry about new products.

There’ll always be something faster coming along real-soon-now. Whether or not it’ll actually be in stock is a whole other matter these days…

And has anyone priced up DDR5 RDIMMs? I suspect the pricing will be rather unpleasant for quite some time…

For you folks with onboard 10G-NICs, what sort of temps are you seeing in operation?

In a 26C room, my CPU is hovering at 29-30C idle, while the NIC is sitting at 71C on my H12-SSL-NT.

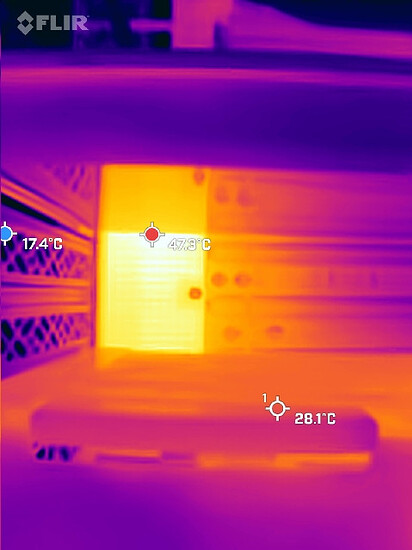

ASRock Rack ROMED8-2T with onboard X550-T2, both ports connected at 10G, 20 C ambient, slight airflow from 3 silent fans at the front of the case:

- IPMI sensor : 59 C

- FLIR : 47 C on the heatsink

My Mellanox ConnectX-4 Lx add-in cards are similar (2x optical 10G): 55 C and 57 C

https://www.mindfactory.de/Hardware/Arbeitsspeicher+(RAM)/DDR5+ECC+Module.html

Unpleasant prices, but this is the first retailer where I was able to spot some RDIMMs. Around +75-100% per GB compared to DDR4 RDIMMs. Have fun populating all 12 channels on Genoa.

Hey fellow ROMED8-2T (and 7443p) users - I am currently running with 4 RAM sticks of 16 GB each (Samsung, DDR4-3200, CL22-22-22, reg ECC, 2 Rank → M393A2K43DB3-CWE) running beautifully in 4 channels.

I am dealing with a project where I (sooner than intended) need to up that a “little”, to 256 GB total at least. I am considering going for 4 sticks of 64 GB, same type of RAM, same manufacturer (so I can eventually add yet another 4 as needed). Samsung has two options in its portfolio, each labeled as “Samsung RDIMM 64GB, DDR4-3200, CL22-22-22, reg ECC, dual rank, x4”:

- M393A8G40AB2-CWE

- M393A8G40BB4-CWE

The second option goes for 1.3 times the price of the first. Technically, digging into data sheets, even the chips appear to be the same except for a “v1” for the first option and “v2” for the second option in their type IDs. Option one was apparently first listed in about 2019 while option two apparently appeared in 2021.

Any ideas and/or experiences in terms of compatibility or other related issues?

Fun side question: I intend for my 16 GB sticks to become spare parts, but would it theoretically be possible to run this thing in 8 channel mode fully populated with 4x 64 and 4x 16 GB sticks? I remember servers populated with a mix of 1 GB and 4 GB sticks “in the old days” being able to do stuff like this.

From my investigations, I find no difference in specs or pricing for those two part numbers.

AFAIK, you can’t run asymmetric channels for memory on Epyc.

The modules all have to be the same.

Update: I got the replacement for the DOA front fan of my Arctic Freezer TR4-SP3, after a long wait. Adding it lowered the temps under full load from 64c to 59c in back-to-back testing at the same ambient conditions, so you were right: it makes quite a difference with both fans.

Although I still see up to 65c with both fans at 1500rpm, i.e. they don’t reach their max - so the default BMC fan settings of the ROMED8U-2T might not be well optimized for this cooler.

Looks nice - how did you attach the fans to the cooler, are those screws or some kind of straps?

Also did you compare temps with the Noctua fans vs. original fans on the AF cooler?

My ROMED8U-2T has an Intel X710, and I seldom see it go above 40c given normal ambient conditions (20-25c). At idle it’s now at 38c, with the idle CPU at 33c.

It’s only attached to a 1GbE network though, so I’m not sure if that’s a relevant comparison. I don’t have any 10GbE peers to connect it to yet.

From the specs, the X710 is supposed to run a bit cooler than both the Broadcom 10GbE chip you have and the Intel X550-T2.

Thermal considerations were my original reason to go with the H12SSL-i instead of the H12SSL-NT. I feared the onboard 10GbE on the latter would run too hot, given that I use a desktop chassis. From your post it looks like my assumption was correct. You still use a Supermicro chassis, right? That should have sufficient cooling; maybe ask Supermicro whether your temps are in the expected range?

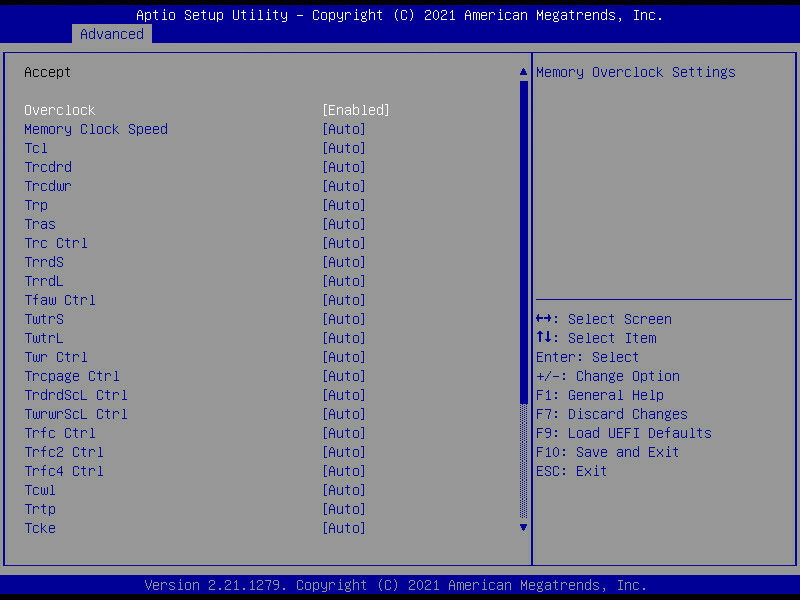

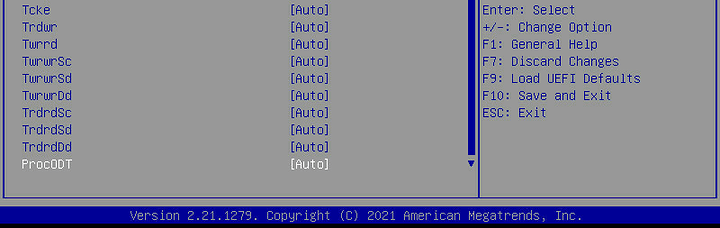

Memory timings on the ROMED8U-2T

I finally got around to fiddling with the memory speed settings on my ASRock Rack ROMED8U-2T. I did not try any literal overclock, as the default 3200MT/s are matched to the IF of my 7443p, and I don’t foresee myself wanting to run the sticks at higher clocks in any realistic scenario (unless convinced otherwise). But I did play around with timings, mostly to find out whether it would work at all. I’m a total noob on memory tweaking, so I tried only some basic stuff.

Caveat - no way to verify running timings

First, my ROMED8U-2T has a problem that I didn’t see on my H12SSL: it seems impossible to read the SPD info from the memory modules. I could not get it with decode-dimms in Linux, nor with MemTest86; the latter only reports the numbers as missing.

Therefore the only way for me to verify a change of clocks is to run MLC and look for a performance difference.

Anyway, I have essentially the same sticks as @s-m-e , M393A2K43DB3-CWE, 16 GB 3200MT/s dual rank. My recollection from the other board, where I could read the SPD, is that they run at CL22-22-22-?? where ?? might have been 52 (did not manage to google it).

Edit: Hwinfo64 in Windows could read the timings despite SPD information being unavailable: they are reported as 24-22-22-52.

Test procedure

Here are my previous screenshots of the memory timing options:

I first lowered the CAS latency (Tcl) in steps of two, down to CL16. At that point I got a message at POST that two of the DIMMs did not pass the self-test. That means 6 of them did, suggesting CL16 is not impossible with the right set of DIMMs.

Then I went up to CL18, and started to change the other primary timings. I settled for 18-18-18-36, which I’m running now. Every change I made gave rise to a stable difference in MLC results, so I assume that changing the settings worked.
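For a sense of what those CL steps mean in absolute time, here is a small sketch that converts CAS latency from clock cycles to nanoseconds. It relies only on the standard DDR relationship (two transfers per clock, so the clock period in ns is 2000 divided by the data rate in MT/s); the function name is my own, and the result covers the CAS portion only, not the full memory access latency.

```python
def cas_latency_ns(cl_cycles: int, data_rate_mts: float) -> float:
    """CAS latency in nanoseconds for a DDR module.

    DDR transfers data twice per clock, so the memory clock is half the
    data rate and one clock period lasts 2000 / data_rate_mts nanoseconds.
    """
    return cl_cycles * 2000.0 / data_rate_mts


# CL values mentioned in this thread, all at 3200 MT/s.
for cl in (24, 22, 18, 16):
    print(f"CL{cl} @ 3200 MT/s = {cas_latency_ns(cl, 3200):.2f} ns")
```

Going from CL24 to CL18 at 3200 MT/s therefore shaves 3.75 ns off the CAS delay alone.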

Stability

Passed:

- 4 rounds of MemTest86 (freeware version)

- One full round of MemTest86+ (FOSS)

- About 1hr of mprime (prime95), mixed FFT sizes

- No ECC errors reported after 2 days

Performance

MLC results only.

Default (3200MT/s, 24-22-22-52)

Intel(R) Memory Latency Checker - v3.9

Measuring idle latencies (in ns)...

Numa node

Numa node 0

0 94.8

Measuring Peak Injection Memory Bandwidths for the system

Bandwidths are in MB/sec (1 MB/sec = 1,000,000 Bytes/sec)

Using all the threads from each core if Hyper-threading is enabled

Using traffic with the following read-write ratios

ALL Reads : 144877.1

3:1 Reads-Writes : 134949.7

2:1 Reads-Writes : 136703.5

1:1 Reads-Writes : 137479.7

Stream-triad like: 140258.9

Measuring Memory Bandwidths between nodes within system

Bandwidths are in MB/sec (1 MB/sec = 1,000,000 Bytes/sec)

Using all the threads from each core if Hyper-threading is enabled

Using Read-only traffic type

Numa node

Numa node 0

0 144865.2

Measuring Loaded Latencies for the system

Using all the threads from each core if Hyper-threading is enabled

Using Read-only traffic type

Inject Latency Bandwidth

Delay (ns) MB/sec

==========================

00000 555.29 144735.1

00002 552.16 144774.0

00008 551.47 145408.6

00015 545.08 145517.4

00050 504.37 145663.3

00100 387.87 146326.6

00200 133.94 100345.0

00300 124.09 69892.2

00400 119.85 53663.7

00500 117.53 43612.7

00700 115.41 31760.8

01000 113.95 22645.1

01300 112.08 17668.3

01700 106.92 13725.5

02500 106.73 9578.5

03500 105.94 7037.3

05000 105.78 5121.1

09000 105.23 3125.4

20000 104.99 1745.6

Measuring cache-to-cache transfer latency (in ns)...

Local Socket L2->L2 HIT latency 22.6

Local Socket L2->L2 HITM latency 23.7

Tweaked (3200MT/s, 18-18-18-34)

Intel(R) Memory Latency Checker - v3.9

Measuring idle latencies (in ns)...

Numa node

Numa node 0

0 89.9

Measuring Peak Injection Memory Bandwidths for the system

Bandwidths are in MB/sec (1 MB/sec = 1,000,000 Bytes/sec)

Using all the threads from each core if Hyper-threading is enabled

Using traffic with the following read-write ratios

ALL Reads : 147305.1

3:1 Reads-Writes : 138777.5

2:1 Reads-Writes : 140462.3

1:1 Reads-Writes : 141285.1

Stream-triad like: 143622.2

Measuring Memory Bandwidths between nodes within system

Bandwidths are in MB/sec (1 MB/sec = 1,000,000 Bytes/sec)

Using all the threads from each core if Hyper-threading is enabled

Using Read-only traffic type

Numa node

Numa node 0

0 147259.7

Measuring Loaded Latencies for the system

Using all the threads from each core if Hyper-threading is enabled

Using Read-only traffic type

Inject Latency Bandwidth

Delay (ns) MB/sec

==========================

00000 523.11 147208.4

00002 521.15 147108.9

00008 532.44 146583.0

00015 530.16 146652.3

00050 492.74 147099.2

00100 347.49 149592.6

00200 128.43 100264.8

00300 118.34 69817.3

00400 114.41 53619.9

00500 112.13 43582.8

00700 110.17 31723.3

01000 108.72 22626.7

01300 107.31 17668.1

01700 101.82 13739.7

02500 102.47 9599.2

03500 101.95 7059.3

05000 101.69 5144.6

09000 103.32 3134.0

20000 101.01 1768.0

Measuring cache-to-cache transfer latency (in ns)...

Local Socket L2->L2 HIT latency 22.7

Local Socket L2->L2 HITM latency 23.8

About a 5% decrease in latency.
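For anyone who wants to redo the comparison, this is the arithmetic behind that figure as a short sketch. The numbers are copied from the two MLC runs above; the dictionary keys and the helper name are just labels I made up.

```python
# Values copied from the two MLC runs in this post.
default = {
    "idle_latency_ns": 94.8,
    "all_reads_mb_s": 144877.1,
    "stream_triad_mb_s": 140258.9,
}
tweaked = {
    "idle_latency_ns": 89.9,
    "all_reads_mb_s": 147305.1,
    "stream_triad_mb_s": 143622.2,
}


def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent."""
    return (after - before) / before * 100.0


changes = {name: percent_change(default[name], tweaked[name]) for name in default}
for name, pct in changes.items():
    print(f"{name}: {pct:+.1f}%")
```

Idle latency drops by a bit over 5%, while peak bandwidth rises by roughly 2%.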

Conclusions

It’s possible to lower the timings on ASRock ROMED8U-2T without the system complaining just for the sake of it. What I haven’t tried are any subtimings, as my understanding of memory timing tweaking is quite shallow. But I’m happy for suggestions!

I don’t have concrete workloads which I know of where these settings would make a difference. It’s fun to optimize for the OCD of it, and I would probably run lower-than-spec timings if I keep this board for my main work machine, “because I can”. However there are still some aspects of it that are less optimal (e.g. the lack of a VRM temp sensor) and might lead me to put it in my server instead.

Btw:

One thing I didn’t check is whether increasing the RAM frequency would also potentially increase FCLK. Not too hopeful, but it’s something to try.

If I were to check this, how could I verify the resulting FCLK on Linux?

I’ve never bothered trying to verify overclocking in Linux, because doing so would have me fall down a fractal rabbit hole and end up spending days researching extra shit.

I just want to get my overclocking done and move on, so what I do is just install Windows on an old SATA drive and boot that while testing. Literally all my testing tools are for Windows anyway.

Ryzen Master should show it. HWiNFO should also show FCLK, probably. CPU-Z may also report it as northbridge frequency.

Ok, that was the step I was hoping I could skip.

I might actually do that. I have an old 120 GB SSD lying around, might as well install Windows and some test tools on it, and then keep it around for occasions like this.

sudo lshw -C memory

*-bank:3

description: DIMM DDR4 Synchronous Unbuffered (Unregistered) 3600 MHz (0.3 ns)

product: F4-3200C14-8GTZ

vendor: G Skill Intl

physical id: 3

serial: 00000000

slot: DIMM_B2

size: 8GiB

width: 64 bits

clock: 3600MHz (0.3ns)

Just divide the reported clock by 2 and that is the FCLK.

Isn’t that just showing the frequency of the memory module? The FCLK (infinity fabric clock) is usually in sync, but it can be uncoupled and be different, which is what we want to check for.
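To make the distinction concrete, here is a minimal sketch of the divide-by-two step applied to the lshw output above. It assumes the "clock" value lshw prints is the module data rate in MT/s (lshw labels it MHz); the function names and the trimmed sample text are mine. The result is the memory clock (MEMCLK), which equals FCLK only when the fabric runs coupled 1:1, and lshw gives no indication of that.

```python
import re

# Trimmed copy of the lshw output quoted above.
LSHW_SNIPPET = """\
slot: DIMM_B2
size: 8GiB
width: 64 bits
clock: 3600MHz (0.3ns)
"""


def reported_data_rate(lshw_text: str):
    """Extract the 'clock' figure from lshw memory output, or None if absent."""
    match = re.search(r"clock:\s*(\d+)\s*MHz", lshw_text)
    return int(match.group(1)) if match else None


def memclk_mhz(data_rate_mts: float) -> float:
    """DDR memory clock: half the data rate, since DDR transfers twice per clock."""
    return data_rate_mts / 2


rate = reported_data_rate(LSHW_SNIPPET)
print(f"data rate: {rate} MT/s, MEMCLK: {memclk_mhz(rate):.0f} MHz")
print("FCLK matches MEMCLK only if the fabric is coupled 1:1; lshw cannot confirm that.")
```

So this only tells you what FCLK would be in coupled mode; the actual fabric clock still has to be read from a tool that reports it directly.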