That's true, GlobalFoundries' 14nm is good but still has a way to go. Even so, their 14nm will be a good canvas to start with and build on. We've seen this happen with Polaris, which would only hit 1266 MHz, 1300 MHz at best, and maybe 1400 MHz under water with an edited BIOS (all easily pulling 200+ W). Eventually the good silicon started popping up, with a TDP that could pass for a laptop GPU, and even XFX got wind of it for their desktop counterpart, which barely reaches 90 W at 1266 MHz and easily OCs to 1400+ MHz at only 133 W, all on air. We could easily see the same thing happen to Ryzen, given that both are manufactured on GlobalFoundries' process.

Der 8auer got that and that was for how long?

The top Cinebench scores are at much lower clock speeds.

Well, the thing is that even 100 MHz gives a significant bump in performance.

So 3.9 GHz on an Asus board will be noticeably slower than 4.1 GHz on an ASRock board (roughly 5% more clock). But I doubt we will even see 4.4 GHz...

I don't get the argument that Kaby Lake will somehow give you an edge for gaming. It's a non-argument. If you want to game at 1920x1080 on a quad core, just buy a quad core and game at 1920x1080.

The justifications people seem to be coming up with are just plain crazy.

For most games you can get a Pentium and do just fine...

Finally AMD comes out with a CPU that can compete with Intel, but it still gets not-so-great reviews because it's not on par in games with the Intel CPUs that have been the only choice for gamers for 5 years.

Also, these CPUs just came out and microcode updates are in the pipeline; the internet should chill out for a sec.

The 5960X is almost as weak as an 1800X when compared against the high-clocked i7 quad cores, but nobody started a shitstorm online over it. That's because a $1000 CPU flies under the radar for 90% of PC users.

I can see pointing out the memory speed issues and the relatively low overclocking margin on water/air, but making a fuss over the fact that the CPU puts out, at most, 10 fps less than the Intel CPUs at frame rates well above 100 is a pure rush of shit to the brain, in my opinion.

Also, who would be so stupid as to buy an 8-core CPU just for gaming? In all the applications this processor is made for, it gives every Intel CPU a run for its money.

Reviewers should take the 1800X and play a game at 1080p while recording, transcoding the recorded footage and streaming at the same time, for example. That's where a CPU with 8 cores and 16 threads truly shines.

I'm not defending AMD, I'm not a fanboy. I'm just saying reviewers and the internet are going a bit nuts over a slight difference in gaming performance on a good CPU.

Ah yes, that ComputerBase test. It's kinda funny that Jim (AdoredTV) bases his whole point on that outlier of a ComputerBase test with average FPS numbers. Where are the frame times?

Look at Tech Report's review of the Ryzen 1800X and 1700X; they have an FX-8370 in there. It looks bad compared to Ryzen and the Intel chips. The 2600K beats it without a problem.

Check out the frame times for the games:

Watch Dogs 2 is the only game in which the 8370 isn't behind even the slowest of the other CPUs in that test. The FX-9590 isn't much better, and Vulkan/DX12 can help but doesn't rearrange the performance chart by any means; the 8370 remains hopelessly last. These results are pretty much mirrored by others who do frame-time testing, like PCPer and GamersNexus.

That's probably why ComputerBase uses average FPS in their chart. That CB test is a clear outlier. While I think Jim has a point, I'm not so sure about the data he uses to prove it.

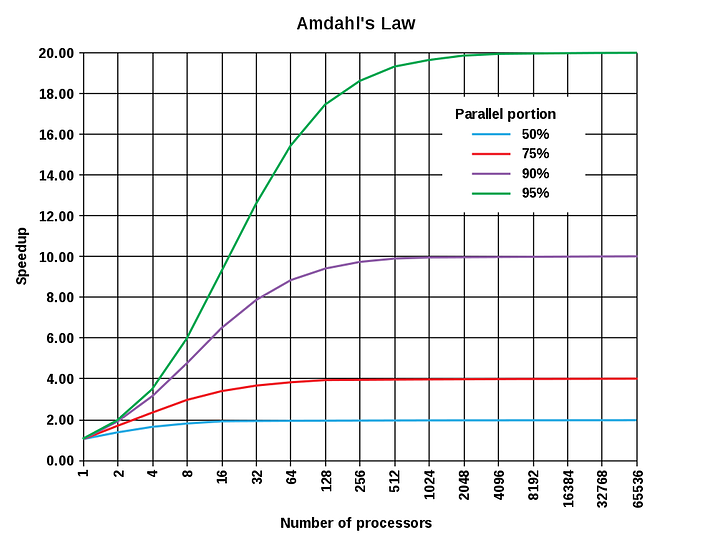

Second part, and this is hard to understand unless you're a bit into computer science: Amdahl's Law, which describes the possible speedup you can get by adding more cores to a task. Ryzen has worse IPC than Kaby Lake. Not by much, less than 10%, maybe around 7%? That's not really a problem; no more Bulldozer! But the high-clocked desktop Kaby Lake CPUs also have significantly higher clock speeds at stock, and Ryzen doesn't overclock much beyond 4-4.1 GHz.

So what, why does this matter for games? A typical gaming load is still very dependent on single-thread performance. Sure, today we are a lot better at taking advantage of more cores: put physics on one core, scripting/AI on another, etc. But for the main workload we are still very limited in how well we can use many threads. It is a hard problem. You can't magically make an essentially single-threaded, serial problem multithreaded. Beyond a certain number of threads you just don't get any meaningful scaling, or any scaling at all. That's why games run so well on a high-clocked (not even overclocked) Kaby Lake i7 or even an i5. Even the best-threaded games, like Ashes of the Singularity, show very little meaningful scaling beyond a fairly low number of threads.
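For reference, here is the usual textbook form of Amdahl's Law, where p is the fraction of the work that can run in parallel and n is the number of cores. The last term is my own simplification for comparing two CPUs: it assumes overall performance is just single-thread speed s times the Amdahl speedup, and ignores memory and every other subsystem.

$$
S(n) = \frac{1}{(1 - p) + \dfrac{p}{n}}, \qquad \lim_{n \to \infty} S(n) = \frac{1}{1 - p}, \qquad \text{Perf} \approx s \cdot S(n)
$$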

Say that AOTS has a p-value (the fraction of the work that can run in parallel) of close to 90% (probably too high, but what the heck). That means if a four-core CPU has a single-core performance advantage of 30% or more over a six-core, the six-core can't win. Ever. At 90% against an eight-core, the four-core needs roughly a 53% single-thread advantage. That looks a lot better for the eight-core; the problem is that not many games reach even an 80% p-value. At 80%, the four-core needs only about a 33% single-thread advantage over the eight-core, and only a 20% advantage to beat the six-core.
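If you want to check these numbers yourself, here is a minimal sketch. It assumes performance is simply single-thread speed times the Amdahl speedup (no memory, cache or DMI effects), and `required_single_thread_advantage` is just a name I made up for the break-even calculation.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Amdahl's Law: speedup on n cores when a fraction p of the work is parallel."""
    return 1.0 / ((1.0 - p) + p / n)


def required_single_thread_advantage(p: float, few_cores: int, many_cores: int) -> float:
    """Per-core speed advantage the CPU with fewer cores needs to tie the CPU with more cores.

    Assumes performance = single-thread speed * Amdahl speedup and nothing else.
    Returns a fraction, e.g. 0.3 means "30% faster per core".
    """
    return amdahl_speedup(p, many_cores) / amdahl_speedup(p, few_cores) - 1.0


if __name__ == "__main__":
    for p in (0.8, 0.9):
        for many in (6, 8):
            adv = required_single_thread_advantage(p, 4, many)
            print(f"p={p:.0%}: 4-core needs {adv:.1%} more per-core speed to tie a {many}-core")
```

This prints about 20% and 33% for the six- and eight-core at p = 80%, and about 30% and 53% at p = 90%.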

That's why pretty much every Intel i7 beats the older FX chips in AOTS. 8 cores don't matter when they are slow. And that is the theoretical maximum; in reality you usually get worse scaling because subsystems don't scale with cores: memory (why does X99 have four channels again?), DMI/SATA, etc. And most games scale pretty badly, maybe 80% at best.

And this is a Hard problem, meaning that with what we know today, Amdahl's Law can't be broken. If the four-core has 30% better single-thread performance than an eight-core, the p-value needs to be higher than roughly 77% for the eight-core to come out ahead. Otherwise the four-core will always win.
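The break-even p-value can be solved for directly. This is a sketch under the same simple model (performance = single-thread speed times Amdahl speedup); `break_even_parallel_fraction` is a hypothetical helper name, and it returns `None` when the few-core CPU wins even on perfectly parallel work.

```python
from typing import Optional


def amdahl_speedup(p: float, n: int) -> float:
    """Amdahl's Law: speedup on n cores when a fraction p of the work is parallel."""
    return 1.0 / ((1.0 - p) + p / n)


def break_even_parallel_fraction(advantage: float, few_cores: int, many_cores: int) -> Optional[float]:
    """Parallel fraction p at which the many-core CPU exactly ties the few-core CPU.

    The few-core CPU is (1 + advantage) times faster per core. Solves
    s * S_few(p) == S_many(p), using (1 - p) + p/n == 1 - p * (1 - 1/n).
    Above the returned p the many-core CPU wins; below it the few-core CPU wins.
    """
    s = 1.0 + advantage
    if s > many_cores / few_cores:
        return None  # even at p = 1 the extra cores can't make up the per-core gap
    a = 1.0 - 1.0 / few_cores
    b = 1.0 - 1.0 / many_cores
    return (s - 1.0) / (s * b - a)


if __name__ == "__main__":
    p = break_even_parallel_fraction(0.30, 4, 8)
    print(f"break-even p = {p:.3f}")
    print(amdahl_speedup(p, 8) / amdahl_speedup(p, 4))  # ratio should be about 1.3 at the tie
```

For a 30% per-core advantage, four cores versus eight, this gives p of about 0.774, which is where the "roughly 77%" figure comes from.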

But what about Cinebench, Blender, etc.? Ryzen does well there, tremendously so thanks to its fantastic SMT performance. Those kinds of problems are embarrassingly parallel; a typical gaming load is not. If you can make a typical gaming load embarrassingly parallel, you'll be a very rich man indeed.
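To make the difference concrete, here is a toy sketch. `shade_tile` and `simulate_frames` are hypothetical stand-ins, not real renderer or engine code: tiles of a render depend only on their own input, so they can be handed out to any number of workers, while each simulated frame needs the previous frame's result, so extra cores can't help with that chain. Note that Python threads won't actually speed up CPU-bound work because of the GIL (you'd use `ProcessPoolExecutor` for that); the point here is only the shape of the dependencies.

```python
from concurrent.futures import ThreadPoolExecutor


def shade_tile(tile_index: int) -> int:
    """Hypothetical render tile: depends only on its own index, not on other tiles."""
    start = tile_index * 1000
    return sum(i * i for i in range(start, start + 1000))


def render_all_tiles(n_tiles: int, workers: int) -> list:
    """Embarrassingly parallel: every tile can be computed independently, in any order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(shade_tile, range(n_tiles)))


def simulate_frames(n_frames: int) -> int:
    """Hypothetical game loop: each frame's state is computed from the previous frame's."""
    state = 1
    for _ in range(n_frames):
        state = (state * 31 + 7) % 1_000_003  # can't start this step until the last one is done
    return state


if __name__ == "__main__":
    tiles = render_all_tiles(64, workers=8)
    print(len(tiles), simulate_frames(10_000))
```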

Look at the red line there; the best-threaded games we have are about there. This is why a Kaby Lake Pentium can give you acceptable performance in a game most of the time. The purple line could be AOTS, if only we had 64- or 128-core CPUs running at really high clock speeds. One can dream, right? Make it happen AMD! :-)
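Here is a small sketch that prints speedup curves like the ones in the chart. I don't know the exact p-values behind the red and purple lines, so the 75% and 90% used below are assumptions picked for illustration.

```python
def amdahl_speedup(p: float, n: int) -> float:
    """Amdahl's Law: speedup on n cores when a fraction p of the work is parallel."""
    return 1.0 / ((1.0 - p) + p / n)


def speedup_ceiling(p: float) -> float:
    """Maximum possible speedup with infinitely many cores: 1 / (1 - p)."""
    return 1.0 / (1.0 - p)


if __name__ == "__main__":
    core_counts = (2, 4, 8, 16, 64, 128)
    for p in (0.75, 0.90):  # assumed p-values, not read off the chart
        row = ", ".join(f"{n}: {amdahl_speedup(p, n):.2f}x" for n in core_counts)
        print(f"p={p:.0%} -> {row} (limit {speedup_ceiling(p):.1f}x)")
```

At p = 75%, two cores already get 1.6x out of a 4x ceiling, and 128 cores only reach about 3.9x, which is why a dual-core Pentium holds up better than you might expect.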

I'm tired and have probably made mistakes, there are undoubtedly better CS people on this forum than me.

Kevin from Tech Showdown did a nice apples-to-apples comparison between an 1800X and a 6900K, both at the same clock speeds and with memory at the same speed.

Keep in mind that Ryzen is a new platform and still needs some improvements.

Massive fail. The thumbnail shows a 1700X.

Someone was dozing on the job. 💤💤💤💤💤

I saw that, but that doesn't matter for the numbers.

The 1800X is used for the tests.

I would assume it's from a combination of Windows and the Gigabyte board.

Unless other people have this problem with different boards.

I really wouldn't call it that. xD

Very interesting.

I think Jay has been backing off his AMD hate for the last month or so. AMD didn't invite him to the launch... Maybe he is being a little more open-minded :)

The latest video made a lot of sense.

Didn't I read that only the Windows 10 kernel will get Ryzen patches? Same with the latest Intel CPUs.

Turns out it's a clock that runs slower after waking up:

I know a guy who has an Asus Crosshair and has problems when resuming from sleep. Every sensor says the clock speed is significantly higher, and it seems to get worse after each sleep and resume: 5.9 GHz etc.
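One way a slow clock could make the sensors read high: monitoring tools commonly estimate frequency by counting CPU cycles over an interval timed by some other reference clock. That is my assumption about how these tools work, not something confirmed for this board. If the reference clock runs slow, the interval looks shorter than it really was, so cycles divided by time comes out too high. The numbers below (a real 4.0 GHz clock, a timer running at 90% or 68% speed) are hypothetical.

```python
def reported_clock_ghz(cycles_counted: float, reference_seconds: float) -> float:
    """Frequency estimate: cycles counted divided by elapsed time according to a reference clock."""
    return cycles_counted / reference_seconds / 1e9


if __name__ == "__main__":
    true_ghz = 4.0        # hypothetical real core clock
    real_seconds = 1.0    # real elapsed time
    cycles = true_ghz * 1e9 * real_seconds
    # Hypothetical: fraction of real time the reference timer actually advances after resume.
    for timer_rate in (1.0, 0.9, 0.68):
        measured_seconds = real_seconds * timer_rate
        print(f"timer at {timer_rate:.0%} speed -> reports {reported_clock_ghz(cycles, measured_seconds):.2f} GHz")
```

This reports 4.00, 4.44 and 5.88 GHz, so a timer that drifts further behind after every resume would fit readings that keep climbing.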

Even after setting power options to High Performance in the control panel?

BIOS updates: looking at most X370 board pages, new BIOS updates have been coming out almost every other day.

Is this maybe down to the external clock generator on the high end boards?