Are you still running the previous firmware (I don’t recall the version number off the top of my head)?

I’m using the second-newest firmware version P14.2 on the P411W-32P.

Since Broadcom displays three different (!) version numbers for the very same firmware file, I’ll always use the version from the file name of the original download package.

BTW: On their download site they list the version of firmware P14.3 as 4.1.3.1, whereas Broadcom’s G4xFLASH tool reads the installed firmware version as 0.1.3.1.

Could it be that some stupid typo in the firmware source file disrupts the proper firmware version naming scheme and maybe as another side effect causes unspecific bugs?

Firmware P14.3 still has no functionality at all: SSDs don’t show up during POST or in the OS. I came back to it and tested it with the “latest” drivers (the ones with the certificate issue in Device Manager), thinking that maybe the P411W-32P would finally work with the latest drivers from within the OS and that only boot support remained broken.

But at least I can boot with the P23 drivers on different firmware versions, whereas the P18 drivers caused a BSoD during boot with any firmware other than P14.3 (which is funny, since that firmware is completely broken).

Can you actually use the P23 drivers in Windows, or do you get the same certificate error message as I do?

Has anyone here tried the PCIe 4.0 SlimSAS redrivers and breakouts made by Christian Payne (C-Payne)? I stumbled on them last week but can’t justify the cost of testing them right now. https://c-payne.com/collections/slimline-pcie-adapters-host-adapters

On a separate note, does anyone know of an M.2 to PCIe 4.0 x4 adapter? I need to run a 3080 off an M.2 slot on my TRX40 motherboard, and shipping for the ADT-Link M.2 to PCIe 4.0 x16 risers takes a while.

If nothing else, I’ll probably test one of the mining M.2 to x4 cards, but I don’t have much hope for them at Gen4 speeds.

I’ve received this Delock 64106 M.2 Key M to 1 x OCuLink SFF-8612 converter but haven’t had time to try it out yet. I can let you know once I’ve tested it, though my test will be with a U.2 SSD.

UPDATE: A first test wasn’t successful. It didn’t even recognize the drive at Gen3 speeds.

Since I don’t know what specifically to test next, I will probably look at this dude’s products next. The big players have failed, so that would only be logical.

Hardware:

- Motherboard: GigaByte MZ72-HB0

- CPU: 2x EPYC 7443

- Drives: 4x Intel D5-P5316 U.2 PCIe 4.0

- PCI HBA: HighPoint SSD7580A (4 SlimSAS 8i ports)

- Cables: 2x HighPoint Slim SAS SFF-8654 to 2x SFF-8639 NVMe Cable for SSD7580

I had a bit of a journey (explained below), but ultimately I could achieve a bandwidth of 28.4 GB/s on a Linux md RAID0 array with a sequential read in fio. The key insight is that on Epyc (and Threadripper?) you may want to change the nodes per socket (NPS) setting in the BIOS.

fio Results:

# /opt/fio/bin/fio --bs=2m --rw=read --time_based --runtime=60 --direct=1 --iodepth=16 --size=100G --filename=/dev/md0 --numjobs=1 --ioengine=libaio --name=seqread

seqread: (g=0): rw=read, bs=(R) 2048KiB-2048KiB, (W) 2048KiB-2048KiB, (T) 2048KiB-2048KiB, ioengine=libaio, iodepth=16

fio-3.30

Starting 1 process

Jobs: 1 (f=1): [R(1)][100.0%][r=26.5GiB/s][r=13.6k IOPS][eta 00m:00s]

seqread: (groupid=0, jobs=1): err= 0: pid=10378: Wed Jul 27 09:30:41 2022

read: IOPS=13.5k, BW=26.4GiB/s (28.4GB/s)(1587GiB/60001msec)

slat (usec): min=32, max=578, avg=63.60, stdev=23.46

clat (usec): min=264, max=4962, avg=1117.58, stdev=79.42

lat (usec): min=328, max=5369, avg=1181.28, stdev=79.04

clat percentiles (usec):

| 1.00th=[ 963], 5.00th=[ 1057], 10.00th=[ 1074], 20.00th=[ 1090],

| 30.00th=[ 1090], 40.00th=[ 1106], 50.00th=[ 1123], 60.00th=[ 1123],

| 70.00th=[ 1123], 80.00th=[ 1139], 90.00th=[ 1139], 95.00th=[ 1172],

| 99.00th=[ 1434], 99.50th=[ 1598], 99.90th=[ 2024], 99.95th=[ 2114],

| 99.99th=[ 2343]

bw ( MiB/s): min=23616, max=27264, per=100.00%, avg=27101.38, stdev=407.26, samples=119

iops : min=11808, max=13632, avg=13550.69, stdev=203.63, samples=119

lat (usec) : 500=0.01%, 750=0.12%, 1000=1.73%

lat (msec) : 2=98.02%, 4=0.11%, 10=0.01%

cpu : usr=1.45%, sys=88.36%, ctx=338808, majf=0, minf=8212

IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=100.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.1%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=812351,0,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=16

Run status group 0 (all jobs):

READ: bw=26.4GiB/s (28.4GB/s), 26.4GiB/s-26.4GiB/s (28.4GB/s-28.4GB/s), io=1587GiB (1704GB), run=60001-60001msec

Disk stats (read/write):

md0: ios=13175835/0, merge=0/0, ticks=8504656/0, in_queue=8504656, util=99.93%, aggrios=3249404/0, aggrmerge=50764/0, aggrticks=2083607/0, aggrin_queue=2083607, aggrutil=99.85%

nvme3n1: ios=3249404/0, merge=203059/0, ticks=2283093/0, in_queue=2283093, util=99.85%

nvme0n1: ios=3249404/0, merge=0/0, ticks=2059957/0, in_queue=2059957, util=99.85%

nvme1n1: ios=3249404/0, merge=0/0, ticks=1889297/0, in_queue=1889297, util=99.85%

nvme2n1: ios=3249404/0, merge=0/0, ticks=2102083/0, in_queue=2102083, util=99.85%
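For anyone wanting to repeat this run and grab the headline number without reading the text report, fio can print JSON via `--output-format=json`. The sketch below is not the poster's tooling: `build_seqread_cmd`, `read_bw_gib` and `run_seqread` are hypothetical helpers, the defaults simply mirror the command above, and the parser assumes fio's JSON reports per-job read bandwidth in KiB/s under `jobs[].read.bw`.

```python
import json
import subprocess
from typing import List, Optional

def build_seqread_cmd(fio: str = "/opt/fio/bin/fio", device: str = "/dev/md0",
                      iodepth: int = 16, numjobs: int = 1,
                      cpus_allowed: Optional[str] = None) -> List[str]:
    """Rebuild the sequential-read invocation from the post (hypothetical helper)."""
    cmd = [
        fio,
        "--name=seqread",
        "--rw=read",
        "--bs=2m",
        "--direct=1",
        "--ioengine=libaio",
        f"--iodepth={iodepth}",
        f"--numjobs={numjobs}",
        "--size=100G",
        "--time_based",
        "--runtime=60",
        f"--filename={device}",
        "--output-format=json",  # machine-readable report instead of the text above
    ]
    if cpus_allowed is not None:
        cmd.append(f"--cpus_allowed={cpus_allowed}")  # e.g. "0-23" for Socket 0
    return cmd

def read_bw_gib(fio_json: str) -> float:
    """Sum read bandwidth over all reported jobs; assumes 'bw' is in KiB/s."""
    report = json.loads(fio_json)
    total_kib = sum(job["read"]["bw"] for job in report["jobs"])
    return total_kib / (1024 * 1024)

def run_seqread(**kwargs) -> float:
    """Run fio and return read bandwidth in GiB/s (needs fio and device access)."""
    result = subprocess.run(build_seqread_cmd(**kwargs),
                            capture_output=True, text=True, check=True)
    return read_bw_gib(result.stdout)
```

Something like `run_seqread(cpus_allowed="0-23")` would correspond to the Socket 0 pinning run further down. Summing over jobs keeps the parser correct when `numjobs` is raised without `--group_reporting`.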

In initial testing of this setup I was only able to achieve 7 to 9 GB/s depending on setup/options. Each individual drive could achieve 6.9 GB/s on its own, so the array was hitting a bottleneck somewhere.

What was also annoying was that I had asymmetric direct IO speeds: 7 GB/s from one CPU socket and 1 GB/s from the other. I expected some dip, but not this much. The OS was running on a Gen4 x4 M.2 drive and had similar access speeds from either socket.

I contacted HighPoint support and they were helpful. There was some back and forth along the lines of “is this plugged into an x16 slot?”, “are you pinning the process to a given NUMA node?”, “are our drivers installed?” etc. It turns out the magic option was to set Nodes Per Socket (NPS) to NPS4 in the BIOS. This means I have a NUMA node per core complex (4 per socket, 8 total). I had found some info on setting NPS in Epyc tuning guides, but no mention of its impact on PCIe bandwidth. We have another machine with 2x Epyc 7302 (PCIe 3.0) and a HighPoint SSD7540 with 7x M.2 drives. Previously I could only get 6 GB/s, which I presumed was some bottleneck/inefficiency from using an odd number of drives. After setting NPS4 in the BIOS on that machine, it bumped up to 13.3 GB/s.
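One way to confirm on Linux that the NPS change actually took effect is to count the NUMA nodes the kernel reports in `/sys/devices/system/node/online`, which uses the same range syntax as CPU lists (e.g. `0-7`). A minimal sketch; `parse_cpulist` and `numa_node_count` are hypothetical helper names, and the expectation of 8 nodes assumes a dual-socket board in NPS4 as described above (NPS1 would typically show 2).

```python
from pathlib import Path
from typing import List

def parse_cpulist(text: str) -> List[int]:
    """Expand a kernel range list such as '0-7' or '0-5,12-17' into individual IDs."""
    ids: List[int] = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            ids.extend(range(int(start), int(end) + 1))
        else:
            ids.append(int(part))
    return ids

def numa_node_count(sysfs_root: str = "/sys") -> int:
    """Number of NUMA nodes the kernel currently reports as online."""
    online = Path(sysfs_root, "devices", "system", "node", "online").read_text()
    return len(parse_cpulist(online))
```

Calling `numa_node_count()` after a reboot into the new BIOS setting should return 8 on the dual EPYC 7443 board if NPS4 is active; `lscpu` reports the same count in its "NUMA node(s)" line.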

If anyone knows why PCIe gets bottlenecked when NPS1 (the default) is used, I would be curious. My guess is that to make the multiple core complexes appear as a single node per socket, the Infinity Fabric uses some lanes, but this is pure speculation. There is an old post by Wendell on “Fixing Slow NVMe Raid Performance on Epyc”; I didn’t want to bump it, but I wonder whether the nodes-per-socket setting would impact that as well.

Back to the Epyc 7443 system: here are the bandwidth numbers when pinning to different CPUs (fio --cpus_allowed=<ids>).

The drives are connected to NUMA node 1:

$ cat /sys/block/nvme[0-3]n1/device/numa_node

1

1

1

1

Curiously, I get the best results if I allow any CPU on Socket 0 rather than just the node the drives are connected to.

Cores: 0-23 (Socket 0, NUMA nodes 0,1,2,3)

READ: bw=26.3GiB/s (28.3GB/s), 26.3GiB/s-26.3GiB/s (28.3GB/s-28.3GB/s), io=1580GiB (1696GB), run=60001-60001msec

Cores: 24-47 (Socket 1, NUMA nodes 4,5,6,7)

READ: bw=21.4GiB/s (23.0GB/s), 21.4GiB/s-21.4GiB/s (23.0GB/s-23.0GB/s), io=1283GiB (1377GB), run=60001-60001msec

Cores: 6-11 (Socket 0, NUMA node 1)

READ: bw=24.8GiB/s (26.6GB/s), 24.8GiB/s-24.8GiB/s (26.6GB/s-26.6GB/s), io=1486GiB (1596GB), run=60001-60001msec
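The affinity lookup above can be scripted so the `--cpus_allowed` value comes straight from sysfs. This is a sketch under assumptions: the helper names are hypothetical, it reads the same `/sys/block/<dev>/device/numa_node` files shown earlier plus `/sys/devices/system/node/node<N>/cpulist`, and it only picks a single node. In the results above the whole of Socket 0 (`0-23`) beat node 1 alone, so treat its output as a starting point rather than the best setting.

```python
import re
from collections import Counter
from pathlib import Path
from typing import Dict

# Namespace devices such as nvme0n1; skips hidden multipath paths like nvme0c0n1.
NVME_NS = re.compile(r"nvme\d+n\d+")

def nvme_numa_nodes(sysfs_root: str = "/sys") -> Dict[str, int]:
    """Map each NVMe namespace to the NUMA node its controller reports."""
    block = Path(sysfs_root, "block")
    if not block.is_dir():
        return {}
    nodes: Dict[str, int] = {}
    for dev in sorted(block.iterdir()):
        numa_file = dev / "device" / "numa_node"
        if NVME_NS.fullmatch(dev.name) and numa_file.exists():
            nodes[dev.name] = int(numa_file.read_text().strip())
    return nodes

def pick_node(nodes: Dict[str, int]) -> int:
    """Choose the node most drives hang off; -1 means no affinity was reported."""
    if not nodes:
        raise ValueError("no NVMe namespaces found")
    node, _count = Counter(nodes.values()).most_common(1)[0]
    if node < 0:
        raise ValueError("drives report no NUMA affinity (numa_node = -1)")
    return node

def cpus_for_node(node: int, sysfs_root: str = "/sys") -> str:
    """Kernel cpulist for a node, e.g. '6-11'; fio's --cpus_allowed accepts this format."""
    path = Path(sysfs_root, "devices", "system", "node", f"node{node}", "cpulist")
    return path.read_text().strip()
```

On the system above, `cpus_for_node(pick_node(nvme_numa_nodes()))` should yield the node 1 CPU list (`6-11`), matching the third measurement.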

We went with the HighPoint HBA because we were originally going to use a Supermicro H12. The Gigabyte MZ72-HB0 motherboard can actually take 5x SlimSAS 4i directly, although 2 of them are on Socket 0 and 3 on Socket 1. The motherboard manual is quite sparse on details, but I can confirm all the SlimSAS ports are 4i, although only 2 are labelled as such. It is quite hard to find information on SFF connector specs/speeds, but my understanding is that MiniSAS is 12 Gb/s per lane with 4 lanes (so 6 GB/s), while SlimSAS is 24 Gb/s per lane (so 12 GB/s). My understanding is therefore that you should NOT use MiniSAS bifurcation for Gen4 SSDs if you want peak performance.

I found this forum thread before I set the nodes per socket in the BIOS and decided to order and try some SlimSAS 4i to U.2 cables from Amazon (YIWENTEC SFF 8654 4i Slimline SAS to SFF 8639). These were blue-ish cables, and given previous mentions of poor quality (albeit with different connector types, I think) I didn’t have much hope. However, testing these cables I saw the expected speeds and no PCIe bus errors. YMMV if connecting via a bifurcation card; DeLock seems to be the only option there, but hopefully there will be more options in future, particularly with Gen5 incoming.

Edit: You need to change the BIOS settings for 3 of the SlimSAS 4i ports from SATA to NVMe.
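The arithmetic behind that comparison, as a rough sketch: the SAS per-lane figures are the ones quoted above, and the PCIe figure is the raw Gen4 x4 rate a U.2 drive uses (16 GT/s per lane with 128b/130b encoding), ignoring packet and protocol overhead, so real-world throughput will be somewhat lower. The function names are illustrative only.

```python
def sas_link_gbytes(gbit_per_lane: float, lanes: int = 4) -> float:
    """Connector rating as described in the post: Gb/s per lane x lanes / 8 bits."""
    return gbit_per_lane * lanes / 8

def pcie_link_gbytes(gt_per_s: float, lanes: int) -> float:
    """Raw PCIe Gen3+ payload rate in GB/s (128b/130b encoding), no protocol overhead."""
    return gt_per_s * lanes * (128 / 130) / 8

mini_sas = sas_link_gbytes(12)     # 6.0 GB/s for a 4-lane MiniSAS link
slim_sas = sas_link_gbytes(24)     # 12.0 GB/s for SlimSAS 4i
gen4_x4 = pcie_link_gbytes(16, 4)  # about 7.88 GB/s for a Gen4 x4 U.2 drive
gen3_x4 = pcie_link_gbytes(8, 4)   # about 3.94 GB/s for Gen3 x4
```

By this reckoning a 4-lane MiniSAS rating sits below what a Gen4 x4 link can carry, while SlimSAS 4i leaves headroom; at Gen3 both ratings are comfortably above the link rate.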

Just an itsy bitsy teenie tiny update, since there are neither new, fixed drivers nor new firmware for the BSoDcom P411W-32P.

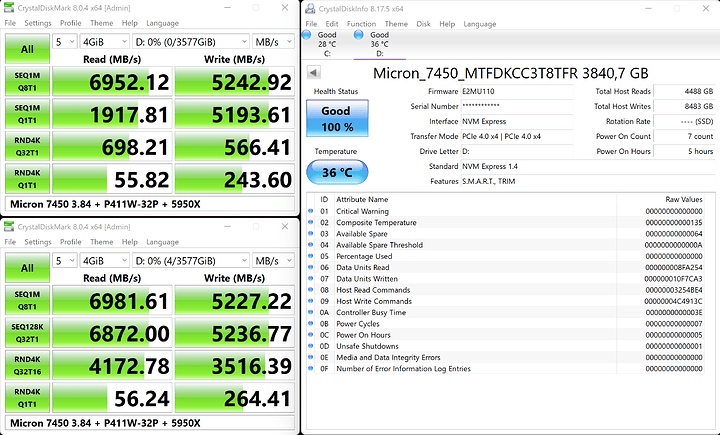

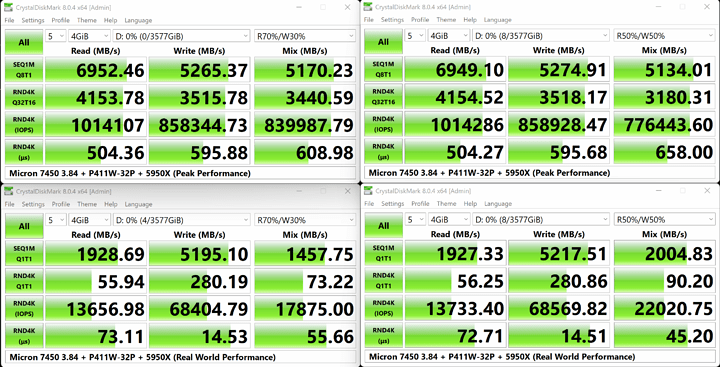

Since Optane has been killed, I’ve been looking at other NVMe storage that might be a nice SLOG/L2ARC etc. volume but isn’t extremely expensive.

The Micron 7450 at 3.84 TB or larger (the larger-capacity models are faster) looks good and doesn’t break the bank compared to, for example, Optane P5800X models. If you only use 2 TB of the 3.84 TB as the maximum capacity, the NAND should last much longer than the warranted TBW.

Have you tried Serialcables dot com? They make some breakout hardware that I’ve used with great success in the past. We used it for PCIe 4.0 test and dev.

Just to be sure: Did you do your tests on configurations where PCIe Advanced Error Reporting was enabled and also confirmed working for the PCIe lanes the cables were using?

That’s the hurdle I can’t clear with completely passive adapters yet. PCIe Gen3 works absolutely error-free, Gen4 is meh, and active adapters like the Broadcom Tri-Mode or PCIe switch NVMe HBAs don’t show anything about PCIe bus errors with connected SSDs.

Thanks for the tip. I haven’t bought from serialcables.com yet, but I’ll make a note for the next round.

Needed a bit of a break from cable/adapter testing, since every avenue with PCIe Gen4 led to some kind of disappointment.

Does anyone have links to any 2U PCIe 4.0 risers?

I am looking at building some 2U rackmount workstations but I am struggling with this.
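For the Linux side of the AER question, kernels with AER support expose per-device counters in `/sys/bus/pci/devices/<address>/aer_dev_correctable`, `aer_dev_nonfatal` and `aer_dev_fatal`. Below is a sketch for snapshotting them before and after a load test. The helper names and any PCI address you pass are placeholders, and the parser only assumes each line ends in a count, since the counter names have changed between kernel versions. If none of the files exist, the kernel is likely not collecting AER statistics for that function, which is worth knowing before trusting an error-free result; checking the upstream root port as well as the SSD is also sensible, since link errors can be logged on either side.

```python
from pathlib import Path
from typing import Dict, Optional

AER_FILES = ("aer_dev_correctable", "aer_dev_nonfatal", "aer_dev_fatal")
Snapshot = Dict[str, Dict[str, int]]

def parse_aer_counters(text: str) -> Dict[str, int]:
    """Parse 'name count' lines; names may contain spaces, so split on the last one."""
    counters: Dict[str, int] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        name, count = line.rsplit(maxsplit=1)
        counters[name] = int(count)
    return counters

def aer_report(pci_address: str, sysfs_root: str = "/sys") -> Optional[Snapshot]:
    """Read every AER counter file for one PCI function, or None if none are exposed."""
    dev = Path(sysfs_root, "bus", "pci", "devices", pci_address)
    present = [name for name in AER_FILES if (dev / name).exists()]
    if not present:
        return None  # no AER statistics for this function (or a wrong address)
    return {name: parse_aer_counters((dev / name).read_text()) for name in present}

def increased(before: Snapshot, after: Snapshot) -> Snapshot:
    """Counters that went up between two snapshots, as {file: {counter: delta}}."""
    deltas: Snapshot = {}
    for name, counters in after.items():
        for key, value in counters.items():
            delta = value - before.get(name, {}).get(key, 0)
            if delta > 0:
                deltas.setdefault(name, {})[key] = delta
    return deltas
```

Typical use would be taking `aer_report()` for the SSD's address before a fio run, again afterwards, and passing both to `increased()`; an empty result over a long Gen4 run is a better sign than a merely silent dmesg.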

I currently have one of these:

ARC2-733-2X16C7 2U Dual Slot PCI-E X16 Flexible 7cm Ribbon Riser Card (eBay)

It reports the GPU (3090) as PCIe 4.0 in GPU-Z, and even when I run the render test in GPU-Z it continues to show Gen 4.0, but when I run anything intensive, such as the AIDA64 GPU stress test or other GPU tests such as SPECviewperf, the system reboots. If I set the slot to Gen3 rather than Auto, it works fine.

I have also found a Supermicro part:

Supermicro RSC-D2-66G4 riser card (Serverparts.pl)

but it is pretty expensive and hard to find, and the second slot can’t be accessed, so it’s a bit overkill.

Any suggestions would be extremely helpful. If I could find one like the first one, with a small flexible riser to the top PCIe slot on the rear of the board and the PCB connected to the third slot down, that would be perfect.

BTW, I have tried using the flexible risers that are available for some chassis, but they are oriented in the opposite direction and cannot be used in 2U.

I haven’t messed around with the P411W-32P in months because it is in production.

I’d love to see patches, but unfortunately, it doesn’t appear to be on Broadcom’s priority list.

I’m tempted to buy a second card and an enterprise Seagate NVMe SSD for testing, but that’s for later.

I’ve started to see PCIe 4.0 riser cables from reputable companies on Amazon. I have not tested them, nor do I have the capacity to at the moment.

Thermaltake TT Premium PCI-E 4.0 High Speed Flexible Extender Riser Cable 300mm AC-058-CO1OTN-C1 https://a.co/d/fswQ4fJ

idk about that but yeah I’m seeing more and more

we’re barely getting 4.0, I wonder if we’ll ever see flex 5.0

The issue I have with flexible risers is that they are often made for vertical mounting of the GPU in a case and are handed, so if I fitted one in a 2U system it wouldn’t let me fit the lid. Those Thermaltake ones look like they might fit, but I am not sure I can secure the socket (GPU end) to brackets that would let me mount the GPU horizontally in 2U. And if I could, I would be concerned about it being damaged in transit. A PCB-type riser would be nice and secure.

I recently got one of these for a 2U box I have (because I can’t currently get hold of a proper PCIe slot adapter for that slot, and I’m not very impressed with ASUS’s customer service): LINKUP - Ultra PCIe 4.0 X16 Riser Cable, Left Angle Socket {15cm}

Basically the “top” of the GPU is pointing away from the midpoint of the board, and the back of the card is facing up, so I needed a cable with the right orientation. The cable bends back over itself.

I’m busy testing other bothersome stuff today, but I don’t recall seeing any issues in dmesg while it was briefly installed the other day. I can confirm whether it’s truly good in a few days if you’re interested.

Great, thanks that looks like it will work…

Has anyone got any feedback regarding the P411W-32P?

I’ve got a similar setup, but I’m unable to get Windows 11 22H2 to install, let alone boot.

The issue is with the default itsas35i driver, which constantly BSODs.

I’ve tried multiple firmwares and get the same result: drives not detected. They are Micron 9300 MAXs and Intel P3700s.

Hi @Kleog and welcome to the community!

Unfortunately Broadcom’s firmware and drivers (at least for Windows) are pretty broken, reliably causing BSoDs. I have been seeing different but similar patterns with the Broadcom HBA 9400, the 9500 and most recently the P411W-32P.

Regarding the P411W-32P:

- The most recent firmware is completely broken (SSDs don’t show up at all)

- The most recent drivers are broken (invalid signatures)

I was able to experiment with the P411W-32P using the second-to-latest firmware and the ancient generic drivers that come with Windows.

Can you list your CPU/Motherboard (BIOS version)/Memory configuration?

I’ve already notified Broadcom’s support about these issues but they just say everything’s fine in their “lab” and ignore the issues.

My hope is that if more specific examples of these failures are collected independently, at some point they might actually be forced to improve things.

Broadcom sucks pretty hard, have already wasted many, many hours with them.

My system is an X299 Sage on BIOS 3701. It does have PEX switches for the PCIe lane allocation, and all my slots are populated.

I’m wondering whether it’s because the slot is running at x8 and/or because I’m using the side ports of the P411W-32P rather than the top ones; several things to try, I guess.

I can get Windows 11 22H2 to boot with the latest firmware, but as you said, no drives are detected. Pre-22H2 builds also seem to work with the old firmware.

BSODs happen even with no cables attached. The issue is not memory related; there are no issues with the card removed.

Do you think I can get away with using the “Diamon PCI-E SFF-8643 Expansion Card” with Linux? I know it explicitly says Windows only, but I don’t see why it wouldn’t work.

It looks like it won’t even hit Gen3 speeds, though, which is all I need.