—BACK STORY—

Hi everyone, I’m a sort of “jack of all trades” sysadmin at a large university in the southern US. I work in a medium-sized scientific research department that operates much like a small business. I’ve only been in this role for about a year, since my boss retired, and I’m the only IT person for the department. My boss was fond of Apple, and I am too. But once I became responsible for upwards of $40 million worth of research data, 35TB+ in size, I was uncomfortable keeping it on what he had set up roughly 8 years ago: a hodgepodge of Mac minis and an Xserve, along with about a dozen OWC hardware RAID arrays connected over eSATA/USB/FireWire. Well… at least it was in a 3-2-1 backup structure!

Funding is tight and I have to argue for every IT expense. Purchases from places like eBay are completely out of the question for the department, so I personally purchased and donated approximately $2,500 worth of Level1Techs-inspired refurbished enterprise servers from eBay and got to work replacing the old infrastructure. The drives were purchased by my department.

My main goal was to get the data onto ZFS and OFF the crappy OWC hardware RAID arrays. Those things were AWFUL. As @wendell mentioned in one video, you have to know where the bodies are buried, and with the OWC arrays it was obvious the bodies were buried in hardware RAID. The arrays would disconnect randomly, had no alert system, and offered no way to troubleshoot. My boss had many of them in RAID0, and, as I found out later while cannibalizing their drives, many drives were in the process of failing with dying sectors, with absolutely no warning signs from the arrays.

I went with FreeNAS for its ease of use with ZFS and its simple replication features. I was already familiar with it from running it in my homelab, and I guess I’m just a sucker for web UIs.

—HARDWARE—

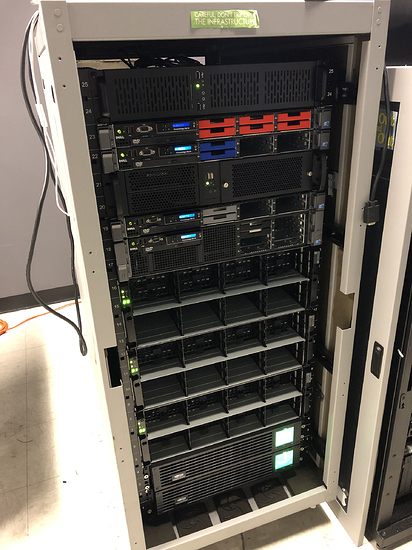

Primary FreeNAS server

- Dell PowerEdge R710 with E5520s and 96GB RAM

- NetApp DS4243 IOM3 with 12x 8TB WD Reds in RAIDZ2 (primary pool “tank”)

On-site backup FreeNAS server, also used for secondary B-tier storage

- Dell PowerEdge R610 with E5520s and 96GB RAM

- NetApp DS4243 IOM3 with 12x 8TB WD Reds in RAIDZ2 (tank backup)

- NetApp DS4243 IOM3 with 12x 4TB and 12x 3TB drives of various models, cannibalized from the previously mentioned OWC hardware RAID arrays, in two RAIDZ3 vdevs. RAIDZ3 because these drives are used, some with 30,000+ hours, and I don’t trust them. I call this pool “bpool” and use it for passive backups of all department computers via Time Machine and Veeam Agent

Disaster Recovery off-site FreeNAS server

- Dell PowerEdge R610 with L5520s and 96GB RAM

- NetApp DS4243 IOM3 with 24x 6TB WD Reds in two RAIDZ2 vdevs. Although these drives are used, they all had under 6,000 hours, so just barely. They were cannibalized from four Buffalo NAS units my boss used for the previous on-site backup

Windows Server 2016 for Active Directory

- Dell PowerEdge R610 with L5520s and 24GB RAM

XCP-NG virtualization server

- Dell PowerEdge R610 with X5670s and 64GB RAM
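A quick way to sanity-check the pool layouts above is parity-only arithmetic: each RAIDZ vdev gives up `parity` drives’ worth of space. This is a rough sketch, not FreeNAS’s own capacity math. It ignores ZFS metadata, padding, slop space and the TB vs TiB difference, so real usable space will be lower. Two layout details are assumptions, since the post only says “two vdevs”: bpool is modeled as one all-4TB vdev plus one all-3TB vdev, and the disaster recovery pool as two 12-drive vdevs.

```python
# Parity-only usable-capacity estimate for RAIDZ pools.
# Each vdev contributes (drives - parity) * drive_size; ZFS overhead is ignored.

def raidz_usable_tb(vdevs):
    """vdevs: list of (drive_count, drive_size_tb, parity) tuples."""
    total = 0
    for drives, size_tb, parity in vdevs:
        if drives <= parity:
            raise ValueError("a RAIDZ vdev needs more drives than parity disks")
        total += (drives - parity) * size_tb
    return total


pools = {
    "tank (primary)": [(12, 8, 2)],              # 12x 8TB, one RAIDZ2 vdev
    "tank backup": [(12, 8, 2)],                 # same layout as primary
    "bpool": [(12, 4, 3), (12, 3, 3)],           # assumed: one vdev per drive size
    "disaster recovery": [(12, 6, 2), (12, 6, 2)],  # assumed: 12 drives per vdev
}

for name, layout in pools.items():
    print(f"{name}: ~{raidz_usable_tb(layout)} TB before ZFS overhead")
```

Under these assumptions the primary and backup pools come out around 80 TB, bpool around 63 TB and the disaster recovery pool around 120 TB, all comfortably above the 35TB+ data set.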

NetApp cont.

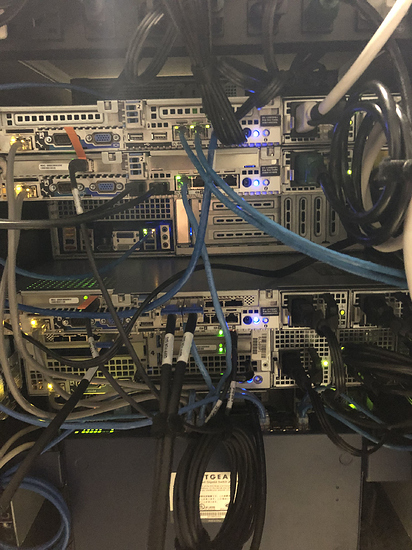

- Each server connected to a NetApp DS4243 has an LSI 9201-16e HBA, which can be found on eBay for approx. $50

- Mini-SAS to QSFP+ cables can be found on Amazon for $50-80 depending on length

Networking

- Three servers have Mellanox MNPA19-XTR 10 Gb cards and are connected directly to each other

- Primary FN is connected to on-site backup FN

- Primary FN is connected to Windows Server (makes changing permissions noticeably faster)

- Everything else is gigabit, including Internet connection

—MORE INFO / TRICKY BITS—

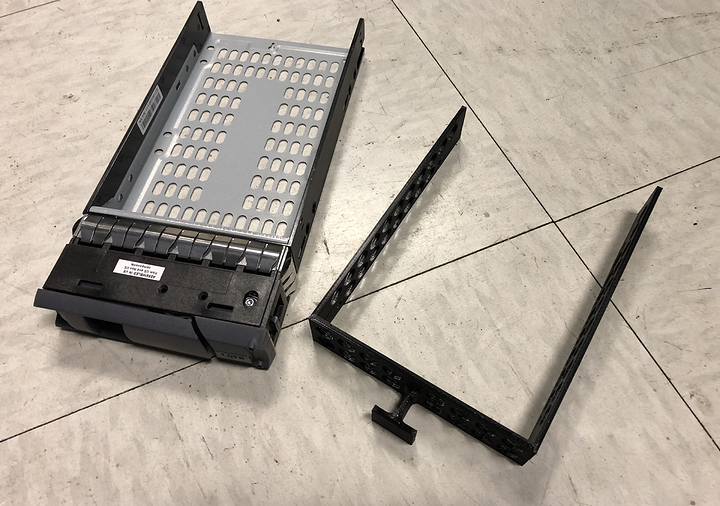

3D printing:

You might get lucky and find DS4243s on eBay at a reasonable price with their disk trays included, but from what I saw, tray-less listings around $150 were much more reliably available. I actually bought a lot of 100 trays for $300, but after realizing I could put my 3D printing hobby to use designing and printing trays myself, I resold them.

I designed a DS4243-compatible disk tray in TinkerCAD and printed it on my WanHao i3 Plus. The trays are designed not to restrict airflow and to use a minimal amount of material. You can print two at a time, which takes 7 hours and costs $0.48 per tray.
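To put the printing numbers in perspective, here is a back-of-the-envelope comparison against the $300-for-100 lot. The per-tray cost, two-trays-per-7-hour print and lot price come from the post; the 72-tray count is an assumption of one tray per drive listed in the hardware section (12 + 12 + 24 + 24).

```python
# Printed vs. bought DS4243 trays, using the figures quoted in the post.
import math

PRINT_COST_PER_TRAY = 0.48         # USD per printed tray
TRAYS_PER_PRINT = 2                # trays per print run
HOURS_PER_PRINT = 7                # hours per print run
BOUGHT_COST_PER_TRAY = 300 / 100   # USD, from the 100-tray lot


def tray_plan(trays_needed):
    """Print runs, printer hours and cost of printing vs. buying trays."""
    runs = math.ceil(trays_needed / TRAYS_PER_PRINT)
    return {
        "print_runs": runs,
        "print_hours": runs * HOURS_PER_PRINT,
        "printed_cost": round(trays_needed * PRINT_COST_PER_TRAY, 2),
        "bought_cost": round(trays_needed * BOUGHT_COST_PER_TRAY, 2),
    }


# Assumed: one tray per populated drive bay across the four shelves.
plan = tray_plan(12 + 12 + 24 + 24)
print(plan)
```

For 72 trays that works out to 36 print runs (252 printer hours) and about $34.56 in material, versus $216 at the lot price. Printing trades money for printer time.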

I also modified a design already on Thingiverse for PowerEdge R610/R710 disk trays which cost $0.30 each to print. You can see those, color coded, in the above hardware pictures.

I’ve had zero issues with these 3D-printed disk trays.

Backups:

The primary FreeNAS server replicates to both the on-site backup AND the off-site backup, which is located across campus.

Initially I had problems with this because FreeNAS’ built-in replication runs over SSH, which, regardless of encryption level, was capping out around 125 Mb/s. At that rate the initial backup of 35TB would have taken about a month. The cap was present even over the direct 10 Gb link between the primary and the backup, and I never figured out why. Instead, I used netcat to pipe a zfs send of the first snapshot into zfs recv on the backup over that direct 10 Gb link. This worked wonderfully: I was able to max out at 3 Gb/s, and that ceiling was only due to the DS4243’s IOM3 modules.
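For anyone wanting to try the netcat approach, the sketch below builds the two halves of the pipeline as command strings, so they can be reviewed before pasting into a shell on each box. Everything named here is a hypothetical placeholder: the snapshot `tank@seed-1`, the target dataset `backup/tank`, the address `10.0.0.2` and port `8023`. Netcat sends the stream unencrypted and unauthenticated, which is why it is fast and also why it only belongs on a trusted link like a direct cable. Listener syntax also varies between netcat implementations (BSD `nc -l PORT` vs. traditional `nc -l -p PORT`), so check `man nc` first.

```python
# Build the receiver and sender halves of a "zfs send | nc" seeding transfer.
# All names below are hypothetical placeholders, not the author's real setup.
import shlex


def quote(parts):
    """Shell-quote each argument and join them into one command string."""
    return " ".join(shlex.quote(p) for p in parts)


def netcat_seed_commands(snapshot, target_dataset, receiver_ip, port):
    # Run on the backup first: listen on the port, pipe the stream into zfs recv.
    receiver = f"{quote(['nc', '-l', str(port)])} | {quote(['zfs', 'recv', target_dataset])}"
    # Then run on the primary: send a full replication stream (-R) of the snapshot.
    sender = f"{quote(['zfs', 'send', '-R', snapshot])} | {quote(['nc', receiver_ip, str(port)])}"
    return receiver, sender


recv_cmd, send_cmd = netcat_seed_commands(
    snapshot="tank@seed-1",        # hypothetical snapshot name
    target_dataset="backup/tank",  # hypothetical new dataset on the backup pool
    receiver_ip="10.0.0.2",        # hypothetical direct-link address
    port=8023,                     # hypothetical port
)
print("on backup: ", recv_cmd)
print("on primary:", send_cmd)
```

Once the seed has landed, later snapshots can go out as incrementals (`zfs send -i`), which FreeNAS’ normal replication tasks can handle even at the slower SSH rate.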

Another problem I ran into: I wrongly assumed my disaster recovery server had a gigabit connection. It turned out to be a 60 Mb/s connection. One amazing feature of ZFS, though, is the ability to export and import pools. So after doing an initial netcat zfs send/recv over the direct-attached 10 Gb link, I exported the pool, sneakernetted it across campus, and imported it into the disaster recovery server. Since then, 60 Mb/s hasn’t been an issue for ongoing replication.
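The arithmetic behind these decisions is easy to reproduce. The sketch below computes idealized transfer times for a 35TB full copy at the rates mentioned in the post, plus how much changed data per day a 60 Mb/s link can carry. It assumes decimal units (1 TB = 10^12 bytes, 1 Mb/s = 10^6 bit/s) and a link that holds its full rate the entire time, so real transfers will take longer.

```python
# Idealized transfer-time arithmetic: time = bytes * 8 / bit rate.

SECONDS_PER_DAY = 86_400


def transfer_days(size_tb, rate_mbps):
    """Days to move size_tb terabytes at a sustained rate_mbps megabits/s."""
    bits = size_tb * 1e12 * 8
    return bits / (rate_mbps * 1e6) / SECONDS_PER_DAY


def daily_budget_gb(rate_mbps):
    """Gigabytes of changes a link can move per day at full rate."""
    return rate_mbps * 1e6 / 8 * SECONDS_PER_DAY / 1e9


for label, rate in [("SSH replication cap", 125),
                    ("netcat over 10 Gb", 3000),
                    ("off-site link", 60)]:
    print(f"{label:>20}: {transfer_days(35, rate):5.1f} days for 35 TB")

print(f"off-site link can carry ~{daily_budget_gb(60):.0f} GB of changes per day")
```

That gives roughly 26 days at 125 Mb/s, about a day at 3 Gb/s, and about 54 days at 60 Mb/s, which is why seeding by sneakernet made sense. Once seeded, the same 60 Mb/s link can move on the order of 648 GB of changes per day, far more than a typical day’s incremental snapshot.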

Restores:

In the planning stages, fewer and faster restores were among my major goals (duh!). My FreeNAS storage cluster is a MASSIVE upgrade in this regard. Previously, if one of the OWC hardware RAID arrays died, restoring from backup took at least 2-3 days, if not more. On my boss’ last day an OWC array did die, and restoring its ~4TB of data over eSATA took from Friday afternoon to Monday morning. Have I stressed enough how shitty those OWC arrays are? Three days of downtime was, in my eyes, professionally unacceptable.

With the new setup, an array/vdev failure is much, much, much less likely because the lowest RAID level is RAIDZ2. In addition, I have FreeNAS email alerts set up, so I’m notified when a drive starts failing and can replace it before it dies. Even if the primary pool failed, a full 35TB restore from backup should take only about 1.5 days.
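To compare the old and new restore scenarios on equal terms, it helps to convert them into sustained throughput. The sketch uses decimal units. “Friday afternoon to Monday morning” is assumed to mean about 64 hours (4 p.m. Friday to 8 a.m. Monday), and the 1.5-day figure is the estimate given above.

```python
# Implied sustained throughput for a restore of size_tb terabytes in `hours`.


def implied_rate(size_tb, hours):
    """Return (MB/s, Gb/s) needed to move size_tb terabytes in `hours`."""
    bytes_per_s = size_tb * 1e12 / (hours * 3600)
    return bytes_per_s / 1e6, bytes_per_s * 8 / 1e9


old_mb, old_gb = implied_rate(4, 64)    # ~4TB OWC array over eSATA (64 h assumed)
new_mb, new_gb = implied_rate(35, 36)   # full 35TB pool restore in 1.5 days
print(f"old: ~{old_mb:.0f} MB/s ({old_gb:.2f} Gb/s)")
print(f"new: ~{new_mb:.0f} MB/s ({new_gb:.2f} Gb/s)")
```

The old restore averaged roughly 17 MB/s. The new estimate implies roughly 270 MB/s (about 2.16 Gb/s), which sits under the ~3 Gb/s ceiling observed for the IOM3 shelves, so the 1.5-day figure is plausible.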

Don’t forget: with ZFS, even if a drive is lying about its health, you’ll be alerted to checksum errors when the scrub runs. You might think drive schizophrenia isn’t a thing, but in the roughly 5 years I’ve worked here I’ve seen at least 3 drives that showed all the symptoms of failure yet came up completely clean in SMART. Trust in ZFS!
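Here is why a scrub catches what SMART misses. ZFS stores a checksum for every block separately from the block itself, and a scrub re-reads every copy and verifies it, repairing bad copies from redundancy. The toy model below illustrates only that idea and is not ZFS’s on-disk format. It uses a two-way mirror and SHA-256 for simplicity (ZFS defaults to fletcher4), and the `ToyMirror` class is purely hypothetical.

```python
# Toy model of checksum-verified scrubbing with self-healing on a 2-way mirror.
import hashlib


def checksum(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


class ToyMirror:
    """Two copies of every block plus one expected checksum per block."""

    def __init__(self, blocks):
        self.copies = [list(blocks), list(blocks)]      # disk 0 and disk 1
        self.expected = [checksum(b) for b in blocks]   # stored separately

    def scrub(self):
        """Verify every copy; repair bad ones from a good copy. Return (disk, block) errors."""
        errors = []
        for i, want in enumerate(self.expected):
            good = [d for d in range(2) if checksum(self.copies[d][i]) == want]
            for d in range(2):
                if d not in good:
                    errors.append((d, i))
                    if good:  # self-heal from a copy that still matches
                        self.copies[d][i] = self.copies[good[0]][i]
        return errors


pool = ToyMirror([b"block-a", b"block-b", b"block-c"])
pool.copies[1][2] = b"block-X"   # silent corruption the drive never reported
print(pool.scrub())              # [(1, 2)]
print(pool.scrub())              # [] once the bad copy has been repaired
```

The drive never reports an error here; the mismatch only surfaces because the data is re-verified against an independently stored checksum, which is exactly what a scheduled scrub does.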

Virtualization:

I went with XCP-NG, the new FOSS fork of Citrix XenServer, because I had issues running Proxmox on this hardware. I use Proxmox at home, but with the R610 and consumer SSDs I was getting extremely high I/O delay. After many weekends of troubleshooting I switched to XCP-NG and haven’t had any issues since. Some VMs I run:

- Active Directory web directory

- Active Directory password reset site

- WSUS

- Calendaring and reservation system

- Nginx reverse proxy

- Cert authority

- Various organization sites

Other servers:

You might have noticed two black 2U servers in the pictures above. One is my 10TB technician FreeNAS server, where I keep images, software, ISOs, etc. The other is an experimental Blue Iris server that isn’t in use yet.