Hello.

I’m building a *BSD (pfSense/OPNsense) router/server for a 200+ player LAN party, and I need some suggestions from people who know a whole lot more about such builds.

First some background:

My team and I have organised 2 big (100-150 player) LANs in the last 2 years.

Both times we used an X99 build with a [email protected] as the router.

It also contained 3x 4-port 1Gbps Intel NICs as links to the switches and a 2-port 10Gbps card from QLogic (HP 530T) as the main link in.

We never even came close to maxing out the CPU, but we have decided to replace the X99 build with something more server-like, as we are expecting more and more players in the near future.

Requirements

We are looking to build a router/server that can handle at least 10Gbps and will stay with us for multiple years. That includes caching things like Steam, Microsoft updates, …

The machine would also run a couple of jails/VMs for game servers.

The current budget is 3000€ (that’s for the motherboard, CPU and RAM only).

We plan on filling the machine up with:

2x 2 Port 10Gbps Ethernet cards*,

2x 2 Port 10Gbps Fiber cards*,

n* 4 Port 1Gbps Ethernet cards**.
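
For a rough idea of the PCIe lane budget this card list implies, here is a minimal Python sketch. The lane widths per card (x8 for the 2-port 10Gbps cards, x4 for the 4-port 1Gbps cards) are assumptions based on what such cards commonly use, not figures from any specific datasheet, and `LANES_PER_CARD` and `lane_budget` are just illustrative names.

```python
# Rough PCIe lane budget for the planned card list.
# ASSUMPTION: lane widths are typical values, not taken from a datasheet.
LANES_PER_CARD = {
    "2-port 10Gbps Ethernet": 8,
    "2-port 10Gbps Fiber": 8,
    "4-port 1Gbps Ethernet": 4,
}


def lane_budget(quad_gigabit_cards: int) -> int:
    """Lanes needed for 2 + 2 ten-gigabit cards plus n quad-gigabit cards."""
    return (
        2 * LANES_PER_CARD["2-port 10Gbps Ethernet"]
        + 2 * LANES_PER_CARD["2-port 10Gbps Fiber"]
        + quad_gigabit_cards * LANES_PER_CARD["4-port 1Gbps Ethernet"]
    )


if __name__ == "__main__":
    # Print the budget for a few possible values of n.
    for n in range(1, 5):
        print(f"{n} quad-gigabit card(s): {lane_budget(n)} lanes")
```

The total is only meant to be compared against the lanes a given CPU exposes directly; anything beyond that would presumably end up behind a PLX chip or the chipset.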

So far I have found 2 motherboards that I assume would be good enough for our use case.

MW51-HP0 - Intel LGA2066 socket

I assume Intel CPUs are tried and tested in such a use case.

The CPUs boost to a higher clock than any AMD EPYC CPU currently on the market, but due to the budget I would be trading cores, memory and PCIe lanes for additional clock speed.***

The fact that the motherboard uses a PLX chip to get some of the lanes also concerns me. I assume if I used 4x PCIe cards in the last 4 slots there would be no issue, but upgrading down the line…

Also the board only comes with 2x 1Gbps NICs (shared over DMI3).

MZ31-AR0 - AMD SP3 Socket

The board already has 2x SFP+ 10Gbps slots eliminating one PCIe card.

Everything important is directly connected to the CPU so no PLX chips.

Also a bunch more RAM slots.

The problems I see are that AMD EPYC is clocked lower than Intel chips***, and that AMD EPYC is not mentioned anywhere in the OpenBSD documentation (and yes, I know from the Phoronix testing that the CPU works with OpenBSD).

Why not use a dual socket server?

We tried using a dual-socket Intel server, but we had some problems: if one of the cards was attached to CPU1 and the rest to CPU0, they would sometimes drop packets or not even link at all.

Why not upgrade the X99 build?

The same issue as listed for the Intel platform above: PLX chips.

TL;DR:

More Cores (AMD) or More GHz (Intel) for a *Sense router build?

All recommendations are welcome. Just make sure they are reasonable.

* The cards would be used for incoming links from the school or facility where we host the party. Some can only provide high-speed fiber, others only Ethernet.

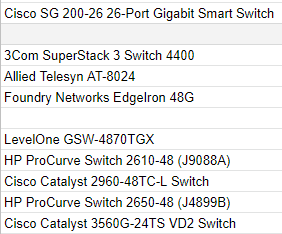

** The cards would be upgraded as the need for more connectivity grows. We currently want multiple outgoing links because most of our switches only support Gigabit, and because we need multiple separate LANs.

*** Because I have only tested with high-clock-speed CPUs, I don’t really know what pfSense/OPNsense would prefer, cores or speed. If anyone has done the testing and has some numbers to share, please let me know in the comments.

(I might edit this to add more information later, so I reserve this small section for edit notes. Also, IF this build becomes reality sooner rather than later, I don’t mind posting performance numbers and a build log here.)

Edit 1: Added a note about recommendations.