I don’t have any performance metrics on how non-optimal stripes perform vs optimal stripes, but I can imagine we’re talking about a 15-20% peak performance hit.

First of all, thanks for all the replies so far!

Config 3 gives the least amount of storage available. Two out of five disks will be dedicated to parity, which is a huge loss per vdev. What if the server cabinet had 24 (or 36) bays? That gives each vdev an additional data drive, bringing the disk total up to 6 per vdev.

Can’t you change the cluster size to make it write out the data in powers of two? I think I read something about this. For example, if you changed the cluster size to 96KiB, that would give a multiple of two written to each data disk: 5-disk RAID-Z2 = 96KiB / 3 = 32KiB.
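The arithmetic behind that question can be sketched in a few lines. This is only a rough illustration: `per_disk_chunk` and `is_power_of_two` are made-up helper names, and the sketch assumes a record is split evenly across the data disks, which ignores padding and any limits on which sizes the filesystem actually accepts.

```python
from fractions import Fraction

def per_disk_chunk(record_kib, total_disks, parity_disks):
    """KiB of one record landing on each data disk, assuming an even split."""
    data_disks = total_disks - parity_disks
    return Fraction(record_kib, data_disks)

def is_power_of_two(value):
    """True if value is a whole number and a power of two."""
    if value.denominator != 1 or value.numerator <= 0:
        return False
    return (value.numerator & (value.numerator - 1)) == 0

# 5-disk RAID-Z2 has 3 data disks; compare the default 128 KiB with 96 KiB.
for record_kib in (128, 96):
    chunk = per_disk_chunk(record_kib, total_disks=5, parity_disks=2)
    print(f"{record_kib} KiB -> {float(chunk):.2f} KiB per data disk, "
          f"power of two: {is_power_of_two(chunk)}")
```

With 96 KiB each of the three data disks gets a clean 32 KiB, while 128 KiB does not divide into whole KiB per disk.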

Also is it beneficial to have larger cluster sizes for large files?

The server cabinet is not bought yet. Just found a good deal on the case with 20x bays. Not sure if the cabinet even has a brand. Here is a link if you’re curious. (It’s in Norwegian) https://www.finn.no/bap/forsale/ad.html?finnkode=106438409

Although looking at the non-optimal vdev configurations, I might want to look for a different one. Perhaps a 24 or 36 bay chassis? If so, does anyone have recommendations for a decent storage server chassis?

What kinds of problems are we talking about? Reliability issues? Incompatibility?

Yes, and a faulted pool has 0 storage capacity.

That’s what backups are for. But it’s a fair point.

Sorry, didn’t mean to sound like a dick, by the way. I’ve been in your position, and learned the hard way that raidz1 is not a good option.

I have a 6 5TB disk raidz2 in my NAS here, running on a slow, soon (tomorrow actually, yay!) to be upgraded amd e350 motherboard. Scrubs take 24 hours. Resilvering a drive takes over 12 hours. Restoring all my data, about 10TB, from a backup over the 1Gbit network takes even longer, days, and that’s with SATA III drives and controllers. The case you linked is SATA I/II, so unless you’re going to massively upgrade the internals, it’ll take longer than that for you.

If I were you I’d save myself future headaches and go for the raidz2, or even a mirrored stripe option.

I’m going to quote myself from another thread even though I know you’ve specifically said Redundancy and Space is more important to you:

And here’s why:

You have to remember, RAID is NOT backup.

RAID’s purpose is to have the data be accessible even if drives fail. Accessible. Not safe.

The only reason anyone should ever use RAID is so that they don’t have to sweat if a drive is dying or dies on them. So that they don’t have to immediately drop everything and deal with the problem the moment it comes up.

Even then, they should still fix the problem the moment they become aware of it.

Again, say it with me, RAID is not backup.

Rebuilding an array is just as important as how you lay it out to begin with. Having good performance is necessary to rebuild arrays reliably and quickly.

I also want to point out that your end game of 20 drives doesn’t mesh well with the vdev sweet spot of 6-8 drives each. 6 through 8 don’t easily divide into 20.

Edit: I now see the posts mentioning you looking at different chassis. /edit

People thought it was. Now, not so much.

Where RAID 5 is RAIDZ1.

TL;DR: Drive specs were upped to meet the necessary requirements for RAID5 to continue to be reliable.

You’re still right about it taking forever though.

No worries, didn’t see it as a ‘snarky’ comment or anything. It’s a good point to bring forth. Especially since I won’t be having a backup server anytime soon…

Interesting that it takes that long on six 5TB drives. I can’t even imagine the time it would take on a 10-disk raidz2 vdev. Thanks for the time estimates. Really valuable advice.

One drive has a max throughput of around 150MB/s (WD Red 4TB). SATA II can handle about 3Gb/s, which is about 375MB/s raw (roughly 300MB/s usable after 8b/10b encoding overhead). So SATA II shouldn’t be a bottleneck. Unless I’m doing the math completely wrong, which might be likely.
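For anyone wanting to double check that math, here is a small sketch. It assumes the usual 8b/10b line encoding for SATA (8 payload bits per 10 bits on the wire) and ignores protocol overhead beyond that; `sata_usable_mb_per_s` is just an illustrative helper name, and the 150MB/s drive figure is the one quoted in the post.

```python
def sata_usable_mb_per_s(line_rate_gbit, encoding_efficiency=0.8):
    """Usable payload bandwidth in MB/s for a SATA link.

    encoding_efficiency=0.8 models 8b/10b encoding (an assumption that
    ignores any further protocol overhead).
    """
    payload_bits_per_s = line_rate_gbit * 1e9 * encoding_efficiency
    return payload_bits_per_s / 8 / 1e6

drive_mb_per_s = 150  # sequential throughput quoted for one WD Red 4TB

for name, rate in (("SATA I", 1.5), ("SATA II", 3.0), ("SATA III", 6.0)):
    usable = sata_usable_mb_per_s(rate)
    print(f"{name}: ~{usable:.0f} MB/s usable, "
          f"{usable / drive_mb_per_s:.1f}x one drive")
```

Under those assumptions a SATA II port still has about twice the bandwidth of one spinning drive, while a SATA I port would sit right at the drive's limit.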

I assume you mean 4 vdevs with 5 disks in raidz2?

No, avoid 5 disk raidz2 for the reason @SgtAwesomesauce mentioned earlier on in the thread, performance will take a pretty big hit. I’d go for either 4 or (edit: preferably) 6 disks per vdev in that case.

You’d want a chassis with a number of drive bays that is divisible by the ideal vdev width of 6-8 disks.

So 24 is a multiple of both 6 and 8, and 36 is a multiple of 6, but 20 is a multiple of neither.
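A quick way to see how each chassis size splits into vdevs of a given width is to look at the remainder. This is a minimal sketch; `vdev_layouts` is a hypothetical helper, and the 6 to 8 disk range is simply the sweet spot mentioned in this thread.

```python
def vdev_layouts(bays, widths=(6, 7, 8)):
    """For each vdev width, return (number of full vdevs, leftover bays)."""
    return {width: divmod(bays, width) for width in widths}

for bays in (20, 24, 36):
    for width, (vdevs, leftover) in vdev_layouts(bays).items():
        note = "fills the chassis" if leftover == 0 else f"{leftover} bay(s) left over"
        print(f"{bays} bays, {width}-disk vdevs: {vdevs} vdevs, {note}")
```

Leftover bays are not necessarily wasted, since they can hold spares, but a remainder of zero is what lets every bay contribute to the pool.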

Thanks for the detailed post! The article you linked was a good read. Could you also give more details in how you think the vdevs should be configured?

Yeah, I get that RAID is redundancy, not a backup. The server room is going to be located 100km (62 miles) away from where I normally live, so redundancy is important because of that. I’m planning a backup server, but that’s a few years out.

Fair point. I agree with the points about the rebuild putting less stress on the other drives in the vdev. But doesn’t a mirrored vdev reduce the redundancy quite a bit? When a disk fails, the entire pool depends on the remaining disk to complete the rebuild. If that disk fails, the entire pool is lost.

It might also bring in unrecoverable errors (URE) since all the data comes from the remaining disk. Raidz2 solves this since it has double the parity. It can then double check the information if the data is conflicting during rebuild. However, if two drives fail, it might bring UREs. Unrecoverable errors could cause corrupted data, which is not at all ideal.

The added speed and IOPS is a nice bonus though.

Sorry, this was a bit confusing. I meant 20 drives to max out the server with maximum disks. If I had a 24 bay server, max would be 24. Same goes for a 36 bay.

My gut tells me raidz1 is far too little redundancy for a server of this size. Plus I won’t be having a backup solution for a while. (I know I should back it up, but no one has infinite money.)

You probably meant striped.

In the thread I quoted myself from, I talk about the redundancy of RAID 10 VS RAIDZ1 VS RAIDZ2. Here you go:

Yes, 24 or 36 would be more ideal when using ZFS.

However, here’s something else to consider: Cold swap drives that wait for use.

What I mean is, pretend you still used the 20 drive chassis. Instead of using all 20 drives, use 16 and have 4 turned off, but in their bays.

Presuming you have some remote ability to manage the server, if you have a drive failure, you can remove that failed drive from the array, turn one of the unused drives on, then add it to the array and have it resilver.

This means you could repair RAID failures remotely, and just go replace the failed drive when you feel like it.

Four drives sitting there doing nothing is a bit significant though, so I can see why you may wish to not do that. However, another thing to consider is that 4 drives is the minimum RAID 10 number.

So you could have a second array for whatever reason. Performance or otherwise.

These are just ideas, but if I were you and I wanted max capacity, I would go for 24 or 36 to maximize capacity and efficiency.

What is the Mean Time Between Failure for the drives you intend to use? What are their URE specifications?

These two things along with their capacity should give you all the needed info to use the online calculators that exist specifically for calculating the odds of a RAID array failing.

IMO, even with 20 drives, 2 drives failing is very unlikely. Unlikely enough that I personally would trust that array to not lose my data.

HOWEVER, you should note in my above quote, when I mention “two drives failing”, I’m talking about a RAID 10 with 4 drives.

With RAIDZ1 and RAIDZ2, the more drives you use, the more capacity efficiency you get. With RAID 10, you’ll always get 50% capacity efficiency.

This means that yes, you sacrifice half your storage, but you also get a lot more potential redundancy.

What do I mean by potential redundancy?

You have 20 drives. A RAID 10 is a RAID 1 of a RAID 0 (or vice versa, but this way is simpler to set up). So you have two RAID 0s of 10 drives each, and a RAID 1 across those two RAID 0s.

If you lose 2 drives, one from each RAID 0, you lose your data.

If you lose 10 drives from one of the RAID 0’s, you lose no data.

You can see how that can be a blessing and a curse. So that’s not exactly ideal, because while it gives you the possibility of huge redundancy, the lower bound is 2 and that means this is a bit better than RAIDZ1 but a bit worse than RAIDZ2 (as my quote above talks about).
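To put a number on that blessing and curse, here is a rough sketch for the layout described above (a mirror of two equal stripes). It assumes two drives fail at random and at the same time, and it does not cover the stripe-of-mirrors layout, which behaves differently. `raid01_two_failure_survival` is a made-up name for illustration.

```python
from fractions import Fraction
from math import comb

def raid01_two_failure_survival(drives_per_stripe):
    """Chance that two random drive failures leave a mirror of two
    equal stripes intact. Data survives only if both failed drives
    sit in the same stripe, leaving the other stripe untouched."""
    total_drives = 2 * drives_per_stripe
    all_pairs = comb(total_drives, 2)
    same_stripe_pairs = 2 * comb(drives_per_stripe, 2)
    return Fraction(same_stripe_pairs, all_pairs)

p = raid01_two_failure_survival(10)
print(f"20 drives as two 10-drive stripes: {p} = {float(p):.1%}")
```

For 20 drives this comes out at 9/19, so a little under a coin flip.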

I actually have a question for you. What are the options for increasing RAID 10 redundancy when you have this many disks? I’m trying to consider if there’s a way to reasonably set up the RAID such that redundancy is >=2 disks without losing space. I don’t think that’s possible however.

Still, kad, it all depends on how likely you think 2 disk failures are. I think it’d be low enough to not worry about, and even if it does happen, you have a roughly 50% chance to not lose your data anyway.

Up to you.

Sorry to take so long to get back to you.

So, changing the cluster size is definitely an option, but keeping it as a power of two, as opposed to a multiple of two will significantly improve performance. That said, this will reduce ancillary wear on the disks.

On the large cluster sizes topic: The more spindles you have, the more IOPS and raw throughput you’ll have.

This looks just like an unbranded Norco case.

You could argue that any improvements to a single drive also affect RAIDZ2, thereby canceling any benefits RAIDZ1 has over RAIDZ2.

This may be true, but depending on your budget, Z1 may be far more affordable than Z2.

Sorry it took a while to get back to you.

URE specification is 1 in 10^14 bits read.

Mean time between failures is 1,000,000 (1 million) hours.
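Those two figures can be plugged into a very simple model, sketched below. It assumes every bit read fails independently at the quoted rate and that drives fail at a constant rate, both of which are simplifications; the URE spec is an upper bound, so real drives often do better. The function names and the 4TB read size are illustrative assumptions.

```python
import math

def p_at_least_one_ure(bytes_read, ure_rate_per_bit=1e-14):
    """Probability of at least one unrecoverable read error while reading
    bytes_read bytes, assuming independent errors at a constant per-bit rate."""
    bits = bytes_read * 8
    # 1 - (1 - rate)^bits, written with log1p/expm1 to stay accurate for tiny rates.
    return -math.expm1(bits * math.log1p(-ure_rate_per_bit))

def annual_failure_rate(mtbf_hours, hours_per_year=8766):
    """Approximate yearly failure probability under a constant failure rate."""
    return -math.expm1(-hours_per_year / mtbf_hours)

four_tb = 4e12  # bytes, one full read of a 4TB drive
print(f"URE chance over one full 4TB read: {p_at_least_one_ure(four_tb):.1%}")
print(f"Annual failure rate at 1,000,000 h: {annual_failure_rate(1_000_000):.2%}")
```

Under this pessimistic model a full read of one 4TB drive has a bit more than a one in four chance of hitting a URE, and the MTBF figure works out to just under 1% failure chance per drive per year.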

Yeah, I get that it is unlikely. But I don’t really want to risk losing the entire pool. Especially since I don’t have a backup. (Yes, I know, RAID is not backup.)

Sorry, but I don’t see how this has more redundancy than, say, raidz2. In a RAID 10 configuration, it can only lose one disk per vdev. Even if the rebuild is faster, the risk seems to be higher than raidz2 (or even raidz3, but that is a bit overkill).

Mirror depth is how you increase redundancy. So with a typical pool of mirrors setup, let’s boil this down to 4 disks, you have two 2-disk mirrors striped together. Happiness. You’re guaranteed to be able to lose 1 disk, and have a roughly 66% chance to lose a second disk and still be fine. Technically speaking, this loses out in redundancy to a 4 disk RAIDZ2 where you are guaranteed to be able to lose 2 disks and be fine.

However, as you add disks, you can reshape your mirrors. With 6 disks you can have either three 2-disk mirrors and get the performance boost, but still only be guaranteed to be good after 1 disk, or you can have two 3-disk mirrors. A two 3-disk mirror setup would allow you to lose any two drives and still be fine, and then a certain percentage chance to lose another couple of drives and still be fine. With a 6 disk RAIDZ2, you still only get those 2 disk failures. Any third disk failure is catastrophic.

3-disk mirrors sacrifice more space for that redundancy though.
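The percentages above can be checked by brute force. The sketch below enumerates every possible set of failed disks and counts how many the pool survives, assuming each set is equally likely. `survival_chance` and the `(disk_count, tolerated_failures)` tuples are an illustrative model, not anything ZFS itself exposes.

```python
from itertools import combinations

def survival_chance(vdevs, failures):
    """Fraction of equally likely failure sets that the pool survives.

    vdevs is a list of (disk_count, tolerated_failures) tuples; the pool
    is lost as soon as any vdev exceeds its tolerance.
    """
    # Label every disk with the index of the vdev it belongs to.
    owner = [v for v, (count, _) in enumerate(vdevs) for _ in range(count)]
    outcomes = list(combinations(range(len(owner)), failures))
    survived = 0
    for failed in outcomes:
        lost = [0] * len(vdevs)
        for disk in failed:
            lost[owner[disk]] += 1
        if all(lost[v] <= tol for v, (_, tol) in enumerate(vdevs)):
            survived += 1
    return survived / len(outcomes)

layouts = {
    "two 2-disk mirrors": [(2, 1)] * 2,
    "4-disk raidz2": [(4, 2)],
    "three 2-disk mirrors": [(2, 1)] * 3,
    "two 3-disk mirrors": [(3, 2)] * 2,
    "6-disk raidz2": [(6, 2)],
}
for name, vdevs in layouts.items():
    chances = ", ".join(
        f"{k} failed: {survival_chance(vdevs, k):.0%}" for k in (1, 2, 3)
    )
    print(f"{name}: {chances}")
```

For two 2-disk mirrors this gives the roughly two in three chance of surviving a second failure, and for two 3-disk mirrors it shows that a third failure is still survivable most of the time, whereas a 6-disk raidz2 never survives it.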

After all the great feedback from everyone, I see that the 20 bay server is a no-go. Therefore I will be looking into a 24 or 36 bay one instead. This changes quite a bit how the vdevs can be split.

Here are some new configurations based on everyone’s input. The maximum number of vdevs is based on either 24 or 36 bays. I would really appreciate your thoughts on them. What would you choose in this scenario?

Configuration #4

24 bay: (4 vdevs, 6 disk per, raidz2): 65.54TB usable.

36 bay: (6 vdevs, 6 disk per, raidz2): 98.30TB usable.

Each vdev can lose at most 2 disks. Rebuilds are slow, and will strain the remaining disks. Potentially long scrub and resilvering times (thanks @magicthighs). 66% space efficiency.

Configuration #5 (as @Vitalius suggests)

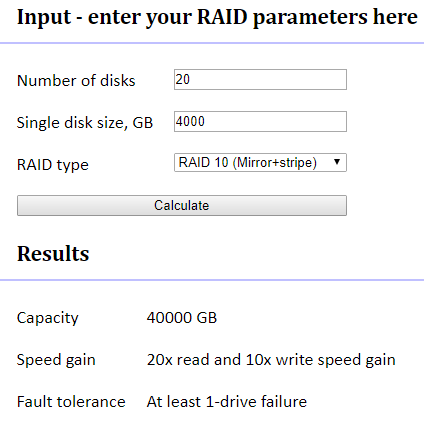

24 bay: (6 vdevs, 4 disk per, raid10): 49.15TB usable.

36 bay: (9 vdevs, 4 disk per, raid10): 73.73TB usable.

Each vdev can lose at most 1 disk. Rebuilds are fast, and won’t strain the other disks in the vdev as much. 50% space efficiency.

Configuration #6 (as @Levitance suggests)

24 bay: (8 vdevs, 3 disk per, 3-way mirror): 32.77TB usable.

36 bay: (12 vdevs, 3 disk per, 3-way mirror): 49.15TB usable.

Each vdev can lose at most 2 disks. Rebuilds are fast, and won’t strain the other disks in the vdev as much. 33% space efficiency.
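The usable figures in configurations #4 to #6 can be reproduced with the sketch below. Note the assumption: the numbers in the post line up with about 4.096TB per data disk, which is inferred from the totals rather than stated anywhere, and the sketch ignores ZFS metadata, padding, and reserved space. `usable_tb` and `efficiency` are illustrative helper names.

```python
PER_DISK_TB = 4.096  # assumption: inferred from the totals quoted in the post

def usable_tb(vdevs, disks_per_vdev, redundant_per_vdev, per_disk_tb=PER_DISK_TB):
    """Usable capacity as data disks times disk size, with no filesystem overhead."""
    data_disks = vdevs * (disks_per_vdev - redundant_per_vdev)
    return data_disks * per_disk_tb

def efficiency(disks_per_vdev, redundant_per_vdev):
    """Share of raw capacity that ends up holding data."""
    return (disks_per_vdev - redundant_per_vdev) / disks_per_vdev

# (label, vdev count, disks per vdev, disks per vdev spent on redundancy)
configs = [
    ("#4 raidz2, 24 bay", 4, 6, 2),
    ("#4 raidz2, 36 bay", 6, 6, 2),
    ("#5 raid10, 24 bay", 6, 4, 2),
    ("#5 raid10, 36 bay", 9, 4, 2),
    ("#6 3-way mirror, 24 bay", 8, 3, 2),
    ("#6 3-way mirror, 36 bay", 12, 3, 2),
]
for label, vdevs, width, redundant in configs:
    print(f"{label}: {usable_tb(vdevs, width, redundant):.2f}TB usable, "
          f"{efficiency(width, redundant):.0%} efficiency")
```

Changing `PER_DISK_TB` makes it easy to redo the comparison for other drive sizes.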

Personally, #5 because I wouldn’t be worried about losing a 2nd disk, but since you care a bit more about it than I seem to, for you, #6.

Yes, it loses a lot of storage, but the performance is important for redundancy. Speed in rebuilding an array is almost as important as how many disks it can lose if something catastrophic happens. Say, a power surge that kills one drive and cripples another, and you don’t know how long any of the drives will last.

It simply boils down to balancing Redundancy to Storage Efficiency to Performance.

You can do well at 2 if you sacrifice the 3rd, and you’ve already chosen Redundancy as very important, so now you have to choose between Storage Efficiency and Performance.

But Performance is a key component to Redundancy, as I mentioned, in certain situations.

Sorry, I know this is a relatively old thread. Just have a quick question…

I have a 36-bay server. I would like to have 5 7-disk RAID-Z2 vdevs + hot spare. I understand that this is not optimal, but I’m not sure if a nonstandard recsize is advisable or if it will cause more harm than good. The workload is backup/archive, but I’d still like the performance to be as good as it can be with the disk config.

EXAMPLE: 128k vs 120k

(128/5 = bad, log2(128) = good)

VS

(120/5 = good, log2(120) = bad)

If there is no clear answer, I will run some tests, but if there is, then I’d rather save the time.
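The two criteria in that example can be checked mechanically. The sketch below is arithmetic only: it assumes 4KiB sectors (ashift=12) and an even split across the 5 data disks of a 7-disk RAID-Z2, and it does not model how raidz actually allocates and pads blocks. As far as I know, ZFS only accepts power-of-two recordsize values, so 120k may not be settable at all; treat that as something to verify. `check_recsize` is a hypothetical helper.

```python
SECTOR_BYTES = 4096  # assumption: 4 KiB sectors (ashift=12)

def check_recsize(recsize_kib, data_disks=5):
    """Report how a record size fares on the two criteria from the post:
    whole sectors per data disk, and being a power of two."""
    total_bytes = recsize_kib * 1024
    splits_evenly = total_bytes % (data_disks * SECTOR_BYTES) == 0
    power_of_two = recsize_kib > 0 and (recsize_kib & (recsize_kib - 1)) == 0
    return {
        "per_disk_kib": recsize_kib / data_disks,
        "splits_evenly": splits_evenly,
        "power_of_two": power_of_two,
    }

for recsize_kib in (128, 120):
    print(recsize_kib, check_recsize(recsize_kib))
```

This only confirms the trade-off as stated: 128k is a power of two but leaves a ragged split, 120k splits into 24KiB per data disk but is not a power of two. Which one performs better is still something a benchmark would have to answer.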

I’m not sure which would be better. That’s something you’ll have to investigate on your own. I don’t have any resources indicating which would be better.