I have an issue getting top speeds between my ZFS array and my 10G NIC, and I don’t know why.

I get 900 MB/s from ZFS RAM cache to PC RAM disk (across a link between 2 NICs)

580 MB/s ish from array to M.2 SSD (internally within the server)

580 MB/s ish from M.2 SSD to PC RAM disk (across a link between 2 NICs)

but 200 MB/s if I’m lucky between the array and PC RAM disk (across the link between 2 NICs)
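A rough way to read these four numbers, as a sketch rather than a diagnosis: if the stages of the array-to-PC path overlap fully, the slowest stage should set the pace. The variable names are labels made up for this sketch, and the 580 MB/s array figure may itself be capped by the SSD's write speed, so the real array read rate could be higher.

```python
# Measured rates from the post, in MB/s. The names are labels chosen
# for this sketch, not output from any tool.
array_read = 580    # array -> M.2 SSD, inside the server
network_smb = 900   # ZFS RAM cache -> PC RAM disk, over the 10G link
observed = 200      # array -> PC RAM disk, over the 10G link


def expected_rate(*stage_rates):
    """Assume the stages overlap fully, so the slowest one sets the pace."""
    return min(stage_rates)


expected = expected_rate(array_read, network_smb)
shortfall = observed / expected

print(f"expected about {expected} MB/s, observed {observed} MB/s "
      f"({shortfall:.0%} of expected)")
```

Since neither stage is that slow on its own, the gap points at how the two interact during a real transfer rather than at either one in isolation.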

Hardware

2x 8GB RAM

2x ASUS XG-C100C NICs

1x Ryzen 5 3400G

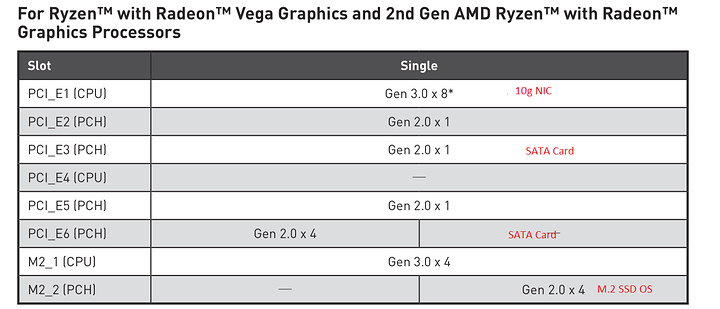

1x MSI X470 GAMING PLUS MAX Motherboard

1x M.2 SSD

2x Ziyituod SATA 3.0 Card

10x 3TB WD Reds

Software

Ubuntu Server

ZFS raidz2

Run an iperf test.

What is the size of the files being transferred?

What type of transfers are you using to see that speed?

SMB, and stix files between 250 MB and 500 MB

I don’t have access to the server right now, but will do tomorrow.

That will be the best thing to get a baseline. ZFS performance can vary a good bit depending on what you’re doing.

Things I’d look into:

IOPS vs. throughput: a single vdev will prioritize throughput over IOPS. Adding another raidz2 vdev will increase IOPS.

Sync vs. async: I see you don’t have a SLOG, so sync writes will suffer a slight penalty. Reads should be fine.
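To put rough numbers on the vdev point, here is a minimal sketch using the common rule of thumb that a raidz vdev delivers about one disk's worth of random IOPS. The function name and the 100 IOPS per disk figure are assumptions for illustration, and the capacity figure ignores padding and metadata overhead.

```python
def raidz2_layout(total_disks, vdevs, disk_tb, disk_iops):
    """Rough capacity and random-IOPS estimate for equal-width raidz2 vdevs."""
    if total_disks % vdevs != 0:
        raise ValueError("disks must divide evenly across vdevs")
    width = total_disks // vdevs
    if width < 3:
        raise ValueError("a raidz2 vdev needs at least 3 disks")
    data_disks = (width - 2) * vdevs  # raidz2 spends 2 disks per vdev on parity
    return {
        "width": width,
        "usable_tb": data_disks * disk_tb,  # ignores padding and metadata
        "approx_iops": vdevs * disk_iops,   # rule of thumb: one disk per vdev
    }


# 10x 3TB drives as in the post; 100 IOPS per disk is a hypothetical value.
for vdevs in (1, 2):
    print(vdevs, raidz2_layout(10, vdevs, disk_tb=3, disk_iops=100))
```

Under those assumptions, splitting the ten drives into two 5-wide raidz2 vdevs roughly doubles random IOPS but costs two more drives of parity.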

Fixed. I guess it auto-corrected to that, idk.

risk

September 2, 2020, 7:34pm

9

Could try transferring using socat.

I will run an iperf test tomorrow and get back to you two. Thanks for the help.

Thanks for the suggestion, will do.

zlynx

September 3, 2020, 2:24am

13

Not that this is a problem exactly, but I am pretty sure that your M.2 SSD is a SATA drive. That 580 MB/s is right there where an M.2 SATA drive would be. I’m a little surprised that you didn’t go NVMe.

zlynx

September 3, 2020, 2:35am

14

Something to look for on the ZFS host machine with whatever tools you have. I like atop and iostat.

During the ZFS file transfer over the network, look for bottlenecks. Is one or more of the drives at 100% IO wait? Is one of the CPU cores at 100%? Or similarly, is your smbd at 100%?
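If atop is not installed, the per-core check can be approximated from two snapshots of `/proc/stat` on Linux. This is a minimal sketch, not a replacement for atop or iostat; the function names are made up for illustration, and it counts iowait as idle time.

```python
import time


def parse_proc_stat(text):
    """Return {core: (busy_jiffies, total_jiffies)} from /proc/stat content."""
    cores = {}
    for line in text.splitlines():
        parts = line.split()
        # Keep per-core lines ("cpu0", "cpu1", ...); skip the "cpu" aggregate.
        if not parts or not parts[0].startswith("cpu") or parts[0] == "cpu":
            continue
        # Fields: user nice system idle iowait irq softirq steal
        fields = [int(v) for v in parts[1:9]]
        idle = fields[3] + fields[4]  # idle + iowait
        total = sum(fields)
        cores[parts[0]] = (total - idle, total)
    return cores


def busy_percent(before, after):
    """Per-core busy percentage between two parse_proc_stat() results."""
    result = {}
    for core, (busy1, total1) in after.items():
        busy0, total0 = before.get(core, (0, 0))
        elapsed = total1 - total0
        result[core] = 100.0 * (busy1 - busy0) / elapsed if elapsed else 0.0
    return result


def sample(interval=1.0):
    """Read /proc/stat twice, `interval` seconds apart (Linux only)."""
    with open("/proc/stat") as f:
        before = parse_proc_stat(f.read())
    time.sleep(interval)
    with open("/proc/stat") as f:
        after = parse_proc_stat(f.read())
    return busy_percent(before, after)
```

Calling `sample()` in a loop while the SMB copy runs would show whether a single core sits near 100% while the others idle, which is the pattern to expect if one smbd process is the limit.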

I ran an iperf test and got 500-600 MBytes until I increased the simultaneous connections to 3, then it hit 1.05 GBytes.

I am taking this to mean that it’s more a Samba/SMB issue than a hardware issue.
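For comparison against the 10 Gbit/s link speed, the iperf figures can be converted to bits, assuming they are per-second rates. This is a small sketch; `to_gbit_per_s` is a name made up here, and the default of 10**6 bytes per MByte is an assumption (iperf may count a MByte as 2**20 bytes, in which case pass that instead).

```python
def to_gbit_per_s(mbytes_per_s, bytes_per_mbyte=10**6):
    """Convert a rate in MBytes/s to Gbit/s (1 Gbit = 10**9 bits)."""
    return mbytes_per_s * bytes_per_mbyte * 8 / 1e9


# Figures from the post: one stream (500-600 MBytes), three streams (1.05 GBytes).
for label, rate in [("1 stream, low", 500),
                    ("1 stream, high", 600),
                    ("3 streams", 1050)]:
    decimal = to_gbit_per_s(rate)
    binary = to_gbit_per_s(rate, bytes_per_mbyte=2**20)
    print(f"{label}: {decimal:.2f} Gbit/s (decimal), {binary:.2f} Gbit/s (binary)")
```

Either way, a single stream fills roughly half of the link and three streams come close to filling it, which fits the reading that the network hardware itself can carry the load.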

I might do in the future, but it was just a case of cost. I just needed a bigger drive at the time.

The 2 redundant drives and the space I would get seemed like a good trade-off.