So I finally got around to setting up the 10 Gig network link between my machine and my TrueNAS system. It's an improvement, but I am a little disappointed.

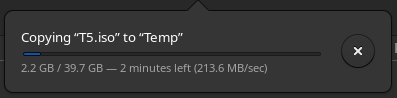

I'm hoping to get some ideas on where to start investigating my performance issues, and whether it's actually feasible to get more throughput. My initial tests over SMB gave ~200 MB/sec writing and a tad less reading; this was just copying a single 50 GB ISO file. With iperf3 I can easily get close to line rate (about 7.8 Gbit/s, results below).
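To put the two numbers side by side it helps to convert them to the same unit. This is a minimal arithmetic sketch, assuming the ~200 MB/sec SMB figure means decimal megabytes per second; the function and variable names are my own.

```python
# Put the SMB copy speed and the iperf3 result in the same units.
# Assumption: "MB/sec" means decimal megabytes (10**6 bytes) per second.

BITS_PER_BYTE = 8


def gbit_to_mbyte_per_s(gbit_per_s: float) -> float:
    """Decimal Gbit/s -> decimal MB/s."""
    return gbit_per_s * 1e9 / BITS_PER_BYTE / 1e6


def mbyte_to_gbit_per_s(mbyte_per_s: float) -> float:
    """Decimal MB/s -> decimal Gbit/s."""
    return mbyte_per_s * 1e6 * BITS_PER_BYTE / 1e9


line_rate_mbyte = gbit_to_mbyte_per_s(10.0)   # nominal 10 GbE, before protocol overhead
iperf3_mbyte = gbit_to_mbyte_per_s(7.84)      # iperf3 average from the results below
smb_write_mbyte = 200.0                       # approximate SMB write speed

print(f"10 GbE line rate: {line_rate_mbyte:.0f} MB/s")
print(f"iperf3 average:   {iperf3_mbyte:.0f} MB/s")
print(f"SMB write:        {smb_write_mbyte:.0f} MB/s "
      f"= {mbyte_to_gbit_per_s(smb_write_mbyte):.1f} Gbit/s "
      f"({smb_write_mbyte / iperf3_mbyte:.0%} of the iperf3 rate)")
```

On these numbers the SMB copy runs at roughly a fifth of the ~980 MB/s that iperf3 pushed over the same link, so the network on its own does not look like the limiting factor.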

My pool is about 84% full and the two vdevs are a bit unbalanced, which I assume isn't helping. The configuration and some tests I've done are below.
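To put numbers on "a bit unbalanced", here is a small sketch that computes how full the pool and each vdev are from the alloc/free columns of the `zpool iostat` output further down. The "raidz2 #1" / "raidz2 #2" labels are my own numbering in the order zpool lists them; the unit (T) cancels out in the ratio.

```python
# Fill level of the pool and of each raidz2 vdev, using the alloc/free
# columns from the zpool iostat output in this post (units cancel out).

capacity = {
    "tank (pool)": (64.1, 12.3),   # (alloc, free)
    "raidz2 #1": (22.1, 10.6),     # hypothetical label: first raidz2 listed
    "raidz2 #2": (42.0, 1.68),     # hypothetical label: second raidz2 listed
}


def percent_full(alloc: float, free: float) -> float:
    """Percentage of space allocated: alloc / (alloc + free)."""
    return 100.0 * alloc / (alloc + free)


for name, (alloc, free) in capacity.items():
    print(f"{name:12s} {percent_full(alloc, free):5.1f}% full")
```

With these figures the pool comes out at about 84%, the first raidz2 at about 68% and the second at about 96%, with only 1.68T left free on it.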

Any pointers on where I can start with this would be much appreciated.

iperf3 results:

Connecting to host NAS, port 5201

[ 5] local PC port 40404 connected to NAS port 5201

| ID | Interval | Transfer | Bitrate | Retr | Cwnd |
|---|---|---|---|---|---|
| [ 5] | 0.00-1.00 sec | 944 MBytes | 7.92 Gbits/sec | 0 | 1.50 MBytes |
| [ 5] | 1.00-2.00 sec | 952 MBytes | 7.99 Gbits/sec | 0 | 1.60 MBytes |
| [ 5] | 2.00-3.00 sec | 954 MBytes | 8.00 Gbits/sec | 0 | 1.60 MBytes |
| [ 5] | 3.00-4.00 sec | 952 MBytes | 7.99 Gbits/sec | 0 | 1.60 MBytes |
| [ 5] | 4.00-5.00 sec | 954 MBytes | 8.00 Gbits/sec | 0 | 1.60 MBytes |
| [ 5] | 5.00-6.00 sec | 952 MBytes | 7.99 Gbits/sec | 0 | 1.60 MBytes |
| [ 5] | 6.00-7.00 sec | 784 MBytes | 6.58 Gbits/sec | 0 | 1.60 MBytes |
| [ 5] | 7.00-8.00 sec | 952 MBytes | 7.99 Gbits/sec | 0 | 1.68 MBytes |
| [ 5] | 8.00-9.00 sec | 952 MBytes | 7.99 Gbits/sec | 0 | 1.68 MBytes |
| [ 5] | 9.00-10.00 sec | 954 MBytes | 8.00 Gbits/sec | 0 | 1.68 MBytes |

| ID | Interval | Transfer | Bitrate | Retr | |
|---|---|---|---|---|---|
| [ 5] | 0.00-10.00 sec | 9.13 GBytes | 7.84 Gbits/sec | 0 | sender |
| [ 5] | 0.00-10.00 sec | 9.13 GBytes | 7.84 Gbits/sec | | receiver |
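The iperf3 numbers are internally consistent if Transfer is read in binary units (1 MByte = 2^20 bytes) and Bitrate in decimal units (1 Gbit = 10^9 bits). A minimal sketch that checks this against the per-interval lines above; that unit convention is an assumption inferred from the numbers, and the names are mine.

```python
# Cross-check the iperf3 output. Assumption (consistent with the figures):
# Transfer uses binary units (MiB = 2**20 bytes), Bitrate uses decimal Gbit/s.

intervals = [  # (MBytes transferred, reported Gbits/sec), one per second
    (944, 7.92), (952, 7.99), (954, 8.00), (952, 7.99), (954, 8.00),
    (952, 7.99), (784, 6.58), (952, 7.99), (952, 7.99), (954, 8.00),
]

MIB = 2**20


def mib_to_gbit(mib: float, seconds: float = 1.0) -> float:
    """Convert MiB moved in `seconds` to a decimal Gbit/s rate."""
    return mib * MIB * 8 / seconds / 1e9


# Reported bitrates are rounded to two decimals, so each should agree within 0.005.
for mib, reported in intervals:
    assert abs(mib_to_gbit(mib) - reported) <= 0.005, (mib, reported)

total_mib = sum(mib for mib, _ in intervals)
total_gib = total_mib / 1024
average_gbit = mib_to_gbit(total_mib, seconds=10.0)

print(f"total: {total_mib} MiB = {total_gib:.2f} GiB")   # → total: 9350 MiB = 9.13 GiB
print(f"average: {average_gbit:.2f} Gbit/s")            # → average: 7.84 Gbit/s
```

The per-second figures add up to the 9.13 GBytes and 7.84 Gbits/sec on the summary lines, with the one dip to 6.58 Gbits/sec in the 6-7 second interval pulling the average slightly below 8.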

SMB transfer:

zpool iostat during transfer:

| pool / vdev / device | capacity alloc | capacity free | operations read | operations write | bandwidth read | bandwidth write |
|---|---|---|---|---|---|---|
| tank | 64.1T | 12.3T | 1.65K | 2.72K | 85.2M | 792M |
| raidz2 | 22.1T | 10.6T | 728 | 1.48K | 29.1M | 441M |
| gptid/adb397c7-404f-42a1-afd6-3986c9061c66 | - | - | 187 | 268 | 6.78M | 73.9M |
| gptid/7abaa948-54eb-478e-b597-5bc52e6d844d | - | - | 157 | 268 | 5.65M | 73.6M |
| gptid/75642685-c7ce-4dc5-a137-72b8220e76f2 | - | - | 120 | 257 | 6.04M | 74.0M |
| gptid/13751d73-0ffe-4ee4-b9fa-fa7086780331 | - | - | 87 | 179 | 3.50M | 72.3M |
| gptid/c9445a4c-de1e-41b0-ad35-9ad1f9be226b | - | - | 52 | 255 | 1.60M | 74.0M |
| gptid/89f020e0-e3b2-4773-97e3-c535b64de5c3 | - | - | 122 | 290 | 5.56M | 73.8M |
| raidz2 | 42.0T | 1.68T | 961 | 1.24K | 56.4M | 353M |
| gptid/ab4a3e5c-06ca-46fb-9ae3-dc7b5cd71273 | - | - | 226 | 255 | 11.3M | 58.6M |
| gptid/25367327-9a02-4bb9-80b4-b4add8d7649f | - | - | 140 | 161 | 9.18M | 58.3M |
| gptid/8b7dfef7-2b36-4512-b485-953ed5c28a32 | - | - | 130 | 220 | 5.95M | 58.5M |
| gptid/c18e167a-b3ce-4d31-9071-e1506756f7f8 | - | - | 93 | 220 | 5.39M | 57.4M |
| gptid/b6000342-9df8-4bea-9779-971c92b6353f | - | - | 181 | 181 | 13.8M | 59.7M |
| gptid/2482a8a9-7805-470b-98f3-0417664d4c32 | - | - | 189 | 230 | 10.9M | 60.3M |
| logs | - | - | - | - | - | - |
| mirror | 2.27M | 110G | 0 | 0 | 0 | 0 |
| gptid/733a212b-d04f-4a8c-bdfc-495b44c4e3f2 | - | - | 0 | 0 | 0 | 0 |
| gptid/fa9f0879-b80e-4528-ae5c-66fabb42c15d | - | - | 0 | 0 | 0 | 0 |
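As a sanity check on this iostat sample, a minimal sketch that adds up the per-disk write bandwidth for each raidz2 vdev and compares it with the vdev and pool totals, then gives a rough data-versus-parity split. The "raidz2 #1" / "raidz2 #2" labels are my own numbering, and the split assumes full-stripe writes on each 6-wide raidz2 (4 data + 2 parity), ignoring padding and metadata; real ratios depend on recordsize and ashift.

```python
# Sanity-check the zpool iostat write bandwidth: per-disk figures should add
# up to each raidz2 vdev, and the two vdevs to the pool total (all in the
# "M" units zpool iostat printed; small differences are display rounding).

vdev_disk_writes = {
    "raidz2 #1": [73.9, 73.6, 74.0, 72.3, 74.0, 73.8],  # reported vdev total: 441M
    "raidz2 #2": [58.6, 58.3, 58.5, 57.4, 59.7, 60.3],  # reported vdev total: 353M
}
POOL_WRITE_REPORTED = 792.0

vdev_sums = {name: sum(disks) for name, disks in vdev_disk_writes.items()}
pool_sum = sum(vdev_sums.values())

for name, total in vdev_sums.items():
    print(f"{name}: {total:.1f}M written ({total / pool_sum:.0%} of pool writes)")
print(f"pool: {pool_sum:.1f}M summed vs {POOL_WRITE_REPORTED:.0f}M reported")

# Rough data-vs-parity split, ASSUMING full-stripe writes on 6-wide raidz2
# (4 data + 2 parity disks) and ignoring padding and metadata overhead.
DATA_FRACTION = 4 / 6
print(f"approx. data portion: {pool_sum * DATA_FRACTION:.0f}M")
```

In this sample the two vdevs add up to about 794M against the 792M shown for tank, and the fuller vdev is still taking roughly 44% of the writes.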