Wondering if these are PCIe errors on a board with AER squelched. …

Another thing that crossed my mind: have you tested for memory errors?

I was able to dig up problems much more similar to yours, though they only involve mirrors, not special vdev mirrors.

https://www.reddit.com/r/zfs/comments/vlfy2o/high_cpuio_leads_to_identical_checksum_errors/

https://www.reddit.com/r/zfs/comments/rqsion/identical_zfs_checksum_errors_on_mirrored_nvme/

No resolution to these

And this: https://www.reddit.com/r/zfs/comments/od3t32/help_wanted_zfs_mirror_always_has_the_same_number/

In which case replacing both sata cables and a disk seemed to work.

And this: Checksum errors, need help diagnosing. | TrueNAS Community

- Updated my BIOS

- Replaced my power supply (the original one was an off-brand that required SATA adapters; that felt like my most egregious hardware part)

Perhaps unplug and reseat the power connections, like the adapter you used.

Hi wendell, thanks for chiming in.

Unless I’m looking in the wrong place, I don’t think that is happening currently. I do have an add-in card for the additional SATA slots, but I’m not seeing AER errors.

    $ sudo dmesg | grep -i AER
    [ 0.728018] acpi PNP0A08:00: _OSC: OS now controls [PCIeHotplug SHPCHotplug PME AER PCIeCapability LTR DPC]
    [ 1.321877] pcieport 0000:00:01.2: AER: enabled with IRQ 28
    [ 1.322065] pcieport 0000:00:01.3: AER: enabled with IRQ 29
    [ 1.322221] pcieport 0000:00:03.1: AER: enabled with IRQ 30
    [ 1.322439] pcieport 0000:00:07.1: AER: enabled with IRQ 32
    [ 1.322598] pcieport 0000:00:08.1: AER: enabled with IRQ 33
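
For what it's worth, lines like these only show that AER reporting is enabled on those ports. An actual error report would normally mention an error explicitly, for example a `PCIe Bus Error: severity=...` line. A minimal sketch of a filter for that, assuming those message patterns (the function name `aer_error_lines` is made up for illustration, and the exact kernel wording varies between versions):

```shell
# Keep only kernel log lines that look like actual AER error reports.
# "AER: enabled with IRQ ..." lines are dropped because they contain no "error".
aer_error_lines() {
    grep -Ei 'PCIe Bus Error|AER:.*error' || true   # no match is not a failure
}

# Usage (dmesg usually needs root):
#   sudo dmesg | aer_error_lines
```

Empty output from this filter only means the log holds no reports matching these patterns, which is weaker than proving the link is clean.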

I’m especially intrigued by the high CPU/IO loads. I am running into some aberrant behavior: my NVIDIA GPU gets evicted from the Docker container runtime, resulting in high CPU loads on transcode operations, and Backblaze consumes lots of CPU resources even when it has had nothing to back up in a while. Both are suspect.

I ran memtest86 and found no memory errors. I reseated cables to the drives and memory for good measure.

After having the cache detached, the CKSUM errors have not gone away and they are still mirrored across devices.

At this point I am bowing out, and I thank you all for thinking along. My SO overruled me: we will simply rebuild the pool, for both our sanity. If I had had a bit longer with this, I would have opened an issue, but as I won’t be reintroducing special devices in the new pool, it seems wrong to open an issue for a pool that can’t reproduce the problem.

Thank you all for your input!

I conclude that we successfully rebuilt the pool together.

    zpool status
      pool: main
     state: ONLINE
    config:
        NAME          STATE     READ WRITE CKSUM
        main          ONLINE       0     0     0
          raidz2-0    ONLINE       0     0     0
            sda       ONLINE       0     0     0
            sdb       ONLINE       0     0     0
            sdc       ONLINE       0     0     0
            sdd       ONLINE       0     0     0
            sdf       ONLINE       0     0     0
            sdg       ONLINE       0     0     0
        logs
          nvme1n1     ONLINE       0     0     0
        cache
          sdi         ONLINE       0     0     0
          sdj         ONLINE       0     0     0
        spares
          sde         AVAIL
          sdh         AVAIL
    errors: No known data errors

I swapped around some duties here, turning the previous cache device into a log device and the special devices into cache devices. It might still end up showing hardware issues, but at least now I can safely detach devices from the pool.

Thanks again for all the input and support!

if it were me…

I’d export the pool

and import it with

zpool import poolname -d /dev/disk/by-id/
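
Spelled out as a small sketch: export first, then import while pointing `zpool import` at the by-id directory so the vdevs show up under stable names. The wrapper name `reimport_by_id` and the `ZPOOL` override are assumptions added here for previewing; the two `zpool` subcommands themselves are the standard ones.

```shell
# Re-import a pool so its vdevs are listed by /dev/disk/by-id names.
# Set ZPOOL=echo to print the arguments instead of running zpool.
reimport_by_id() {
    pool=$1
    ${ZPOOL:-zpool} export "$pool" &&
    ${ZPOOL:-zpool} import -d /dev/disk/by-id/ "$pool"
}

# Preview:        ZPOOL=echo reimport_by_id main
# Run (as root):  reimport_by_id main
```

Nothing should be using the pool while it is exported, so stop services that touch it first.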

Thanks a bunch for the suggestion!

    $ zpool status
      pool: main
     state: ONLINE
      scan: scrub repaired 0B in 00:07:10 with 0 errors on Tue Jan 3 14:46:47 2023
    config:
        NAME                          STATE     READ WRITE CKSUM
        main                          ONLINE       0     0     0
          raidz2-0                    ONLINE       0     0     0
            wwn-0x50014ee2bfb9ebed    ONLINE       0     0     0
            wwn-0x50014ee26a640583    ONLINE       0     0     0
            wwn-0x5000c500c3d1a6f1    ONLINE       0     0     0
            wwn-0x5000c500c3d6963d    ONLINE       0     0     0
            wwn-0x50014ee2bfba002a    ONLINE       0     0     0
            wwn-0x5000c500c3d165b1    ONLINE       0     0     0
        logs
          nvme-nvme.1987-3139323938323239303030313238353535304334-466f726365204d50363030-00000001  ONLINE  0  0  0
        cache
          wwn-0x5001b444a7b30dc7      ONLINE       0     0     0
          wwn-0x5001b444a7b30db5      ONLINE       0     0     0
        spares
          wwn-0x50014ee25cc3127d      AVAIL
          wwn-0x50014ee25cc2f341      AVAIL
    errors: No known data errors

What happens if my metadata device fills up? Is it possible?

Or does it write to the pool instead?

I’m guessing a bunch of node_modules/ directories filled this up. I excluded them in Robocopy, but /PURGE (or /MIR) doesn’t remove them if they’re excluded, so they’re artificially bloating my metadata drives right now.

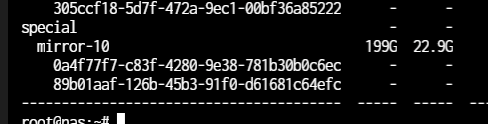

I have 1TB metadata on my other NAS, but I thought 200+ gigs would be plenty here. It looks like I might need to add some mirrors.

ZFS starts writing to the pool as it normally would without a special vdev once the special vdev is 75% full, leaving a 25% reserve for general performance reasons.

You can change the reserve percentage in /etc/modprobe.d/zfs.conf by adding, for example, options zfs zfs_special_class_metadata_reserve_pct=10, which should still be perfectly fine. The larger the drives are, the less reserve is needed to maintain sanity in free space availability that allows ZFS to function quickly.
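
To make the numbers concrete, here is a rough sketch of where the cutoff lands for a given special vdev size and reserve percentage. `special_cutoff` is a hypothetical helper, and 200 is just the approximate special vdev size mentioned earlier in the thread. As far as I can tell from the OpenZFS module parameter docs, the default for `zfs_special_class_metadata_reserve_pct` is 25, and the reserve is described as gating small data blocks so that space stays available for metadata; treat that reading as an assumption.

```shell
# Print the allocation (same unit as the size) at which the reserve kicks in.
special_cutoff() {
    size=$1
    reserve_pct=$2
    echo $(( size * (100 - reserve_pct) / 100 ))
}

special_cutoff 200 25   # prints 150
special_cutoff 200 10   # prints 180

# Persistent setting, in /etc/modprobe.d/zfs.conf (the value is a bare integer):
#   options zfs zfs_special_class_metadata_reserve_pct=10
# Runtime change, as root:
#   echo 10 > /sys/module/zfs/parameters/zfs_special_class_metadata_reserve_pct
```

So dropping the reserve from 25 to 10 on a 200G special vdev buys about 30G more before the cutoff.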

Calculating actual space in ZFS is a little bit complicated even before metadata is involved, so I refuse to comment on what reported numbers actually mean and why napkin math doesn’t give entirely expected results. Because fuck if I know.

The important thing for me is knowing the pool doesn’t fail when metadata drives fill up.

I wish I could dedicate metadata only to specific datasets like I can with dedupe. Is that possible?

Yes it is! I can find the exact way to do that in a bit when I’m not on the phone, but essentially you set something on the datasets you don’t want included to “none” or something like that.

I am not finding an obvious way to exclude a dataset from utilizing a special vdev. I take it back; I may have gotten confused with some other things, like setting primarycache=none|metadata|all on a dataset, or excluding dedupe data by changing the zfs_ddt_data_is_special module parameter (though this is system-wide).
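
One per-dataset knob that does exist is the `special_small_blocks` property: setting it to 0 on a dataset stops that dataset's small file blocks from landing on the special vdev. As far as I know it does not move that dataset's metadata back to the regular vdevs, so it is only a partial answer. A minimal sketch; the wrapper name, the `ZFS` override, and the dataset name `main/scratch` are made up for illustration:

```shell
# Set the small-block cutoff for one dataset (0 disables small-block placement).
# Set ZFS=echo to print the arguments instead of running zfs.
set_small_blocks() {
    dataset=$1
    size=$2
    ${ZFS:-zfs} set "special_small_blocks=$size" "$dataset"
}

# Preview:        ZFS=echo set_small_blocks main/scratch 0
# Run (as root):  set_small_blocks main/scratch 0
```

The property only affects blocks written after the change; existing blocks stay where they are until rewritten.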

Hello. I have a basic understanding of what is going on here, but I’m not grasping some of it. My situation is that I don’t even have a ZFS pool yet, but I am looking to create one with new drives at some point (my RAID array is full). If I create a histogram of the files on this array, is there any way I can calculate how large the SSDs would need to be to store the metadata (to figure things out before even migrating to ZFS)? I wouldn’t be storing any small-block files on it, just metadata. My mind is going all over the place thinking about this.

It might be better to see real data. I don’t know how to get the file stats, but I have a mix of games, long video streams, short videos, pictures, and code projects as well as documents, music compositions, you name it.

All that data is currently 8.22TB. This zpool has 204GB of metadata. Unless you have 8TB of super tiny files, you’re probably fine. The quickest way to test is to copy your files. I’m assuming you have an extra copy of your data to play with.
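
As a back-of-the-envelope check on those numbers, the ratio works out to roughly 2.5%, treating 1TB as 1000GB (an assumption; the tools may be reporting binary units). `metadata_pct` is a hypothetical helper:

```shell
# Metadata as a percentage of data: first argument in GB, second in TB.
metadata_pct() {
    awk -v m="$1" -v d="$2" 'BEGIN { printf "%.1f\n", 100 * m / (d * 1000) }'
}

metadata_pct 204 8.22   # prints 2.5
```

This is one pool with one mix of files, so it is a data point rather than a sizing rule; a pool full of tiny files would land much higher.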

I have an all-SSD array in this NAS. I’m only using metadata vdevs because I put four Optane drives in there as 2 mirrors. Those drives allow very low-latency access; otherwise, I wouldn’t have bothered. In my offsite HDD array, I used regular NAND flash SSDs.

Is that even true? I mean you should also have checksums with ZFS and if one record from a 2-way mirror has correct checksums and the other has not, then it should be obvious which record is the correct one?!

What I was describing was if a disk in a mirror fails or is removed, leaving only one disk. In which case ZFS can tell if there is an error, but there is no practical way to fix regular data.

One thing I didn’t consider, however, is that normal metadata/files stored within metadata blocks should have an extra copy by default, so that should still be correctable. I don’t actually know if this is still the case when using special vdevs. If there is an extra copy, then there’s a chance that things are still correctable, assuming the data corruption isn’t spread across areas of the disk affecting both copies. Use of special small blocks to put regular data on the special vdev would still likely be at risk.

Would be great if the special dev can be set as just a cache for metadata instead of dev failure = pool failure

ZFS has the metadata copies feature, but it’d probably take a bit of work to make it do that when special vdevs are in play. In practice I don’t find it too off-putting, as the special vdevs can be as redundant as the normal vdevs, or even more so.

just a cache for metadata

That’s basically ARC. Or if it doesn’t fit in that, an L2ARC with at least secondarycache=metadata on the datasets. Make sure /sys/module/zfs/parameters/l2arc_rebuild_enabled is set to 1 so it’s persistent on reboot. This is usually set as the default.
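
A minimal sketch of that check, assuming a Linux box with the zfs module loaded. The helper name `l2arc_persistent` and the dataset name in the usage comment are hypothetical; the parameter path is the one quoted above.

```shell
# Succeeds when the given parameter file reads 1 (persistent L2ARC enabled).
# The path can be overridden as the first argument, which also helps testing.
l2arc_persistent() {
    f=${1:-/sys/module/zfs/parameters/l2arc_rebuild_enabled}
    [ -r "$f" ] && [ "$(cat "$f")" = "1" ]
}

# Usage:
#   l2arc_persistent && echo "L2ARC contents should survive a reboot"
#   zfs set secondarycache=metadata main/media   # dataset name is hypothetical
```

Setting `secondarycache=metadata` per dataset limits what gets fed into the L2ARC from that dataset; datasets left at the default keep feeding it data blocks as well.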

An L2ARC used for metadata only may benefit from tuning l2arc_headroom and the related fill-rate parameters so that it grabs metadata from ARC more aggressively. You are on your own for that, though, and it is easy to make things worse than the defaults if you don’t know how to verify whether a change actually helped.

Hi I’m new here and was hoping to get some assistance troubleshooting.

I followed the guide in the OP by wendell and have added a special mirror vdev to my zpool, which is made up of 2-way mirrors. I set my record size to 1M and small blocks to 512K, as the overwhelming majority of content is large video files. I believe the metadata is going correctly to the special device, but the small blocks are not, and I have moved all data off the pool and back on again twice to attempt to rebalance.

here is my block size histogram:

| Block size | psize Count | psize Size | psize Cum. | lsize Count | lsize Size | lsize Cum. | asize Count | asize Size | asize Cum. |
|---|---|---|---|---|---|---|---|---|---|
| 512 | 147K | 73.3M | 73.3M | 147K | 73.3M | 73.3M | 0 | 0 | 0 |
| 1K | 134K | 161M | 235M | 134K | 161M | 235M | 0 | 0 | 0 |
| 2K | 136K | 372M | 607M | 136K | 372M | 607M | 0 | 0 | 0 |
| 4K | 325K | 1.36G | 1.95G | 118K | 646M | 1.22G | 467K | 1.82G | 1.82G |
| 8K | 157K | 1.53G | 3.48G | 109K | 1.20G | 2.42G | 400K | 3.38G | 5.20G |
| 16K | 129K | 2.69G | 6.17G | 190K | 3.74G | 6.16G | 142K | 2.84G | 8.04G |
| 32K | 145K | 6.37G | 12.5G | 115K | 5.16G | 11.3G | 137K | 5.92G | 14.0G |
| 64K | 116K | 10.2G | 22.8G | 102K | 8.97G | 20.3G | 140K | 12.1G | 26.1G |
| 128K | 164K | 25.5G | 48.3G | 319K | 43.6G | 63.9G | 165K | 25.7G | 51.8G |
| 256K | 128K | 47.7G | 96.0G | 55.4K | 19.4G | 83.4G | 129K | 47.9G | 99.6G |
| 512K | 404K | 297G | 393G | 37.2K | 26.3G | 110G | 404K | 297G | 397G |
| 1M | 23.6M | 23.6T | 24.0T | 24.1M | 24.1T | 24.2T | 23.6M | 23.6T | 24.0T |
| 2M | 0 | 0 | 24.0T | 0 | 0 | 24.2T | 0 | 0 | 24.0T |
| 4M | 0 | 0 | 24.0T | 0 | 0 | 24.2T | 0 | 0 | 24.0T |
| 8M | 0 | 0 | 24.0T | 0 | 0 | 24.2T | 0 | 0 | 24.0T |
| 16M | 0 | 0 | 24.0T | 0 | 0 | 24.2T | 0 | 0 | 24.0T |

and here is my zpool list -v output

| NAME | SIZE | ALLOC | FREE | CKPOINT | EXPANDSZ | FRAG | CAP | DEDUP | HEALTH | ALTROOT |
|---|---|---|---|---|---|---|---|---|---|---|
| Vault | 34.6T | 24.1T | 10.5T | - | - | 1% | 69% | 1.00x | ONLINE | - |
| mirror-0 | 16.4T | 8.77T | 7.59T | - | - | 0% | 53.60% | - | ONLINE | |
| wwn-0x5000c500e4dd4620 | - | - | - | - | - | - | - | - | ONLINE | |
| wwn-0x5000c500e4f28be1 | - | - | - | - | - | - | - | - | ONLINE | |
| mirror-1 | 5.45T | 5.14T | 316G | - | - | 3% | 94.30% | - | ONLINE | |
| wwn-0x5000c500e55271b9 | - | - | - | - | - | - | - | - | ONLINE | |
| wwn-0x5000c500dd1032e3 | - | - | - | - | - | - | - | - | ONLINE | |
| mirror-2 | 5.45T | 4.96T | 508G | - | - | 3% | 90.90% | - | ONLINE | |
| wwn-0x50014ee2bae693cf | - | - | - | - | - | - | - | - | ONLINE | |
| wwn-0x5000c500e5526172 | - | - | - | - | - | - | - | - | ONLINE | |
| mirror-3 | 5.45T | 5.15T | 306G | - | - | 4% | 94.50% | - | ONLINE | |
| wwn-0x5000c500dd103bdd | - | - | - | - | - | - | - | - | ONLINE | |
| wwn-0x5000c500dd10322f | - | - | - | - | - | - | - | - | ONLINE | |
| special | - | - | - | - | - | - | - | - | - | |
| mirror-4 | 1.86T | 84.2G | 1.78T | - | - | 0% | 4.42% | - | ONLINE | |
| nvme0n1 | - | - | - | - | - | - | - | - | ONLINE | |
| nvme1n1 | - | - | - | - | - | - | - | - | ONLINE | |

I would expect several hundred GB on the special vdev but only have 84.2gb there. Is there anything I’m missing?
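
One possible reading of the histogram, offered as a sketch rather than a diagnosis. It rests on two assumptions that are worth verifying: that each histogram row is a power-of-two bin labeled by its lower bound (so the 512K row holds blocks from 512K up to just under 1M), and that the small-block cutoff is compared against the on-disk (psize) block size. Under those assumptions, only the rows up to and including 256K are fully eligible for a 512K cutoff. The helper name `eligible_psize_gib` is made up; its input is the block size and psize Size columns from the histogram above.

```shell
# Sum the psize totals of histogram bins that lie entirely at or below a cutoff.
# stdin: lines of "<bin label> <psize total>", e.g. "256K: 47.7G".
eligible_psize_gib() {
    awk -v limit="$1" '
    # Convert sizes such as 512, 4K, 73.3M, 1.36G, 23.6T to bytes.
    function bytes(s,   n, u, m) {
        n = s + 0                    # leading numeric part
        u = substr(s, length(s))     # last character, possibly a unit
        m = 1
        if (u == "K") m = 1024
        else if (u == "M") m = 1024 ^ 2
        else if (u == "G") m = 1024 ^ 3
        else if (u == "T") m = 1024 ^ 4
        return n * m
    }
    {
        sub(/:$/, "", $1)
        lower = bytes($1)
        # The bin covers [lower, 2*lower - 1]; count it only if all of it fits.
        if (2 * lower - 1 <= bytes(limit)) total += bytes($2)
    }
    END { printf "%.1f\n", total / 1024 ^ 3 }'
}

eligible_psize_gib 512K <<'EOF'
512: 73.3M
1K: 161M
2K: 372M
4K: 1.36G
8K: 1.53G
16K: 2.69G
32K: 6.37G
64K: 10.2G
128K: 25.5G
256K: 47.7G
512K: 297G
1M: 23.6T
EOF
# prints 95.9
```

That figure is in the same ballpark as the 84.2G allocated on the special mirror, which would suggest the 297G in the 512K row is mostly compressed 1M records that sit above the cutoff. The histogram also counts metadata blocks, so the comparison is only approximate.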

Additionally, I have read that you can increase the record size to as high as 16MB, but that it affects tunables. Is there a resource that shows what exact impact it would have to increase it to, for example, 4 or 8MB?

Thanks in advance

I just bought 18 more of these 905ps at work. Lol.

Long live Optane.