Sure. To have a successful system you need a motherboard that supports IOMMU (AMD) or VT-d (Intel). While most modern motherboards have this support, some boards lack the necessary options in the BIOS even though the board specifications list IOMMU/VT-d. Asus is one of the manufacturers that list the support, yet some of their boards just flat out don't work... though from what I've seen, this is mostly their AMD motherboards.
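One way to confirm the board really enables the IOMMU, rather than trusting the spec sheet, is to see whether a Linux host populates its IOMMU groups. The sketch below assumes a Linux host where the kernel exposes these groups under `/sys/kernel/iommu_groups`. An empty result usually means the IOMMU is switched off in the BIOS or not enabled on the kernel command line (for example `intel_iommu=on` on Intel systems).

```python
from pathlib import Path


def list_iommu_groups(root="/sys/kernel/iommu_groups"):
    """Return {group_number: [pci_address, ...]} read from sysfs.

    An empty dict means no IOMMU groups were found at `root`.
    """
    groups = {}
    base = Path(root)
    if not base.is_dir():
        return groups
    for group_dir in base.iterdir():
        devices_dir = group_dir / "devices"
        if not devices_dir.is_dir():
            continue
        # Each entry in devices/ is named after a PCI address such as 0000:01:00.0
        groups[int(group_dir.name)] = sorted(d.name for d in devices_dir.iterdir())
    return dict(sorted(groups.items()))


if __name__ == "__main__":
    found = list_iommu_groups()
    if not found:
        print("No IOMMU groups found: check the BIOS IOMMU/VT-d setting and kernel parameters")
    for number, devices in found.items():
        print(f"group {number}: {', '.join(devices)}")
```

If a board advertises IOMMU support but this stays empty after the BIOS option is enabled, that is the kind of board described above.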

Next, you need a CPU that supports the same standards (IOMMU/VT-d). On the AMD side, every processor they make is ready for virtualization. On the Intel side most are, but some are not: an Intel i5-2500K, for example, will not work because it lacks VT-d, while some of the newer K-series CPUs do support it.
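A quick first check on a Linux machine is the CPU flags in `/proc/cpuinfo`. Be aware that `vmx` (Intel VT-x) and `svm` (AMD-V) only show basic CPU virtualization; they do not prove VT-d/AMD-Vi support. The i5-2500K mentioned above reports `vmx` but still lacks VT-d, so treat this as a necessary but not sufficient test and confirm the IOMMU separately (for example by checking that `/sys/kernel/iommu_groups` is populated). A minimal sketch:

```python
def cpu_virt_flags(cpuinfo_text):
    """Return which CPU virtualization flags appear: 'vmx' (Intel VT-x) and/or 'svm' (AMD-V).

    Note: these flags say nothing about VT-d / AMD-Vi (IOMMU) support.
    """
    found = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            found |= {"vmx", "svm"} & set(value.split())
    return found


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            flags = cpu_virt_flags(f.read())
        print("CPU virtualization flags:", ", ".join(sorted(flags)) or "none")
    except FileNotFoundError:
        print("/proc/cpuinfo not found (not a Linux host?)")
```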

My point is that you need to research the hardware to make sure it has the necessary functionality and support for virtualization. It's better if you can find someone who has actually used that hardware successfully, rather than just taking the manufacturer's word for it... because sometimes they lie. :)

Then it is important to have a GPU that will pass through. While any discrete GPU will work, the preferred choice is an AMD-based card because it works with minimal effort. An integrated GPU is normally fine for the host, but if your CPU doesn't offer an integrated GPU, you will need two discrete GPUs: one for the host and one for the guest.
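Whether a particular card passes through cleanly also depends on what shares its IOMMU group, since every device in a group generally has to go to the same guest. A card's video and HDMI-audio functions normally share one PCI slot address (e.g. `0000:01:00.0` and `0000:01:00.1`). The sketch below, using hypothetical addresses, flags any other device sitting in the GPU's group. It is a simplification: PCIe bridges or root ports can also appear in a group and are often harmless, so treat the result as a list to inspect rather than a final verdict.

```python
def slot_of(pci_address):
    """'0000:01:00.1' -> '0000:01:00' (domain:bus:device, dropping the function number)."""
    return pci_address.rsplit(".", 1)[0]


def group_blockers(gpu_address, group_devices):
    """Return devices in the GPU's IOMMU group that are not functions of the same card."""
    gpu_slot = slot_of(gpu_address)
    return [dev for dev in group_devices if slot_of(dev) != gpu_slot]


if __name__ == "__main__":
    # Hypothetical group contents: GPU video + audio, plus an unrelated device.
    group = ["0000:01:00.0", "0000:01:00.1", "0000:02:00.0"]
    print(group_blockers("0000:01:00.0", group))  # ['0000:02:00.0']
```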

Nvidia... one of the reasons AMD is preferred for GPU passthrough is that Nvidia doesn't like virtualization (for whatever reason) and has added a check to its video drivers that detects whether the system it is installing on (the guest) is virtualized. If it is, the driver refuses to work and reports an error (the well-known "Code 43" in Windows Device Manager). Nvidia has been asked many times to fix this but has refused to do so. There are workarounds to get an Nvidia GPU to pass through, but they come down to fooling the driver into thinking it is a bare-metal install by adding additional settings to the VM, which cause a performance hit.
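For a libvirt/KVM setup, the usual form of those extra settings is to hide the KVM signature (`<kvm><hidden state='on'/></kvm>`) and set a custom Hyper-V `vendor_id` inside the domain's `<features>` element. The sketch below edits a domain XML string with Python's standard `xml.etree.ElementTree`; it is not necessarily what any given guide or the post above uses. The default vendor string is an arbitrary placeholder, and the assumed workflow is to dump the VM with `virsh dumpxml`, apply the change, and load it back with `virsh define`.

```python
import xml.etree.ElementTree as ET


def _child(parent, tag):
    """Return the first <tag> child of parent, creating it if it is missing."""
    node = parent.find(tag)
    if node is None:
        node = ET.SubElement(parent, tag)
    return node


def hide_hypervisor(domain_xml, vendor_id="1234567890ab"):
    """Add libvirt's KVM-hiding and Hyper-V vendor_id settings to a domain XML string.

    vendor_id is an arbitrary placeholder; libvirt accepts at most 12 characters.
    """
    if not 1 <= len(vendor_id) <= 12:
        raise ValueError("the Hyper-V vendor_id value must be 1-12 characters")
    root = ET.fromstring(domain_xml)
    features = _child(root, "features")
    # <kvm><hidden state='on'/></kvm> hides the KVM signature from the guest
    _child(_child(features, "kvm"), "hidden").set("state", "on")
    # <hyperv><vendor_id state='on' value='...'/></hyperv> replaces the Hyper-V vendor string
    vendor = _child(_child(features, "hyperv"), "vendor_id")
    vendor.set("state", "on")
    vendor.set("value", vendor_id)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical minimal domain definition for illustration only.
    print(hide_hypervisor("<domain type='kvm'><name>win10</name></domain>"))
```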

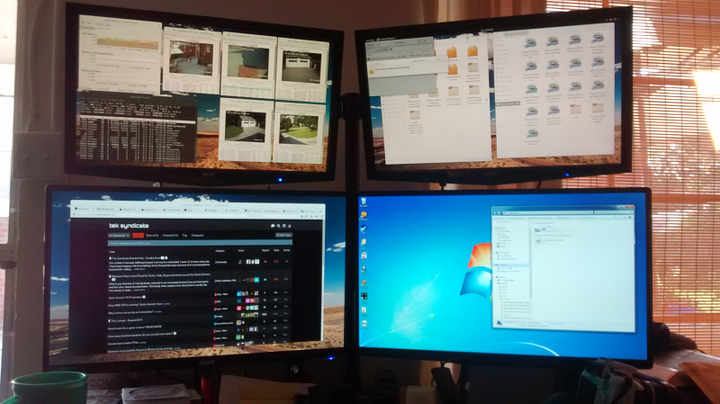

So, on to my system... I wanted a guest system that was very robust and stable. We all know how unstable Windows can be when it lacks resources, so I wanted to mimic a bare-metal install, which means passing through the same resources I would give a Windows machine running on bare metal.

For me, that means multiple cores, lots of RAM, and a powerful GPU. My system consists of the following:

ASRock Fatal1ty 990FX motherboard

AMD FX-8370 CPU

32 GB of DDR3 RAM

3 - R9 270X GPUs

2 - 1 gigabit NICs

1 - 240 GB SSD for the host

1 - 1 TB HDD for the guest

Of course, powering a high-wattage CPU and three GPUs takes a lot of power, so I use a Seasonic 1050 W PSU, and since AMD CPUs run hot, a Corsair H100 cooler keeps things cool.

So my guest gets these resources, either passed through directly or provided virtually:

6 CPU cores, 16 GB of RAM, 1 R9 270X, 1 gigabit NIC, a complete 1 TB hard drive, and a USB mouse, keyboard, and gamepad.
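The split is simple arithmetic: the FX-8370 has 8 cores and the host has 32 GB of RAM, so giving the guest 6 cores and 16 GB leaves 2 cores and 16 GB for the Linux host. The sketch below makes that budget explicit. The rule that the host must keep at least 1 core and 1 GB is an assumption chosen for illustration, and it treats guest cores as dedicated (matching the bare-metal mindset here) even though KVM itself can overcommit vCPUs.

```python
from dataclasses import dataclass


@dataclass
class Resources:
    cores: int
    ram_gb: int


def remaining_for_host(total, guests):
    """Subtract every guest's allocation from the host total.

    Raises ValueError if the host would be left with less than 1 core or 1 GB
    (an illustrative threshold, not a hard KVM limit).
    """
    left = Resources(
        cores=total.cores - sum(g.cores for g in guests),
        ram_gb=total.ram_gb - sum(g.ram_gb for g in guests),
    )
    if left.cores < 1 or left.ram_gb < 1:
        raise ValueError(f"guests over-commit the host: {left}")
    return left


if __name__ == "__main__":
    host = Resources(cores=8, ram_gb=32)   # FX-8370 (8 cores), 32 GB DDR3
    guest = Resources(cores=6, ram_gb=16)  # the Windows guest described above
    print(remaining_for_host(host, [guest]))  # Resources(cores=2, ram_gb=16)
```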

So you can see the resources I give the guest are very much like a medium-power Windows machine built on bare metal, and virtualized it works exactly the same as a bare-metal install. Yet I have the flexibility to add or remove resources, in many cases without ever touching the hardware: I can easily change the amount of RAM, the number of CPU cores, or the devices being passed through virtually. I can also create multiple VMs that all run on the host at the same time, as long as I don't pass the same physical device through to two VMs at once, which would cause exactly the problem your topic originally asked about. Virtually, though, I can do just about anything, with as many instances as I like or as my real hardware can handle. (I hope that makes sense.)
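The one hard rule in that paragraph, never hand the same physical device to two running VMs, is easy to check mechanically. In the sketch below the VM names, PCI addresses, and the `{name: (is_running, devices)}` layout are all hypothetical; in practice the data would come from your VM definitions. It reports every physical device claimed by more than one running VM, while stopped VMs may reference a device freely.

```python
from collections import defaultdict


def passthrough_conflicts(vms):
    """vms: {vm_name: (is_running, [pci_address, ...])}.

    Return {pci_address: [vm_name, ...]} for each device claimed by 2+ running VMs.
    """
    claims = defaultdict(list)
    for name, (running, devices) in vms.items():
        if not running:
            continue  # a stopped VM does not hold its devices
        for dev in set(devices):  # ignore duplicates within one VM
            claims[dev].append(name)
    return {dev: names for dev, names in claims.items() if len(names) > 1}


if __name__ == "__main__":
    vms = {
        "windows": (True, ["0000:01:00.0"]),
        "linux-test": (True, ["0000:01:00.0"]),
        "old-vm": (False, ["0000:02:00.0"]),
    }
    print(passthrough_conflicts(vms))  # {'0000:01:00.0': ['windows', 'linux-test']}
```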

The point I'm trying to make is that to build a system like mine, you need to think about what guest system you will be running, then ask yourself what hardware you would buy if you were building that system on bare metal. Once you have that mindset, you can build a host machine that runs Linux with no effort and runs your virtualized OS on top just like a bare-metal install, but with all the benefits of having the guest housed in a container that you have total control over...

Hope this helps... :)