tl;dr: I can’t find a single RSS client designed to download and archive full webpages, and I don’t understand why.

I use RSS. I love RSS. I love the very idea of RSS. I get mad every time I see a “subscribe to my email newsletter” signup on a website instead of an RSS feed.

I love RSS, but I must be thinking about RSS in an entirely different way from most people, because I don’t see how the (apparently) main use case (other than podcasting) is even a use case at all. Maybe someone here can help me understand (and maybe solve my problem!).

RSS is for receiving new things from a given source. It isn’t just that, though: it exists to handle that problem in a particular way. It allows sources to be aggregated in a unified and centralized way - that is, you can parse, reorganize, and combine feeds.
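To make the “parse, reorganize, and combine” part concrete, here is a minimal sketch in Python using only the standard library. It assumes plain RSS 2.0 feeds (`<item>` elements with `<title>`, `<link>`, and `<pubDate>`); Atom feeds use different element names and aren’t handled. The function names `parse_rss` and `merge_feeds` are just illustrative, and fetching the feed text is left out.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime

# Sentinel so undated items can be sorted alongside dated ones
UNDATED = datetime.min.replace(tzinfo=timezone.utc)


def parse_rss(xml_text):
    """Return (published, title, link) tuples for each <item> in an RSS 2.0 feed."""
    root = ET.fromstring(xml_text)
    items = []
    for item in root.iter("item"):
        title = item.findtext("title", default="")
        link = item.findtext("link", default="")
        date_text = item.findtext("pubDate")
        if date_text:
            # pubDate uses the RFC 822 date format, which the email module parses
            published = parsedate_to_datetime(date_text)
            if published.tzinfo is None:
                # "-0000" offsets come back naive; treat them as UTC
                published = published.replace(tzinfo=timezone.utc)
        else:
            published = None
        items.append((published, title, link))
    return items


def merge_feeds(*xml_texts):
    """Combine several feeds into one list, newest first; undated items go last."""
    merged = []
    for text in xml_texts:
        merged.extend(parse_rss(text))
    merged.sort(key=lambda entry: entry[0] or UNDATED, reverse=True)
    return merged
```

Feed it the text of a few feeds and you get one combined, newest-first list, which is roughly the “all items” view most clients build on.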

That’s really cool. It lets us do things like listen to the latest of several different podcasts in one place.

The parsability of RSS feeds is obviously their core feature. What confuses me is what that parsability is being used for. With all the wonder of RSS, we only seem to get RSS readers. (Note: I realize that in the context of RSS, “reader” is generally a synonym for “client.” I hope it will be clear what I mean from the rest of the post.)

What is the point of RSS readers? I don’t mean all types of RSS clients. There are some good applications that just exist to parse new links from feeds (and the data about those links) and open them in whatever browser you want. I understand that - that’s just the core of what RSS is for.

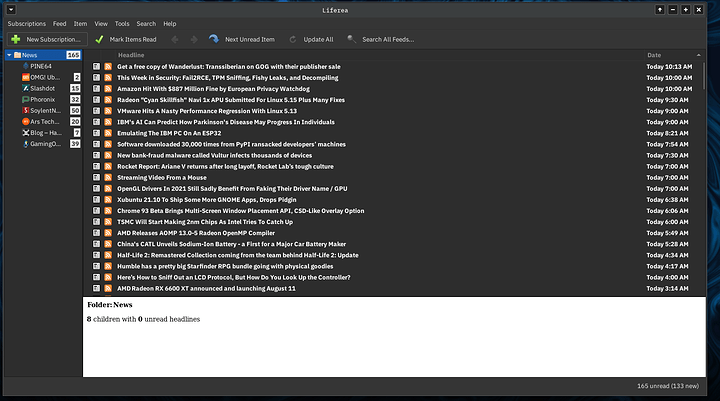

What I don’t understand is the purpose of the “full” RSS readers. They seem generally to provide lots of extra little convenience features, without providing the one absolutely foundational thing that any halfway competent developer would implement first - that is, if they were designing for any of the use cases that make sense to me.

What RSS readers don’t do is what podcast clients (often) do. Many podcast clients include settings to automatically download the audio content of each episode. These are the only clients that I can see any point to.

If I’m sitting at a desktop (or even laptop) PC with a stable, high-speed internet connection, I can go to the webpage for whatever feed content I’m interested in and download or stream the podcast right then. The only reason to use a dedicated podcast app (for more than feed tracking) is to always keep the latest episodes already downloaded to my device, “ready” for cases where I can’t or don’t want to download them when and where I’m listening to them.

Lots of podcast client apps seem to share my intuition here. However, I have searched off and on for years for a general-purpose RSS client designed to account for this use case. I can’t find a single RSS client designed to download and archive full webpages, and I don’t understand why. I don’t understand why anyone would sit down to write an RSS client app and find themselves working on features like “favorites” for posts, a built-in browser, or audio playback, without first accounting for downloading the full HTML of new posts. It could just be integration with wget or something - but I haven’t been able to find any program that does this.
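For what it’s worth, here’s a rough sketch of what that wget integration could look like, in Python shelling out to the `wget` command-line tool. The flags used (`--page-requisites`, `--convert-links`, `--adjust-extension`, `--span-hosts`, `--directory-prefix`) are standard GNU Wget options; the JSON “seen” file and all the function names are my own hypothetical choices, and pulling the list of post links out of a feed is left to whatever parser you like.

```python
import json
import subprocess
from pathlib import Path


def wget_command(url, archive_dir):
    """Build a wget call that saves one page plus the files it needs to render."""
    return [
        "wget",
        "--page-requisites",   # also fetch images, stylesheets, scripts
        "--convert-links",     # rewrite links so the saved copy works offline
        "--adjust-extension",  # save HTML pages with a .html suffix
        "--span-hosts",        # requisites often live on another host (e.g. a CDN)
        "--directory-prefix", str(archive_dir),
        url,
    ]


def unseen_links(links, seen):
    """Return links not archived yet, de-duplicated, in feed order."""
    return [link for link in dict.fromkeys(links) if link not in seen]


def load_seen(seen_file):
    """Read the set of already-archived links (hypothetical JSON list format)."""
    path = Path(seen_file)
    return set(json.loads(path.read_text())) if path.exists() else set()


def save_seen(seen, seen_file):
    Path(seen_file).write_text(json.dumps(sorted(seen)))


def archive_new_posts(links, archive_dir, seen_file):
    """Download every link that hasn't been archived before."""
    seen = load_seen(seen_file)
    for url in unseen_links(links, seen):
        result = subprocess.run(wget_command(url, archive_dir))
        # wget can exit nonzero (e.g. 8) if any single requisite 404s;
        # such pages are simply retried on the next run.
        if result.returncode == 0:
            seen.add(url)
    save_seen(seen, seen_file)
```

Run something like this on a timer after each feed refresh and you’d get the podcast-app “auto-download new episodes” behavior, just for web pages.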

I must be missing something.

Does anyone know of a general-purpose RSS client that supports automated full-file/webpage downloading?

Does anyone understand why this isn’t what people are always trying to do with RSS?