I mean, iOS and Mac OS X run the same kernel, so I would not be surprised.

A 14nm ARM SoC design from 2015 beat a 45nm x86 design from 2008? Well that proves it then, ARM is clearly better.

Yeah, it was so good that after a delayed launch they still had to redesign the cache and FPU to outperform the 6x86 and even have a chance of competing with the Pentiums. Integer performance was great, I’ll give you that much.

Not so much a question of whether Apple’s technologies are ready, but rather whether they can convince other companies with large userbases to port their programs to ARM.

Adobe (think Creative Cloud) can probably be swayed to do so. Others, like Microsoft (Office), are likely to sabotage.

I think Microsoft might go the ARM route, especially if a backroom deal with Intel to stay on x86 can’t be made.

They even did the whole thing with Photoshop running on ARM through the Win10 emulation layer.

Microsoft is trying to gain a foothold on ARM themselves, but what are the chances they will support their direct competitor in doing the same?

Gonna need a sauce on that one.

It’s not all that recent actually and has been around for about a decade or so. Here’s an explanation of what I think is being discussed here:

Sometimes when you think that you know where things are heading, there will be a groundbreaking invention that changes the entire scenario. One such seminal invention came in the form of the high performance substrate (HPS), introduced by the famous microarchitecture guru Yale Patt. Although I am tempted to explain HPS in detail, I would rather consider it to be out of the scope of this blogpost. A very simple (not necessarily accurate) description would be that Patt succeeded in converting CISC instructions into multiple RISC-like instructions, or micro-ops.

It’s not strictly true, in the strictest academic sense, but the whole point of RISC was that you could do multiple operations per instruction and that the cost of optimising code to make that safe and efficient is shifted to the compiler. When you have compilers reordering operations to take advantage of pipelining, superscalar execution, branch prediction, and loop stream detection, and when you have CPUs fetching up to 64 bytes’ worth of instructions per clock, you’re basically reaping all the benefits of what were supposed to be features exclusive to RISC. Just imagine your instruction set being 64 bytes wide. It’s still cheaper and easier to go above 1 IPC by going superscalar than it is to chase dumb 10GHz silicon.

I wonder when we will have wider L1 cache lines, or have we just given up on that and gone all-in on more cores now?

Considering they did this once before with the whole PowerPC to Intel switch… not unlikely at all. Would also boost the Mac as something more than a shiny designer x86 box, perhaps?

One thing ARM has in its favor is SoftBank. Nothing helps R&D like a boatload of cash.

I’ve been saying that Apple is going to kill the Mac, or merge it with the iPad, or… something, eventually. The Mac being a general purpose computer doesn’t really fit with their ethos. Never mind that Apple treats the Mac like a backwater, skipping multiple Intel processor generations on certain lines.

Apple is all about control of the user experience and, more and more, GREED! They want control of what is on the Mac, and also a cut of all programs for it. It makes sense that it is ultimately folded into the iDevice ecosystem. Now that their custom ARM chips are starting to reach parity with x86 processors, I don’t see a reason not to. This raises the question: what happens to the ‘pro’ models and desktops? Will we see a ‘last hurrah’ x86 Mac Pro and iMac, or are they already dead and we don’t know it yet?

My prediction is that x86 and Mac as we know it goes bye bye. Mac gets folded into the iDevice category. ARM processors all around, and maybe Apple sells cloud compute resources for ‘Pro’ to augment any shortcomings. All app development will require a subscription to the cloud compute service.

Ubuntu convergence was this type of tech, and Microsoft tried the same. Apple are making their own CPUs now and their own OS. I can see them pulling off this convergence, where you buy an Apple display and keyboard for home and it just uses your phone for the rest, with wireless data and wireless power: just put your phone down next to your home PC and away you go. That would bring ARM-type software into mainstream PC use.

The Apple phone CPU probably needs one or two more iterations in performance, but it’s doable. Considering it’s Apple and locked-down tech, the Apple DUMB display could have additional GPU and/or CPU slaves that the phone could lean on to pull it off.

Let me propose a future and ask a question: let’s just say that ARM does actually match x86-64 for performance. What happens then to all of our software, like Windows 10 or the Adobe suite? How will development look for the platform? Will we all have to use Java and Swift? Can I still use C++? How would ARM interact with our graphics cards, like Nvidia GeForce or AMD’s Vega? Does ARM even support PCIe?

(I’m kinda still inexperienced as a programmer)

It will be very hard to kick x64 off desktop PCs. Apple could do it in their ecosystem. You can run open source easily on a Mac with OS X, and since open source comes with the code, you could compile it for ARM, etc.

x64 is not going away anytime soon. Whether ARM will be the better tech is interesting, since it works well at low power and even massive server centres want performance per watt. They need solid software as well, however.

I would think you would still be fine with C++. It would be nice to see the GPUs we use in x86 PCs work with ARM CPUs, the possibilities…

Would be kinda neat for, like, a home AI server, which might become a possibility in the future.

I think the problem with Apple’s cooling isn’t so much that their cooling units themselves are terrible (like those shitty HP Pavilion laptops you see on QVC), it’s that they make laptops TOO THIN!

There is literally no benefit anymore to making laptops any thinner than they already are. You’re taking a lot of features away for a tiny gain, including the ability to cool well enough.

Thinness in and of itself doesn’t have a bearing on CPU performance, but the limited heatsink size that comes with it does.

I actually have no problems with thinness. I own the 2016 12" donglebook, so I know the struggle well.

Thinness without major compromises is good. While you can’t do much for cooling on the donglebooks, the 13/15" MacBooks, the Mac mini, the iMacs and the Mac Pros all have fans! Those fans can be ramped up to compensate for the smaller heatsinks, BUT Apple chooses not to. If you get fan control software for OS X and set custom fan speeds, you’ll find that the machines CAN actually somewhat cool their CPUs, but only as long as the fan is always running (which it isn’t by default).

There is no need to switch programming language. GCC & clang both support ARM as well as a shitload of other architectures. The JVM itself is written in C++ after all…
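To make that a bit more concrete, here’s a rough sketch of how the same C++ source can tell which architecture it was built for. The macros are the usual predefined ones from GCC/clang and MSVC; the function names (`target_arch`, `describe_target`) are just made up for this post.

```cpp
#include <cassert>
#include <string>

// Returns a label for the architecture this file was compiled for.
// Relies only on macros that the compilers predefine themselves.
std::string target_arch() {
#if defined(__aarch64__) || defined(_M_ARM64)
    return "arm64";
#elif defined(__arm__) || defined(_M_ARM)
    return "arm32";
#elif defined(__x86_64__) || defined(_M_X64)
    return "x86-64";
#elif defined(__i386__) || defined(_M_IX86)
    return "x86";
#else
    return "unknown";
#endif
}

// Hypothetical helper: a human-readable summary of the build target.
std::string describe_target() {
    const std::string arch = target_arch();
    assert(!arch.empty());  // every branch above returns some label
    return arch + ", " + std::to_string(sizeof(void*) * 8) + "-bit pointers";
}
```

Most application code never needs this kind of check at all. You just rebuild with a toolchain that targets ARM and the same source compiles.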

Some apps (especially mobile games) are already written in C++ for performance reasons.

A lot of software will be ported if customers actually use ARM. As stated above, most of the code can be reused, though some performance-critical parts that have been written in assembly will need to be redone.
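As a sketch of what "redone" tends to look like in practice (assuming the hot path uses SIMD intrinsics rather than raw assembly, which is my assumption, not something from a particular codebase): you keep one portable function and swap the inner loop per architecture. The `dot` function below is a made-up example, with a NEON path for 64-bit ARM, an SSE path for x86, and a plain scalar fallback.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

#if defined(__aarch64__)
  #include <arm_neon.h>   // NEON intrinsics on 64-bit ARM
#elif defined(__SSE__) || defined(_M_X64)
  #include <xmmintrin.h>  // SSE intrinsics on x86
#endif

// Dot product of two equally sized float vectors.
float dot(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size());
    const std::size_t n = a.size();
    std::size_t i = 0;
    float sum = 0.0f;
#if defined(__aarch64__)
    // ARM path: multiply-accumulate four lanes at a time.
    float32x4_t acc = vdupq_n_f32(0.0f);
    for (; i + 4 <= n; i += 4)
        acc = vmlaq_f32(acc, vld1q_f32(&a[i]), vld1q_f32(&b[i]));
    sum = vaddvq_f32(acc);  // horizontal add of the four lanes
#elif defined(__SSE__) || defined(_M_X64)
    // x86 path: same idea with SSE registers.
    __m128 acc = _mm_setzero_ps();
    for (; i + 4 <= n; i += 4)
        acc = _mm_add_ps(acc, _mm_mul_ps(_mm_loadu_ps(&a[i]), _mm_loadu_ps(&b[i])));
    float lanes[4];
    _mm_storeu_ps(lanes, acc);
    sum = lanes[0] + lanes[1] + lanes[2] + lanes[3];
#endif
    // Scalar tail; also the whole computation when no SIMD path was compiled in.
    for (; i < n; ++i)
        sum += a[i] * b[i];
    return sum;
}
```

Only the two `#if` branches are architecture-specific, so everything that calls `dot` stays untouched when you add another target.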

I don’t know whether current chips support PCIe but there is no reason manufacturers couldn’t add support.

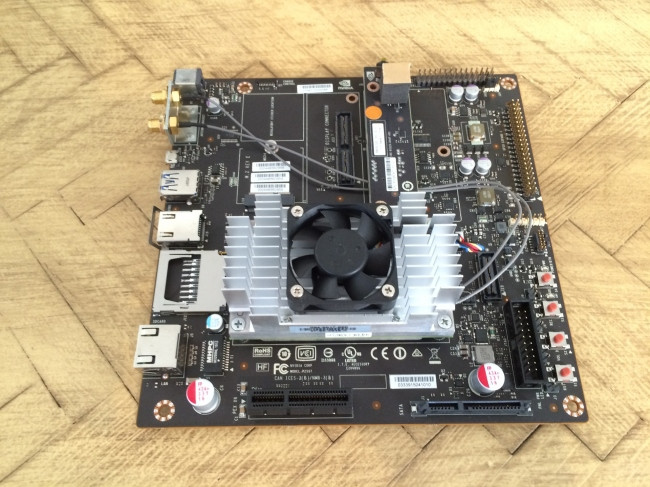

Scratch that. Nvidia’s dev boards apparently support PCIe: (slot at the very bottom)

Nvidia also already has mobile GPUs and, iirc, Qualcomm’s graphics units are based on a design from AMD.