I’ve been trying to set this up on Ubuntu 19.04 for the past couple of days, first following a different guide and now this one. Both guides end in the same error, and I haven’t been able to find an answer for it through Google:

Error starting domain: internal error: qemu unexpectedly closed the monitor: 2019-07-28T14:54:10.600494Z qemu-system-x86_64: -device vfio-pci,host=0a:00.0,id=hostdev0,bus=pci.5,addr=0x0: vfio 0000:0a:00.0: failed to setup container for group 16: failed to set iommu for container: Operation not permitted

Traceback (most recent call last):

File "/usr/share/virt-manager/virtManager/asyncjob.py", line 75, in cb_wrapper

callback(asyncjob, *args, **kwargs)

File "/usr/share/virt-manager/virtManager/asyncjob.py", line 111, in tmpcb

callback(*args, **kwargs)

File "/usr/share/virt-manager/virtManager/libvirtobject.py", line 66, in newfn

ret = fn(self, *args, **kwargs)

File "/usr/share/virt-manager/virtManager/domain.py", line 1400, in startup

self._backend.create()

File "/usr/lib/python3/dist-packages/libvirt.py", line 1080, in create

if ret == -1: raise libvirtError ('virDomainCreate() failed', dom=self)

libvirt.libvirtError: internal error: qemu unexpectedly closed the monitor: 2019-07-28T14:54:10.600494Z qemu-system-x86_64: -device vfio-pci,host=0a:00.0,id=hostdev0,bus=pci.5,addr=0x0: vfio 0000:0a:00.0: failed to setup container for group 16: failed to set iommu for container: Operation not permitted

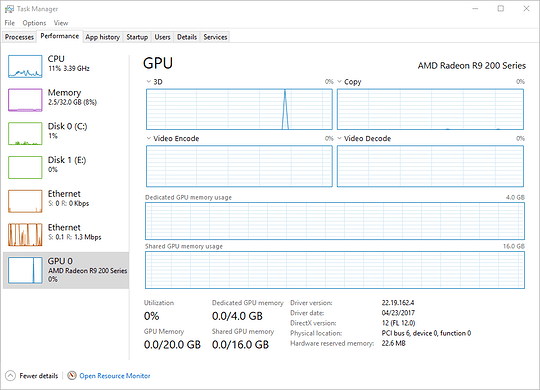

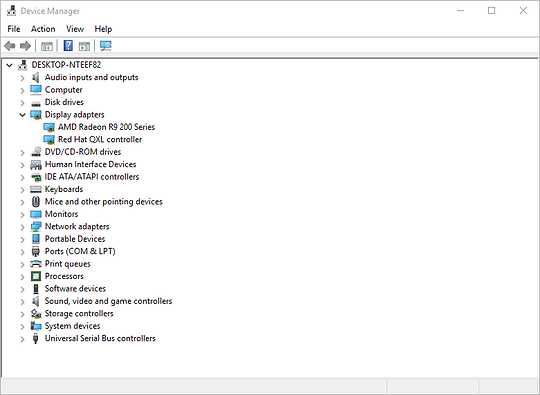

I’m guessing it’s some type of permission issue, but I have no idea where to begin troubleshooting it. Hardware is a 1950X, an ASUS PRIME X399-A, and two identical R9 290X cards.
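
One place to start, offered as an assumption rather than a known fix, is to see which devices share IOMMU group 16 with the card and which driver each one is bound to. The Python sketch below pulls the PCI address and group number out of the error text above and reads the standard sysfs locations `/sys/kernel/iommu_groups/<group>/devices` and `/sys/bus/pci/devices/<addr>/driver`. The function names and the `sysfs_root` parameter are made up for illustration.

```python
import os
import re

# Error text copied from the libvirt message above.
ERROR = (
    "vfio 0000:0a:00.0: failed to setup container for group 16: "
    "failed to set iommu for container: Operation not permitted"
)

# Matches a full PCI address (domain:bus:slot.function) and the group number.
_VFIO_RE = re.compile(
    r"vfio ([0-9a-fA-F]{4}:[0-9a-fA-F]{2}:[0-9a-fA-F]{2}\.[0-7]): "
    r"failed to setup container for group (\d+)"
)


def parse_vfio_error(message):
    """Return (pci_address, iommu_group) from a QEMU vfio error, or None."""
    match = _VFIO_RE.search(message)
    if match is None:
        return None
    return match.group(1), int(match.group(2))


def group_devices(group, sysfs_root="/sys"):
    """List (pci_address, driver_or_None) for each device in an IOMMU group.

    sysfs_root is a hypothetical parameter so the function can be pointed
    at a test directory; on a real host it stays at "/sys".
    """
    dev_dir = os.path.join(sysfs_root, "kernel", "iommu_groups", str(group), "devices")
    if not os.path.isdir(dev_dir):
        return []  # IOMMU disabled, or no such group on this machine
    devices = []
    for addr in sorted(os.listdir(dev_dir)):
        driver_link = os.path.join(sysfs_root, "bus", "pci", "devices", addr, "driver")
        if os.path.islink(driver_link):
            # The "driver" symlink points at the bound driver's directory.
            driver = os.path.basename(os.path.realpath(driver_link))
        else:
            driver = None  # no driver bound
        devices.append((addr, driver))
    return devices


if __name__ == "__main__":
    parsed = parse_vfio_error(ERROR)
    if parsed is not None:
        address, group = parsed
        print(f"Device {address} is in IOMMU group {group}")
        for dev, driver in group_devices(group):
            print(f"  {dev}  driver={driver}")
```

Run on the host, this prints every device in group 16 next to its bound driver. With two identical cards it may help to confirm which one is actually `0a:00.0` and whether the one meant for the guest reports `vfio-pci`. The kernel log from the time of the failed start may also say more than the libvirt message does.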
