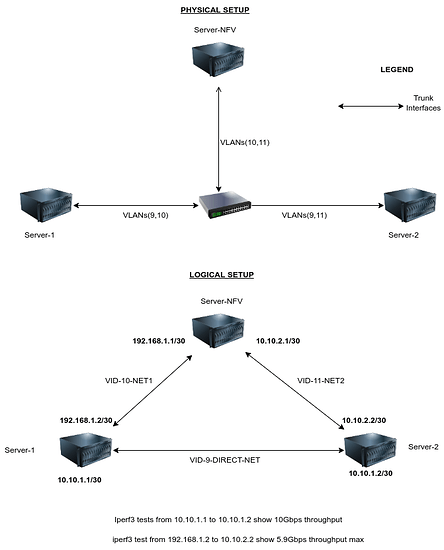

I’m having a problem: I’m unable to pass 10 Gbps worth of traffic through commodity hardware. The servers in use are Dell PowerEdge C6420 servers with dual 12-core Intel Xeon Silver 4214 2.2 GHz CPUs. The NICs on the servers are Intel X710 SFP+ NICs. I’m using iperf3 to test throughput. When I test from one server to another through a direct switch connection, I get 10 Gbps, but if I add a third server in between to route the traffic and act as a router, the bandwidth drops by roughly half, to around 5.6 Gbps.
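To compare the direct and routed runs without reading numbers off the console, iperf3 can write a JSON report with `-J`. A minimal Python sketch, assuming a TCP test where the receiver-side total sits under `end.sum_received.bits_per_second` in that report; the helper names are hypothetical:

```python
import json


def received_gbps(iperf3_json: str) -> float:
    """Receiver-side throughput in Gbit/s from an `iperf3 -J` TCP report."""
    report = json.loads(iperf3_json)
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


def routed_fraction(direct_gbps: float, routed_gbps: float) -> float:
    """Share of the direct-path throughput left after adding the routing hop."""
    return routed_gbps / direct_gbps


if __name__ == "__main__":
    # Hand-written fragments shaped like iperf3 JSON output; the values mirror
    # the 10 Gbps / 5.6 Gbps figures above and are not real measurements.
    direct = '{"end": {"sum_received": {"bits_per_second": 10e9}}}'
    routed = '{"end": {"sum_received": {"bits_per_second": 5.6e9}}}'
    d, r = received_gbps(direct), received_gbps(routed)
    print(f"direct {d:.1f} Gbps, routed {r:.1f} Gbps ({routed_fraction(d, r):.0%})")
```

In practice each run would be saved with something like `iperf3 -c <server> -J > routed.json` and the file contents passed to `received_gbps`.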

The purpose of these tests is to evaluate SD-WAN and NFV, where I’d use a server as a router instead of buying expensive hardware routers. For the NFV software, I’ve tested Juniper vMX and Netgate TNSR, and the results are the same. This leads me to believe it’s a hardware configuration problem.

Any insight would be greatly appreciated.

How many 10Gbit network interfaces are running on Server-NFV?

Are you using bonding?

If you only have one active 10 Gbit interface/cable, then 5 Gbps when routing is full line speed, as traffic has to go from client 1 to the server and from the server to client 2 … am I missing something?

I haven’t benchmarked this, but those Xeons are pretty slow, and routing 10 gigabit isn’t trivial. It’s probably not something that scales with core count.

Also (and probably more likely), as @madmatt says above, unless you’ve got one NIC dedicated to each path, you’ll be sharing bandwidth on each hop in and out of the machine.

If you have spare 10G NICs, or possibly spare ports if you have dual-port NIC cards in the boxes, try not using trunk ports: split each VLAN onto an individual NIC and see if performance improves. For example, for your bottom-left host, have one NIC for 192.168.1.2/30 and a separate physical NIC handling 10.10.1.1/30.

My single E5-1650 v2 @ 3.50 GHz (6 cores) pulls 28 Gbps when routing and 18 Gbps when firewalling, running in a KVM VM. The Silver 4214’s single-core performance is about 10% lower than mine, but still … there should be plenty for a single core to route 10 Gbit/s …
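How much work a router does scales with packets per second rather than bits per second, so it helps to put a number on what 10 Gbit/s means per core. A rough sketch, assuming standard Ethernet framing (20 bytes of preamble, start-of-frame delimiter and inter-frame gap per frame) and a 1500-byte MTU with no jumbo frames, which is roughly what a bulk iperf3 TCP stream produces:

```python
# Per-frame bytes on the wire that are not part of the Ethernet frame itself:
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte minimum inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def line_rate_pps(link_bps: float, frame_bytes: int) -> float:
    """Maximum frames per second on a link for a frame size that includes the FCS."""
    return link_bps / ((frame_bytes + WIRE_OVERHEAD_BYTES) * 8)


def ns_per_packet(pps: float, cores: int = 1) -> float:
    """Time budget per packet for each core, in nanoseconds, if `cores` share the load."""
    return cores * 1e9 / pps


if __name__ == "__main__":
    for frame in (64, 1518):  # minimum-size frame vs. full frame at a 1500-byte MTU
        pps = line_rate_pps(10e9, frame)
        print(f"{frame:>4} B: {pps / 1e6:6.2f} Mpps, {ns_per_packet(pps):7.1f} ns per packet on one core")
```

This gives about 14.88 Mpps (about 67 ns per packet) for 64-byte frames, but only about 0.81 Mpps (about 1.2 µs per packet) for full-size 1518-byte frames, which is why a single core can plausibly keep up with a bulk TCP test even when it could not keep up with small packets.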

Yeah, like I said, I haven’t benchmarked it, so I’m not surprised to be off.

Pretty sure his issue is what you said: he needs dedicated NICs.

Each server has one NIC with two ports. My assumption had been that one port could handle ingress while the other handled egress, with each able to push 10 Gbps, since the Intel X710 manual says the NIC should be able to push at least that much. The NFV is a VM running on ESXi, and I’ve given it 24 GB RAM and 12 cores.

The NFV server has two 10G cables going to it; I’d missed one on the diagram.

Ok, so yes, you should be getting at least 10 Gbps when routing.
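The reasoning behind that can be sketched as a per-direction link budget: a flow is capped by whichever port direction it has to cross most often. A minimal Python model, assuming full-duplex links and ignoring CPU, hypervisor and driver limits; the port names and the function are hypothetical:

```python
from collections import Counter
from typing import Iterable, Tuple

# A hop is (port name, direction), with direction "rx" or "tx" as seen from the
# router. Links are assumed full duplex, so rx and tx on one port are separate budgets.
Hop = Tuple[str, str]


def max_routed_gbps(hops: Iterable[Hop], link_gbps: float = 10.0) -> float:
    """Upper bound on one flow's rate through the router from link capacity alone.

    If the flow crosses the same (port, direction) pair k times, that pair
    carries k times the flow rate, so the flow is capped at link_gbps / k.
    """
    usage = Counter(hops)
    if not usage:
        raise ValueError("a routed path needs at least one hop")
    return link_gbps / max(usage.values())


if __name__ == "__main__":
    # The setup described above: traffic enters on one X710 port and leaves on the other.
    two_port = [("port0", "rx"), ("port1", "tx")]
    print(max_routed_gbps(two_port))
```

With the second cable in place, no port direction is used twice, so the links alone allow about 10 Gbps; the gap down to 5.6 Gbps has to come from somewhere else in the path.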

Now, what software appliance is doing the routing? Did you check that it supports paravirtual drivers when running in ESXi?

You need to rule out a couple of things. Whatever it is you’re running, make sure the virtual interfaces are not hardware-emulated but running either in passthrough or paravirtualized mode …
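One way to check this from a Linux guest is to read which kernel driver is bound to each interface in sysfs. A sketch, assuming a Linux VM; the driver groupings below are illustrative rather than exhaustive (VMXNET3 and virtio are paravirtual, E1000/E1000E are emulated, and `i40e`/`iavf` are the Linux drivers an X710 port or SR-IOV virtual function would typically show up with when passed through):

```python
import os
from pathlib import Path

# Illustrative groupings of Linux NIC drivers, not an exhaustive list.
PARAVIRTUAL = {"vmxnet3", "virtio_net"}  # VMware VMXNET3, KVM virtio
EMULATED = {"e1000", "e1000e"}           # emulated Intel NICs
PASSTHROUGH = {"i40e", "iavf"}           # X710 port or SR-IOV VF handed to the guest


def nic_driver(iface: str, sysfs: str = "/sys/class/net") -> str:
    """Name of the kernel driver bound to `iface`, read from its sysfs driver symlink."""
    link = Path(sysfs) / iface / "device" / "driver"
    return os.path.basename(os.readlink(link))


def classify(driver: str) -> str:
    """Map a driver name onto the rough categories above."""
    if driver in PARAVIRTUAL:
        return "paravirtual"
    if driver in EMULATED:
        return "emulated"
    if driver in PASSTHROUGH:
        return "passthrough"
    return "unknown"


if __name__ == "__main__":
    sysfs = "/sys/class/net"
    if os.path.isdir(sysfs):
        for iface in sorted(os.listdir(sysfs)):
            try:
                drv = nic_driver(iface, sysfs)
            except OSError:
                continue  # software interfaces such as lo have no backing device
            print(f"{iface}: {drv} ({classify(drv)})")
```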

I’m running Juniper vMX, not in passthrough mode, using the VMXNET3 driver.

If it were my server, I’d deploy a plain Linux VM and test routing on that to decide where to focus my attention. If the Linux VM performs better, then it will be a matter of tuning the Juniper VM deployment to match its performance. If the Linux VM has the same performance, that would tell me I need to look into the network setup/ESXi networking config … Have you assigned each 10 Gbit interface to a different virtual network, or have you created a bond/LAG and run all networks on top of it?
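For the plain Linux VM test, the guest only routes between its interfaces once IPv4 forwarding is switched on. A small sketch, assuming a Linux guest where the setting lives at `/proc/sys/net/ipv4/ip_forward` (the file behind `sysctl net.ipv4.ip_forward`); it also encodes the triage described above, with a hypothetical 10% margin for calling one result clearly better:

```python
from pathlib import Path

IP_FORWARD = "/proc/sys/net/ipv4/ip_forward"


def ipv4_forwarding_enabled(path: str = IP_FORWARD) -> bool:
    """True if the kernel is set to forward IPv4 packets between interfaces."""
    return Path(path).read_text().strip() == "1"


def enable_ipv4_forwarding(path: str = IP_FORWARD) -> None:
    """Turn IPv4 forwarding on until the next reboot (needs root on a real system)."""
    Path(path).write_text("1\n")


def where_to_look(linux_gbps: float, vmx_gbps: float, margin: float = 0.1) -> str:
    """A clearly faster Linux VM points at the vMX deployment; otherwise at ESXi networking."""
    if linux_gbps > vmx_gbps * (1 + margin):
        return "tune the Juniper vMX deployment"
    return "look into the ESXi networking config"


if __name__ == "__main__":
    if Path(IP_FORWARD).exists():
        print("IPv4 forwarding enabled:", ipv4_forwarding_enabled())
```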

Each interface is tied to its own network. I’ll try your suggestion of testing with plain Linux and observe the results.