Gotcha, I feared you were going to close the door and wait for the carpet to heat up

Switched from RHEL/Podman to Debian 11/Portainer for Docker management. The Portainer UI is better, imo.

Also started using Heimdall for a homepage. It's an easier, cleaner way of getting to everything.

Can anyone recommend a good Docker series or guide? I can obviously get stuff up and running, but I honestly don’t know much about the nitty-gritty of Docker administration.

Also considering consolidating everything onto my Ryzen 3600 machine and having the Dell R210 II be a cold spare in case I need to take the Ryzen offline. Now that I am happy with the speed and stability of virtualized pfSense, I think I am comfortable having everything in one machine. My Ryzen system has tons of compute to spare, and I’d rather see it utilized.

Since you are already using Portainer, probably go with Novaspirit Tech's Pi-Hosted series. He uses Homer instead of Heimdall, which I convinced PLL to use too.

https://REDACTED.lol

I’m still not comfortable with the idea of a forbidden router.

What did you like about Homer?

The router has been solid, so I am sold. It's the cheapest way for me to get into 10G routing.

Well, I personally never used Homer, but I didn’t like what PLL was using before, fire-something-or-other, which kept showing popups to non-admins (basic visitors) saying updates were available. Homer has a nice status feature for each service, so you can tell whether a service is down just by looking at the home page: green = good, yellow = I forget (I think planned maintenance?), red = down. Those are customizable, of course.

When I do make my own dashboard, it will be a static HTML page, maybe with some server-side scripting that runs a check every minute and rewrites the page, changing the background or font color depending on whether each service is up or down. Then whenever someone loads or reloads the page, they’ll see which services are up. I can definitely do that in shell, but I could also do it in Zabbix, or maybe even Prometheus if I learn how to use the thing.
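A minimal shell sketch of that idea, not an existing tool: each service is given as a hypothetical `name:host:port` entry, and "up" simply means a TCP connection to that port succeeds within 2 seconds (via bash's `/dev/tcp`). A real check might hit an HTTP endpoint instead.

```shell
#!/usr/bin/env bash
# Hypothetical status-page generator. "Up" = TCP connect succeeds within 2 s.
# Entries are name:host:port (IPv6 literals would need a different separator).

# Return 0 if a TCP connection to host $1, port $2 can be opened.
port_open() {
  timeout 2 bash -c ': </dev/tcp/"$0"/"$1"' "$1" "$2" 2>/dev/null
}

# Print a complete HTML page to stdout, one colored line per service.
render_status_page() {
  local entry name host port color state
  printf '<!DOCTYPE html>\n<html><head><meta charset="utf-8"><title>Homelab status</title></head><body>\n'
  for entry in "$@"; do
    IFS=: read -r name host port <<< "$entry"
    if port_open "$host" "$port"; then
      color=green; state=up
    else
      color=red; state=down
    fi
    printf '<p style="color:%s">%s: %s</p>\n' "$color" "$name" "$state"
  done
  printf '<p>Last checked: %s</p>\n</body></html>\n' "$(date)"
}
```

From cron, once a minute, a small wrapper could source this and run something like `render_status_page "pihole:192.168.1.2:80" "truenas:192.168.1.3:443" > /var/www/html/index.html.tmp && mv /var/www/html/index.html.tmp /var/www/html/index.html` (addresses hypothetical). Writing to a temp file and then renaming it means a visitor never loads a half-written page.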

Procrastinated on schoolwork and migrated all of my services and my virtualized router to one machine. I now have the whole homelab in one box.

The Dell R210 II is now a cold spare for networking appliances.

Everything is running on my Ryzen 3600X with 64GB of memory, two Intel 480GB SATA drives, and a 120GB SATA SSD that is passed through to the pfSense VM so I can run it on bare metal if I have to. Going to rebuild my TrueNAS VM the same way, with a dedicated OS drive and the 36TB of HDDs passed through.

What’s with everyone trying to run virtualized TrueNAS with a passed-through HBA instead of running ZFS natively on Proxmox? I don’t get it… Is it because of the easy GUI for managing NFS, SMB and other shares in TrueNAS?

Basically yes.

Also, down the road, if I have the means to expand back out to separate machines, I can just pull the drives, transplant them, and be back up and running on bare-metal TrueNAS with minimal dicking around, hopefully.

Fair. But I still think migrating from Proxmox to TrueNAS, at least the NAS part, is pretty easy: it's mostly a zfs send away, or a zpool export and import, plus rsync-ing the /etc/exports file if you have NFS. Samba may be a bit more involved, but nothing too difficult, and for a home user with like 5 users, setting it up from scratch is faster than migrating.
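A rough sketch of both routes, assuming placeholder pool/dataset names (`tank`, `tank/media`, `backup/media`). With `DRY_RUN=1` it only prints the commands, which is a good habit before pointing anything at real pools.

```shell
#!/usr/bin/env bash
# Sketch of the two NAS migration routes. Pool/dataset names are placeholders.
# DRY_RUN=1 prints each command instead of running it.

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Route 1 (same disks): export the pool on Proxmox, move the disks (or boot
# TrueNAS on the same box), then import it there.
export_pool() { run zpool export "$1"; }
import_pool() { run zpool import "$1"; }

# Route 2 (copy to another pool): recursive snapshot, then a replication
# stream (-R carries child datasets, snapshots and properties).
# The destination must not exist yet (no -F), and -u skips mounting it so
# it can't collide with the source's mountpoints.
replicate() {
  local src=$1 dst=$2 snap
  snap="${src}@migrate-$(date +%Y%m%d)"
  run zfs snapshot -r "$snap"
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '+ zfs send -R %s | zfs receive -u %s\n' "$snap" "$dst"
  else
    zfs send -R "$snap" | zfs receive -u "$dst"
  fi
}
```

To a different machine, the same stream can go over SSH, e.g. `zfs send -R tank/media@migrate-YYYYMMDD | ssh root@truenas zfs receive -u tank/media` (host name hypothetical). TrueNAS will probably prefer that you import pools through its web UI rather than with `zpool import` from a shell.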

REEEEEEEE!!!

Just plugged a spare SSD into the server, and when I rebooted, whatever was on that SSD corrupted my Proxmox bootloader. Just had to reinstall. Luckily, having pfSense on its own SSD paid off: I was able to just spec out a new VM, pass the drive through, and it worked perfectly.
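The post doesn't say exactly how the pfSense SSD is passed through; one common Proxmox approach is handing the whole disk to the VM by its stable `/dev/disk/by-id/` path with `qm set`. The VMID, disk ID and `scsi1` slot below are hypothetical (list real IDs with `ls -l /dev/disk/by-id/`). The by-id path also matters here, because adding a drive can reshuffle the `/dev/sdX` names.

```shell
#!/usr/bin/env bash
# Sketch: attach a whole physical disk to an existing Proxmox VM.
# VMID, disk ID and bus slot are placeholders. DRY_RUN=1 prints only.

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# $1 = VMID, $2 = name under /dev/disk/by-id, $3 = bus/slot (default scsi1)
pass_through_disk() {
  local vmid=$1 disk_id=$2 slot=${3:-scsi1}
  run qm set "$vmid" "--$slot" "/dev/disk/by-id/$disk_id"
}
```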

What is the best way to go about recovering the VMs from the old SSDs? I’d rather just import them, if possible, than rebuild them from scratch. The old Proxmox install was on 2 SSDs in RAIDz0.

Did you format your old SSDs? If so, tough luck. If not, and you had no backup, you’ll have to recreate the VMs and link the existing disk images to them. It’s not difficult, but doing it manually takes a bit of time.
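A rough sketch of that manual route, assuming the old SSDs weren't wiped and the installer's default layout (VM disks as `vm-<vmid>-disk-<n>` zvols under `<pool>/data`). The new install almost certainly also has a pool named `rpool`, so the old one is imported by its numeric ID under a new name. `oldrpool`, `/mnt/old`, the VM name and the 2048 MB memory size are all placeholders.

```shell
#!/usr/bin/env bash
# Sketch: reuse VM disks from an old Proxmox ZFS pool on a fresh install.
# Names, paths and sizes are placeholders. DRY_RUN=1 prints only.

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Import the old pool by ID under a new name, mounting nothing (-N) and with
# an alternate root (-R) so its root dataset can't land on top of the live /.
import_old_pool() {
  local id=$1 newname=${2:-oldrpool}
  run zpool import -f -N -R /mnt/old "$id" "$newname"
}

# Register the old pool's VM dataset as a Proxmox storage of the same name.
add_old_storage() {
  run pvesm add zfspool "$1" --pool "$1/data" --content images,rootdir
}

# Recreate an empty VM with the old VMID, then rescan so the matching
# vm-<vmid>-disk-<n> zvols show up as unused disks in its config.
recreate_vm() {
  local vmid=$1 name=$2
  run qm create "$vmid" --name "$name" --memory 2048
  run qm rescan --vmid "$vmid"
}
```

Running `zpool import` with no arguments lists the importable pools and their IDs. After the rescan, attach each disk with something like `qm set 100 --scsi0 oldrpool:vm-100-disk-0`, then redo CPU, network and boot settings by hand, since the old configs lived in /etc/pve. Note that importing under a new name renames the pool, so the old install won't boot from it as-is; if you'd rather repair the old install, do that instead.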

Back up /etc/pve next time.
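A minimal sketch of such a backup, assuming a hypothetical destination of `/root/pve-backups` and a retention of 14 archives: it tars the directory into a dated archive and prunes the oldest ones.

```shell
#!/usr/bin/env bash
# Sketch: archive a config directory (default /etc/pve) into a dated tarball
# and keep only the newest N archives. Paths and retention are assumptions.

backup_pve_config() {
  local src=${1:-/etc/pve} dest=${2:-/root/pve-backups} keep=${3:-14}
  local archive old excess
  archive="$dest/pve-config-$(date +%Y%m%d-%H%M%S).tar.gz"
  mkdir -p "$dest" || return 1
  # -C makes the archive store "pve/..." instead of the absolute path.
  tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1
  # Glob results come back sorted and the timestamps sort chronologically,
  # so the oldest archives sit at the front of the array.
  old=( "$dest"/pve-config-*.tar.gz )
  excess=$(( ${#old[@]} - keep ))
  if (( excess > 0 )); then
    rm -f -- "${old[@]:0:excess}"
  fi
  printf '%s\n' "$archive"
}
```

/etc/pve is the Proxmox cluster filesystem's view of `/var/lib/pve-cluster/config.db`, and the VM configs are under `qemu-server/*.conf` inside it. Copy the archives somewhere other than the Proxmox boot disks (the NAS, the git box), otherwise they die with the install, as just happened.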

> z0

u wut m8?

Meant Z1 or RAID0?

Anyway, instead of formatting the entire drive over a borked bootloader, next time just repair GRUB: mount the old root in a live environment (can be anything, even an Ubuntu desktop live boot works), rbind-mount dev, proc and sys into the old root’s mount point and make them rslave, chroot into it, then repair GRUB.

Also, when you plugged in the spare SSD, did you change the boot order in UEFI back to the Proxmox boot drive? It might just have been the UEFI getting confused by the new drive and not handing off to GRUB on the other ones.

No

Sorry meant this.

Do you have a guide or resource for doing this? I haven’t done that before.

That is what I thought at first, but when I looked at the boot order in the BIOS, instead of the usual “proxmox” boot option, all it listed was “Linux Boot Loader”, and if I tried to boot from it, it would black-screen and fail at some point during the boot process.
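To see what a “Linux Boot Loader” entry actually points at and put the right one first, `efibootmgr` works from any live environment booted in UEFI mode. The entry numbers below are hypothetical; read the real ones from `efibootmgr -v` first.

```shell
#!/usr/bin/env bash
# Sketch: inspect and reorder UEFI boot entries. Entry numbers are
# placeholders. DRY_RUN=1 prints only.

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Verbose listing: shows which disk/partition and .efi file each entry uses.
show_boot_entries() { run efibootmgr -v; }

# Set the permanent boot order from the given entry numbers (first = preferred).
set_boot_order() {
  local order
  order=$(IFS=,; printf '%s' "$*")
  run efibootmgr -o "$order"
}

# Boot a given entry once on the next reboot, without changing the order.
boot_next() { run efibootmgr -n "$1"; }
```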

I can still try to recover it. I’d just like to figure out the simplest way to recover my VMs, either by extracting them from the old drives or by repairing the old installation.

I would repair the old one, just because you may have other settings, like networking and stuff, configured there. It isn’t hard to recover, and it’s the preferable route.

Probably have to write another one of my easy-to-follow guides. tl;dr, boot to a live environment, then:

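```shell
# sdb2 (root) and sdb1 (boot) are examples: check your layout with lsblk.
# For a ZFS root (e.g. Proxmox on ZFS), import the pool under /mnt instead
# of these two mounts.
mount /dev/sdb2 /mnt
mount /dev/sdb1 /mnt/boot

# Give the chroot the live system's /dev, /proc and /sys
mount --rbind /dev /mnt/dev
mount --make-rslave /mnt/dev
mount --rbind /proc /mnt/proc
mount --make-rslave /mnt/proc
mount --rbind /sys /mnt/sys
mount --make-rslave /mnt/sys

chroot /mnt /bin/bash

# Inside the chroot: regenerate the GRUB config
grub-mkconfig -o /boot/grub/grub.cfg
# If the bootloader itself is damaged, reinstall it too, e.g.:
#   grub-install /dev/sdb                                     (BIOS/legacy)
#   grub-install --target=x86_64-efi --efi-directory=<ESP mount point>  (UEFI)
```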

On some distros it’s grub2-mkconfig, and on Debian/Ubuntu there’s also the update-grub wrapper. It’s pretty generic between Linux distros, though. Also, for mounting ZFS you need a live environment with ZFS support out of the box, like a Proxmox ISO, hrmpf, or an Ubuntu desktop ISO (the server version won’t work, lmao; I tried it when my Proxmox install got borked by a power failure after the UPS couldn’t keep it up long enough). You might already be familiar with zpool import and mounting; if not, it’s just a few man pages away.
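For the ZFS case, the two plain `mount` lines of the steps above become a pool import. A sketch, assuming the Proxmox installer defaults (pool `rpool`, root dataset `rpool/ROOT/pve-1`; confirm with `zfs list`), followed by the same rbind/rslave steps and a cleanup function.

```shell
#!/usr/bin/env bash
# Sketch: mount a Proxmox ZFS root under /mnt from a live environment.
# Pool/dataset names are the assumed installer defaults. DRY_RUN=1 prints only.

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Import with altroot /mnt and no auto-mount, mount the root dataset first,
# then the rest of the datasets on top of it.
mount_zfs_root() {
  local pool=${1:-rpool} rootds=${2:-rpool/ROOT/pve-1}
  run zpool import -f -N -R /mnt "$pool"
  run zfs mount "$rootds"
  run zfs mount -a
}

# Same rbind + rslave steps as the plain-mount version.
bind_system_dirs() {
  local d
  for d in dev proc sys; do
    run mount --rbind "/$d" "/mnt/$d"
    run mount --make-rslave "/mnt/$d"
  done
}

# After leaving the chroot: unmount the binds and export the pool, so the
# next boot doesn't see it as last used by another system.
cleanup_zfs_root() {
  local pool=${1:-rpool} d
  for d in dev proc sys; do
    run umount -R "/mnt/$d"
  done
  run zpool export "$pool"
}
```

Then `chroot /mnt /bin/bash` as before. On Proxmox ZFS installs the boot partitions are usually managed by `proxmox-boot-tool`, so inside the chroot `proxmox-boot-tool status` and `proxmox-boot-tool refresh` may be what actually fixes booting; worth checking before running grub-install by hand.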

Thank you, I will give it a go tomorrow while the wife is out so that I can take the network offline while I mess around with this. Lesson learned: wipe drives before plugging them into your server.

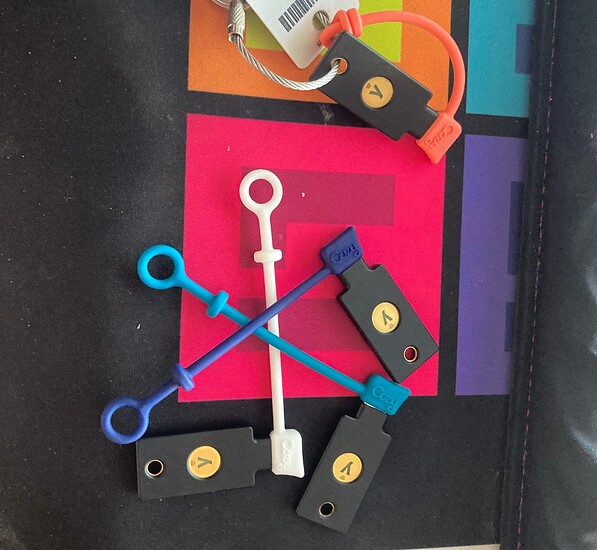

With the help of @PhaseLockedLoop I now have YubiKeys set up: 1 on my keyring, 1 on site and 2 cold spares at remote locations. All of my important accounts (including Level1, lol) are now tied either to the key itself or to the Yubico Authenticator. SSH keys are loaded, and I will now begin distributing them to the servers and networks that I access.
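The post doesn't say which kind of SSH keys are on the YubiKeys. Assuming FIDO2 keys generated on each YubiKey (for example `ssh-keygen -t ed25519-sk -O resident -C yubikey-keyring`, which needs OpenSSH 8.2+ and reasonably recent key firmware), every YubiKey holds a different private key, so each server needs all four public keys for the spares to be useful. A sketch of pushing them out; the file names and hosts are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: install every YubiKey's public key on every host with ssh-copy-id.
# Key file names and hosts are placeholders. DRY_RUN=1 prints only.

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# One public key per YubiKey (keyring, on-site, two remote spares).
SSH_PUBKEYS=(
  "$HOME/.ssh/id_ed25519_sk_keyring.pub"
  "$HOME/.ssh/id_ed25519_sk_onsite.pub"
  "$HOME/.ssh/id_ed25519_sk_spare1.pub"
  "$HOME/.ssh/id_ed25519_sk_spare2.pub"
)

# Usage: distribute_keys user@host1 user@host2 ...
distribute_keys() {
  local host pub
  for host in "$@"; do
    for pub in "${SSH_PUBKEYS[@]}"; do
      run ssh-copy-id -i "$pub" "$host"
    done
  done
}
```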

Really frustrating that my banks only use phone numbers and emails as 2FA systems.

Setting up my PiKVM for my remote backup server.

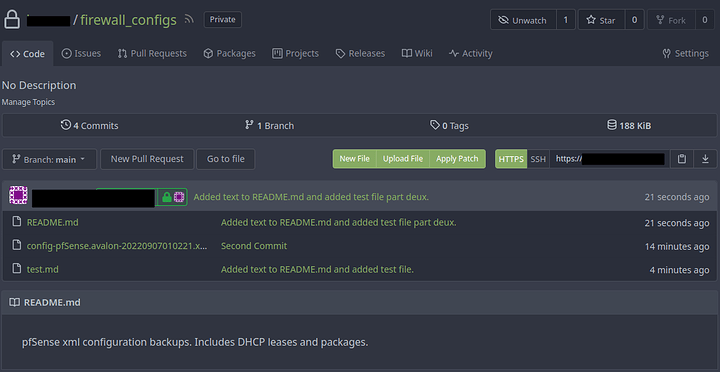

I have my personal Git server set up on a Linode instance. Now I have a proper offsite backup of essential configs for my firewall, Pi-hole, etc. It's secured with my YubiKeys, and my commit signatures are linked to my keys as well.
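The post doesn't say how commit signing is wired up. Assuming GPG keys on the YubiKeys, per-repo setup could look like the sketch below; the repo path, key ID, remote URL and the remote name `offsite` are all placeholders.

```shell
#!/usr/bin/env bash
# Sketch: sign every commit in a repo and add a self-hosted offsite remote.
# Repo path, key ID and URL are placeholders. DRY_RUN=1 prints only.

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# $1 = repo dir, $2 = GPG key ID, $3 = remote URL
setup_signed_repo() {
  local repo=$1 keyid=$2 remote=$3
  run git -C "$repo" config user.signingkey "$keyid"
  run git -C "$repo" config commit.gpgsign true
  run git -C "$repo" remote add offsite "$remote"
}
```

After pushing (e.g. `git push offsite main`, branch name assumed), `git log --show-signature -1` is a quick way to confirm the last commit really was signed by the YubiKey-held key.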

gpg encryption is cool. Honestly, it makes encrypting/decrypting stuff easy as hell. I just wish it would use Curve25519 rather than AES256, but it's not a big deal.
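Side note: Curve25519 and AES256 aren't really alternatives in gpg. Its public-key encryption is hybrid: the file is encrypted with a random session key using a symmetric cipher (AES256 here), and only that session key is encrypted to the recipient's public key. If the encryption subkey is `cv25519`, that part is Curve25519 (ECDH), so you get both. A sketch with a placeholder user ID, fingerprint and file name:

```shell
#!/usr/bin/env bash
# Sketch: ed25519 signing key + cv25519 encryption subkey, then encrypt a file.
# User ID, expiry and file names are placeholders. DRY_RUN=1 prints only.

run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Primary key: ed25519, signing only, expires in 2 years.
gen_signing_key() { run gpg --quick-generate-key "$1" ed25519 sign 2y; }

# Fingerprint of the first key matching $1 (field 10 of the "fpr" record in
# gpg's machine-readable colon listing).
key_fpr() { gpg --list-keys --with-colons "$1" | awk -F: '$1 == "fpr" { print $10; exit }'; }

# Encryption subkey on Curve25519; it only wraps the per-message session key.
add_encryption_subkey() { run gpg --quick-add-key "$1" cv25519 encr 2y; }

# Encrypt a file to a recipient, writing "<file>.gpg".
encrypt_for() { run gpg --encrypt --recipient "$1" --output "$2.gpg" "$2"; }
```

Usage would be something like `gen_signing_key "Me <me@example.com>"`, then `add_encryption_subkey "$(key_fpr me@example.com)"`, then `encrypt_for me@example.com pfsense-backup.xml`. Running `gpg --list-packets` on the result should show a public-key-encrypted session key packet with algo 18 (ECDH) ahead of the symmetrically encrypted data.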

When compute modules start to come back in stock I am going to get one of these.

https://m.aliexpress.com/item/3256804386522898.html?gatewayAdapt=Pc2Msite