I have a bit of experience with this stuff.

Before giving any actual advice, some basic questions need to be answered, since reality unfortunately doesn’t match the spec sheets here.

Questions:

- What operating system do you intend to use?
- What motherboard do you want to use? Does it have enough PCIe lanes to natively operate each SSD or do you need something with an active PCIe Switch chipset, for example supplying 16 lanes to four NVMe SSDs but only 8 lanes are available in the motherboard PCIe slot?
- Do you want to be able to hot-plug SSDs without having to reboot the system?
- Do you want to use S3 Sleep (Suspend-to-RAM/memory) with the system in question?

Regards,

aBavarian Normie-Pleb


Thanks for your reply!

ad 1) I want to use it on a Proxmox server (Debian-based OS).

ad 2) My motherboard would be a Supermicro H12SSL-CT with an Epyc 7272 (has 128 PCIe 4.0 lanes).

ad 3) Yes, this would be my general approach, as I currently have one U.2 drive installed via a PCIe adapter directly on the mainboard.

ad 4) No. As it is a server it is running 24/7. It is only powered off for maintenance work and only rebooted if the OS needs it.

And with regard to Broadcom: I think the Broadcom HBA 9500i would be the thing I am looking for.

The only thing I do not know is: The card takes only 8 PCIe lanes but would support up to 16x U.2/3 (or SATA or SAS) drives.

One U.2 drive for example would need 4 lanes to run at full speed.

Any kind of magic I don’t know?

Nope, it's going to be limited to x8 of PCIe bandwidth.

Which means that you cannot get the full potential of each drive if you run heavy I/O on every device at the same time…
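To put rough numbers on that, here is a minimal back-of-the-envelope sketch. It assumes Gen 4 x4 U.2 drives behind a Gen 4 x8 slot and only accounts for 128b/130b line encoding, not protocol overhead, so real-world throughput will be somewhat lower. The function names are made up for illustration.

```python
# Raw signalling rate per lane in GT/s for each PCIe generation.
GT_PER_S = {3: 8.0, 4: 16.0}


def link_gbytes_per_s(gen, lanes):
    """Theoretical one-direction throughput of a PCIe link in GB/s.

    Gen 3 and Gen 4 both use 128b/130b encoding. Protocol overhead
    (packet headers, flow control) is ignored here.
    """
    return GT_PER_S[gen] * (128 / 130) / 8 * lanes


def oversubscription(uplink, drive, n_drives):
    """Ratio of combined drive bandwidth to uplink bandwidth.

    uplink and drive are (gen, lanes) tuples. A result above 1.0 means
    the drives together can ask for more than the slot can deliver.
    """
    return n_drives * link_gbytes_per_s(*drive) / link_gbytes_per_s(*uplink)


if __name__ == "__main__":
    uplink = (4, 8)  # HBA in a Gen 4 x8 slot, as discussed above
    drive = (4, 4)   # assumption: each U.2 drive is Gen 4 x4
    for n in (1, 2, 4, 16):
        ratio = oversubscription(uplink, drive, n)
        print(f"{n:2d} drives -> {ratio:.1f}x of the uplink")
```

Under these assumptions two such drives already saturate the x8 uplink, and anything beyond that shares the same bandwidth. That only matters if all drives are busy at once.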

Thanks for the info.

In general, PCIe bifurcation works on my other server. I split a PCIe x16 slot into four x4 links, which I use with a Chinese M.2 carrier card.

For an HBA I would expect something different when it comes to such things.

At least there should be a difference, because the price differs a lot: about €800 for the Broadcom vs. €50 for the Chinese card?!

Broadcom's cards let motherboards/CPUs that don't support bifurcation run multiple NVMe drives from one slot anyway, so it's custom, lower-volume silicon. The market is also mostly business, so buyers can easily justify the price if they need it. Also, 9500-16i cards are not $800 unless you only shop for OEM stuff. I have two of them, plus one 9400-16i and one 9400-8i.

First 9500 paid $538.48 shipped

2nd 9500 paid $306.34 shipped

prices are down on them.

Most of the cheaper ones you see on eBay work just fine. I have never had an issue with them, and even Wendell said in some video that they are mostly fine as well.

Do the 9400 series also support this “tri-mode” (SATA/SAS/NVMe)?

Yes, but they are PCIe Gen 3.

(Remember, x8 of Gen 4 on the 9500 equals 16 lanes of Gen 3, so you can run four Gen 3 U.2 drives at full speed. Well, close to it; there is always some overhead.)
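The lane equivalence can be checked with simple integer arithmetic. This is only a sketch: it compares raw signalling rate times lane count (Gen 3 and Gen 4 share the same 128b/130b encoding, so that factor cancels out), and it assumes x4 drives and an x8 host link for both the 9500 and the 9400. The helper name is hypothetical.

```python
# Raw signalling rate per lane in GT/s (integers keep the division exact).
GT_PER_S = {3: 8, 4: 16}


def full_speed_drives(uplink_gen, uplink_lanes, drive_gen, drive_lanes):
    """How many drives fit into the uplink without sharing bandwidth.

    Compares GT/s * lanes on both sides. Encoding overhead is identical
    for Gen 3 and Gen 4, so it does not change the ratio.
    """
    uplink = GT_PER_S[uplink_gen] * uplink_lanes
    per_drive = GT_PER_S[drive_gen] * drive_lanes
    return uplink // per_drive


if __name__ == "__main__":
    # 9500 (Gen 4 x8) with Gen 3 x4 U.2 drives
    print(full_speed_drives(4, 8, 3, 4))
    # 9400 (Gen 3, x8 assumed) with the same drives
    print(full_speed_drives(3, 8, 3, 4))
```

With these assumptions the Gen 4 card has headroom for four Gen 3 drives, while a Gen 3 x8 card has headroom for two.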

And which cage do you use?

I am currently using an Icybox cage for SATA SSDs which I’d like to replace.

I actually don’t use cages in 2/3 of my servers; the drives just sit in an internal mount. I do have one of the Icy Dock cages in one chassis, but the 9400 does not work with hot swap (at least not out of the box; there might be a way to make it work), so it's not really worth it. And honestly, I am not changing drives that often, so it doesn't really matter how quickly I can remove them.

I see. I would not change drives that often either, but I prefer to have easy access to them rather than working in an open server chassis.

This work or homelab stuff?

A mix of both.

I run the IT of a small company. On the other hand, I also use my server for personal stuff like my MP3 collection and some other media I store on it.

I'd probably skip the docks then, in all honesty. They are quite expensive and not really worth it, IMO. Most U.2 stuff is better than lower-tier consumer SSDs, and even with those I have had only one SSD fail on me, although I was an early adopter and still have some quite old drives in service.

Docs = documentation or

docs = docking cages?

I currently have one U.2 PCIe adapter, but I would like to have another one to replace my mirrored SATA SSDs.

Unfortunately, it seems that more and more enterprise NVMe flash is no longer available in the M.2 form factor but only as U.2/U.3.

I already edited it, but too slowly: docks.

They do make M.2 to U.2 adapters. The main reason for the shift to U.2 is capacity: you just can't fit that much flash on M.2, even with higher-density cells.

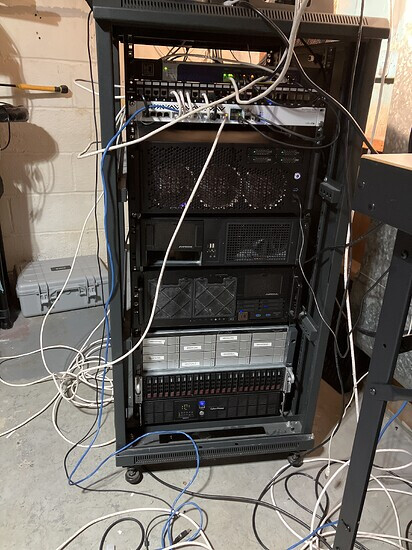

The dock system I have used internally for my U.2 drives, and that is OK, is the EverCool Dual 5.25 in. Drive Bay to Triple 3.5 in. HDD Cooling Box. In the one that only holds two drives I just used the 2.5" cage; for the one with three drives installed I got 2.5" to 3.5" adapters to fit all three.

Replaced the fan with a Noctua industrial one and called it a day.