I got myself some new hardware and decided TrueNAS Scale might be mature enough, given all the praise it gets on YouTube. After all, version 22.02.2.1 is marked as a release, meaning it’s fit for general use, though not for business use: /redacted/

Anyway, I was trying to set up two things: SMB shares, and a Plex VM with PCI passthrough for an RTX2060. The current state of things is that TrueNAS Scale doesn’t boot, the SMB shares sort of work but nobody understands how, and the VMs are broken and don’t even POST. Before I give up, I wanted to ask whether I’m doing something wrong.

The build:

- SuperMicro X11SPM-TF

- Xeon 4208

- 6x 16GB DDR4 ECC, memtest ran 12 hours with no errors

- Gigabyte RTX2060 6G

- 9x 10TB HPE MB010000GWAYN

- 2x 4TB Samsung SSDs (this is where the VM lives)

- 1x SuperMicro 32G SATA DOM (this is where TrueNAS lives)

I can go into more detail on the hardware if anyone thinks it’s related to the trouble I’m having.

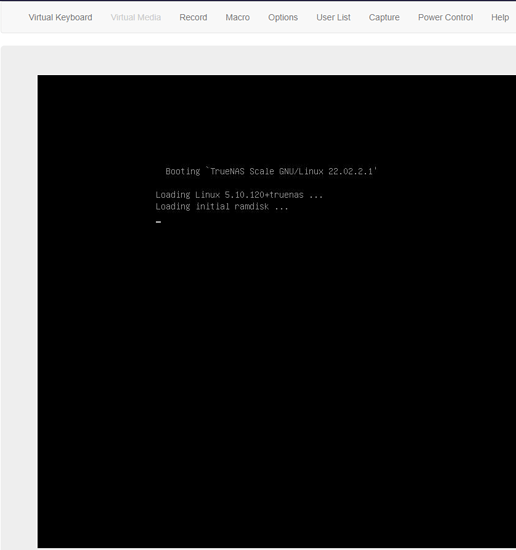

#1 - I can’t get it to boot. It gets stuck on “loading initial ramdisk” and takes anywhere between 30 minutes and 10 hours to boot (I don’t know exactly how long; I left it overnight and it had booted by the morning. I tried restarting it again today after it had been turned off for 12 hours, and the same thing is happening). I tried following the tutorial on disabling the serial console, but it makes no difference. The issue persists and is frankly a deal breaker on its own.

The thread for it is here: /redacted/

#2 - I can’t get the VM to launch. I have an encrypted dataset that is locked after boot, and I don’t know whether this is the reason the VM doesn’t work. After I created the VM with the OS disk pointing to the locked dataset, the UI hung with the activity indicator spinning endlessly. After an F5 refresh the VM showed up as created, but it could not be launched, edited, or deleted. The problem was that the dataset it was stored in was locked, and TrueNAS doesn’t know how to handle this. I tried deleting the VM about 10 times, but it failed silently every time, so I rebooted and the VM was gone.

So I recreated it on an unlocked dataset and it ran: I installed Windows, the nVidia drivers, and Plex, and everything seemed to work. I turned everything off and came back to it the next day. TrueNAS booted and attempted to auto-launch the VM, but because it’s on a locked dataset (again), the launch failed silently. I went in and unlocked the dataset. Now the VM starts but doesn’t POST, the VNC screen stays blank, RDP never connects, and the router shows no IP lease for the VM. I could probably delete it and set it up again, but I would rather figure out why this is happening. Relevant threads:

- /redacted/

- /redacted/

These two issues are real deal breakers for me; I need this rather basic stuff to work, and it just doesn’t. There are more problems I’m trying to get help with on the iX forums (for example, NIC bonding doesn’t really work, and SMB shares are somewhat implemented, but the number of open topics about them clearly suggests the implementation is so confusing that people have a hard time setting it up).

I’m trying to figure out ASAP whether Scale works for me. I thought I’d be fully set up within a week, but I keep hitting problems I didn’t expect in a production release.